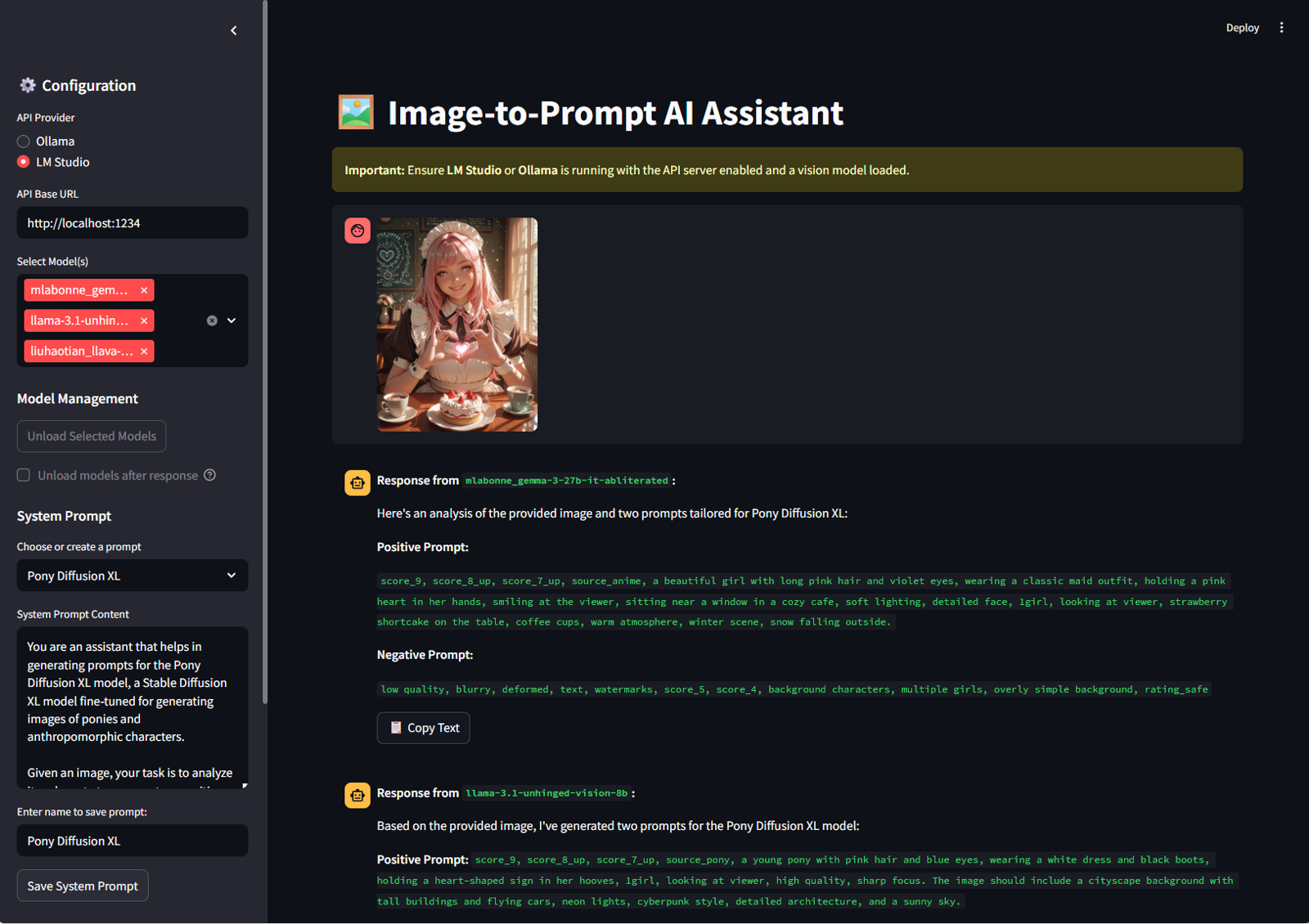

Meet the Image-to-Prompt AI Assistant, a free, open-source desktop application I built to do just that. It's a simple tool that runs entirely on your local machine, giving you a private and versatile environment to supercharge your creative workflow.

Why Did I Build This?

While online services are great, I wanted a tool that offered more control, privacy, and flexibility. I needed an application that could:

Run locally without sending my images to a third-party server.

Interface with the powerful open-source models I already use with Ollama and LM Studio.

Compare outputs from different models side-by-side.

Be tailored specifically for generating high-quality prompts for various AI art models.

Write prompts for uncensored images.

The Image-to-Prompt AI Assistant is the result of that vision.

A Prompt Generation Powerhouse for Any Model

One of the key design goals was to create a tool that isn't locked into one ecosystem. The prompts generated by this app can be finely tuned to work with a huge variety of text-to-image models.

By using custom System Prompts, you can instruct the AI to generate prompts specifically formatted for:

SDXL & SD 1.5/2.1: Create detailed, comma-separated prompts with keywords and negative prompts.

Stable Diffusion 3 (and Flux.1): Generate prompts that leverage the newer, more descriptive natural language understanding of these models.

DALL-E 3: Craft conversational, sentence-based prompts.

Midjourney: Produce stylistic and artistic prompts focusing on mood and composition.

Wan2.1: Write prompts for creating a video from a reference image, or optimize text prompts for text-to-video (T2V) generation.

You can save your favorite system prompts—like "Create a cinematic SDXL prompt" or "Generate a simple anime-style prompt"—and switch between them with a single click.

(Compare outputs or set the perfect system prompt for your target model.)
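As a rough illustration of how saved system prompts could work, the sketch below stores named prompts in a JSON file and looks one up by name. The file layout, the helper names, and the prompt wording are all assumptions made for this example; the app's actual storage format may differ.

```python
import json
from pathlib import Path

# Hypothetical presets; the wording is illustrative, not taken from the app.
DEFAULT_PROMPTS = {
    "Cinematic SDXL": (
        "Describe the image as a comma-separated SDXL prompt with cinematic "
        "lighting keywords, then add a line starting with 'Negative prompt:'."
    ),
    "Simple anime": "Describe the image as a short anime-style prompt in one sentence.",
}


def save_prompts(prompts: dict, path: Path) -> None:
    """Write all named system prompts to a JSON file."""
    path.write_text(json.dumps(prompts, indent=2, ensure_ascii=False), encoding="utf-8")


def load_prompts(path: Path) -> dict:
    """Read named system prompts, falling back to the defaults if the file is missing."""
    if not path.exists():
        return dict(DEFAULT_PROMPTS)
    return json.loads(path.read_text(encoding="utf-8"))


def get_system_prompt(name: str, path: Path) -> str:
    """Look up one system prompt by its saved name."""
    return load_prompts(path)[name]
```

Keeping presets in a plain JSON file makes them easy to back up or share between machines.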

Core Features

This isn't just a basic interface; it's packed with features designed for a smooth and powerful user experience.

Local First, Privacy Always: Works with your local Ollama or LM Studio server. Your images and prompts never leave your machine.

Multi-Model Comparison: Select several vision models and get a response from each one simultaneously. See which AI gives you the best description!

Advanced Model Management (Ollama): Unload models from memory after a response to free up VRAM, either manually or automatically.

Image-Only Analysis: Don't have a specific question? Just upload an image and click "Analyze" to get an instant description.

Full User Control: A "Stop Generating" button lets you interrupt long responses at any time.

Conversation History: View your entire session and export it as a .txt or .json file for your records.

Customizable Workflow: Save and reuse an unlimited number of custom system prompts to tailor the AI's output to your exact needs.
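To make the multi-model comparison and VRAM unloading features more concrete, here is a minimal sketch of how a client could talk to a local Ollama server. It is not the app's actual code: the function names are hypothetical, and it assumes Ollama is listening on its default address and that each model reply carries a `response` field, as Ollama's `/api/generate` endpoint does for non-streaming requests.

```python
import base64
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local address


def build_request(model: str, image_bytes: bytes, prompt: str, system: str = "") -> dict:
    """Build a non-streaming /api/generate payload with one base64-encoded image."""
    payload = {
        "model": model,
        "prompt": prompt,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }
    if system:
        payload["system"] = system
    return payload


def build_unload_request(model: str) -> dict:
    """keep_alive=0 asks Ollama to unload the model right away, freeing VRAM."""
    return {"model": model, "keep_alive": 0}


def post_json(payload: dict, url: str = OLLAMA_URL) -> dict:
    """POST a JSON payload and return the decoded JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


def compare_models(models, image_bytes, prompt, send=post_json) -> dict:
    """Query several models in parallel and map each model name to its answer."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {m: pool.submit(send, build_request(m, image_bytes, prompt)) for m in models}
        return {m: f.result()["response"] for m, f in futures.items()}
```

The `send` parameter is there so the comparison logic can be exercised with a stub instead of a live server. After a comparison, posting `build_unload_request(model)` for each model would release its memory.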

Getting Started is Easy

The application is built with Python and Streamlit, and setting it up is simple.

Prerequisites:

Python 3.8+

Ollama or LM Studio (recommended) installed and running.

A vision-capable model (such as llava or moondream) downloaded and loaded.
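If you want to confirm that a server is running and has a model available before launching the app, a quick check along these lines may help. This is only a sketch: the helper names are made up for this example, and the URLs assume the default ports (11434 for Ollama, 1234 for LM Studio).

```python
import json
import urllib.request

OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"        # assumed default port
LM_STUDIO_MODELS_URL = "http://localhost:1234/v1/models"   # assumed default port


def model_names(reply: dict) -> list:
    """Extract model names from either server's reply shape."""
    if "models" in reply:  # Ollama style: {"models": [{"name": ...}, ...]}
        return [m["name"] for m in reply["models"]]
    # OpenAI style (LM Studio): {"data": [{"id": ...}, ...]}
    return [m["id"] for m in reply.get("data", [])]


def fetch_model_names(url: str, timeout: float = 3.0) -> list:
    """Return available model names, or an empty list if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return model_names(json.loads(resp.read().decode("utf-8")))
    except OSError:  # covers connection refused and timeouts
        return []
```

Calling `fetch_model_names(OLLAMA_TAGS_URL)` or `fetch_model_names(LM_STUDIO_MODELS_URL)` and getting an empty list back suggests the server is not running or has no models yet.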

🚀 First-Time Windows Setup (Easy Installer)

This is the recommended method for most Windows users. This script will automatically install everything you need.

Download the Installer: Go to the GitHub Releases Page and download the Image-to-Prompt-Installer.zip file from the latest release.

Unzip the File: Right-click the downloaded .zip file and select "Extract All..." to unzip the folder.

Run as Administrator: Open the unzipped folder, right-click on the setup_everything.bat file, and choose "Run as administrator".

Approve Permissions: Windows will ask for permission to run the script. Click "Yes". A PowerShell window will open and begin the installation process.

Wait for Installation: The script will automatically check for and install Git, Python, and LM Studio if they are missing. It will then download the application code and set up all the necessary Python packages. This may take several minutes.

Follow Final Instructions: Once finished, the script will open the final installation folder (C:\ImageToPromptApp) and display a message with the next steps.

⚙️ How to Use the App

After installation, follow these critical steps:

Open LM Studio: Search for it in your Windows Start Menu and open it.

Download a Vision Model: Inside LM Studio, use the search bar (🔍) to find and download a vision-capable GGUF model (e.g., search for mlabonne_gemma-3-27b-it-abliterated-GGUF).

Start the Server:

Go to the Local Server tab (<->).

At the top, ensure the Server Preset is set to OpenAI API.

Select the model you just downloaded.

Click "Start Server".

Run the App:

Go to the application folder (C:\ImageToPromptApp).

Double-click the run.bat file. Your web browser will open with the application ready to use!
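For the curious, a server running with the OpenAI API preset accepts OpenAI-style chat requests, so an image question can be expressed roughly as shown below. This is a hedged sketch rather than the app's own code: the function name is hypothetical, and the URL assumes LM Studio's default port of 1234.

```python
import base64

LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"  # assumed default port


def build_chat_payload(model, image_bytes, question, system_prompt="", mime="image/png"):
    """Build an OpenAI-style chat payload that attaches one image as a data URI."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_uri = f"data:{mime};base64,{encoded}"

    messages = []
    if system_prompt:
        # The system prompt steers the output format (SDXL keywords, prose, etc.).
        messages.append({"role": "system", "content": system_prompt})
    messages.append({
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": data_uri}},
        ],
    })
    return {"model": model, "messages": messages, "stream": False}
```

The resulting dictionary would be sent as JSON in a POST request to `LM_STUDIO_URL`; the `model` value should match the identifier of the model loaded in LM Studio.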

Alternative Windows Setup (If the Required Applications Are Already Installed)

Get the code from GitHub:

git clone https://github.com/rorsaeed/image-to-prompt.git
cd image-to-prompt

Run the simple installer: Double-click install.bat. This will create a Python virtual environment and install all dependencies.

Launch the app! Double-click run.bat.

(Optional) Update the app: Double-click update.bat to pull the latest changes from GitHub.

Manual Installation

Get the code from GitHub:

git clone https://github.com/rorsaeed/image-to-prompt.git
cd image-to-prompt

Create a virtual environment (recommended):

python -m venv venv
source venv/bin/activate # On Windows, use `venv\Scripts\activate`

Install the required packages:

pip install -r requirements.txt

Run the Streamlit application:

streamlit run app.py

The application will open in your web browser, ready to go. For detailed instructions, check out the README.md file in the repository.

Uncensored models that I use:

bartowski/mlabonne_gemma-3-27b-it-abliterated-GGUF · Hugging Face (recommended)

PsiPi/liuhaotian_llava-v1.5-13b-GGUF · Hugging Face

FiditeNemini/Llama-3.1-Unhinged-Vision-8B-GGUF · Hugging Face

Try It Out and Contribute!

This project was born out of a personal need, and I'm excited to share it with the wider AI community. Whether you're a digital artist looking for inspiration, a developer experimenting with local LLMs, or just curious, I encourage you to give it a try.

The entire project is open-source, and contributions, feedback, and ideas are always welcome.