This is a tech report that asks for ... nothing. How can you validate whether it solves any open question? The objective and KPI are never clear here, as opposed to a "new architecture, pretrained model with lots of GPU hours and a big dataset" report. This is just art exploration with some professional AI insights, revisiting the history of merged models from the SD1.5 (novelaileak) era.

This merge may contain many "special cases" of modified AI / ML algorithms. My solution may not apply effectively to other scenarios.

Meanwhile, as of 2506, there are MoE-based merging algorithms, but they are way more expensive than training the model directly! Training a model takes only 1 random timestep per image per epoch, but training the routers in MoE takes the entire prediction process!

Tools used: (not this) comfy-mecha, (this) sd-mecha with my own python notebook.

Changelog:

v3.01: Fix broken images again.

v3: Added the NIL1.5-AK part.

v2: Added the exploration of base models and the vpred model.

v1.1: Heck, why are the images lost? Filled in recent images instead.

v1.01: Changed some wordings.

v1: Initial content

1. Problem: Merging models from 2 base models

Applied to AstolfoKarmix-XL.

It is harder than the "many base models" case. For k=2, an algorithm alone is not enough.

For the merging algorithm, see my other article on merging. I have successfully merged 200+ models coming from many base models.

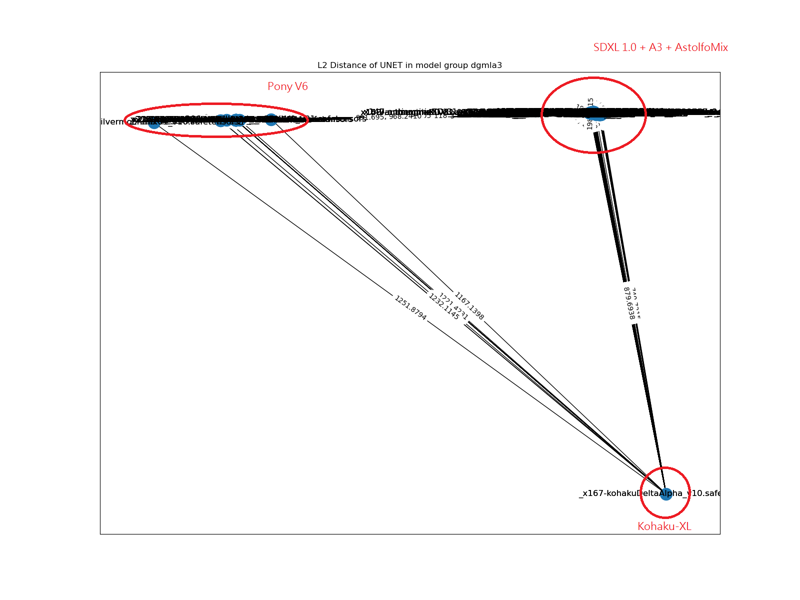

The concept of "finding the center" while merging models has held for a long time, even when performing MBW / LBW merges. Whether done by hand or by algorithm, unless the entire interpolation path between 2 models is good for the prediction task, staying close to a cluster of models is the most likely way to get a successful merge. This explains why most merges stay within the same base model. The "animagine + pony" merge is still an open question, and it seems impossible.

Existing algorithms work for the k>2 cases.

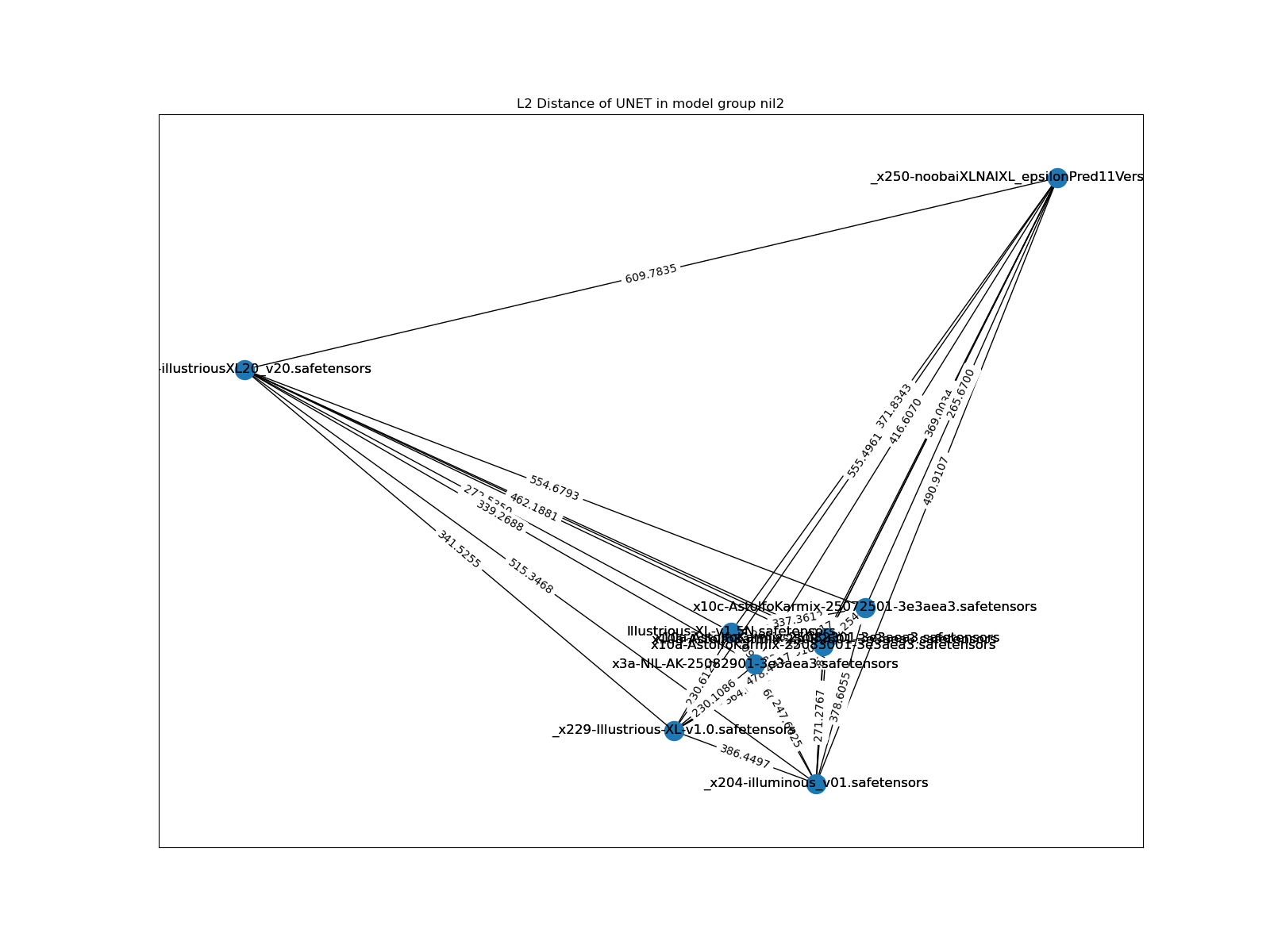

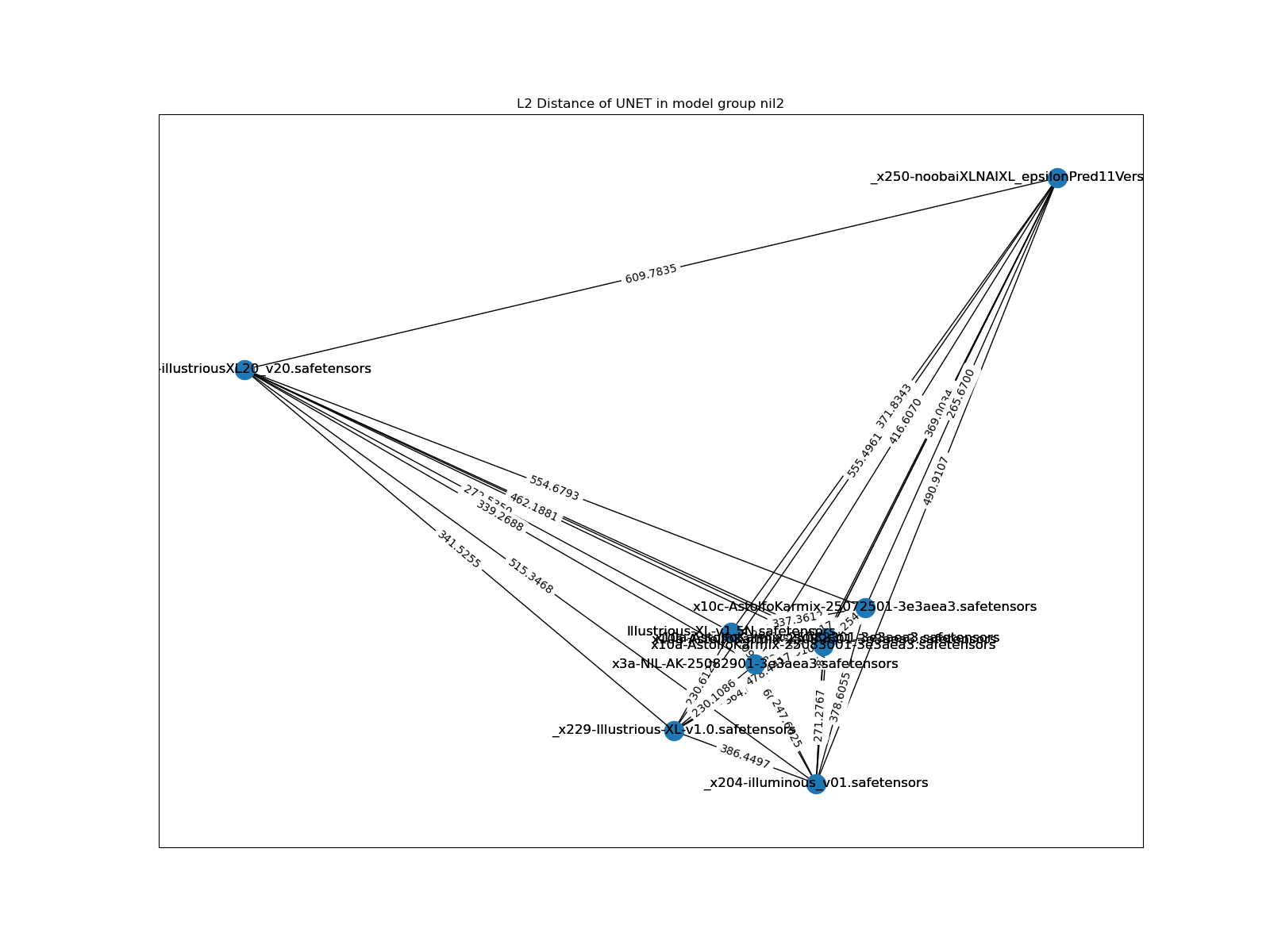

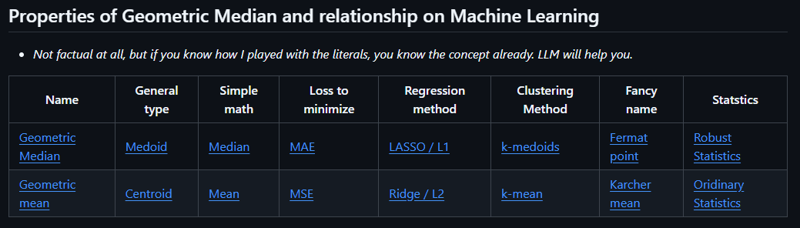

Back in the development of AstolfoMix, inspired by Model Stock, I discovered the Geometric Median (the Fermat point in a simple triangle), along with the real base model SDXL 1.0 and the sophisticated weight alignment / filtering algorithm "DELLA".
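For readers unfamiliar with the geometric median, here is a minimal sketch using Weiszfeld's iteration in NumPy. It is only an illustration on toy 2-D points, not the merge code used for AstolfoMix; the function name `geometric_median` and its parameters are my own assumptions for this example.

```python
import numpy as np

def geometric_median(points, iters=200, eps=1e-9):
    """Weiszfeld iteration: find the point minimizing the sum of Euclidean distances."""
    points = np.asarray(points, dtype=np.float64)
    median = points.mean(axis=0)  # start from the centroid
    for _ in range(iters):
        dist = np.linalg.norm(points - median, axis=1)
        weights = 1.0 / np.maximum(dist, eps)  # guard against division by zero
        new_median = (weights[:, None] * points).sum(axis=0) / weights.sum()
        if np.linalg.norm(new_median - median) < eps:
            break
        median = new_median
    return median

# Toy case: three "models" flattened to 2-D points forming an equilateral triangle.
# For such a triangle the Fermat point coincides with the centroid.
triangle = np.array([[0.0, 0.0], [1.0, 0.0], [0.5, np.sqrt(3) / 2]])
fermat = geometric_median(triangle)
```

In a real merge each "point" would be a flattened weight tensor, so the same iteration runs per layer over very high-dimensional vectors.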

Borrowing the concepts of vector fields (flow matching paper) and task vectors (model merging papers), existing algorithms merge by eliminating contradicting vectors (via the sign function and ranking) while finding the optimal magnitude. This is effective when the models in the "model pool" have similar weights; in particular, some merging papers assume all models are finetuned from the same base model on a simple task. The "task" here is obviously way too complicated.
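The "sign function and ranking" idea can be sketched roughly as follows. This is a simplified, TIES-style toy in NumPy under my own assumptions (a single flat weight vector, a hypothetical `keep_ratio` parameter); it is not DELLA and not the sd-mecha implementation.

```python
import numpy as np

def sign_elect_merge(base, models, keep_ratio=1.0):
    """Toy sign-election merge over flat weight vectors sharing one base."""
    base = np.asarray(base, dtype=np.float64)
    # Task vectors: how far each model moved away from the base, shape (k, n)
    deltas = np.stack([np.asarray(m, dtype=np.float64) - base for m in models])
    # Ranking step: keep only the largest-magnitude entries of each task vector
    n_keep = max(1, int(round(keep_ratio * deltas.shape[1])))
    magnitudes = np.abs(deltas)
    thresholds = np.sort(magnitudes, axis=1)[:, -n_keep][:, None]
    trimmed = np.where(magnitudes >= thresholds, deltas, 0.0)
    # Sign step: per parameter, elect the sign that carries more total magnitude
    elected = np.sign(trimmed.sum(axis=0))
    agree = (np.sign(trimmed) == elected) & (trimmed != 0.0)
    # Magnitude step: average only the entries that agree with the elected sign
    counts = np.maximum(agree.sum(axis=0), 1)
    return base + (trimmed * agree).sum(axis=0) / counts

base = np.zeros(4)
models = [
    np.array([1.0, 1.0, 0.0, 0.0]),
    np.array([-0.2, 1.0, 0.0, 0.0]),
    np.array([1.0, -3.0, 0.0, 0.0]),
]
merged = sign_elect_merge(base, models)
```

Note that the whole procedure is defined relative to `base`, which is why it quietly assumes every model in the pool descends from the same base model.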

If the objective is merging only 2 clusters of models, or just 2 models, and their weight-shift trajectories oppose each other, then no matter how many hyperparameters you throw in (e.g. 26-30 in SD 1.5 MBW), adding them together doesn't work. You must pick one side to survive the merge. "Picking good model weights" is a novel task that no professor can teach; did such a task even exist before?
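A toy illustration of why opposed weight shifts cannot simply be added: when two task vectors point in nearly opposite directions, averaging cancels most of what either model learned. The vectors below are random stand-ins and the single shared `base` is a simplifying assumption, not measurements from the actual models.

```python
import numpy as np

def cosine(a, b):
    """Cosine similarity between two flat vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

rng = np.random.default_rng(0)
base = rng.normal(size=1000)
shift = rng.normal(size=1000)
model_a = base + shift          # one side moved this way
model_b = base - 0.9 * shift    # the other side moved roughly the opposite way

delta_a, delta_b = model_a - base, model_b - base
similarity = cosine(delta_a, delta_b)    # strongly negative for opposed shifts

averaged = 0.5 * (model_a + model_b)
# Fraction of model A's shift that survives the plain average
surviving = np.linalg.norm(averaged - base) / np.linalg.norm(delta_a)
```

Checking the cosine similarity of task vectors before merging is a cheap way to see whether a pair of models falls into this case.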

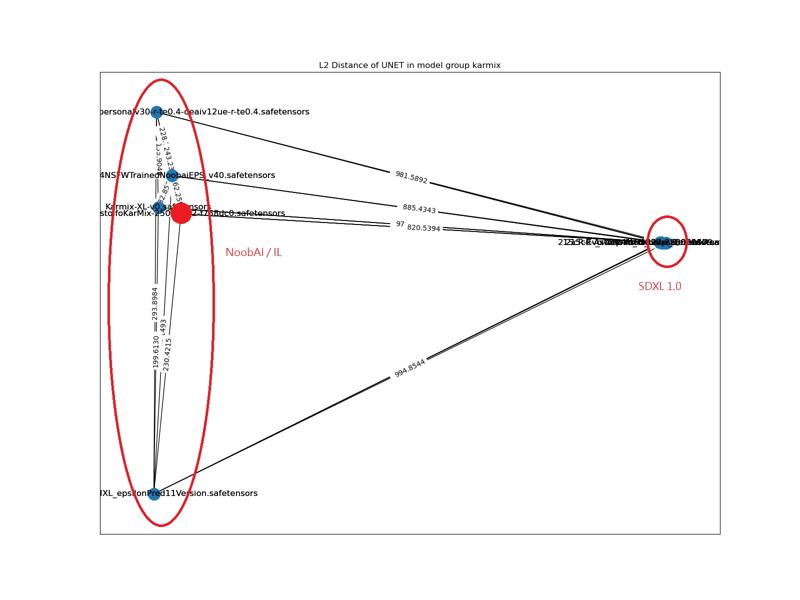

For example, AstolfoMix is based on SDXL 1.0, while Karmix is based on IL (denied Noob AI base). Even though both make similar predictions, they can have diverse activations which just can't add up.

Solution / Compromise: Choosing either side.

Model weights are not supposed to be merged. Given a common algorithm (neural networks), fiddling with model weights (assuming all models are trained properly rather than being purely random) implies performing Manifold Alignment as a by-product. The manifold may look better when all models are trained on aligned tasks; however, for such an abstract, subjective, and complicated task ("art", unless you limit the content to digits (MNIST) or fixed classes (ImageNet-1K, COCO)), the manifold can be expected to be super ugly. The manifold here is exactly the task-dependent gradient in parameter space. Without the sparsity of modern large neural networks, the "point of the emerged gradient" is usually nonsense, with an unknown loss metric on an unknown gradient for an unknown task. It is just luck, or Risk Diversification.

To be "greedy" here, that is, to find the lowest loss in an "unknown but probably very ugly gradient between 2 arbitrary points", it is still best to eliminate contradicting vectors and find the optimal magnitude of the remaining vectors. Instead of assuming absolute 0 as the origin, we still need a base model.

Therefore, here comes my solution: make 2 versions of the merge, one from each base model (SDXL 1.0 and NoobAI). For example, picking NoobAI will yield the red dot, which is likely to have 87.5% NoobAI content. I believe this is the best solution right now.

To make the merge more effective, I extended the cluster size by including some trained / merged models on top of the base models. However, since a tie breaker is required, make sure the cluster sizes are balanced; otherwise the "geometric median" will drift to the dense cluster, like a medoid does.
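The pull toward the dense cluster can be seen in a small NumPy sketch. The two clusters below are synthetic Gaussian blobs with made-up sizes (6 vs 2), chosen only to illustrate the effect; `geometric_median` is a hypothetical helper based on Weiszfeld's iteration.

```python
import numpy as np

def geometric_median(points, iters=200, eps=1e-9):
    """Weiszfeld iteration for the point minimizing the sum of distances."""
    median = points.mean(axis=0)
    for _ in range(iters):
        dist = np.maximum(np.linalg.norm(points - median, axis=1), eps)
        median = (points / dist[:, None]).sum(axis=0) / (1.0 / dist).sum()
    return median

rng = np.random.default_rng(0)
cluster_a = rng.normal(loc=0.0, scale=0.1, size=(6, 8))  # dense cluster: 6 models
cluster_b = rng.normal(loc=1.0, scale=0.1, size=(2, 8))  # sparse cluster: 2 models
pool = np.vstack([cluster_a, cluster_b])

gm = geometric_median(pool)
centroid = pool.mean(axis=0)

# Distance of each "center" from the middle of the dense cluster
gm_to_a = np.linalg.norm(gm - cluster_a.mean(axis=0))
centroid_to_a = np.linalg.norm(centroid - cluster_a.mean(axis=0))
```

In this unbalanced pool the geometric median lands much closer to cluster A than the centroid does, so the smaller cluster contributes very little to the result.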

And then... it pays off.

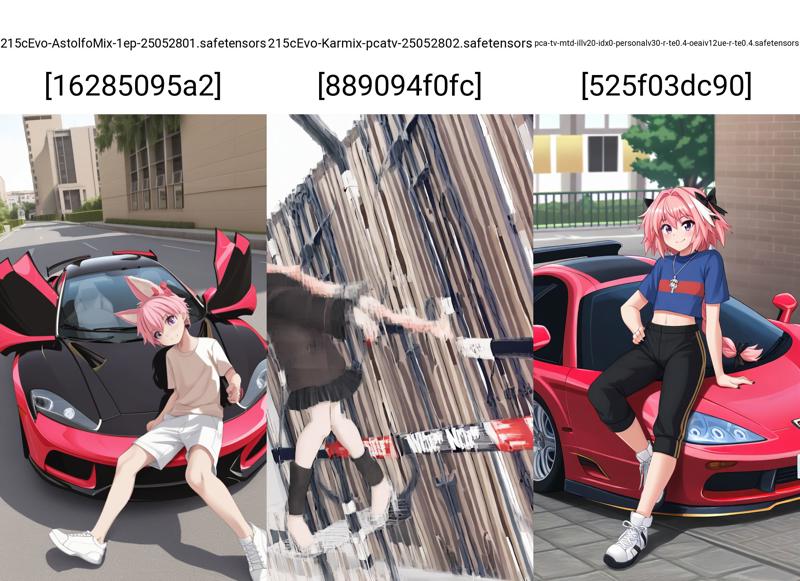

Squared: AstolfoKarmix. Circled: NoobAI, Karmix, AstolfoMix.

Invisible advantage of AstolfoKarmix

Shout-out to hinablue and forest919 for training on top of my models while testing an optimizer under development.

Disclaimer: The image previews below are based on the "x6a" preview version, but it should be 99% similar to the published "x6c" version. Mathematically, the expected value over the random action (rebasin) remains the same.

Although it still looks like another merged IL model, the invisible advantage of AstolfoMix (or SDXL 1.0) is inherited.

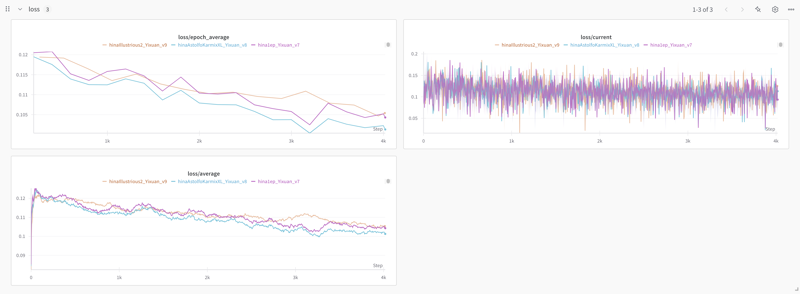

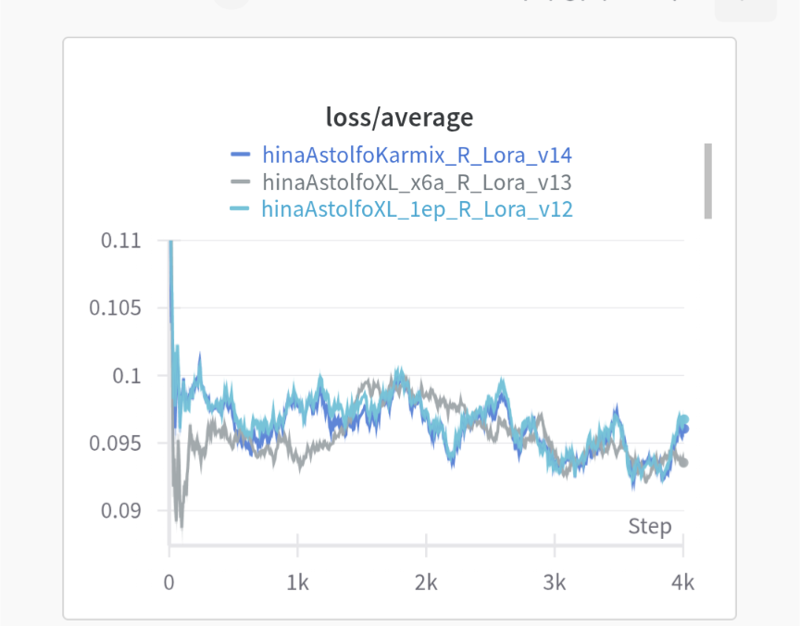

First, further finetuning becomes effective more easily and quickly, as shown in the loss curve. Credit: hinablue

It makes training a realistic model on top of IL / NoobAI effective (400 steps with a customized optimizer). Credit: hinablue

Although not as strong as Chroma, my 1EP was (pre)trained with unfiltered content, which is absent from most anime models (their images are filtered by aesthetic score). Credit: hinablue

Image lost and chat history is lost.

Anime content shows similar results: extra low loss (although MSE loss explains almost nothing). Credit: forest919

Image lost and chat history is lost.

Art style is accurate. Credit: forest919

Image lost and chat history is lost.

Meanwhile, asymmetric features can be learned effectively. Credit: hinablue

Image lost and chat history is lost.

In theory, the SDXL 1.0 based version (or just AstolfoMix) will be easier to train, but it may be less practical for making community-based content. Images were meant to come soon, but it is too hard to motivate people to try.

2. Is it good to merge other bases like Animagine 4.0 + IL 2.0?

Applied to AstolfoKarmix-XL (NIL1.5 based).

The proof is left as an exercise for the reader. I would be happy to see my theory proven in other specific cases and become a general solution. I have tried it myself: no, it breaks.

After examining the "model pool" of Karmix and the AK, I found that average merging already works. I can keep making the AK out of NIL1.5 and get enhanced performance.

I think this is mostly sheer luck. Although all 3 of them are based on IL0.1, that is insufficient to explain the survival of this simple merge. For example, A3 / A4 / PonyV6 are all based on SDXL 1.0, but none of them succeeded.
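"Average merging" here means a plain per-key mean of the weights. A minimal sketch, assuming each checkpoint is already loaded as a dict of NumPy arrays with identical keys and shapes (the loading step and the helper name `average_merge` are my assumptions, not part of sd-mecha):

```python
import numpy as np

def average_merge(state_dicts):
    """Uniform average of several checkpoints, key by key."""
    keys = set(state_dicts[0])
    for sd in state_dicts[1:]:
        if set(sd) != keys:
            raise ValueError("checkpoints do not share the same keys")
    return {k: np.mean([sd[k] for sd in state_dicts], axis=0) for k in keys}

# Toy checkpoints with a single weight tensor each
pool = [
    {"w": np.array([1.0, 3.0])},
    {"w": np.array([3.0, 1.0])},
]
merged = average_merge(pool)
```

This has no base model, no sign election, and no hyperparameters, which is why its survival on this particular pool is surprising.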

3. Does vpred matter in SDXL?

Applied to AstolfoCarmix-VPredXL.

First: vpred in SDXL and vpred in Flow Matching are different concepts! They use different math! Vpred in SDXL is the same as vpred in SD2.1 / SD1.5, or NovelAI v3.
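To make the difference concrete, here is a small numeric sketch of the two targets as they are commonly defined: the diffusion v-prediction target on a variance-preserving schedule, and the velocity target on a straight-line (rectified flow style) path. The value `alpha_bar = 0.3` and the time `t = 0.7` are arbitrary numbers picked for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
x0 = rng.normal(size=16)    # clean latent (toy)
eps = rng.normal(size=16)   # Gaussian noise

# Diffusion v-prediction: variance-preserving schedule with alpha^2 + sigma^2 = 1
alpha_bar = 0.3             # hypothetical cumulative schedule value at some timestep
alpha, sigma = np.sqrt(alpha_bar), np.sqrt(1.0 - alpha_bar)
x_t = alpha * x0 + sigma * eps
v = alpha * eps - sigma * x0        # training target for a vpred model
x0_rec = alpha * x_t - sigma * v    # clean latent recovered from (x_t, v)
eps_rec = sigma * x_t + alpha * v   # noise recovered from (x_t, v)

# Flow matching velocity: straight-line path between data and noise
t = 0.7
x_t_flow = (1.0 - t) * x0 + t * eps
v_flow = eps - x0                   # constant along the path, independent of t
```

The diffusion target depends on the noise schedule through `alpha` and `sigma`, while the flow target does not, so the two kinds of checkpoints are not interchangeable.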

If you decide to train from an eps model, yes.

If you decide to merge from vpred models, model choices are scarce. Almost all of them are NoobAI vpred based (including RouWei and Jakun).

The difference among merged models is not very great, regardless of whether the recipe comes from a human or a machine.

People may claim "better colors"; I think it just introduces a bias, like what latent offset did in SD1.5. Meanwhile, "fading vs noise" in low-confidence areas (unconditional generation, "undefined contents") is up to the user's preference.

Left: vpred, Right: eps

4. Fermat Point vs Centroid

Applied as NIL1.5-AK, and the rebuilt AstolfoKarmix-XL.

For the discussion of the algorithm itself, refer to my other article. I'll focus on how it affects AK.

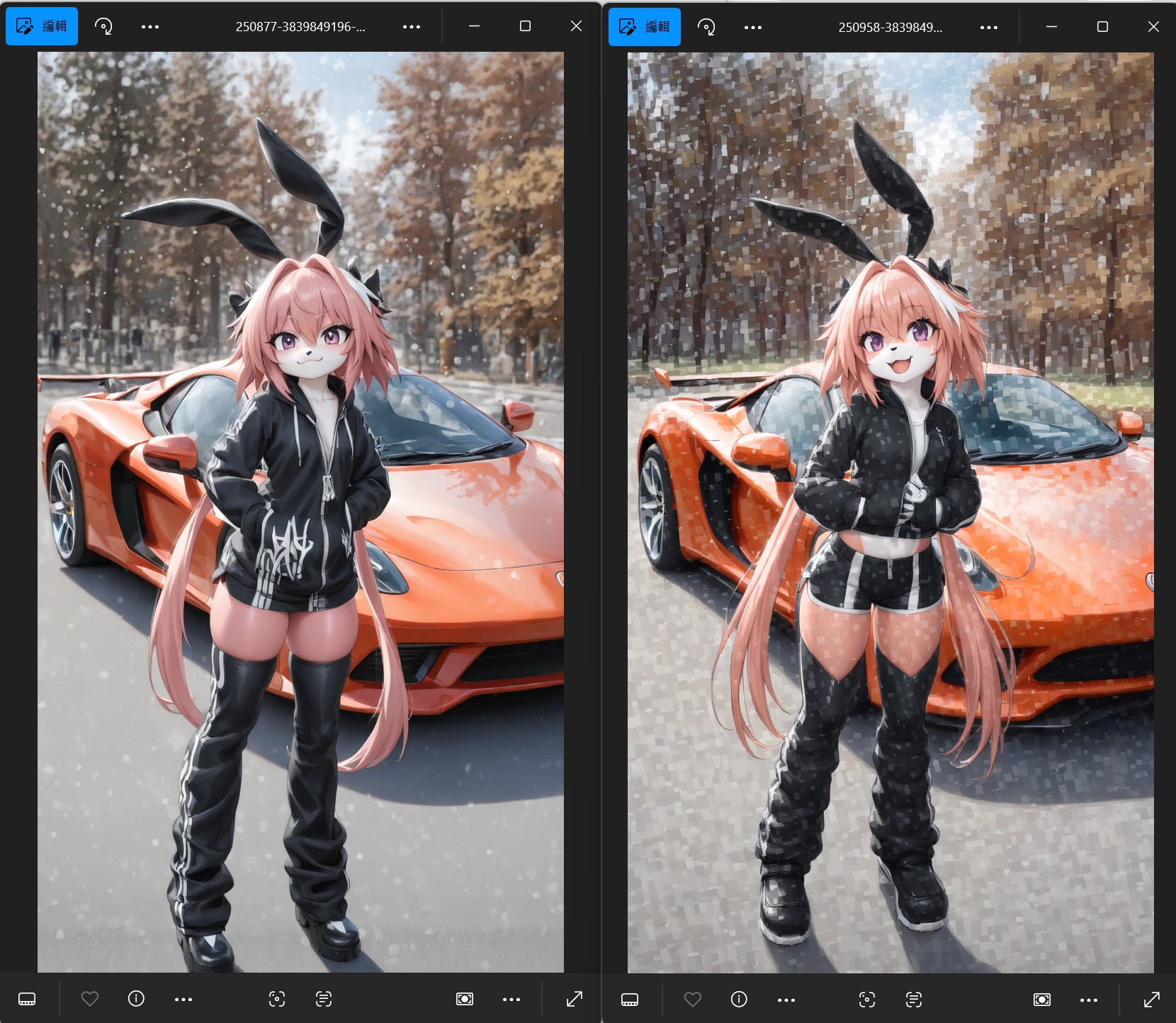

After releasing the NIL1.5-based model, I found that it was still unstable, with the glitch mainly appearing in "unconditional content filling". I suspect random NaNs propagate into glitched images. Although restarting the WebUI process fixes the problem, I decided to remake the NIL1.5 with the algorithm, and named it NIL1.5-AK.

After redoing the entire merge, it appears stable again, and the "unconditional content filling" works a lot better. However, colors tend to be less bright for "certain conditional content". It also tends to trade specific trained concepts for wider general ability in "coherence". I don't know the capability of the original IL1 / IL2 / NoobAI Eps, but at least I can get a ballpark from XY plots.

There is quite a bit of weight shifting, but eventually it is minimized without losing too much trained content.
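One way to rule out the checkpoint itself as the NaN source is to scan the merged weights for non-finite values. A minimal sketch, assuming the weights are available as a dict of NumPy arrays (the helper name `find_non_finite` and the toy key names are hypothetical):

```python
import numpy as np

def find_non_finite(state_dict):
    """Return {tensor name: count of NaN/Inf entries} for affected tensors only."""
    report = {}
    for name, tensor in state_dict.items():
        bad = int((~np.isfinite(tensor)).sum())
        if bad:
            report[name] = bad
    return report

# Toy checkpoint: one tensor with a NaN, one clean, one with an Inf
checkpoint = {
    "layer_a": np.array([1.0, np.nan]),
    "layer_b": np.ones(3),
    "layer_c": np.array([np.inf]),
}
report = find_non_finite(checkpoint)
```

An empty report would suggest the glitch comes from the runtime (for example half-precision overflow during sampling) rather than from the saved weights, which would be consistent with a restart fixing it.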