This is a response to n_Arno's article, "Having fun with good old stuff 🥰".

I noticed that they're using another app (Forge or Auto1111), and wanted to mention tests I did with ComfyUI.

The basic principle is simple: you chain two ksamplers together. This isn't a new idea, and it's a common part of two-step sampling in ComfyUI. What a lot of people don't know is that when an image is turned into a latent, the checkpoint reacts differently to a pure black and white image than to a color one.

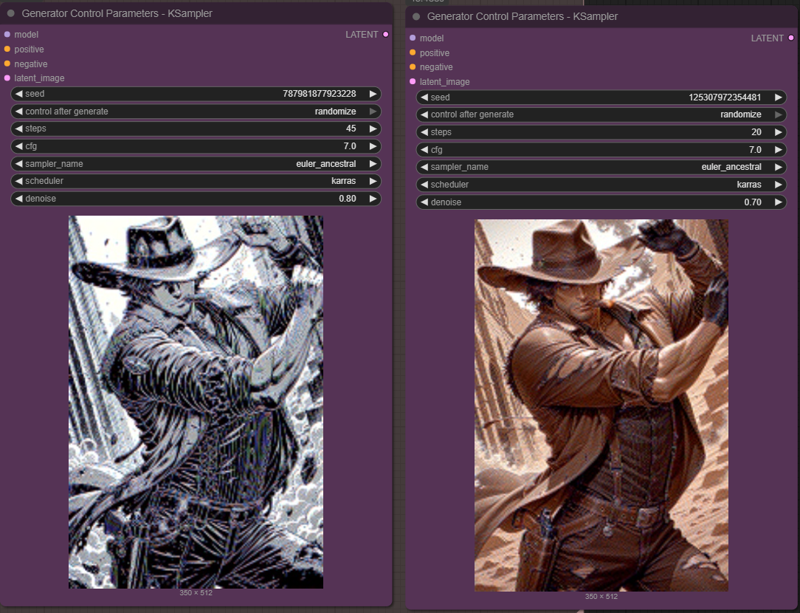

In this example, I'm using AI_beret's checkpoint Beret Mix Manga and Axelros's Tiberium Illustrious mix V3. I like Beret Mix because it has a very strong and unique manga look and design. Tiberium is great for semi-realistic styles, with very good depth of field and soft details.

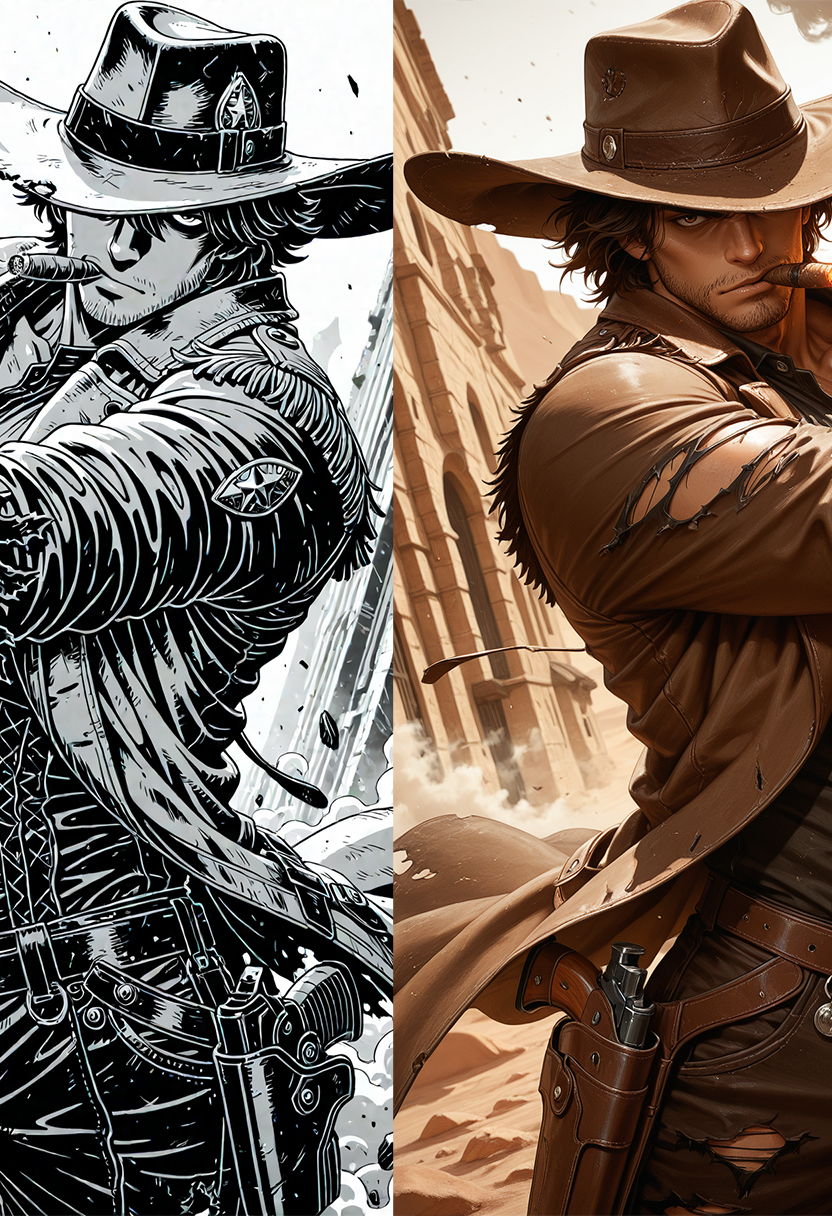

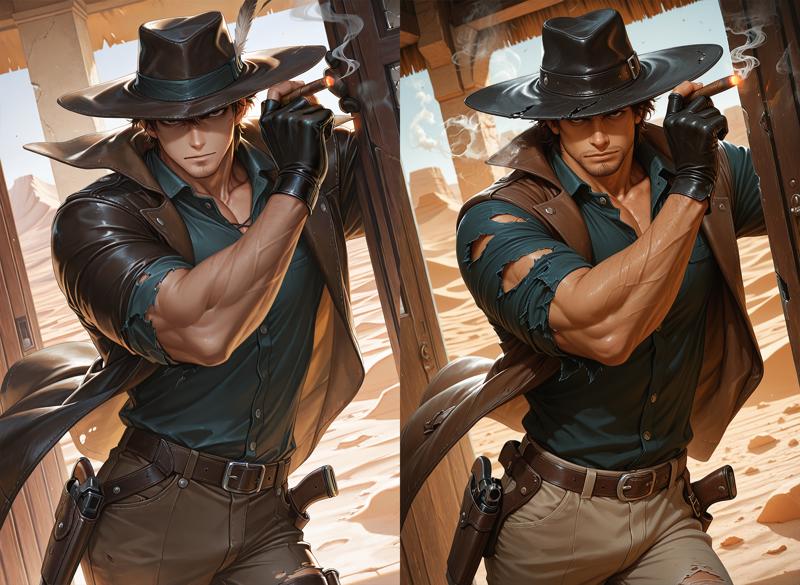

As you can see in the images, the Beret Mix output is on the left and the color image on the right is Tiberium.

You just need to plug one checkpoint into the first ksampler and load another into the second. The only trick is changing the denoise amount on the second ksampler. Generally, a 0.70 denoise gives the model enough freedom to fix issues or rearrange elements while still adhering to the overall structure of the first ksampler's latent output.
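For anyone who prefers to read the wiring as data, here is a rough sketch of that two-ksampler setup as a ComfyUI API-format graph built in Python. The checkpoint file names, prompt, resolution, step count, and CFG are placeholders I made up for illustration, and encoding the prompt again with the second checkpoint's CLIP is my assumption rather than something the setup above requires.

```python
import json

def two_pass_workflow(first_ckpt, second_ckpt, prompt, negative="", denoise=0.70, seed=0):
    """Build an API-format graph: checkpoint A composes, checkpoint B re-samples A's latent."""

    def text(clip_source, value):
        # Output slot 1 of CheckpointLoaderSimple is CLIP
        return {"class_type": "CLIPTextEncode",
                "inputs": {"text": value, "clip": [clip_source, 1]}}

    def ksampler(model, pos, neg, latent, amount):
        # steps / cfg / sampler values are placeholders, not recommendations
        return {"class_type": "KSampler",
                "inputs": {"model": [model, 0], "positive": [pos, 0], "negative": [neg, 0],
                           "latent_image": [latent, 0], "seed": seed, "steps": 25,
                           "cfg": 6.0, "sampler_name": "euler", "scheduler": "normal",
                           "denoise": amount}}

    return {
        "1": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": first_ckpt}},
        "2": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": second_ckpt}},
        "3": text("1", prompt),
        "4": text("1", negative),
        "5": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
        # First pass: full denoise from an empty latent, sets the composition
        "6": ksampler("1", "3", "4", "5", 1.0),
        "7": text("2", prompt),
        "8": text("2", negative),
        # Second pass: other checkpoint, starts from the first pass's latent
        "9": ksampler("2", "7", "8", "6", denoise),
        # Output slot 2 of CheckpointLoaderSimple is VAE
        "10": {"class_type": "VAEDecode", "inputs": {"samples": ["9", 0], "vae": ["2", 2]}},
        "11": {"class_type": "SaveImage",
               "inputs": {"images": ["10", 0], "filename_prefix": "two_pass"}},
    }

# Hypothetical file names; use whatever your checkpoints are called locally
workflow = two_pass_workflow("manga_checkpoint.safetensors",
                             "semi_realistic_checkpoint.safetensors",
                             "1girl, city street, night")
print(json.dumps(workflow["9"], indent=2))
```

The only part that really matters is node `9`: its `model` comes from the second loader, its `latent_image` comes from the first ksampler, and its `denoise` is below 1.0.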

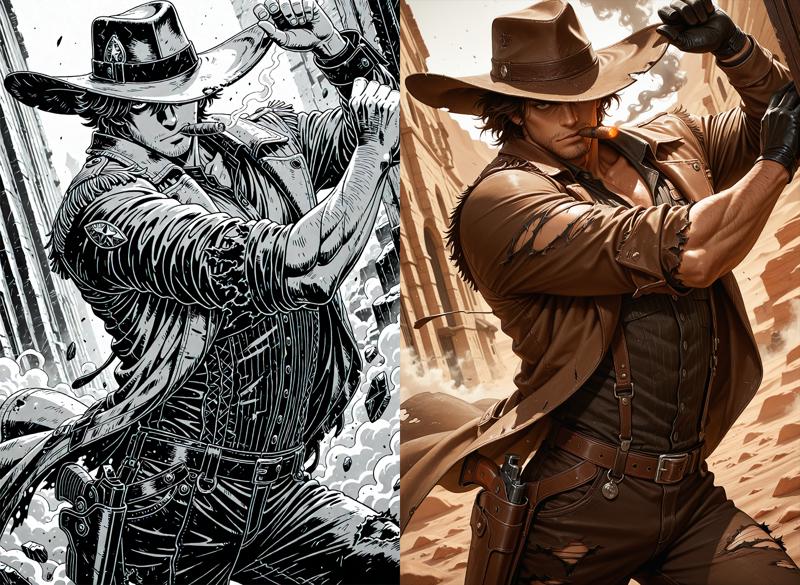

Of course, you don't need to use a black and white image to get this effect. In this example, I use NovaFlat to generate the first ksampler's latent output, and Tiberium for the second.

One of the advantages of using black and white is that the latent carries color information as well as composition, and the second model picks up on both. Unless you crank the denoise higher or inpaint, you're going to get colors similar to the original latent output. A black and white image lets the second model choose colors for itself and take only the composition from the first pass.

For comparison, here is Beret Mix and a merge I'm working on based on Tiberium Mix.

As a base reference, this is my merge without using two checkpoints.

So this is an easy way to combine checkpoints without having to do anything complicated. In ComfyUI you just need to copy your ksampler node, feed it the first ksampler's latent output, attach another checkpoint to its model input, and set the denoise to around 0.70 or lower, depending on the effect you're going for. Since every workflow uses a ksampler, this effect can be applied to any existing workflow without breaking anything. Of course, you can get even more complicated with more samplers, or even by plugging a different checkpoint into the ADetailer nodes, but you get the idea.
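If you keep workflows exported in API format, the same copy-and-rewire step can be sketched as a small Python helper. This is only an illustration under a few assumptions: node ids are numeric strings, the ksampler's latent is output slot 0, and both checkpoints are close enough in architecture that the first checkpoint's prompt conditioning and VAE still work. The function name `add_second_pass` is mine, not part of ComfyUI.

```python
import copy

def add_second_pass(workflow, sampler_id, ckpt_name, denoise=0.70):
    """Return a copy of the workflow with a second ksampler chained after sampler_id."""
    wf = copy.deepcopy(workflow)

    # Assumes ids are numeric strings, as in a typical API-format export
    next_id = max(int(node_id) for node_id in wf) + 1
    loader_id, second_id = str(next_id), str(next_id + 1)

    # Anything that read the first ksampler's latent now reads the second one's
    for node in wf.values():
        for name, value in list(node["inputs"].items()):
            if value == [sampler_id, 0]:
                node["inputs"][name] = [second_id, 0]

    # Load the other checkpoint
    wf[loader_id] = {"class_type": "CheckpointLoaderSimple",
                     "inputs": {"ckpt_name": ckpt_name}}

    # Copy the ksampler, then change only model, latent input, and denoise
    second = copy.deepcopy(workflow[sampler_id])
    second["inputs"].update(model=[loader_id, 0],
                            latent_image=[sampler_id, 0],
                            denoise=denoise)
    wf[second_id] = second
    return wf
```

Everything else in the graph (prompts, seed, sampler settings, the decode and save nodes) is left as it was, which is why this can be bolted onto an existing workflow.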

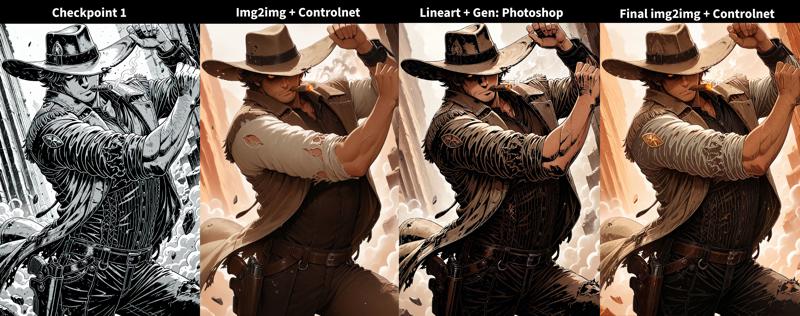

You can also combine line art and generation using img2img and ControlNet, but that takes more steps.