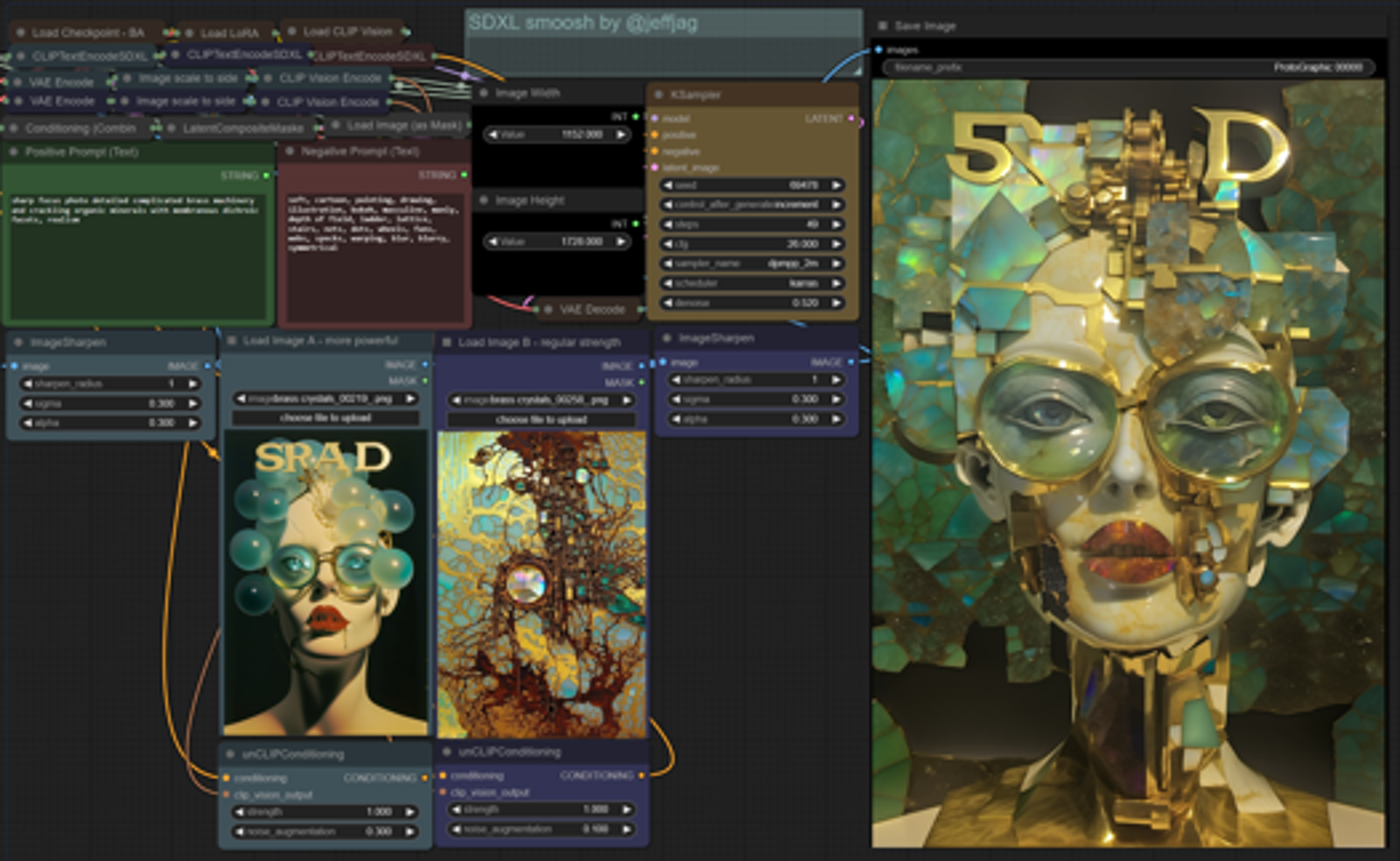

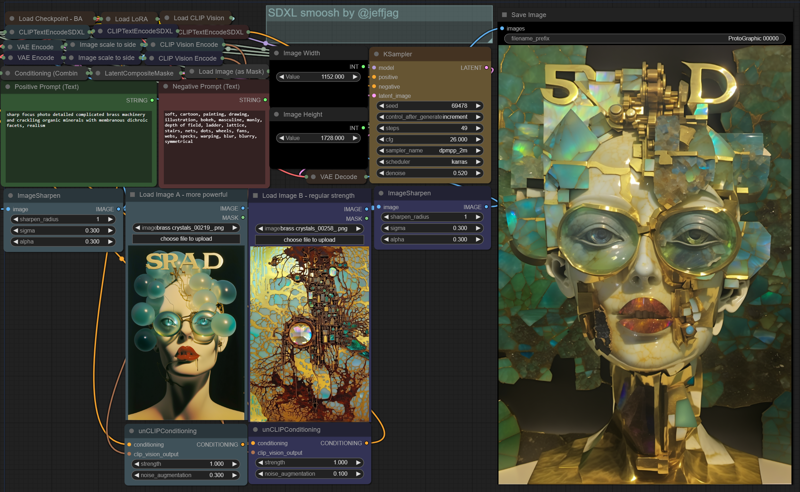

Introducing: #SDXL SMOOSH by @jeffjag

A #ComfyUI workflow to emulate "/blend" with Stable Diffusion. Have fun!

Grab the Smoosh v1.1 png or json and drag it into ComfyUI to use my workflow:

https://github.com/JeffJag-ETH/ComfyUI-SD-Workflows

Note: Please install ComfyUI, download the SDXL model, and get ComfyUI working before attempting this. It's fairly easy to use if you're familiar with Comfy.

SMOOSH is a bit more "literal" feeling than the MJ blend tool, but there are a lot of things you can tweak and adjust to get your desired results. Notes are included in the workflow.

You'll need to install ComfyUI-Manager and a few more custom nodes. When you load the image/workflow you can go to the manager and "install missing custom nodes" to fill the gaps.

I used the SDXL workflow from Scott Detweiler of Stability AI and the ComfyAnonymous unCLIPConditioning example as jumping off points.

Check out Scott's YouTube tutorials: https://www.youtube.com/watch?v=Zteta2_JvdA

and the unCLIP example: https://comfyanonymous.github.io/ComfyUI_examples/unclip/

I prefer the "all in one screen" interface style of comfy, so I packed everything in the corner that you don't need to ever look upon again with human eyes.

My setup varies from default setups in a few ways:

- I've included a LoRA loader. Keep in mind you'll need SDXL-compatible LoRAs to use with the SDXL 1.0 base model.

- Updated for SDXL with the "CLIPTextEncodeSDXL" and "Image scale to side" nodes so everything is sized right.

- Not starting with empty latent. Maybe I did something wrong, but this method I'm using works. Both images use the VAE Encode from the img2img workflow to contribute a Latent_image to start from. Both are combined with "Latent Composite Masked" using a 50% gray PNG file saved to the same file size as the intended output.

- Each image loader has color-coded friends to keep it straight.

Tweakable Variables:

- unCLIPConditioning controls how much of each image prompt you'll be absorbing with the smoosh. For ease-of-use try keeping strength at 1 and adjust "noise augmentation" from 0 (close to the original) to 1 (far from the original). I think the top image tends to be more dominant so I turn it down to 0.3-0.5 by default. Your results may vary.

- KSampler CFG cranked to all heck at 26 gives me great results. You may like it lower, but I think results get too blurry/soft below 15.

- KSampler Denoise around 0.5 is a good starting point but 0.4 to 0.6 give me good results. My sweet spot was 0.52-0.55. Lower than 0.4 gave me double image/overlay vibes. Above 0.6 will give you a lot of fun times and wild emotions! Whoooo!

- Text Prompts will influence the SMOOSH as per usual. I've found it helps to have at least something relating to the desired result even if you want straight smooshing of images.

- CLIP Vision Models. Newer versions of ComfyUI require that you use the clip_vision_g.safetensors model in the CLIP Vision Loader. You can get the file here: https://huggingface.co/comfyanonymous/clip_vision_g/tree/main

- Image Size. I simplified the image size section (Integer nodes) to propagate where needed so you don't have to keep track of that.

- Image Loaders first go to a slight image sharpener before being resized to fit exactly into the output size. I like crispy images.
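
For quick reference, the starting points above can be jotted down as a small Python sketch. This is just a note-taking aid, not anything ComfyUI reads: the class and field names are made up for illustration, and the thresholds are only the ones I mentioned above.

```python
from dataclasses import dataclass


@dataclass
class SmooshSettings:
    """Hypothetical container for the tweakable values described above."""

    noise_augmentation: float      # unCLIPConditioning: 0 = close to original, 1 = far
    unclip_strength: float = 1.0   # suggested to leave at 1 for ease of use
    cfg: float = 26.0              # KSampler CFG the author likes
    denoise: float = 0.5           # KSampler denoise starting point

    def notes(self) -> list[str]:
        """Return reminders for values outside the ranges described in the post."""
        out = []
        if not 0.0 <= self.noise_augmentation <= 1.0:
            out.append("noise augmentation is described on a 0 to 1 scale")
        if self.cfg < 15:
            out.append("CFG below 15 looked too blurry/soft to the author")
        if self.denoise < 0.4:
            out.append("denoise below 0.4 gave double image/overlay results")
        elif self.denoise > 0.6:
            out.append("denoise above 0.6 gets wild")
        return out
```

For example, `SmooshSettings(noise_augmentation=0.3, denoise=0.3).notes()` returns a single reminder about the overlay look at low denoise.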

50% gray image for mask: