https://github.com/AbstractEyes/comfy-clip-shunts/tree/dev

RIGHT NOW it's a lot like trying to pilot an airplane, so bear with it through its growing pains, please. The simplifiers are planned, and the experimental outcomes are coalescing into style gauges and noise-controlling agents.

For now you can fixate on the t5-unchained element, which is part of the overall structure but also independent of it in its own way.

It was easier to just catch-all in one big basket, instead of trying to build a bunch of smaller baskets.

V0.5 highlights

Massive overhauls across the board.

First,

beatrix52k's first cook failed, so I've been redesigning her core a bit here and there.

T5-unchained loads in the current setup for the single, dual, triple, and quad clip loaders.

This is tested to work with hidream without error or issue.

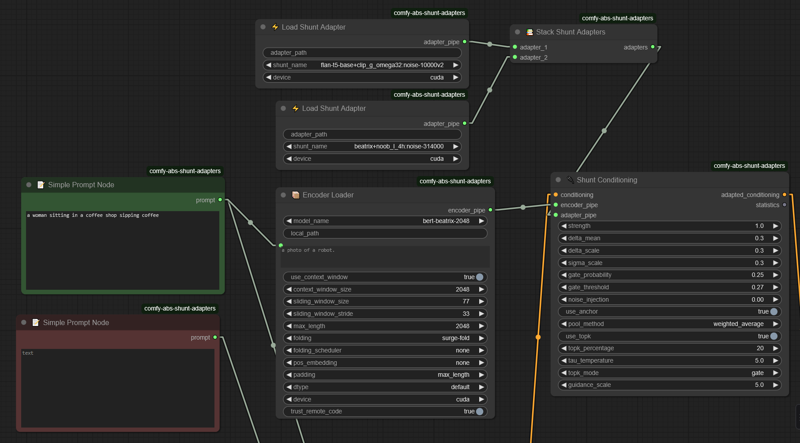

"EncoderLoader": "📦 Encoder Loader"

Loads any of the supported encoders and passes them down the pipeline. Some work; others have no real tools built for them yet. This will be expanded.

This is the primary loader and the workhorse of the current version.

"SimpleEncoderLoader": "🔍 Simple Encoder Loader",

A simpler variation that requires fewer steps. I think it's semi-working, but I'd need to test to be sure.

"LoadAdapterShunt": "⚡ Load Shunt Adapter",

The workhorse loader for the shunts, automatically jiggered to handle most, if not all, of the currently supported shunts on the repo.

It's a little wonky at times but it gets the job done.

"StackShuntAdapters": "📚 Stack Shunt Adapters",

Lets you pack them up. It's not the most convenient, but you can stack as many shunts as you want to run at runtime.

"MergeShunts": "🔀 Merge Shunt Adapters",

Allows very simple merging, has fun effects at times. I suggest trying to merge the laion shunts with the booru shunts.
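As a rough idea of what a "very simple" merge can look like, here is a sketch that linearly interpolates two sets of weights key by key. This is not the code behind MergeShunts; `merge_weights`, `alpha`, and the flat dict-of-lists layout are all made up for the illustration, and real shunts would hold tensors rather than Python lists.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]


def merge_weights(a: Weights, b: Weights, alpha: float = 0.5) -> Weights:
    """Linear interpolation: (1 - alpha) * a + alpha * b for every parameter."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if a.keys() != b.keys():
        raise ValueError("both adapters must have the same parameter names")
    merged: Weights = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"size mismatch for {name}")
        merged[name] = [
            (1.0 - alpha) * x + alpha * y for x, y in zip(a[name], b[name])
        ]
    return merged


# Hypothetical stand-ins for a laion shunt and a booru shunt.
laion = {"proj.weight": [1.0, 2.0], "gate.bias": [0.0]}
booru = {"proj.weight": [3.0, 6.0], "gate.bias": [1.0]}
print(merge_weights(laion, booru, alpha=0.25))
```

With `alpha=0.0` you get the first adapter back unchanged, and with `alpha=1.0` the second, so the value works as a single blend slider.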

"ShuntScheduler": "📊 Shunt Scheduler",

Tragically not functional yet, but the sampler is almost ready. It'll be ready by release.

"UnloadShuntModels": "🗑️ Unload Shunt Models",

Unloads the shunts. They are pretty lightweight, but I probably need one for the encoders as they can be quite large.

"ShuntConditioning": "🔌 Shunt Conditioning",

You pass conditioning through here and it gets modified by all the shunts.

Supports top_k, gated selection, and a multitude of other options that work very well with the conditioning process.
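To give a feel for what top_k combined with gating can mean in general, the sketch below adds a gate-weighted delta to only the `top_k` tokens with the highest gate values and leaves the rest untouched. It is a guess at the general technique, not the ShuntConditioning implementation; `apply_gated_delta`, `strength`, and the list-of-rows layout are hypothetical.

```python
from typing import List


def apply_gated_delta(
    cond: List[List[float]],
    delta: List[List[float]],
    gate: List[float],
    top_k: int,
    strength: float = 1.0,
) -> List[List[float]]:
    """Add gate-weighted deltas to the top_k tokens with the highest gate values."""
    if not (len(cond) == len(delta) == len(gate)):
        raise ValueError("cond, delta and gate must cover the same tokens")
    k = max(0, min(top_k, len(gate)))
    # Indices of the k largest gate values; every other token is left untouched.
    ranked = sorted(range(len(gate)), key=lambda i: gate[i], reverse=True)
    keep = set(ranked[:k])
    out = []
    for i, (c_row, d_row) in enumerate(zip(cond, delta)):
        if i in keep:
            out.append([c + strength * gate[i] * d for c, d in zip(c_row, d_row)])
        else:
            out.append(list(c_row))
    return out


# Three tokens with two features each; all values are made up.
cond = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
delta = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
gate = [0.5, 0.25, 1.0]
print(apply_gated_delta(cond, delta, gate, top_k=2))
```

In this toy run the token with the lowest gate value passes through unmodified, which is the kind of behavior that lets a small top_k limit how much of the prompt a shunt is allowed to touch.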

"ShuntConditioningAdvanced": "🎛️ Shunt Conditioning Advanced",

I believe I hijacked the simple one and made it advanced, so this one is technically the older version of advanced.

"SimpleShuntSetup": "🔧 Simple Shunt Setup",

Should just get a simple shunt setup going with no effort. Probably doesn't work, I'd need to check it.

General utility nodes

"Prompt": "📝 Simple Prompt Node",

"ConcatPrompts": "🔗 Concatenate Prompts",

Simple, really: one holds a prompt string, and the other squishes strings together.
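The quoted pairs throughout this list follow ComfyUI's usual registration pattern, where a node id maps to a class in `NODE_CLASS_MAPPINGS` and to a label in `NODE_DISPLAY_NAME_MAPPINGS`. Below is a minimal sketch of a string-joining node written in that pattern. The class name `ConcatPromptsSketch`, its inputs, and its category are hypothetical and are not copied from this repo.

```python
class ConcatPromptsSketch:
    """Hypothetical stand-in for a prompt-concatenation node."""

    @classmethod
    def INPUT_TYPES(cls):
        # ComfyUI reads this to build the node's input sockets and widgets.
        return {
            "required": {
                "prompt_a": ("STRING", {"multiline": True, "default": ""}),
                "prompt_b": ("STRING", {"multiline": True, "default": ""}),
                "separator": ("STRING", {"default": ", "}),
            }
        }

    RETURN_TYPES = ("STRING",)
    FUNCTION = "concat"  # name of the method ComfyUI calls
    CATEGORY = "utils/prompt"  # made-up menu location

    def concat(self, prompt_a, prompt_b, separator):
        # Skip empty inputs so the result has no dangling separator.
        parts = [p.strip() for p in (prompt_a, prompt_b) if p.strip()]
        return (separator.join(parts),)


# Node id -> class, and node id -> label shown in the UI.
NODE_CLASS_MAPPINGS = {"ConcatPromptsSketch": ConcatPromptsSketch}
NODE_DISPLAY_NAME_MAPPINGS = {"ConcatPromptsSketch": "🔗 Concatenate Prompts (sketch)"}

print(ConcatPromptsSketch().concat("a cat", " on a mat ", ", "))
```

Node methods return a tuple even for a single output, which is why `concat` wraps the joined string.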

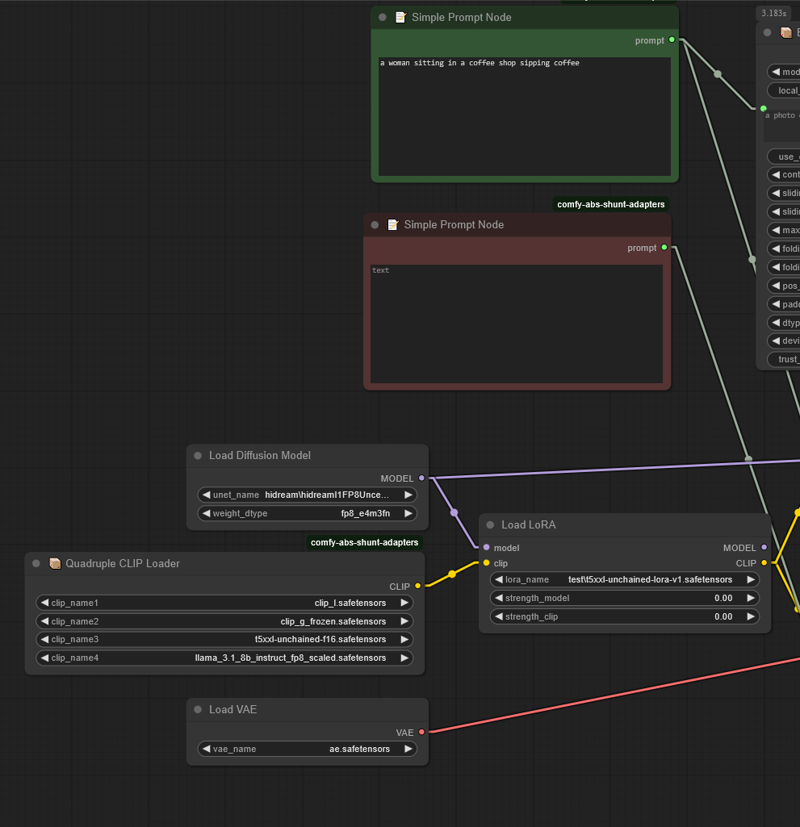

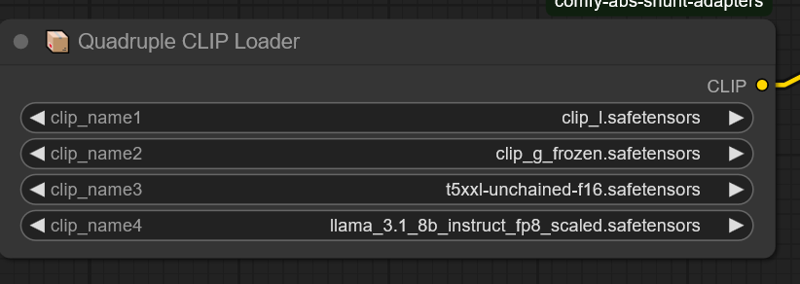

"AQuadrupleCLIPLoader": "📦 Quadruple CLIP Loader", # Loads a quadruple CLIP model (clip-l, clip-g, t5, llama)

"ACLIPLoader": "📦 A-CLIP Loader", # Loads a single CLIP model

"ADualCLIPLoader": "📦 Dual CLIP Loader", # Loads a dual CLIP model (clip-l, clip-g)

"ATripleCLIPLoader": "📦 Triple CLIP Loader", # Loads a triple CLIP model (clip-l, clip-g, t5)

These support t5-unchained-xxl and otherwise function identically to the core variations, with a twist: everything is encapsulated away from the comfyui system for easier modification, meaning it's all compartmentalized in a series of scripts.

Original T5;

Unchained + shunts to control background effects and noise through top_k

V0.2 highlights

Big Changes:

Massive Additions Incoming

Folded token window - enabling the earlier mentioned and broken 2048 token window code hooks that went unimplemented.

LLM integration - LLAMA, BERT

T5xxl-Unchained integration

Live hot-swap configuration potential for CLIP models

clip pipeline controllers for adding or removing nodes from clip

Full capability for encoder blending using the beatrix_x52 encoding resonator tasked with blending subjectivity from words directly into the clip encodings.

On full release this will include an entire suite of easy-to-use nodes that house the behavioral responses: helper nodes, and a simple hot-plug node that will just ENABLE it on a clip if you pass the pipeline through it, with a single dropdown for style modifications.

dtype and qtype conversion attempts

Currently Deployed Warning

This change will be housed in the dev branch for now, but it will be made core after testing.

If you have it in your workflows beware. I'll be deprecating multiple nodes and including some safety nets for those nodes, but I may not catch everything.

If a node DOES NOT WORK CURRENTLY, I'll try to fix it or remove it for behavioral incompatibility, so you may get conflicting changes if you changed or fixed one of those broken nodes yourself.

Current Problems

Many live nodes don't work correctly and many do. I'll be giving it the full bench test today to make sure each node's output matches what it is supposed to produce.

The T5 is fairly hardcoded, so I'm moving to softcode it and integrate configuration utilities, as well as a dedicated "models/huggingface" folder within the standard comfyui structure. This can be changed in the settings menu dedicated to the clip-shunts-suite, or by editing the config file.

Upcoming Core Changes for "DEV" branch:

Big bugs fixed so far:

timesteps not working correctly

schedulers not working correctly

Minor refactors

Most of the node structure will remain unchanged, however some nodes will have their behaviors moved to additional files. I'll try to ensure nothing gets destroyed in the process, since I will be micro managing the code instead of letting AI man the ship this time.

Configuration

Widened the configuration capability to include additional model types from the various additional LLMs that went through testing.

Refined encoder catches

This does a better job of detecting and validating which clip model is attached to the pipelines, and is less likely to accidentally target incorrect models.

T5-xxl-unchained integration

This enables the use of the T5xxl-unchained in standard pipelines while still preserving your standard t5xxl configurations.

Included an "Unchain-T5xxl" node, which hot-swaps the one you loaded, attempting to match the dtype conversion if possible. This requires the t5-unchained to be downloaded, or the checkbox enabled to allow it to download the model from huggingface into the "models" folder automatically.

A second node, "CHAIN-T5", will attempt to restore your originally loaded T5 model and all of its loaded traits.

This is fairly untested and will likely have trouble with quantized models, but I'm working out that variation asap.

Finally, a third node will ensure that vram is cleaned if it somehow sneaks by the model cleanup within COMFYUI.

Technically this was done months ago, but I had not integrated its return into the main pipeline.
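For the vram cleanup step, a generic version of the idea looks roughly like the sketch below: run Python's garbage collector, then ask PyTorch to release its cached CUDA memory. This is a plain PyTorch pattern, not the node's actual code, and `free_vram` is a hypothetical helper name. The torch import is optional here only so the sketch also runs on a machine without PyTorch.

```python
import gc


def free_vram() -> bool:
    """Run Python GC, then ask PyTorch to release cached CUDA memory.

    Returns True if the CUDA cache was cleared, False otherwise.
    """
    # Collect unreachable Python objects first so their tensors can be freed.
    gc.collect()
    try:
        import torch  # optional, so the sketch also runs without PyTorch
    except ImportError:
        return False
    if not torch.cuda.is_available():
        return False
    # Hands cached, unused blocks back to the driver; live tensors are untouched.
    torch.cuda.empty_cache()
    return True


print("CUDA cache cleared:", free_vram())
```

This only helps once nothing references the model anymore, so any variable still holding the swapped-out T5 has to be dropped before calling it.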

T5-Unchained has been given full support within four core nodes.

Each one auto-detects the loaded T5xxl model and unchains it if the unchained size is detected:

ACLIPLoad

ADualCLIPLoad

ATripleCLIPLoad

AQuadrupleCLIPLoad
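One way size-based detection like this could work is to look at the number of rows in the token embedding table and compare it with the stock T5 vocabulary. The sketch below assumes that "unchained size" refers to a larger embedding table and that the table lives under the `shared.weight` key, as it does in Hugging Face T5 checkpoints. `looks_unchained` is a hypothetical helper, not the loaders' real check, and the larger vocabulary number in the example is made up.

```python
from typing import Mapping, Tuple

STANDARD_T5_VOCAB = 32128  # embedding rows in the stock T5 v1.1 checkpoints


def looks_unchained(
    shapes: Mapping[str, Tuple[int, ...]],
    embed_key: str = "shared.weight",
    standard_vocab: int = STANDARD_T5_VOCAB,
) -> bool:
    """Guess whether a T5 checkpoint uses an extended vocabulary.

    `shapes` maps parameter names to tensor shapes, e.g. collected from a
    state dict with {name: tuple(tensor.shape)}.
    """
    if embed_key not in shapes:
        raise KeyError(f"{embed_key!r} not found; is this a T5 checkpoint?")
    vocab_rows = shapes[embed_key][0]
    return vocab_rows > standard_vocab


# Shapes only, no real weights. 70000 is a made-up extended vocabulary size.
stock = {"shared.weight": (32128, 4096)}
extended = {"shared.weight": (70000, 4096)}
print(looks_unchained(stock), looks_unchained(extended))
```

Checking shapes rather than file names means a renamed checkpoint is still classified by what it actually contains.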

BERT-uncased and BERT-cased model integration

This enables the usage of bert within the paradigm. Full documentation for the associative integration will be included.

This will support bert models of many shapes and many forms, with multiple integration capabilities for summarization, masking, and so on. You can use BERT to interrogate words, classify your prompts, and so on.

Includes loader, introductory and simple inference capabilities, res4lyf sampling capability, and unloading.

NOMIC-BERT model integration

Similar to bert, but requires remote code to be executed from the nomic-bert-2048 or the abstractphil/bert-beatrix-2048 repo, depending on which one you load.

The abstractphil/bert-beatrix-2048 repo has the SAME FROZEN custom code hosted on nomic-bert-2048, and for official release it will be given an MD5 validation check through a third-party validator to ensure the code itself hasn't been tampered with.
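If you want to run this kind of check yourself before the official one lands, the sketch below hashes a downloaded file with MD5 and compares it against a digest you trust. The helper names are hypothetical, and the temporary file only stands in for a downloaded modeling script; the expected digest would have to come from a source you trust.

```python
import hashlib
import tempfile
from pathlib import Path


def md5_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, read in chunks to keep memory low."""
    digest = hashlib.md5()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_file(path: Path, expected_md5: str) -> bool:
    """True if the file's MD5 matches the expected hex digest."""
    return md5_of_file(path) == expected_md5.strip().lower()


# Demo on a throwaway file standing in for a downloaded script.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "sample.py"
    sample.write_bytes(b"hello")
    sample_digest = md5_of_file(sample)
    sample_ok = verify_file(sample, sample_digest)
    print(sample_digest, sample_ok)
```

Swapping `hashlib.md5` for `hashlib.sha256` gives a stronger check with the same structure, if the published digest uses that algorithm.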

If you have ANY security concerns, analyze it yourself and make an issue.

ALWAYS be cautious of remote code. This code is required to load nomic bert as it's kind of hacky, but it does enable the 2048 context windows.

Without this, nomic-bert-2048 will misbehave.
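For anyone loading these models outside the nodes, one cautious pattern is to pin the download to a commit you have already read through, so the remote code cannot change underneath you. The sketch below uses the `revision` and `trust_remote_code` arguments of Hugging Face transformers. The helper `load_pinned_bert` is hypothetical, and the choice of `AutoModelForMaskedLM` is an assumption about which model class fits; it is not how the loader nodes in this repo are confirmed to work.

```python
def load_pinned_bert(repo_id: str, revision: str):
    """Load a model that ships custom code, pinned to one exact commit."""
    # Imported lazily so this file can be imported without transformers installed.
    from transformers import AutoModelForMaskedLM

    return AutoModelForMaskedLM.from_pretrained(
        repo_id,
        revision=revision,  # a commit hash you have reviewed
        trust_remote_code=True,  # runs Python from the repo: review it first
    )


# Example call (not executed here; it needs network access and a real commit hash):
# model = load_pinned_bert("abstractphil/bert-beatrix-2048", revision="<reviewed-commit-hash>")
```

If a repo's config points at code hosted in a different repo, transformers also accepts a `code_revision` argument to pin that code separately.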

LLAMA 1b and 8b instruct integration

Along with the other models, I'll be integrating LLAMA and the more commonly used llama models with this build as well.

These will have llm request capability and a simple override set for usage.

LLAMA loader and unloader will also be present.

Future Changes for "DEV" branch:

QWEN / INSTRUCT

Good for generic requests and so on.

GPT4 summarization, vision, and audio integration.

Useful for categorization, classification, summarization, and whatever else you would want.

SIGLIP and JoyCaption integration

Along with the other models, the more common vision models will also be included for image identification and utilization.

imgutils integration

This will be a system similar to the one I used to caption all my images.

Looks at a picture, runs a detection, returns useful captions from that.

This will include a generic node for loading and passing text through, as well as a checklist that, instead of enabling one at a time, will just have the full list so you can turn them on or off.

There will be an offloader for vram, and configurations in the menu for these.

full clip, llm, and model merge structure

This will enable the usage of much more complex formulas than are currently enabled in any of the merge systems - including schedulers, teacher/student, master/apprentice, and a series of interpolation features with this.

Integration of the beatrix_x100k, which is a more advanced, physics-heavy 100,000-shunt variation of the BEATRIX_x52k, which has only 52,000 and is lighter on the physics rules.