Retraining a model for a new Text Encoder

The following are notes based on my personal observations, and on input from my research partner, ChatGPT4.1, which is surprisingly knowledgeable about training these days.

Training and research are still ongoing.

Target

My target, as of this writing, is to retrain SD1.5 to use the T5 text encoder (TE), with a projection layer mapping its output to 768 dimensions. I am leaving the VAE untouched and will only be training the UNet.

(Edit, July 5th update: I have since pivoted to using T5 plus the SDXL VAE.)
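A minimal sketch of such a projection, assuming a single linear layer and a T5-XXL-sized encoder (hidden size 4096). The notes do not say which T5 variant or projection design is used, so `T5ToSD15Projection` and `t5_dim` are hypothetical, and random tensors stand in for real T5 output.

```python
import torch
import torch.nn as nn


class T5ToSD15Projection(nn.Module):
    """Hypothetical adapter mapping T5 encoder states into SD1.5's 768-dim cross-attention space."""

    def __init__(self, t5_dim: int = 4096, unet_dim: int = 768):
        super().__init__()
        # t5_dim=4096 assumes a T5-XXL-sized encoder; set it to your encoder's d_model.
        self.proj = nn.Linear(t5_dim, unet_dim)

    def forward(self, t5_hidden: torch.Tensor) -> torch.Tensor:
        # (batch, seq_len, t5_dim) -> (batch, seq_len, unet_dim)
        return self.proj(t5_hidden)


# Random stand-in for real T5 encoder output: batch of 2, 77 tokens.
proj = T5ToSD15Projection(t5_dim=4096)
fake_t5 = torch.randn(2, 77, 4096)
cond = proj(fake_t5)
print(cond.shape)  # torch.Size([2, 77, 768])
```

The projected tensor takes the place of the CLIP output as the UNet's `encoder_hidden_states`. If the projection is trained rather than frozen, its parameters would go into the optimizer alongside the UNet's.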

TE Scaling

Note that I had to tweak the scaling of the TE output embeddings to bring their standard deviation to around 0.2.

This is apparently what the SD1.5 model is optimized for.
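One way to do this rescaling, sketched under assumptions: measure the overall standard deviation of the (projected) TE output on a calibration batch, then multiply by a fixed gain so it lands near 0.2. The exact method used here is not stated; `compute_embedding_scale` and `TARGET_STD` are hypothetical names, and random data stands in for real embeddings.

```python
import torch

TARGET_STD = 0.2  # approximate std mentioned above for SD1.5 conditioning


@torch.no_grad()
def compute_embedding_scale(embeddings: torch.Tensor, target_std: float = TARGET_STD) -> float:
    """Return a scalar gain that brings the embeddings' overall std to target_std."""
    current_std = embeddings.float().std().item()
    return target_std / current_std


# Hypothetical calibration batch; in practice this would be (projected) TE output
# for a set of real captions, computed once before training.
calib = torch.randn(8, 77, 768) * 1.7
scale = compute_embedding_scale(calib)
scaled = calib * scale
print(f"gain={scale:.4f}, std after scaling={scaled.std().item():.3f}")
```

Computing the gain once and keeping it fixed keeps the conditioning scale identical between training and inference, whereas normalizing each batch on the fly would shift slightly from batch to batch.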

Resetting unet layers

In theory, it is best to reset the layers that hold the learned CLIP -> UNet mapping.

You might choose to reset the "QK" layers, the "cross attention" layers, or ALL attention layers.

Here's what GPT has to say about that:

Cross-attention reset: Only resetting cross-attention layers keeps most of the original spatial/visual knowledge (i.e., how to render “anything”), but forces relearning of how text embeddings are mapped to image features. This tends to be most effective when swapping text encoders, especially with a strong guiding concept (like just “woman”).

QK-only reset: Resetting only the QK (query/key) weights breaks attention mechanisms, and often requires higher LR or longer training to re-converge. It can lead to noise if the learning rate isn’t high enough to “unstick” those layers.

Full attention reset: This is the most destructive, and generally leads to output collapse or heavy noise unless you crank up the LR, dataset size, and/or training duration.

GPT's comments above were in response to my question about why only the cross-attention reset gave useful results at LR 1e-5.
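The three reset options can be sketched as a name filter over the UNet's attention projections. This assumes diffusers' naming, where `attn1` is self-attention and `attn2` is cross-attention inside each transformer block, and it assumes "QK" means the `to_q`/`to_k` projections of both. It re-initializes with PyTorch's default `nn.Linear` init, which may differ from what was actually used here; `should_reset` and `reset_attention` are hypothetical helpers, and a toy module with the same naming stands in for the real UNet.

```python
import torch.nn as nn

# Projection names inside diffusers' Attention modules ("to_out.0" is the output Linear).
QK_NAMES = {"to_q", "to_k"}
ALL_NAMES = {"to_q", "to_k", "to_v", "to_out.0"}


def should_reset(name: str, mode: str) -> bool:
    """Decide whether a Linear layer should be re-initialized for a given reset mode.

    Assumes diffusers naming: attn1 = self-attention, attn2 = cross-attention.
    """
    parts = name.split(".")
    is_self, is_cross = "attn1" in parts, "attn2" in parts
    if not (is_self or is_cross):
        return False
    tail = ".".join(parts[-2:]) if parts[-1] == "0" else parts[-1]
    if mode == "qk":      # query/key projections of every attention block (assumed meaning)
        return tail in QK_NAMES
    if mode == "cross":   # every projection in the cross-attention blocks only
        return is_cross and tail in ALL_NAMES
    if mode == "all":     # every projection in every attention block
        return tail in ALL_NAMES
    raise ValueError(f"unknown reset mode: {mode!r}")


def reset_attention(unet: nn.Module, mode: str) -> list[str]:
    """Re-initialize selected attention Linears in place with PyTorch's default init."""
    reset = []
    for name, module in unet.named_modules():
        if isinstance(module, nn.Linear) and should_reset(name, mode):
            module.reset_parameters()
            reset.append(name)
    return reset


# Toy stand-in that mimics the naming of one diffusers transformer block.
class ToyAttention(nn.Module):
    def __init__(self, dim: int = 8):
        super().__init__()
        self.to_q = nn.Linear(dim, dim, bias=False)
        self.to_k = nn.Linear(dim, dim, bias=False)
        self.to_v = nn.Linear(dim, dim, bias=False)
        self.to_out = nn.ModuleList([nn.Linear(dim, dim), nn.Dropout(0.0)])


class ToyBlock(nn.Module):
    def __init__(self):
        super().__init__()
        self.attn1 = ToyAttention()
        self.attn2 = ToyAttention()
        self.ff = nn.Linear(8, 8)


print(reset_attention(ToyBlock(), "cross"))
# ['attn2.to_q', 'attn2.to_k', 'attn2.to_v', 'attn2.to_out.0']
```

With a real model, the same call would go on the UNet loaded through diffusers' `UNet2DConditionModel.from_pretrained(..., subfolder="unet")`; the returned list makes it easy to confirm which layers were actually reset.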

QK reset samples

Here is what the output looked like for the first 5 epochs after a QK-only reset, at LR 1e-5.

The first image is step 0.

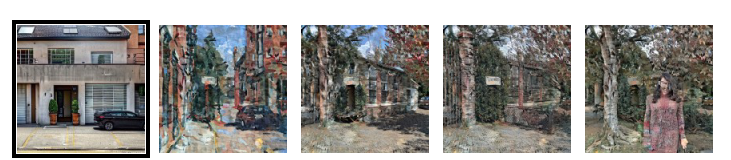

Cross-attention reset

In comparison, here are the first 5 epochs with only the cross-attention layers reset, instead of all attention layers.

Note that in both this and the previous example, the sample prompt is "woman"

Full attention reset

The results for the full attention reset at LR 1e-5 aren't worth posting; they are mostly just noise.

After 10 epochs, there is a slight suggestion of something, but oddly, it mostly resembles the TREE in the last image from the cross-attention reset.

Full attention reset, take 2

When I increased the dataset size and trained ONLY on "woman solo" type image/caption pairs, I got the following progression at LR 4e-5, bs64, constant schedule (one sample image per epoch).

This run used around 20k images.
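For reference, the arithmetic linking dataset size, batch size, and "one sample image per epoch": at bs64, ~20k images comes to roughly 313 optimizer steps per epoch, assuming no gradient accumulation. `steps_per_epoch` is a hypothetical helper.

```python
import math


def steps_per_epoch(num_images: int, batch_size: int, drop_last: bool = False) -> int:
    """Optimizer steps for one pass over the data (no gradient accumulation assumed)."""
    if drop_last:
        return num_images // batch_size
    return math.ceil(num_images / batch_size)


# ~20k images at bs64, as in the run above.
print(steps_per_epoch(20_000, 64))                  # 313
print(steps_per_epoch(20_000, 64, drop_last=True))  # 312
```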

Bonus fun image.

Here's the full sample image the title banner was drawn from

(LR 5e-5, attn reset, 1 epoch)

Note that while my first round of comparisons used 1e-5, I had to crank it to 5e-5 to get useful output after the full attention reset.

Observations for later

T5 + SDXL VAE

LR 4e-5, bs64, cosine schedule, 10000 steps, with SNR weighting, seemed to be the best I could do with a single round of training on a 20k dataset made up entirely of "woman, solo" images.
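A sketch of the schedule and loss weighting named above, under assumptions: the cosine schedule is taken to mean a plain cosine decay from 4e-5 to 0 over 10,000 steps with no warmup, and the SNR setting is taken to mean Min-SNR-γ loss weighting (exposed as `--snr_gamma` in diffusers' example training scripts) with γ = 5 and epsilon prediction. The noise schedule is SD1.5's `scaled_linear` betas (0.00085 to 0.012 over 1000 steps). All function names are hypothetical. It also shows that 10,000 steps at bs64 over ~20k images is about 32 epochs.

```python
import math


def cosine_lr(step: int, total_steps: int = 10_000, base_lr: float = 4e-5) -> float:
    """Plain cosine decay from base_lr to 0 (no warmup assumed)."""
    progress = min(step, total_steps) / total_steps
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


def sd15_alphas_cumprod(num_steps: int = 1000, beta_start: float = 0.00085,
                        beta_end: float = 0.012) -> list[float]:
    """Cumulative alpha products for SD1.5's "scaled_linear" beta schedule."""
    lo, hi = math.sqrt(beta_start), math.sqrt(beta_end)
    # Betas are linear in sqrt(beta), then squared.
    betas = [(lo + (hi - lo) * i / (num_steps - 1)) ** 2 for i in range(num_steps)]
    alphas_cumprod, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alphas_cumprod.append(prod)
    return alphas_cumprod


def min_snr_weight(alpha_bar: float, gamma: float = 5.0) -> float:
    """Min-SNR-gamma weight for epsilon prediction: min(SNR, gamma) / SNR."""
    snr = alpha_bar / (1.0 - alpha_bar)
    return min(snr, gamma) / snr


alphas_cumprod = sd15_alphas_cumprod()
weights = [min_snr_weight(a) for a in alphas_cumprod]
# The per-sample MSE loss at timestep t would be multiplied by weights[t] before averaging:
# low-noise (high-SNR) timesteps are down-weighted, high-noise ones keep weight 1.
print(f"weight at t=0: {weights[0]:.4f}, at t=999: {weights[-1]:.4f}")
print(f"lr at step 0: {cosine_lr(0):.1e}, step 5000: {cosine_lr(5000):.1e}")

# Epoch arithmetic for the run above: 10,000 steps x bs64 over ~20k images.
print(10_000 * 64 / 20_000)  # 32.0
```

In a PyTorch training loop the weighting is applied per sample to the unreduced MSE before taking the mean; diffusers also provides a `compute_snr` helper in `diffusers.training_utils` for computing the SNR term.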

However, after augmenting the dataset to 30k images with other content, it may work better with a LOWER LR?

Still experimenting...