A Fresh Approach: Opinionated Guide to SDXL Lora Training

Updating constantly to reflect current trends, based on my learnings and findings. Please note, for people who are not neurodivergent: I will not be re-translating this into "proper English", because Gemini and other LLMs will fabricate information based on incorrect findings. If you don't like my crazy lingo, that's on you to find another article! I also removed a few PG-13 pictures because some people have safety filters on, and I don't want to sit there mincing words to a credit card company about how something isn't PG or PG-13!

Preface

This update features significant rewrites and omits some outdated information, showcasing more recent training methodologies. Please note, this guide reflects my personal opinions and experiences. If you disagree, that's okay; there's room for varied approaches in this field. There are metadata examples of recent SDXL, Pony and Illustrious Lora trains, pulled using Xypher's metadata tool linked below.

The original article was written in August 2023. The original SD 1.5 and XL information has since been removed, to keep up with current trends and with updates in my own learning. The old SDXL findings were incorrect, and they're still being drummed into LLMs, because of a certain "PHD IN MACHINE LEARNING" dude who consistently pushes his rhetoric by paywalling content. I'm not here to start a fight over that, you do you boo!

Software & Site Suggestions (OFFSITE)

We can't provide full tutorials for ALL of the listed tools, only for the one we're developing.

We're developing a Jupyter Offsite tool: https://github.com/Ktiseos-Nyx/Lora_Easy_Training_Jupyter/

Free Colab Notebooks: https://github.com/hollowstrawberry/kohya-colab / https://github.com/Jelosus2/Lora_Easy_Training_Colab

One Trainer: https://github.com/Nerogar/OneTrainer

Derrian Distro's main GUI (LoRA Easy Training Scripts): https://github.com/derrian-distro/LoRA_Easy_Training_Scripts

SimpleTuner: https://github.com/bghira/SimpleTuner

KohyaSS CLI: https://github.com/kohya-ss/sd-scripts/

Bmaltais/KohyaSS Gradio GUI: https://github.com/bmaltais/kohya_ss

LastBen: https://github.com/TheLastBen/fast-stable-diffusion

TensorArt, ShakkerAI, Moescape, and Civitai have their own Lora trainers. For now, this guide STILL largely focuses on Civitai, until our own trainer is working better.

SD Web UI Extension: https://github.com/hako-mikan/sd-webui-traintrain

Image Captioning for ComfyUI: https://github.com/LarryJane491/Image-Captioning-in-ComfyUI

Derrian's backend for servers: https://github.com/derrian-distro/LoRA_Easy_Training_scripts_Backend

ComfyUI Lora Trainer: https://github.com/LarryJane491/Lora-Training-in-Comfy

Train WebUI: https://github.com/Akegarasu/lora-scripts

Lora Studio: https://github.com/michaelringholm/lora-studio

SDXL to Diffusers: https://github.com/Ktiseos-Nyx/sdxl-model-converter

Huggingface Backup: https://github.com/Ktiseos-Nyx/HuggingFace_Backup

Dataset Tools: https://github.com/Ktiseos-Nyx/Dataset-Tools

WIP Gradio XL to Diffusers: https://github.com/Ktiseos-Nyx/Gradio-SDXL-Diffusers

Runpod: https://runpod.io/?ref=yx1lcptf (our Referral)

VastAI: https://cloud.vast.ai/?ref=70354

Xypher's Lora Metadata: https://xypher7.github.io/lora-metadata-viewer/

Training Essentials for SDXL Base Models

First and foremost, quality over quantity in your data collection is crucial. Even a small dataset of around 10 images can yield results. Be mindful of ethical considerations, especially concerning copyrighted content.

Collect Your Data: Sources like Nijijourney and Midjourney are great synthetic data resources. You can use various tools to scrape Gelbooru and other sites, as well as Chrome/Firefox extensions to mass-download images from places like Pinterest. You can also literally create your own datasets from your own AI creations. Be mindful of license limitations downstream: Flux.1 dev isn't peachy keen on commercial use, and this may affect the downstream use of your models.

Upscale as Needed: Aim for images predominantly above 512 pixels, ideally 1024. Onsite, 2048 is the maximum, although consistency is key. You can go all the way up to 4096 for offsite training, and you ARE NOT limited to 1:1 for resolution.
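If you want to sanity-check a folder against these size targets before uploading, here's a minimal Python sketch. `plan_resize` is a hypothetical helper, not part of any trainer: it only works out target sizes (shortest side up to 1024, longest side capped at 2048 onsite or 4096 offsite) and leaves the actual upscaling to whatever upscaler you like.

```python
def plan_resize(width, height, min_side=1024, max_side=2048):
    """Suggest a target size for one training image (hypothetical helper).

    - Upscale so the shortest side reaches min_side.
    - Never let the longest side exceed max_side (2048 onsite, 4096 offsite).
    Returns (new_width, new_height, action).
    """
    scale = 1.0
    if min(width, height) < min_side:
        scale = min_side / min(width, height)
    # The cap wins over the minimum, so very wide/tall images get capped.
    if max(width, height) * scale > max_side:
        scale = max_side / max(width, height)
    new_w, new_h = round(width * scale), round(height * scale)
    if scale > 1:
        action = "upscale"
    elif scale < 1:
        action = "downscale"
    else:
        action = "keep"
    return new_w, new_h, action


if __name__ == "__main__":
    for size in [(512, 768), (1024, 1024), (4000, 3000)]:
        print(size, "->", plan_resize(*size))
```

Aspect ratio is preserved on purpose, since you're not limited to 1:1.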

Organize Your Folders: If you're using the Civitai trainer, you don't need to worry about this, assuming you're not a Mac user. Mac users, no matter the platform, SHOULD make sure all resource forks are removed, because Civitai's trainer doesn't strip resource forks from macOS-based folders. Offsite services usually remove anything that isn't an image or text file from folders before training. If you're preparing something via Bmaltais, Derrian Distro or otherwise, please follow the folder setup for whichever tool you're using. Edit: If you have access to Keka (an archive/zip tool), feel free to use it to compress your files; it will remove resource forks.
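For Mac users, here's a minimal cleanup sketch, assuming the debris you care about is the usual macOS leftovers: AppleDouble "._" resource-fork files, .DS_Store files and __MACOSX folders. `strip_mac_debris` is a hypothetical helper, and it deletes files, so run it on a copy of your dataset first.

```python
import shutil
import tempfile
from pathlib import Path


def strip_mac_debris(root):
    """Delete macOS leftovers under root; return removed paths relative to root.

    Removes AppleDouble resource-fork files ("._name"), .DS_Store files,
    and whole __MACOSX folders (created by macOS's built-in zip).
    """
    root = Path(root)
    removed = []
    # sorted() materializes the matches before anything is deleted.
    for folder in sorted(root.rglob("__MACOSX")):
        if folder.is_dir():  # may be gone already if nested in a removed one
            shutil.rmtree(folder)
            removed.append(folder.relative_to(root).as_posix())
    for path in list(root.rglob("*")):
        if path.is_file() and (path.name.startswith("._") or path.name == ".DS_Store"):
            path.unlink()
            removed.append(path.relative_to(root).as_posix())
    return sorted(removed)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "img.png").write_bytes(b"png")
        (Path(tmp) / "._img.png").write_bytes(b"fork")
        print(strip_mac_debris(tmp))
```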

Resolution: Sometimes doing 1:1 CAN help, but from my experience it doesn't matter much. If your Lora trainer uses what's called "BUCKETING", it groups your images by aspect ratio and resizes each one to a matching bucket size, so non-square images are handled. SDXL itself was trained on several different sizes, not just 1:1 resolution.
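To show roughly what bucketing does, here's a simplified sketch in the spirit of kohya-style aspect-ratio bucketing, not any trainer's exact code: it builds (width, height) buckets in multiples of 64 that stay within a 1024x1024 pixel budget, then puts each image in the bucket with the closest aspect ratio. The function names and the 512/2048 side limits are assumptions for illustration.

```python
def make_buckets(max_area=1024 * 1024, step=64, min_side=512, max_side=2048):
    """Candidate (width, height) buckets: multiples of `step`, area <= max_area."""
    buckets = set()
    for width in range(min_side, max_side + 1, step):
        # Tallest multiple of `step` that keeps width * height within max_area.
        height = min((max_area // width) // step * step, max_side)
        if height >= min_side:
            buckets.add((width, height))
            buckets.add((height, width))
    return sorted(buckets)


def assign_bucket(width, height, buckets):
    """Pick the bucket whose aspect ratio is closest to the image's."""
    ratio = width / height
    return min(buckets, key=lambda b: abs(b[0] / b[1] - ratio))


if __name__ == "__main__":
    buckets = make_buckets()
    print(len(buckets), "buckets")
    print(assign_bucket(1664, 2432, buckets))  # a portrait image
```

The trainer then resizes (and, if needed, crops) each image to its bucket, which is why a mixed-aspect dataset is fine.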

More information from the master himself Holostrawberry can be read here: https://arcenciel.io/articles/1

Choosing a Base Model

SD 1.5 Days: This is a fun discussion! Back in the day on SD 1.5, I used to believe in the value of choice. Sometimes there were clear reasons to use ANYLORA (not really lol) and sometimes clear reasons to use NAI. This used to cause "turf wars", and oh boy, you'd see popcorn flying.

Today's choices: This all depends on what you're doing, whose market you're going for and why. I fly between Pony and Illustrious and am going to try NoobAI XL soon; it's just that the Civitai online trainer doesn't have an option for it, and when I tried it, it fried itself. XL still handles a lot of interesting concepts, and I still offer it myself. You now even have the option of FLUX and SD3 models.

My Choices: I tend to stick to what my userbase wants, and mostly that's Animagine + SDXL, Illustrious/NoobAI & PonyXL. The occasional Flux and old-school SD 1.5 slips in.

Peer Discussion: There is professional discussion amongst peers on which models are still viable, and for what reasons. Because I'm still not sure of all the technical jargon, or of "WHY" something is "cooked" or "fried", it's hard for me to clearly say yes or no on models. This is for your own exploration: if a TENC (text encoder) is "FRIED", you'll have to experience that for yourself.

Repeats and Epochs

The number of repeats depends on the level of detail and fidelity you want in your models. It also depends on whether you're training ON SITE or OFF SITE. Don't follow the 2023 advice of "NO REPEATS": we can use repeats, you're safe. That being said, it largely depends on the size of your dataset, and on whether you're using Civitai or another site-based trainer. Offsite, well, you do you boo: repeats multiply your steps per epoch, so they can mean longer training times. Onsite for Civitai? More buzz = more repeats. How I train is how I train, and I'm starting to look for OTHER ways of doing things instead of just FSk-ing around and finding out, and SD 1.5'ing it until the thing looks burned in.
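The step math behind repeats is plain multiplication. A minimal sketch, assuming steps per epoch = images x repeats / batch size (real trainers round per bucket, so treat it as an estimate): the Rogue (Illustrious) metadata further down logs 413 images, 4 repeats, batch size 2 and 5 epochs, which works out to 826 steps per epoch and 4130 total, matching its ss_steps. `repeats_for_target` is a hypothetical helper for going the other way, for example when aiming above the ~3600 steps mentioned in the April 2025 edit.

```python
import math


def steps_per_epoch(img_count, n_repeats, batch_size=1, grad_accum=1):
    """Approximate optimizer steps per epoch (ignores per-bucket rounding)."""
    return math.ceil(img_count * n_repeats / (batch_size * grad_accum))


def total_steps(img_count, n_repeats, epochs, batch_size=1, grad_accum=1):
    """Approximate total optimizer steps for the whole run."""
    return steps_per_epoch(img_count, n_repeats, batch_size, grad_accum) * epochs


def repeats_for_target(img_count, target_steps, epochs, batch_size=1):
    """Smallest repeat count whose total steps reach at least target_steps."""
    return math.ceil(target_steps * batch_size / (img_count * epochs))


if __name__ == "__main__":
    # Rogue (Illustrious): 413 images, 4 repeats, 5 epochs, batch size 2.
    print(total_steps(413, 4, 5, batch_size=2))
    # Hypothetical 50-image dataset, 10 epochs, batch 2, aiming for 3600+ steps.
    print(repeats_for_target(50, 3600, 10, batch_size=2))
```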

Learning Rate and Optimization

2024 Update: Base learning rates are set at 5e-4 (0.0005), while the text encoder rate for concepts and characters can be adjusted to 1e-4 (several of the examples below use 5e-5). Consider turning off the text encoder for simpler styles.
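The learning rate isn't the only knob on step size: the metadata examples also log ss_network_dim and ss_network_alpha. In kohya-style networks.lora the low-rank update is multiplied by alpha / dim, so alpha at half of dim halves the LoRA's contribution compared to alpha = dim at the same learning rate. That's a rough intuition, not an exact LR equivalence. A tiny sketch using dim/alpha pairs from the examples in this article (`lora_scale` is just an illustrative name):

```python
def lora_scale(network_dim, network_alpha):
    """Multiplier applied to the LoRA update in kohya-style networks.lora."""
    return network_alpha / network_dim


# (dim, alpha) pairs copied from the metadata examples in this article.
EXAMPLES = {
    "Gold_Filigree_Style (Pony)": (32, 32),
    "Rogue_X-men_Illustrious": (32, 16),
    "KAwaiiDEvicesSDXL": (16, 8),
    "DUSK_XL_MISUOFUJIWARA": (64, 32),
}

if __name__ == "__main__":
    for name, (dim, alpha) in EXAMPLES.items():
        print(f"{name}: dim={dim} alpha={alpha} scale={lora_scale(dim, alpha)}")
```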

Optimization: Adafactor and AdamW8bit are reliable choices, with Prodigy suited to smaller datasets due to its limitations with larger volumes. As of 2024, AdamW8bit is NOW available on Civitai's onsite trainer, and it's largely one of the better ones to use there. That being said, if you're using Derrian Distro, there is "LoraEasyCustomOptimizer.came.CAME". I have yet to properly test this myself, but plenty of my peers use it and are totally good with it. (I did test it, but my level of testing does NOT = good results, and that's a PEBKAC issue.)

LR Scheduler: The Civitai trainer doesn't have that many options, and most people default to Cosine. Back in the 1.5 days we sometimes did constant with restarts. My peers recommend "LoraEasyCustomOptimizer.RexAnnealingWarmRestarts.RexAnnealingWarmRestarts". This is not something I have a lot of data on. CAME/REX are great for low step counts and RAM optimization, but since I forgot to change a few settings when testing my Lora trainer, I burnt one Lora, and v-pred wasn't working on the first one lol.
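For a feel of what "cosine" vs "cosine_with_restarts" (both seen in the metadata below) do to the learning rate over a run, here's a minimal sketch modeled on the formulas behind Hugging Face transformers' `get_cosine_schedule_with_warmup` and `get_cosine_with_hard_restarts_schedule_with_warmup`, with warmup left out. Your trainer may add warmup or count cycles differently, so treat it as an illustration.

```python
import math


def cosine_lr(step, total_steps, base_lr):
    """'cosine': one smooth decay from base_lr down to 0 (no warmup)."""
    progress = step / total_steps
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


def cosine_with_restarts_lr(step, total_steps, base_lr, num_cycles=1):
    """'cosine_with_restarts': the decay repeats num_cycles times,
    jumping back to base_lr at the start of each cycle (hard restarts)."""
    progress = step / total_steps
    if progress >= 1.0:
        return 0.0
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * ((num_cycles * progress) % 1.0)))


if __name__ == "__main__":
    for step in (0, 25, 50, 75, 99):
        print(step, cosine_lr(step, 100, 5e-4), cosine_with_restarts_lr(step, 100, 5e-4, num_cycles=2))
```

With num_cycles=1 the two schedules trace the same curve; the restarts only show up with more cycles.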

Additional Tips

Dataset Organization: Organize this however you're comfortable. My Dataset Tools Python program is still WIP but somewhat working, and there are plenty of others out there. Be sure to check for duplicates, either manually or with the aid of a program.
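A minimal duplicate check, assuming "duplicate" means byte-identical files: it hashes every image with SHA-256 and reports groups that share a hash. Resized or re-encoded copies won't match this way; for those you'd want a perceptual-hash tool such as the imagehash package. `find_exact_duplicates` and the extension list are illustrative choices, not from any specific tool.

```python
import hashlib
import tempfile
from collections import defaultdict
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp", ".bmp"}


def find_exact_duplicates(folder):
    """Group images whose bytes are identical (SHA-256); return groups of 2+."""
    folder = Path(folder)
    groups = defaultdict(list)
    for path in sorted(folder.rglob("*")):
        if path.is_file() and path.suffix.lower() in IMAGE_EXTS:
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            groups[digest].append(path.relative_to(folder).as_posix())
    return [paths for paths in groups.values() if len(paths) > 1]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "a.png").write_bytes(b"same bytes")
        (Path(tmp) / "b.png").write_bytes(b"same bytes")
        print(find_exact_duplicates(tmp))  # [['a.png', 'b.png']]
```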

Training Tips: Optimal batch sizes range from 2 to 4 to balance out the cost on Civitai. If you're offsite, well, I haven't done offsite training in a long time, don't ask me.

Latents: "What's a Latent?" I'm kidding. Usually we cache these offsite: each image is encoded by the VAE once up front instead of on every training step, which saves time and memory.
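For scale: SDXL's VAE turns an image into a 4-channel latent at 1/8 of the width and height, and that latent is what the UNet actually trains on. A small arithmetic sketch (the helper names are illustrative) of how much smaller that is than the pixels:

```python
def latent_shape(width, height, latent_channels=4, downscale=8):
    """(C, H, W) shape of an SDXL VAE latent for a width x height image."""
    return (latent_channels, height // downscale, width // downscale)


def numel(shape):
    """Number of values in a tensor of the given shape."""
    n = 1
    for dim in shape:
        n *= dim
    return n


if __name__ == "__main__":
    pixels = (3, 1024, 1024)          # RGB image tensor
    latent = latent_shape(1024, 1024)  # (4, 128, 128)
    print(latent, numel(pixels) // numel(latent))  # 48x fewer values
```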

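Gradient Checkpointing: Useful for conserving VRAM during training sessions, particularly on lower-spec machines: it recomputes some activations during the backward pass instead of storing them all, trading some speed for memory. This is NOT an option on Civitai's onsite trainer or other sites, but it is available in Bmaltais and other offsite tools.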

Conclusions

SDXL base models (Illustrious, NoobAI XL, PonyXL and SDXL base, as well as Animagine and others) are miles ahead of SD 1.5. Gone (sadly) are the days of "FAFO" and "CRUNCHY" SD 1.5 Loras and checkpoints. Now we have SDXL-based crunchy Fsk-around-and-find-out models!

Edit for April 2025:

Be careful on SOME things: I'm learning that you CAN still overbake Illustrious, and it can cause a little of the SD 1.5 funky crunch. Pony still seems SEMI OK for some stuff, but for both I'm finding that to beat the style in, you have to go above 3600 steps on the Civitai trainer. This is NOT me saying I'm correct; I haven't moved back to Colab yet, and I'm working on a non-Colab Jupyter version so I can use rented 4090s. IF you find my settings DON'T work, I'm not responsible, I'm not the be-all and end-all. Please review my peers' work: Novowels, Richyrich616, Wrench and Guy90, as well as Justnp and FallenIncursio.

Holo's Guide is here: https://arcenciel.io/articles/1

JustTNP's guide is here: https://arcenciel.io/articles/3

Fallen's Guides: https://arcenciel.io/articles/2

Novowels: https://arcenciel.io/articles/9

Our Great Buddy (Don't ban me for linking his stuff seriously pls don't): https://arcenciel.io/articles/8

Disclaimer

This whole guide represents my approach, not definitive rules. Experimentation and adaptation are key to finding what works best for your projects. I removed outdated JSON references and highlighted ongoing updates for clarity and relevance. All of this is mostly based on two years of using Civitai's trainer plus some offsite training with Colab; I'll post more details in a new training log for the trainer I'm developing.

EXAMPLE SETTINGS FOR CIVITAI ONSITE

Settings are copied over using: https://xypher7.github.io/lora-metadata-viewer/
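Xypher's viewer reads these settings out of the Lora file in your browser. If you'd rather do it offline, here's a minimal sketch based on the published .safetensors layout: an 8-byte little-endian header length, then a JSON header whose "__metadata__" entry holds the ss_* settings as strings. `read_safetensors_metadata` and `write_header_only` are hypothetical helper names; the official safetensors package also exposes the raw metadata through `safe_open(...).metadata()`.

```python
import json
import os
import struct
import tempfile


def read_safetensors_metadata(path):
    """Return the __metadata__ dict from a .safetensors header, values decoded."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len))
    decoded = {}
    for key, value in header.get("__metadata__", {}).items():
        try:
            decoded[key] = json.loads(value)  # "32" -> 32, "{...}" -> dict
        except (json.JSONDecodeError, TypeError):
            decoded[key] = value  # plain strings like "cosine" stay as-is
    return decoded


def write_header_only(path, metadata):
    """Demo helper: a .safetensors-shaped file with metadata and no tensors."""
    header = json.dumps({"__metadata__": metadata}).encode("utf-8")
    with open(path, "wb") as f:
        f.write(struct.pack("<Q", len(header)))
        f.write(header)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        demo = os.path.join(tmp, "demo.safetensors")
        write_header_only(demo, {"ss_network_dim": "32", "ss_lr_scheduler": "cosine"})
        print(read_safetensors_metadata(demo))
```

Point `read_safetensors_metadata` at a real Lora file to get the same kind of dump shown in the examples below.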

Pony XL for a World Morph: https://civitai.com/models/571004/gold-filigree-xl-and-pony

{
  "ss_output_name": "Gold_Filigree_Style",
  "ss_sd_model_name": "290640.safetensors",
  "ss_network_module": "networks.lora",
  "ss_optimizer": "transformers.optimization.Adafactor(scale_parameter=False,relative_step=False,warmup_init=False)",
  "ss_lr_scheduler": "cosine",
  "ss_clip_skip": 2,
  "ss_network_dim": 32,
  "ss_network_alpha": 32,
  "ss_epoch": 12,
  "ss_num_epochs": 12,
  "ss_steps": 3360,
  "ss_max_train_steps": 3360,
  "ss_learning_rate": 0.0005,
  "ss_text_encoder_lr": 0.00005,
  "ss_unet_lr": 0.0005,
  "ss_noise_offset": 0.03,
  "ss_adaptive_noise_scale": "None",
  "ss_min_snr_gamma": 5
}

SDXL World Morph: https://civitai.com/models/571004/gold-filigree-xl-and-pony

{
  "ss_output_name": "Gold_Filigree_Style",
  "ss_sd_model_name": "128078.safetensors",
  "ss_network_module": "networks.lora",
  "ss_optimizer": "transformers.optimization.Adafactor(scale_parameter=False,relative_step=False,warmup_init=False)",
  "ss_lr_scheduler": "cosine_with_restarts",
  "ss_clip_skip": 2,
  "ss_network_dim": 32,
  "ss_network_alpha": 16,
  "ss_epoch": 13,
  "ss_num_epochs": 14,
  "ss_steps": 2509,
  "ss_max_train_steps": 2702,
  "ss_learning_rate": 0.0005,
  "ss_text_encoder_lr": 0.00005,
  "ss_unet_lr": 0.0005,
  "ss_noise_offset": 0.1,
  "ss_adaptive_noise_scale": "None",
  "ss_min_snr_gamma": 5
}

Pony XL Anime: https://civitai.com/models/545495/sm-90s-aesthetic

{

"ss_output_name": "SM90sPDXL_r1",

"ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors",

"ss_network_module": "networks.lora",

"ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)",

"ss_lr_scheduler": "cosine",

"ss_clip_skip": 1,

"ss_network_dim": 32,

"ss_network_alpha": 32,

"ss_epoch": 8,

"ss_num_epochs": 8,

"ss_steps": 2560,

"ss_max_train_steps": 2560,

"ss_learning_rate": 1,

"ss_text_encoder_lr": 1,

"ss_unet_lr": 1,

"ss_noise_offset": 0.03,

"ss_adaptive_noise_scale": "None",

"ss_min_snr_gamma": 5,

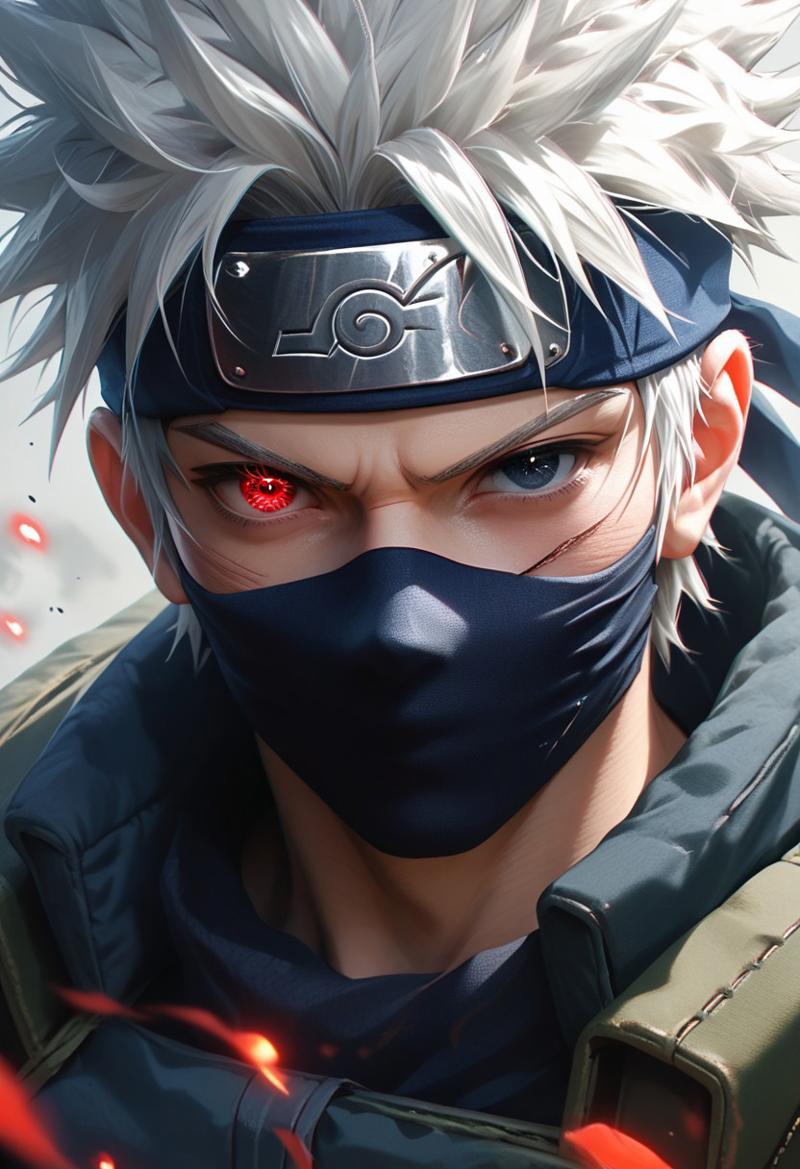

}Rogue (X-men) on Illustrious: https://civitai.com/models/1368221/rogue-x-men-illustrious

{

"ss_output_name": "Rogue_X-men_Illustrious",

"ss_sd_model_name": "128078.safetensors",

"ss_network_module": "networks.lora",

"custom.batch_size": 2,

"ss_gradient_accumulation_steps": 1,

"custom.resolution": "1024x1024",

"ss_optimizer": "bitsandbytes.optim.adamw.AdamW8bit(weight_decay=0.1)",

"ss_lr_scheduler": "cosine_with_restarts",

"ss_clip_skip": 1,

"ss_network_dim": 32,

"ss_network_alpha": 16,

"ss_network_args": "undefined",

"ss_epoch": 5,

"ss_num_epochs": 5,

"ss_steps": 4130,

"ss_max_train_steps": 4130,

"ss_learning_rate": 0.0005,

"ss_text_encoder_lr": 0.00005,

"ss_unet_lr": 0.0005,

"ss_noise_offset": 0.1,

"ss_adaptive_noise_scale": "None",

"ss_min_snr_gamma": 5,

"sshs_model_hash": "a6f029d23c23e34637f4f6384995f6ee490c0e3788a3d5c280f658c77df74976",

"custom.training_time": "2h 14m 42s",

"custom.training_start_time": "2025-03-17T22:35:23.443Z",

"custom.training_end_time": "2025-03-18T00:50:05.869Z",

"custom.lora_hash": "7876a9dbfc",

"custom.lora_hash_type": "AutoV2",

"custom.civitai_url": "https://civitai.com/models/1368221?modelVersionId=1545757",

"ss_dataset_dirs": {

"img": {

"n_repeats": 4,

"img_count": 413

}

},

"civitai.trainedWords": [

"R0GU3",

"two-tone hair, auburn hair, white hair, green eyes",

"long hair, curly hair, fluffy hair",

" leather jacket, green and yellow bodysuit, yellow gloves, leather, skin tight",

"yellow crop top, torn clothes, green bikini, shorts, leather shorts, yellow gloves"

]

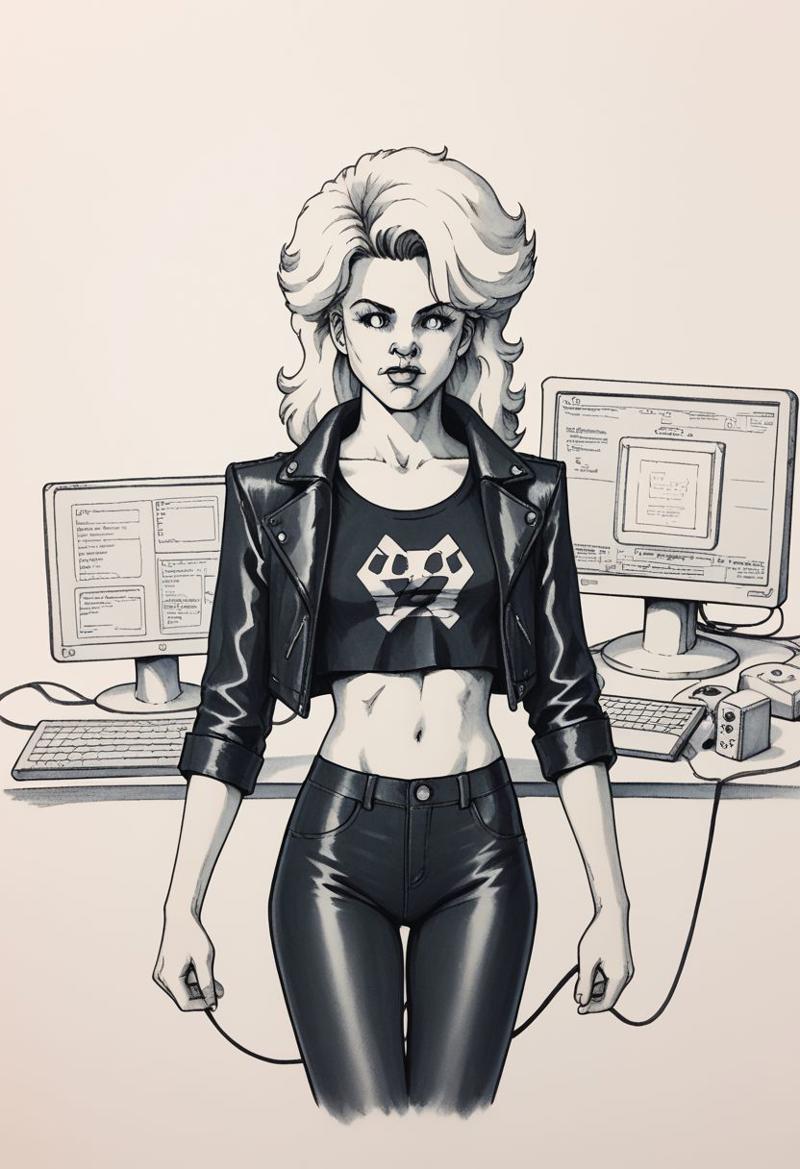

}XL , Illustrious & Pony Comparison outputs

Some of these are Loras and some are Lora bakes into a model, but they're left in to compare how far things have come. More training settings from the metadata viewer are shown after each sample. If you're curious what some of this means, we'll try to break it back down later on.

DID VAPORWAVE PDXL

Blonde Hair Concept PDXL

X-men 97 Style:

Lora Bake Example:

Embedding, not a Lora, but it's using Faeia's model, which, like mine, uses Lora baking techniques.

DPO version of a model upcoming, using Lora bake techniques

Likely Hellaine Mix Lora Bake, which used Hellaine PDXL as a base style influencer.

Khitli Miqote 2024 PDXL

Older Duotone XL

Line Art PDXL

Flat Vector Art

Phoenix (see Metadata below) PDXL

Niji CGI

Strange Animals SDXL

Fashionable Niji PDXL

This concludes the IMAGE reference section. In the final section of this version of the article, I'll add some METADATA examples you can use to influence your future trainings; I'll try to include Illustrious this time.

METADATA of Earth & Dusk Loras

Some of these are Xypher's tool output for our Loras, and some are the training data we could find on the actual model card. It turns out that most of the time, the only time I've been "ABLE" to get onsite metadata is right when I've finished training something. Sadly, I haven't been keeping tabs on these, so I won't be downloading too many of them for reference. Xypher's tool is linked in the resources above.
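These dumps are most useful side by side. A minimal sketch for diffing two trains' settings, skipping keys that change every run (hashes and timestamps); `diff_settings` and the skip list are illustrative, and the demo values are copied from the two Gold Filigree examples near the top of the article.

```python
# Substrings of keys that differ on every run and aren't really settings.
SKIP_WORDS = ("hash", "time", "started_at", "finished_at")


def diff_settings(a, b):
    """Return {key: (value_in_a, value_in_b)} for settings that differ."""
    keys = sorted(set(a) | set(b))
    return {
        key: (a.get(key), b.get(key))
        for key in keys
        if a.get(key) != b.get(key) and not any(w in key for w in SKIP_WORDS)
    }


if __name__ == "__main__":
    pony = {"ss_lr_scheduler": "cosine", "ss_network_alpha": 32,
            "ss_noise_offset": 0.03, "ss_unet_lr": 0.0005}
    sdxl = {"ss_lr_scheduler": "cosine_with_restarts", "ss_network_alpha": 16,
            "ss_noise_offset": 0.1, "ss_unet_lr": 0.0005}
    for key, (left, right) in diff_settings(pony, sdxl).items():
        print(f"{key}: {left} -> {right}")
```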

Earthnicity's Kawaii Pride Lora:

{ "ss_output_name": "Earthnicity_Kawaii_LGBTQIA__Plural_Pride_Lora_Pony_XL", "ss_sd_model_name": "290640.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "transformers.optimization.Adafactor(scale_parameter=False,relative_step=False,warmup_init=False)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 3160, "ss_max_train_steps": 3160, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0, "ss_unet_lr": 0.0005, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-06-21T00:32:11.624Z", "ss_training_finished_at": "2024-06-21T03:02:19.466Z", "training_time": "2h 30m 7s", "sshs_model_hash": "d55f4e26a7d5ec07beeab28196a863f389a94aa47df712d60b4e64e8b340366d" }

URL: https://civitai.com/models/528225

Preview:

Offsite & Colab (Virtual Diffusion Styler):

Different Text Encoder & Example of Google Colab (this took way longer than it would on a Normal 4090 imho)

{ "ss_output_name": "Virtual_3d_Diffusion_Update", "ss_sd_model_name": "ponyDiffusionV6XL.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.01,betas=[0.9, 0.999],d_coef=2,use_bias_correction=True,safeguard_warmup=True)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": "None", "ss_network_dim": 16, "ss_network_alpha": 16, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 8160, "ss_max_train_steps": 8160, "ss_learning_rate": 0.75, "ss_text_encoder_lr": 0.75, "ss_unet_lr": 0.75, "ss_noise_offset": 0.0357, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 8, "ss_training_started_at": "2024-06-08T04:38:10.314Z", "ss_training_finished_at": "2024-06-08T09:21:05.103Z", "training_time": "4h 42m 54s", "sshs_model_hash": "3bd13bb2ca0daabfae31a7fd92579654ab4696c49212c166feb8c2c9c1345d16" }

URL: https://civitai.com/models/532668

Preview:

(Onsite) Niji CGI:

I'm not sure why the metadata keeps saying the text encoder LR is ONE (1) when I'm positive I didn't train the text encoder >_>. (Other than, evidently, I did something dumb?)

{ "ss_output_name": "Niji_CGI_Pony_XL_LoRa", "ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)", "ss_lr_scheduler": "cosine", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 3570, "ss_max_train_steps": 3570, "ss_learning_rate": 1, "ss_text_encoder_lr": 1, "ss_unet_lr": 1, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-06-18T03:00:58.496Z", "ss_training_finished_at": "2024-06-18T07:27:06.977Z", "training_time": "4h 26m 8s", "sshs_model_hash": "590108cc59937bdc126cf4662a7989ab84a80ef835e4734b6684698d65f7fb69" }

URL: https://civitai.com/models/522405

Preview:

Andy Kubert Comic Book Style:

Please note, I am a "SCIENCE THIS BINCH" type of trainer, and I usually get (loving) side-eyes from my peers on Discord. Anzch will probably look over this later and ask me why I'm not consistent. Please also note that fudging with noise offset and MIN SNR gamma CAN sometimes burn your model without you realizing it. I can't promise whether this works OR NOT. It's meant to help on SD 1.5, and I promise it helps on SDXL, but I've noticed that on PonyXL it can be fickle and cause contrast/noise issues. If you know more about the math/training than I do, please go in the comments and let me know how this works and how to correct it.

{ "ss_output_name": "AndyKubertPDXL", "ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "transformers.optimization.Adafactor(scale_parameter=False,relative_step=False,warmup_init=False)", "ss_lr_scheduler": "cosine", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 6, "ss_num_epochs": 6, "ss_steps": 3024, "ss_max_train_steps": 3024, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0, "ss_unet_lr": 0.0005, "ss_noise_offset": 0.1, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-04-07T05:23:12.741Z", "ss_training_finished_at": "2024-04-07T06:37:02.208Z", "training_time": "1h 13m 49s", "sshs_model_hash": "33a1cbfa2da7c6fe2003721cac744a9a20402ffa79d016418cacbd65a0778ac0" }

URL: https://civitai.com/models/388048

Preview:
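For anyone who wants to poke at the math behind the noise offset and MIN SNR gamma warning above, here's a minimal pure-Python sketch of what the two settings do as I understand kohya-style trainers implement them, assuming an epsilon-prediction model (v-prediction models use a slightly different weight). The beta values are SD/SDXL's standard "scaled_linear" schedule; the function names are hypothetical.

```python
import math
import random


def sd_alphas_cumprod(num_steps=1000, beta_start=0.00085, beta_end=0.012):
    """Cumulative alpha products for the "scaled_linear" schedule SD/SDXL use."""
    sqrt_start, sqrt_end = math.sqrt(beta_start), math.sqrt(beta_end)
    alphas_cumprod, prod = [], 1.0
    for i in range(num_steps):
        # Linear in sqrt(beta), then squared.
        beta = (sqrt_start + (sqrt_end - sqrt_start) * i / (num_steps - 1)) ** 2
        prod *= 1.0 - beta
        alphas_cumprod.append(prod)
    return alphas_cumprod


def min_snr_weight(t, gamma, alphas_cumprod):
    """Min-SNR loss weight for timestep t (epsilon-prediction form)."""
    snr = alphas_cumprod[t] / (1.0 - alphas_cumprod[t])
    # Low-noise steps (huge SNR) get scaled down; noisy steps keep weight 1.
    return min(snr, gamma) / snr


def offset_noise(noise_channels, noise_offset, rng):
    """Add one random constant per channel to otherwise ordinary noise."""
    shifted = []
    for channel in noise_channels:
        shift = noise_offset * rng.gauss(0.0, 1.0)
        shifted.append([value + shift for value in channel])
    return shifted


if __name__ == "__main__":
    acp = sd_alphas_cumprod()
    for t in (0, 250, 500, 999):
        print(t, round(min_snr_weight(t, 5, acp), 4))
    rng = random.Random(0)
    noise = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(4)]
    print(offset_noise(noise, 0.1, rng))
```

The per-channel shift is what lets a model learn overall brightness and contrast, which is also why a large offset can push contrast around.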

Alternative Sailor Moon on PonyXL:

Again, like above: I've been known to fudge the settings and it CAN come out fine, but be aware that this can sometimes cause artifacts and won't always work:

{ "ss_output_name": "AltMoonPonyXL", "ss_sd_model_name": "290640.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "transformers.optimization.Adafactor(scale_parameter=False,relative_step=False,warmup_init=False)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 2440, "ss_max_train_steps": 2440, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0, "ss_unet_lr": 0.0005, "ss_noise_offset": 0.1, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-03-06T02:38:36.724Z", "ss_training_finished_at": "2024-03-06T04:17:52.272Z", "training_time": "1h 39m 15s", "sshs_model_hash": "38aab066bd26344663eaba4b6cd7a42a8fbb61f37e327e231e0cbfb8ac69c46d" }

URL: https://civitai.com/models/434907

Preview:

Upcoming Landscape lora for PonyXL:

Again don't take my metadata examples as "THE BIBLE" - as my settings will vary from month to month. Always remember that you're dealing with someone with mental health things that flavor differently day to day let alone month to month.

{ "ss_output_name": "Cyberpunk_Landscape_Pony_XL", "ss_sd_model_name": "290640.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "transformers.optimization.Adafactor(scale_parameter=False,relative_step=False,warmup_init=False)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 3820, "ss_max_train_steps": 3820, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0, "ss_unet_lr": 0.0005, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-06-21T01:55:00.735Z", "ss_training_finished_at": "2024-06-21T04:23:59.809Z", "training_time": "2h 28m 59s", "sshs_model_hash": "fc07afe3ece2a5cc19555f035e6381a562d6f1dee92f14cdc06d481924f423fb" }

There are no examples for this one sadly, as I have yet to publish and test it.

25D Style for Pony XL:

This was likely batch-trained onsite a couple of months ago, so be aware the metadata will probably tell you I used noise offset like SDXL. Evidently not, and on top of that I trained the text encoder by accident lol. A plain warning on this: the TEXT ENCODER is finicky on XL and PonyXL; sometimes it works, sometimes it doesn't. Aka: styles aren't BAD with the TENC trained, just be aware that training the TENC in a Lora may produce different results vs training the UNet only.

{ "ss_output_name": "25DPDXL", "ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)", "ss_lr_scheduler": "cosine", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 2480, "ss_max_train_steps": 2480, "ss_learning_rate": 1, "ss_text_encoder_lr": 1, "ss_unet_lr": 1, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-04-06T23:50:32.729Z", "ss_training_finished_at": "2024-04-07T01:05:24.217Z", "training_time": "1h 14m 51s", "sshs_model_hash": "c2278d08a7fb6faace958ab7b7eb2b5d93e53918575494b044da9400f5256357" }

URL: https://civitai.com/models/438760

Preview:

Santa Overlords (Christmas 2023):

Let's start sharing some older XL examples too, to give a wider range of data!

{ "ss_output_name": "SantaOverlordsXL", "ss_sd_model_name": "128078.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "bitsandbytes.optim.adamw.AdamW8bit(weight_decay=0.1)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 3, "ss_num_epochs": 3, "ss_steps": 2544, "ss_max_train_steps": 2544, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0.00005, "ss_unet_lr": 0.0005, "ss_noise_offset": "None", "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2023-12-12T07:33:39.601Z", "ss_training_finished_at": "2023-12-12T08:29:52.281Z", "training_time": "0h 56m 12s", "sshs_model_hash": "e80d4b08fc7445c4120e1a67447d321cc4cd20b3f68d804d56f861d50dab7e92" }

URL: https://civitai.com/models/230237

Preview:

Mica X'voor on SDXL:

Please note that the following is what we personally consider a "BAD EXAMPLE" for training concepts or people, as at the time this bombed horribly on SDXL. It was on TensorArt when we were there, but we never brought it back to Civitai, as it wasn't good quality. Everything listed here is also kept in our backup folders. I will link both Lora backups after the metadata section, and if you scroll back up, there's a link to Xypher's Lora metadata tool, which lets you download and double-check tags and everything else!

{ "ss_output_name": "MicaXvoorSDXL", "ss_sd_model_name": "128078.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "bitsandbytes.optim.adamw.AdamW8bit(weight_decay=0.1)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 540, "ss_max_train_steps": 540, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0.00005, "ss_unet_lr": 0.0005, "ss_noise_offset": "None", "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2023-09-21T03:41:43.484Z", "ss_training_finished_at": "2023-09-21T04:10:38.901Z", "training_time": "0h 28m 55s", "sshs_model_hash": "6042158baa3ce03a2bf65648a37170e8c94c8010e5011144fcdca6c9b8c2bbe9" }

Sadly I don't know where this brat's SDXL images went, and I know we had severe genetic issues in testing lol.

G'raha Tia (First version) on SDXL:

This was from when people were telling you NEVER to train above 500-1000 steps. Some concepts CAN go under these numbers, and there are times I've burned Loras with LESS data. This was not one of those: as you can tell from the TENC and UNet rates, this didn't come out nearly as well as it should have.

{ "ss_output_name": "GrahaSDXL", "ss_sd_model_name": "128078.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "bitsandbytes.optim.adamw.AdamW8bit(weight_decay=0.1)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 8, "ss_num_epochs": 8, "ss_steps": 856, "ss_max_train_steps": 856, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0.00005, "ss_unet_lr": 0.0005, "ss_noise_offset": "None", "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2023-09-29T04:16:00.300Z", "ss_training_finished_at": "2023-09-29T04:52:16.327Z", "training_time": "0h 36m 16s", "sshs_model_hash": "b2b3669c7c13b47c003f48d4f4e7282ebbca63417693c44bf18d7c8d9e28bbc5" }

URL: https://civitai.com/models/153609

Preview:

80s Fashion White Skin Art Deco Vector on SDXL:

{ "ss_output_name": "80s_Flat_Vector_Fashion_Art", "ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)", "ss_lr_scheduler": "cosine", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 2920, "ss_max_train_steps": 2920, "ss_learning_rate": 1, "ss_text_encoder_lr": 1, "ss_unet_lr": 1, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-05-02T06:41:26.317Z", "ss_training_finished_at": "2024-05-02T07:42:20.216Z", "training_time": "1h 0m 53s", "sshs_model_hash": "7451255f40a872e117d629ac9947c54ca2ecc3dad21d0f3af34751d658885675" }

Also noting: SDXL AND PONYXL are trained on Clip Skip 2. However, I'm not going to bother arguing this note, because Clip Skip will start WW6, 7, 8 and 9, with WW10 being the SD3 "SKILL ISSUE" arguments on Reddit. (We know where that one went lol)

URL:https://civitai.com/models/431427

Preview:
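On the Clip Skip note above: in most SD tooling, "Clip Skip N" means conditioning on the hidden state N layers from the end of the CLIP text encoder, so 1 is the last layer and 2 is the second-to-last. A toy sketch of just that indexing convention, with a list of strings standing in for real per-layer outputs (`pick_clip_hidden_state` is a hypothetical name, not a real trainer function):

```python
def pick_clip_hidden_state(hidden_states, clip_skip=1):
    """Return the text-encoder layer output used for conditioning.

    hidden_states: per-layer outputs, first layer first (toy stand-ins here).
    clip_skip=1 -> last layer, clip_skip=2 -> second-to-last, and so on.
    """
    if not 1 <= clip_skip <= len(hidden_states):
        raise ValueError(f"clip_skip must be between 1 and {len(hidden_states)}")
    return hidden_states[-clip_skip]


if __name__ == "__main__":
    layers = [f"layer_{i}" for i in range(1, 13)]  # e.g. a 12-layer encoder
    print(pick_clip_hidden_state(layers, 1), pick_clip_hidden_state(layers, 2))
```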

Older Lora of Misuo Fujiwara on SDXL:

{ "ss_output_name": "DUSK_XL_MISUOFUJIWARA_dadapt_cos_1e-7", "ss_sd_model_name": "sd_xl_base_1.0.safetensors", "ss_network_module": "networks.lora", "ss_total_batch_size": 2, "ss_resolution": "(1024, 1024)", "ss_optimizer": "dadaptation.experimental.dadapt_adam_preprint.DAdaptAdamPreprint", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": "None", "ss_network_dim": 64, "ss_network_alpha": 32, "ss_epoch": 5, "ss_num_epochs": 5, "ss_steps": 1000, "ss_max_train_steps": 1000, "ss_learning_rate": 1e-7, "ss_text_encoder_lr": "None", "ss_unet_lr": 1000, "ss_shuffle_caption": "True", "ss_keep_tokens": 0, "ss_flip_aug": "True", "ss_noise_offset": 0.0357, "ss_adaptive_noise_scale": 0.00357, "ss_min_snr_gamma": 5, "ss_training_started_at": "2023-08-13T06:34:35.162Z", "ss_training_finished_at": "2023-08-13T07:07:13.230Z", "training_time": "0h 32m 38s", "sshs_model_hash": "6b3f7ccf27a1cdd7642e78f4222452b198ca6ea85662d5d80c9f8e3d7d2f72ad" }

(The tool couldn't find the images, so I'm just pulling this straight from the model lol)

URL: https://civitai.com/models/129262

Model Preview:

(Yes, this was again Second Life data, and Anzch and I joke about how bad SL "looks" despite how much I spend on actual photorealistic skins lol. It's all in good fun!)

I do use pics larger than 2048px in these, but onsite will NOW resize them during bucketing (before, it didn't). This is a good thing, because I'm positive Kitch's Lora is like farking 2GB just for 600 pics lol.

Older Lora Kawaii Devices on SDXL:

{ "ss_output_name": "KAwaiiDEvicesSDXL", "ss_sd_model_name": "128078.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "bitsandbytes.optim.adamw.AdamW8bit(weight_decay=0.1)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 16, "ss_network_alpha": 8, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 2580, "ss_max_train_steps": 2580, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0.00005, "ss_unet_lr": 0.0005, "ss_noise_offset": "None", "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2023-10-14T06:50:44.056Z", "ss_training_finished_at": "2023-10-14T07:46:57.512Z", "training_time": "0h 56m 13s", "sshs_model_hash": "39079bfe92fa2b1c851dd54e36e59d82877ee126a48a75882f3aff7696a7aa56" }

Preview:

Mike Wieringo on SDXL (Rest in Peace):

{ "ss_output_name": "RingoStyleXL", "ss_sd_model_name": "128078.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "bitsandbytes.optim.adamw.AdamW8bit(weight_decay=0.1)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 6, "ss_num_epochs": 6, "ss_steps": 2940, "ss_max_train_steps": 2940, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0, "ss_unet_lr": 0.0005, "ss_noise_offset": "None", "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-01-30T04:00:22.690Z", "ss_training_finished_at": "2024-01-30T04:52:34.250Z", "training_time": "0h 52m 11s", "sshs_model_hash": "fc0599598aace436a4a47386ea8e80245f1d3fef7eb276e6e05177e9d56097a9" }

URL: https://civitai.com/models/284492

PDXL Classic Rogue (not the recent retrain):

{ "ss_output_name": "ClassicRoguePonyXL", "ss_sd_model_name": "290640.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "transformers.optimization.Adafactor(scale_parameter=False,relative_step=False,warmup_init=False)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 6, "ss_num_epochs": 6, "ss_steps": 2562, "ss_max_train_steps": 2562, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0, "ss_unet_lr": 0.0005, "ss_noise_offset": 0.1, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-03-06T21:54:42.240Z", "ss_training_finished_at": "2024-03-06T22:51:07.337Z", "training_time": "0h 56m 25s", "sshs_model_hash": "594c8f3c73beb65c4c6a42e9ee95a82faeb49b95d4c7bcbdee82896c9b9e07a9" }

URL:https://civitai.com/models/342585

DID VAPORWAVE VISIONS (SDXL):

{ "ss_output_name": "D.I.D._Vaporwave_Visions_SDXL", "ss_sd_model_name": "sdXL_v10VAEFix.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "transformers.optimization.Adafactor(scale_parameter=False,relative_step=False,warmup_init=False)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 2920, "ss_max_train_steps": 2920, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0, "ss_unet_lr": 0.0005, "ss_noise_offset": 0.1, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-05-24T11:48:34.289Z", "ss_training_finished_at": "2024-05-24T13:27:30.110Z", "training_time": "1h 38m 55s", "sshs_model_hash": "b52a6673093cb6e6f7e87ff1960ea77dfcf7c8ce8e001180d5b69b843a9544ee" }

URL:https://civitai.com/models/473185

Phoenix Montoya (PDXL):

We HAVE an SDXL version and it's probably on Civitai, but the quality is lacking due to a larger dataset and poor training settings... so instead you get the PDXL settings:

{ "ss_output_name": "Phoenix_Montoya_Rodriguez", "ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)", "ss_lr_scheduler": "cosine", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 7, "ss_num_epochs": 7, "ss_steps": 2716, "ss_max_train_steps": 2716, "ss_learning_rate": 1, "ss_text_encoder_lr": 1, "ss_unet_lr": 1, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-05-08T23:43:02.113Z", "ss_training_finished_at": "2024-05-09T00:53:59.293Z", "training_time": "1h 10m 57s", "sshs_model_hash": "b831c17749308f41dc2e9d75caed4d858bf6a5bcfaf8f65d3424e31fb7a03963" }

URL:https://civitai.com/models/444188
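
Most of the earlier runs used alpha 16 on dim 32. This one runs alpha 32 on dim 32. In kohya's networks.lora, the LoRA output is multiplied by alpha / dim, so alpha at half the dim halves the update, while alpha equal to dim leaves it at full strength. Prodigy is designed to estimate its own step size, which is why it's normally run with a learning rate of 1, as here. The sketch below is just the arithmetic, not a claim about which combination is better. It uses dim/alpha pairs from this section, and the function name is hypothetical.

```python
def lora_scale(network_dim: int, network_alpha: float) -> float:
    """Multiplier kohya-style networks.lora applies to the LoRA update:
    output = base(x) + up(down(x)) * multiplier * (alpha / dim)."""
    return network_alpha / network_dim


if __name__ == "__main__":
    # (ss_network_dim, ss_network_alpha) pairs from the metadata in this section.
    runs = {
        "KAwaiiDEvicesSDXL": (16, 8),
        "RingoStyleXL": (32, 16),
        "Phoenix_Montoya_Rodriguez": (32, 32),
    }
    for name, (dim, alpha) in runs.items():
        print(f"{name}: dim={dim} alpha={alpha} scale={lora_scale(dim, alpha)}")
```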

Deadpool Movie style (DPvsWOLV/DP3) MCU:

Example of poor training on a LARGER dataset on SDXL:

{ "ss_output_name": "MCU-DeadpoolMovieStyle", "ss_sd_model_name": "sdXL_v10VAEFix.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "transformers.optimization.Adafactor(scale_parameter=False,relative_step=False,warmup_init=False)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 800, "ss_max_train_steps": 800, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0.00005, "ss_unet_lr": 0.0005, "ss_noise_offset": 0.1, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-04-14T22:35:01.686Z", "ss_training_finished_at": "2024-04-14T23:05:44.752Z", "training_time": "0h 30m 43s", "sshs_model_hash": "b743391ba457aa0530a8d080bce10bb554b43d0dfef0904b4b5d7d8bee08f09d" }

URL:https://civitai.com/models/474750

Please note that this was 800 steps, and it has some hilarious artifacting. It's NOT BAD overall, but it's hilarious in that it will add RANDOM BLOBS. Note I didn't even turn off the text encoder. I just remember throwing in whatever from the screencap set I made for Capsekai on Tumblr (which has been ignored for the last two months because I'm an idiot).
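
For comparing runs, total steps divided by epochs gives steps per epoch. That works out to 800 / 10 = 80 here, 570 / 10 = 57 for the MJ Manga run below, and 2940 / 6 = 490 for the Ringo style above. Here's a rough Python sketch. It assumes kohya-style counting, where one step is one batch, and no gradient accumulation. The image, repeat and batch numbers in the estimate are hypothetical, since the metadata here doesn't record them. The function names are also just for illustration.

```python
import math


def steps_per_epoch(total_steps: int, epochs: int) -> float:
    """Average optimizer steps per epoch, from ss_steps and ss_num_epochs."""
    return total_steps / epochs


def estimate_total_steps(num_images: int, repeats: int,
                         batch_size: int, epochs: int) -> int:
    """Rough estimate of total steps (one step = one batch).

    Ignores bucketing: each bucket is batched separately, so real runs can
    land a few steps per epoch higher than this.
    """
    return math.ceil(num_images * repeats / batch_size) * epochs


if __name__ == "__main__":
    for name, steps, epochs in [("MCU-DeadpoolMovieStyle", 800, 10),
                                ("MJMangaSDXL", 570, 10),
                                ("RingoStyleXL", 2940, 6)]:
        print(name, steps_per_epoch(steps, epochs))
    # Hypothetical dataset: 40 images x 2 repeats, batch size 1, 10 epochs.
    print(estimate_total_steps(40, 2, 1, 10))  # 800
```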

Earthnicity's MJ Manga on SDXL:

Animated datasets such as this are a hard call. If anything, the lack of steps explains a lot of the issues we faced in testing it. It produces a lot of artifacts and has issues with hands and body proportions, yet it's one of my partner's most popular Loras.

{ "ss_output_name": "MJMangaSDXL", "ss_sd_model_name": "128078.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "bitsandbytes.optim.adamw.AdamW8bit(weight_decay=0.1)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 570, "ss_max_train_steps": 570, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0.00005, "ss_unet_lr": 0.0005, "ss_noise_offset": "None", "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2023-09-23T03:06:18.127Z", "ss_training_finished_at": "2023-09-23T03:39:37.156Z", "training_time": "0h 33m 19s", "sshs_model_hash": "9b8630f11b7ab6c490ef53308b35523430e46f47d2b2ed52d9b0a91592bc3965" }

URL:https://civitai.com/models/185798

Earthnicity's Pony XL version of Kitch X'voor:

This is Earthnicity's version because, while Kitch is in theory related to many of the X'voor line in our system, she's really in Earthnicity's system. (See our Bio sections: we're both plural systems; they have a UDD dx and we have a DID dx.)

{ "ss_output_name": "Kitch_Xvoor_PDXL", "ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)", "ss_lr_scheduler": "cosine", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 3510, "ss_max_train_steps": 3510, "ss_learning_rate": 1, "ss_text_encoder_lr": 1, "ss_unet_lr": 1, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-05-08T03:34:07.815Z", "ss_training_finished_at": "2024-05-08T05:06:19.651Z", "training_time": "1h 32m 11s", "sshs_model_hash": "3ecfa7d609e4a7c8b2861bc82972f18931af628ae76cd0f42ef92d4a68f93799" }

There are SOME issues with proportions, so there's talk that the datasets we've been creating may have quality issues. I need to speak at length with peers about what this may be. It wouldn't necessarily be the subject quality so much as laziness on my and my partner's part in not pre-cropping or subject cropping. (And I mean that sincerely: we don't tend to pre-crop, we just dump and go lol.)

Note: Evidently WE DID NOT UPLOAD THIS, and I'm not sharing samples until I figure out WHY. You're welcome to download it via the backups, but somehow this never got uploaded (despite the fact that I somehow remember helping Earthnicity upload it...).

Female Hrothgar (DAWNTRAIL FFXIV):

Yep. Cat Girls:

{ "ss_output_name": "FemHrothgarPonyXL", "ss_sd_model_name": "290640.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 2790, "ss_max_train_steps": 2790, "ss_learning_rate": 1, "ss_text_encoder_lr": 1, "ss_unet_lr": 1, "ss_noise_offset": 0.1, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-03-05T23:39:27.921Z", "ss_training_finished_at": "2024-03-06T00:44:52.815Z", "training_time": "1h 5m 24s", "sshs_model_hash": "1ee21226c7689ce48457f7e9b85d73a8a274f2e20b1dedf1cdbb60f9459cf1a9" }

URL:https://civitai.com/models/336350
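
This run used cosine_with_restarts with Prodigy, while the other Prodigy runs in this section used plain cosine. The Python sketch below shows the two learning-rate curves. It assumes the trainer uses the same formulas as the Hugging Face diffusers schedulers, and it ignores warmup. The cycle count isn't in these metadata dumps, so num_cycles is an assumption. At 1 cycle the two schedules trace the same curve, and the restarts only show up above 1. The function names are hypothetical.

```python
import math


def cosine(progress: float) -> float:
    """LR multiplier for plain cosine decay (no warmup); progress runs 0 -> 1."""
    return 0.5 * (1.0 + math.cos(math.pi * progress))


def cosine_with_restarts(progress: float, num_cycles: int = 1) -> float:
    """LR multiplier for cosine with hard restarts (no warmup).

    Each cycle decays from 1.0 toward 0.0, then jumps straight back to 1.0.
    """
    if progress >= 1.0:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * ((num_cycles * progress) % 1.0)))


if __name__ == "__main__":
    for p in (0.0, 0.25, 0.5, 0.75, 0.99):
        print(f"{p:.2f}  cosine={cosine(p):.3f}  "
              f"restarts(1)={cosine_with_restarts(p, 1):.3f}  "
              f"restarts(3)={cosine_with_restarts(p, 3):.3f}")
```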

Experimentation Lora:

{ "ss_output_name": "Lora_Trained_with_Whatever_was_From_my_Phone_Pony_XL", "ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)", "ss_lr_scheduler": "cosine", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 2750, "ss_max_train_steps": 2750, "ss_learning_rate": 1, "ss_text_encoder_lr": 1, "ss_unet_lr": 1, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-05-17T02:25:46.548Z", "ss_training_finished_at": "2024-05-17T04:04:34.622Z", "training_time": "1h 38m 48s", "sshs_model_hash": "f0d70969bd5198487029961eac3732b75b3d7167b383ca7f9c8b5ef324f4a303" }

Description: This was a dataset we'd devised somehow from our phone, and I don't recall whether doing it FROM the phone worked, because sometimes it doesn't. You'd have to check what I said in the model card.

URL:https://civitai.com/models/459570

Niji Vs MJ Realism:

{ "ss_output_name": "Niji-Mj_Almost_Realism", "ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)", "ss_lr_scheduler": "cosine", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 4, "ss_num_epochs": 4, "ss_steps": 3448, "ss_max_train_steps": 3448, "ss_learning_rate": 1, "ss_text_encoder_lr": 1, "ss_unet_lr": 1, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-05-11T06:51:09.229Z", "ss_training_finished_at": "2024-05-11T08:47:19.047Z", "training_time": "1h 56m 9s", "sshs_model_hash": "755641c2363e7d6c461c983afdef004ace5e149265b375d7e7f99947d5ab7d25" }

URL:https://civitai.com/models/448441

Cybercore SDXL:

{ "ss_output_name": "Cybercore", "ss_sd_model_name": "128078.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "bitsandbytes.optim.adamw.AdamW8bit(weight_decay=0.1)", "ss_lr_scheduler": "cosine_with_restarts", "ss_clip_skip": 2, "ss_network_dim": 32, "ss_network_alpha": 16, "ss_epoch": 10, "ss_num_epochs": 10, "ss_steps": 2360, "ss_max_train_steps": 2360, "ss_learning_rate": 0.0005, "ss_text_encoder_lr": 0, "ss_unet_lr": 0.0005, "ss_noise_offset": "None", "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-02-25T01:18:36.723Z", "ss_training_finished_at": "2024-02-25T02:02:46.567Z", "training_time": "0h 44m 9s", "sshs_model_hash": "d311708b003d0297d3c31372374e4c911b4d4954ff80e6e0505a4daabfa6d793" }

URL:https://civitai.com/models/320527

Osenayan Style PDXL:

{ "ss_output_name": "Original_Osenayan_Mix_Style_for_PDXL", "ss_sd_model_name": "ponyDiffusionV6XL_v6StartWithThisOne.safetensors", "ss_network_module": "networks.lora", "ss_optimizer": "prodigyopt.prodigy.Prodigy(decouple=True,weight_decay=0.5,betas=(0.9, 0.99),use_bias_correction=False)", "ss_lr_scheduler": "cosine", "ss_clip_skip": 1, "ss_network_dim": 32, "ss_network_alpha": 32, "ss_epoch": 15, "ss_num_epochs": 15, "ss_steps": 2925, "ss_max_train_steps": 2925, "ss_learning_rate": 1, "ss_text_encoder_lr": 1, "ss_unet_lr": 1, "ss_noise_offset": 0.03, "ss_adaptive_noise_scale": "None", "ss_min_snr_gamma": 5, "ss_training_started_at": "2024-05-13T02:02:28.455Z", "ss_training_finished_at": "2024-05-13T03:30:28.802Z", "training_time": "1h 28m 0s", "sshs_model_hash": "928cee6fe06454394ce1ed0f1df8d1a608527d7dc0335387ffef32256557deb7" }

URL:https://civitai.com/models/451791

Glossary:

PEBKAC - Problem Exists Between Keyboard And Chair (aka likely me, maybe even you). This is an old, OLD reference from the early computer days, usually the tech support "I can't turn my computer on!" "Did you plug it in?" "I didn't know I needed to!" type of meme.

The rest of the "TERMS" are the usual information about Loras and training, and this guide is so old I'm not going to re-professionalize it. I'm the ND grandpa your parents warned you about: yes, the one that listens to metal, Japanese rock and squishy K-pop, and yet the only drugs I'm on are the ones I'm prescribed lol. I'm not even a basement Linux dweller (yet... jk). I'm just a graphic design graduate with no life!

Contact Us:

We're the neurodivergent nightmare your Lora trainer warned you about! We do this to make space for others like ourselves, but it's been difficult to keep up for both ends of the spectrum here. If you're interested in what we do, take a look:

Arc En Ciel: https://arcenciel.io/users/77

Our Discord: https://discord.gg/HhBSvM9gBY

Backups: https://huggingface.co/EarthnDusk

Send a Pizza: https://ko-fi.com/duskfallcrew/

WE ARE PROUDLY SUPPORTED BY: https://yodayo.com/ / https://moescape.ai/

JOIN OUR DA GROUP: https://www.deviantart.com/diffusionai

JOIN OUR SUBREDDIT: https://www.reddit.com/r/earthndusk/