The goal of this article is to demonstrate a technique for getting a realistic version of an anime character that was generated with a classic "anime" checkpoint.

The resources used are:

An anime checkpoint (here, it is WAI14)

A realistic checkpoint of the same ancestry (so Illustrious if the anime one is Illustrious). I'll be using my own checkpoint for that: UnNamedIXL V3

A1111 with the ControlNet and Adetailer extensions installed

ControlNet Model: CN-anytest_V4-marged.safetensors

Upscaler model: 4xNMKD-Superscale

Generating the anime picture

You can do it any way you like; just keep in mind that it is better to use at least 40 steps to follow this tutorial.

Here is Nyaruko-chan from the anime Haiyore! Nyaruko-san

Here is the generation information:

1girl, Nyaruko, from below, jumping through the air, dynamic pose, three quarter view, looking at viewer, evil smile, holding crowbar, full moon, red moon, japan, city street, at night, depth of field, dramatic lighting, stunningly beautiful, IllusP0s

Negative prompt: IllusN3g, young, child, loli

Steps: 40, Sampler: Euler a, Schedule type: Automatic, CFG scale: 6, Seed: 1255154182, Size: 832x1216, Model hash: bdb59bac77, Model: waiNSFWIllustrious_v140, VAE hash: 235745af8d, VAE: sdxl_vae.safetensors, Denoising strength: 0.3, Clip skip: 2, RNG: NV, ADetailer model: face_yolov8s.pt, ADetailer confidence: 0.6, ADetailer dilate erode: 4, ADetailer mask blur: 4, ADetailer denoising strength: 0.15, ADetailer inpaint only masked: True, ADetailer inpaint padding: 32, ADetailer use separate steps: True, ADetailer steps: 40, ADetailer use separate VAE: True, ADetailer VAE: None, ADetailer version: 24.11.1, Hires upscale: 1.5, Hires steps: 20, Hires upscaler: 4xNMKDSuperscale, Discard penultimate sigma: True, Version: v1.10.1, Source Identifier: Stable Diffusion web UI

First things to notice:

I use the same number of steps in Adetailer as in the generation

In Adetailer, I set the VAE to None

I use a fixed VAE for all models (the base SDXL VAE)

I used Hires.Fix to get a more detailed first picture
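If you want to compare settings between rounds without squinting at the metadata, the parameter line can be split into key/value pairs. Below is a minimal Python sketch; it is a simplified parser written for this article, not the web UI's own code, and the names `parse_infotext`, `hires_size` and `SAMPLE` are made up for the example. `SAMPLE` is an abbreviated excerpt of the metadata above. The sketch also works out the final Hires.Fix resolution, assuming the upscaled size is simply the base size multiplied by the upscale factor.

```python
import re

# Abbreviated excerpt of the generation information shown above.
SAMPLE = (
    "Steps: 40, Sampler: Euler a, CFG scale: 6, Seed: 1255154182, "
    "Size: 832x1216, Hires upscale: 1.5, Hires steps: 20, "
    "Hires upscaler: 4xNMKDSuperscale"
)

# A key, a colon, then either a quoted value (which may contain commas,
# like the ControlNet entry) or a plain value running up to the next comma.
_PARAM = re.compile(r'\s*([\w ]+):\s*("(?:\\.|[^"\\])*"|[^,]*)(?:,|$)')


def parse_infotext(params_line: str) -> dict:
    """Split a 'Key: value, Key: value' parameter line into a dict of strings."""
    return {
        key.strip(): value.strip().strip('"')
        for key, value in _PARAM.findall(params_line)
    }


def hires_size(info: dict) -> tuple:
    """Assumed Hires.Fix output size: base size times the upscale factor."""
    width, height = (int(v) for v in info["Size"].split("x"))
    scale = float(info["Hires upscale"])
    return int(width * scale), int(height * scale)


info = parse_infotext(SAMPLE)
print(info["Sampler"], hires_size(info))
```

With the values from this article, the 832x1216 picture upscaled by 1.5 comes out at 1248x1824.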

Starting the transformation

Now, we redo the same picture, but with Refiner and ControlNet activated, to get a first "semi-realistic" picture (this one is going to be a bit uncanny).

The idea is to switch to a realistic model during generation while keeping it constrained to the original picture. I'll also disable Hires.Fix for now.

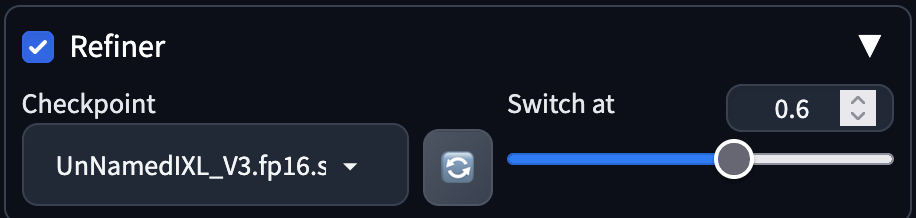

Here is the refiner config:

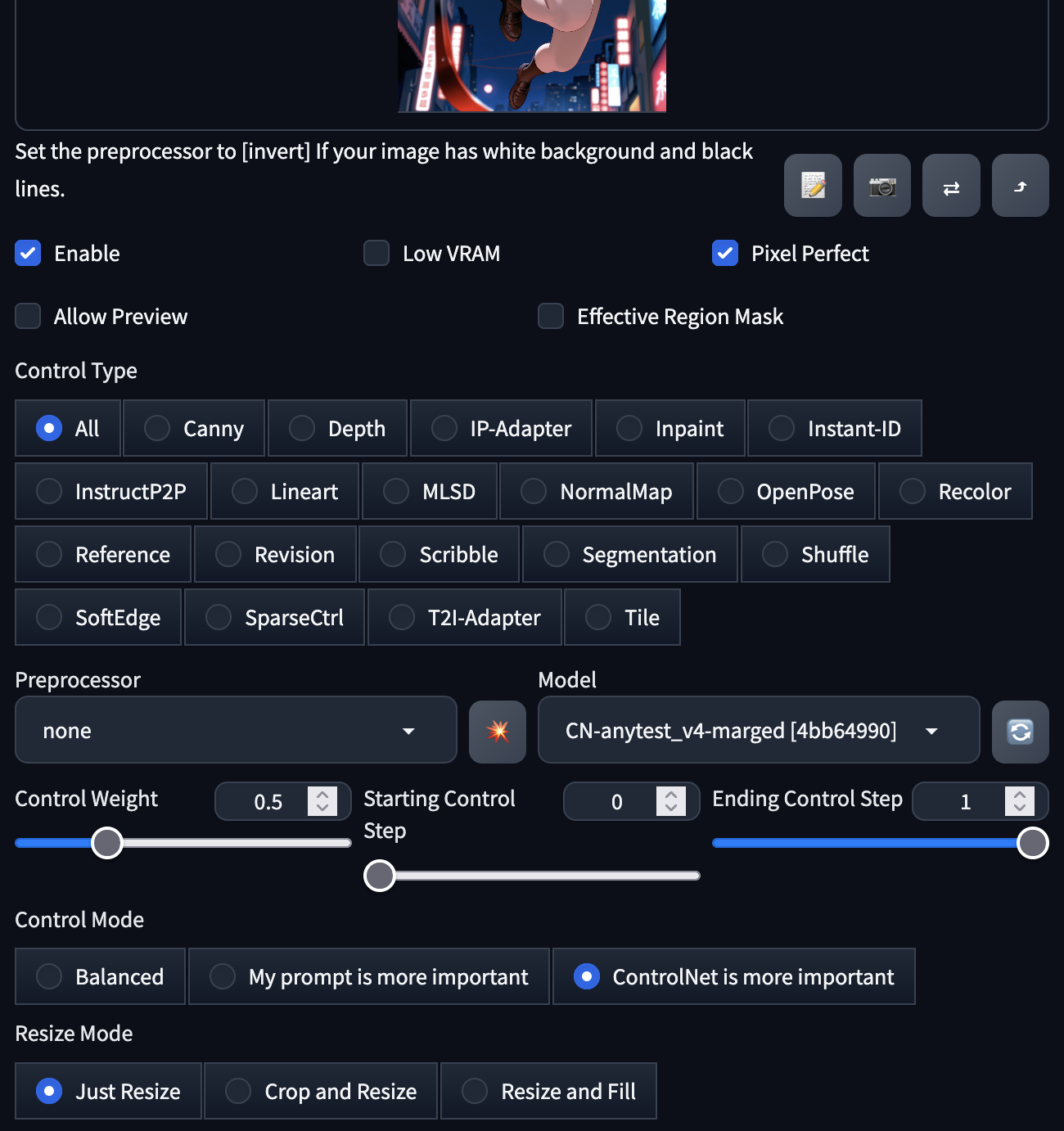
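To get a feel for what the refiner switch point means, here is a small arithmetic sketch in Python. It assumes the switch happens once the fraction of completed sampling steps reaches the "Refiner switch at" value; the exact step can differ slightly depending on the sampler and web UI settings, and `refiner_split` is a made-up helper name.

```python
def refiner_split(total_steps: int, switch_at: float) -> tuple:
    """Approximate how many steps each checkpoint handles.

    Assumption: the base (anime) checkpoint runs until the completed
    fraction of steps reaches `switch_at`, then the refiner takes over.
    """
    base_steps = round(total_steps * switch_at)
    return base_steps, total_steps - base_steps


# 0.6 is the value used for this first pass, 0.3 the one used in later rounds.
for switch_at in (0.6, 0.3):
    base, refiner = refiner_split(40, switch_at)
    print(f"switch at {switch_at}: {base} anime steps, {refiner} realistic steps")
```

So with 40 steps, a switch point of 0.6 leaves roughly 16 steps to the realistic checkpoint, while 0.3 gives it roughly 28. This is why lowering the value pushes the picture further toward realism.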

And the ControlNet config:

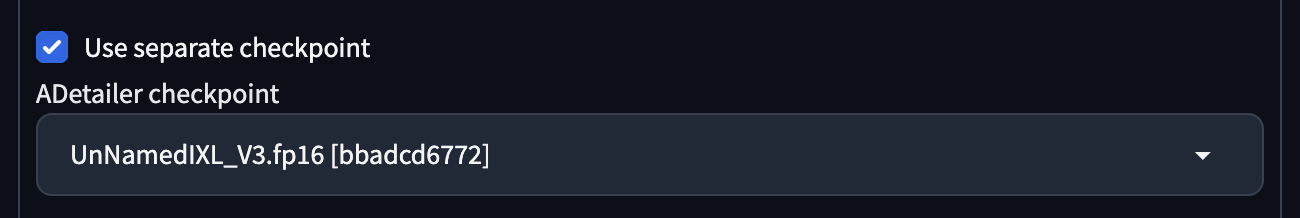

Additionally, note that I now use the target realistic checkpoint in Adetailer.
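For those who prefer scripting over clicking, the same setup can be sketched as a request body for the web UI's API. Treat this as a rough sketch under assumptions: the web UI must be started with the API enabled, and the field names below (especially the `controlnet` and `ADetailer` entries under `alwayson_scripts`) reflect my understanding of those extensions' APIs and should be checked against the versions you have installed. `build_txt2img_payload` is a made-up helper, and the code only builds the dictionary; it does not send anything.

```python
import json


def build_txt2img_payload(prompt, negative_prompt, control_image_b64,
                          refiner_switch_at=0.6, controlnet_weight=0.5):
    """Build a txt2img request body mirroring the settings of this step.

    Assumption: all key names follow the A1111, ControlNet and ADetailer
    APIs as I understand them; verify them before relying on this.
    """
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": 40,
        "sampler_name": "Euler a",
        "cfg_scale": 6,
        "seed": 1255154182,
        "width": 832,
        "height": 1216,
        "enable_hr": False,  # Hires.Fix is disabled for now
        "refiner_checkpoint": "UnNamedIXL_V3.fp16",
        "refiner_switch_at": refiner_switch_at,
        "alwayson_scripts": {
            "controlnet": {"args": [{
                "enabled": True,
                "module": "none",  # no preprocessor, the picture is used as-is
                "model": "CN-anytest_v4-marged [4bb64990]",
                "weight": controlnet_weight,
                "image": control_image_b64,  # base64 of the original anime picture
                "pixel_perfect": True,
                "control_mode": "ControlNet is more important",
            }]},
            # Assumed layout: enable flag, skip-img2img flag, then one dict per model.
            "ADetailer": {"args": [True, False, {
                "ad_model": "face_yolov8s.pt",
                "ad_confidence": 0.6,
                "ad_denoising_strength": 0.15,
                "ad_use_steps": True,
                "ad_steps": 40,
                "ad_use_checkpoint": True,
                "ad_checkpoint": "UnNamedIXL_V3.fp16 [bbadcd6772]",
            }]},
        },
    }


payload = build_txt2img_payload("1girl, Nyaruko", "IllusN3g", "<base64 image>")
print(json.dumps(payload, indent=2)[:200])
```

The two keyword arguments are the knobs that change later in the tutorial: the refiner switch point and the ControlNet weight.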

Let's go for it!

I didn't lie about the uncanny part! Here is the generation metadata:

1girl, Nyaruko, from below, jumping through the air, dynamic pose, three quarter view, looking at viewer, evil smile, holding crowbar, full moon, red moon, japan, city street, at night, depth of field, dramatic lighting, stunningly beautiful, IllusP0s

Negative prompt: IllusN3g, young, child, loli

Steps: 40, Sampler: Euler a, Schedule type: Automatic, CFG scale: 6, Seed: 1255154182, Size: 832x1216, Model hash: bdb59bac77, Model: waiNSFWIllustrious_v140, VAE hash: 235745af8d, VAE: sdxl_vae.safetensors, Clip skip: 2, RNG: NV, ADetailer model: face_yolov8s.pt, ADetailer confidence: 0.6, ADetailer dilate erode: 4, ADetailer mask blur: 4, ADetailer denoising strength: 0.15, ADetailer inpaint only masked: True, ADetailer inpaint padding: 32, ADetailer use separate steps: True, ADetailer steps: 40, ADetailer use separate checkpoint: True, ADetailer checkpoint: UnNamedIXL_V3.fp16 [bbadcd6772], ADetailer use separate VAE: True, ADetailer VAE: None, ADetailer version: 24.11.1, ControlNet 0: "Module: none, Model: CN-anytest_v4-marged [4bb64990], Weight: 0.5, Resize Mode: Just Resize, Processor Res: 512, Threshold A: 0.5, Threshold B: 0.5, Guidance Start: 0.0, Guidance End: 1.0, Pixel Perfect: True, Control Mode: ControlNet is more important", TI hashes: "IllusP0s: da04b1767dea, IllusP0s: da04b1767dea, IllusN3g: dbecff3f6065, IllusN3g: dbecff3f6065", Discard penultimate sigma: True, Refiner: UnNamedIXL_V3.fp16 [bbadcd6772], Refiner switch at: 0.6, Version: v1.10.1, Source Identifier: Stable Diffusion web UI

Refining the result

Now, the whole idea is to set the refiner switch point to 0.3, run a generation, replace the ControlNet picture with the result, and repeat. We start with our uncanny image as the ControlNet input; here is the first round:

Already a bit better. Here is the metadata; it will not change for the next few rounds, only the ControlNet picture will.

1girl, Nyaruko, from below, jumping through the air, dynamic pose, three quarter view, looking at viewer, evil smile, holding crowbar, full moon, red moon, japan, city street, at night, depth of field, dramatic lighting, stunningly beautiful, IllusP0s

Negative prompt: IllusN3g, young, child, loli

Steps: 40, Sampler: Euler a, Schedule type: Automatic, CFG scale: 6, Seed: 1255154182, Size: 832x1216, Model hash: bdb59bac77, Model: waiNSFWIllustrious_v140, VAE hash: 235745af8d, VAE: sdxl_vae.safetensors, Clip skip: 2, RNG: NV, ADetailer model: face_yolov8s.pt, ADetailer confidence: 0.6, ADetailer dilate erode: 4, ADetailer mask blur: 4, ADetailer denoising strength: 0.15, ADetailer inpaint only masked: True, ADetailer inpaint padding: 32, ADetailer use separate steps: True, ADetailer steps: 40, ADetailer use separate checkpoint: True, ADetailer checkpoint: UnNamedIXL_V3.fp16 [bbadcd6772], ADetailer use separate VAE: True, ADetailer VAE: None, ADetailer version: 24.11.1, ControlNet 0: "Module: none, Model: CN-anytest_v4-marged [4bb64990], Weight: 0.5, Resize Mode: Just Resize, Processor Res: 512, Threshold A: 0.5, Threshold B: 0.5, Guidance Start: 0.0, Guidance End: 1.0, Pixel Perfect: True, Control Mode: ControlNet is more important", TI hashes: "IllusP0s: da04b1767dea, IllusP0s: da04b1767dea, IllusN3g: dbecff3f6065, IllusN3g: dbecff3f6065", Discard penultimate sigma: True, Refiner: UnNamedIXL_V3.fp16 [bbadcd6772], Refiner switch at: 0.3, Version: v1.10.1, Source Identifier: Stable Diffusion web UI

Here is the result after round 4. At some point, there is not much change happening.
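The round-by-round procedure boils down to a simple feedback loop: each result becomes the ControlNet picture of the next round, and you stop when a new round no longer changes much. Here is a minimal Python sketch of that loop. The `generate` and `changed_enough` callables are placeholders you would have to supply yourself (for example, a call to the web UI and a visual or pixel-based comparison); nothing here is tied to a real API.

```python
from typing import Callable, List, TypeVar

Image = TypeVar("Image")


def refine_rounds(
    generate: Callable[[Image], Image],
    first_control: Image,
    max_rounds: int = 4,
    changed_enough: Callable[[Image, Image], bool] = lambda old, new: True,
) -> List[Image]:
    """Feed each result back in as the next ControlNet picture.

    `generate` is a placeholder: it takes the current ControlNet picture and
    returns the new generation, with all other settings kept fixed.
    `changed_enough` is a placeholder test for "is another round worth it?".
    """
    results = []
    control = first_control
    for _ in range(max_rounds):
        result = generate(control)
        results.append(result)
        if not changed_enough(control, result):
            break  # the picture has settled, further rounds add little
        control = result
    return results


# Toy run with numbers standing in for pictures: the value settles at 3.
print(refine_rounds(lambda x: min(x + 1, 3), 0, max_rounds=10,
                    changed_enough=lambda old, new: old != new))
```

In practice I simply judge by eye, which is why the default `changed_enough` always continues until `max_rounds` is reached.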

Nailing the landing

Now for the leap of faith. We'll switch to the full-on realistic model, reactivate Hires.Fix, disable the Refiner, and raise the ControlNet weight to 0.9.

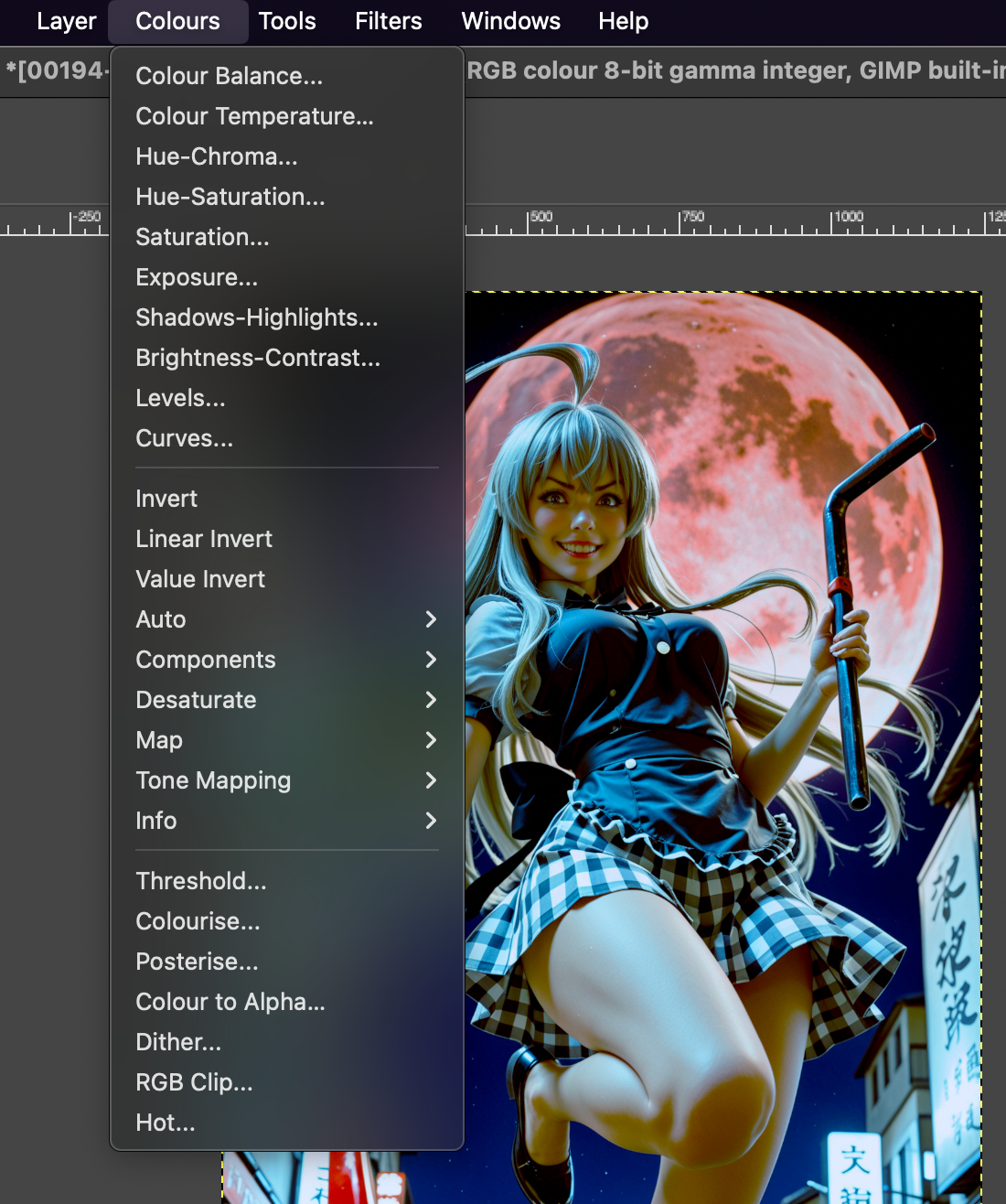

Not bad! We can do a second round using this picture as the ControlNet input, after adjusting the brightness/contrast/saturation to our liking in an external tool like GIMP (it will not have a huge impact anyway).

Here is the metadata:

1girl, Nyaruko, from below, jumping through the air, dynamic pose, three quarter view, looking at viewer, evil smile, holding crowbar, full moon, red moon, japan, city street, at night, depth of field, dramatic lighting, stunningly beautiful, IllusP0s

Negative prompt: IllusN3g, young, child, loli

Steps: 40, Sampler: Euler a, Schedule type: Automatic, CFG scale: 6, Seed: 1255154182, Size: 832x1216, Model hash: bbadcd6772, Model: UnNamedIXL_V3.fp16, VAE hash: 235745af8d, VAE: sdxl_vae.safetensors, Denoising strength: 0.3, Clip skip: 2, RNG: NV, ADetailer model: face_yolov8s.pt, ADetailer confidence: 0.6, ADetailer dilate erode: 4, ADetailer mask blur: 4, ADetailer denoising strength: 0.15, ADetailer inpaint only masked: True, ADetailer inpaint padding: 32, ADetailer use separate steps: True, ADetailer steps: 40, ADetailer use separate VAE: True, ADetailer VAE: None, ADetailer version: 24.11.1, ControlNet 0: "Module: none, Model: CN-anytest_v4-marged [4bb64990], Weight: 0.9, Resize Mode: Just Resize, Processor Res: 832, Threshold A: 0.5, Threshold B: 0.5, Guidance Start: 0.0, Guidance End: 1.0, Pixel Perfect: True, Control Mode: ControlNet is more important", Hires upscale: 1.5, Hires steps: 20, Hires upscaler: 4xNMKDSuperscale, TI hashes: "IllusP0s: da04b1767dea, IllusP0s: da04b1767dea, IllusN3g: dbecff3f6065, IllusN3g: dbecff3f6065", Discard penultimate sigma: True, Version: v1.10.1, Source Identifier: Stable Diffusion web UI

Going the extra mile

Now, with this picture, we can adjust a few things if we want, like increasing the number of steps, relaxing the ControlNet a bit, changing the sampler/scheduler, or using Adetailer to fix the expression a bit by changing its prompt. You can also add "detailed background" to the positive prompt if it was not there already, and "blurry background" to the negative.
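When trying several of these tweaks, it helps to see exactly which settings differ between two runs. Here is a minimal Python sketch of such a comparison. The `diff_settings` helper is made up for this example, and the two dictionaries hold only a handful of values, copied from the previous round's metadata and from the final metadata in this article.

```python
def diff_settings(before: dict, after: dict) -> dict:
    """Return {key: (old, new)} for every setting that differs between two runs."""
    merged_keys = {**before, **after}  # keeps first-seen key order
    return {
        key: (before.get(key), after.get(key))
        for key in merged_keys
        if before.get(key) != after.get(key)
    }


# A few values from the previous round and from the final round.
previous = {
    "Steps": "40",
    "Sampler": "Euler a",
    "Schedule type": "Automatic",
    "CFG scale": "6",
    "ADetailer steps": "40",
}
final = {
    "Steps": "60",
    "Sampler": "DPM++ 2M SDE Heun",
    "Schedule type": "SGM Uniform",
    "CFG scale": "6",
    "ADetailer steps": "60",
}

for key, (old, new) in diff_settings(previous, final).items():
    print(f"{key}: {old} -> {new}")
```

Changing one thing at a time and keeping the seed fixed makes it much easier to tell which tweak was responsible for an improvement.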

Here is the final metadata:

1girl, Nyaruko, from below, jumping through the air, dynamic pose, three quarter view, looking at viewer, evil smile, holding crowbar, full moon, red moon, japan, city street, at night, depth of field, dramatic lighting, stunningly beautiful, IllusP0s, detailed background

Negative prompt: IllusN3g, young, child, loli, blurry background

Steps: 60, Sampler: DPM++ 2M SDE Heun, Schedule type: SGM Uniform, CFG scale: 6, Seed: 1255154182, Size: 832x1216, Model hash: bbadcd6772, Model: UnNamedIXL_V3.fp16, VAE hash: 235745af8d, VAE: sdxl_vae.safetensors, Denoising strength: 0.3, Clip skip: 2, RNG: NV, ADetailer model: face_yolov8s.pt, ADetailer prompt: "1girl, Nyaruko, from below, jumping through the air, dynamic pose, three quarter view, looking at viewer, smile, holding crowbar, full moon, red moon, japan, city street, at night, depth of field, dramatic lighting, stunningly beautiful, IllusP0s", ADetailer confidence: 0.6, ADetailer dilate erode: 4, ADetailer mask blur: 4, ADetailer denoising strength: 0.15, ADetailer inpaint only masked: True, ADetailer inpaint padding: 32, ADetailer use separate steps: True, ADetailer steps: 60, ADetailer use separate VAE: True, ADetailer VAE: None, ADetailer version: 24.11.1, ControlNet 0: "Module: none, Model: CN-anytest_v4-marged [4bb64990], Weight: 0.9, Resize Mode: Just Resize, Processor Res: 832, Threshold A: 0.5, Threshold B: 0.5, Guidance Start: 0.0, Guidance End: 1.0, Pixel Perfect: True, Control Mode: ControlNet is more important", Hires upscale: 1.5, Hires steps: 20, Hires upscaler: 4xNMKDSuperscale, TI hashes: "IllusP0s: da04b1767dea, IllusP0s: da04b1767dea, IllusN3g: dbecff3f6065, IllusN3g: dbecff3f6065", Discard penultimate sigma: True, Version: v1.10.1, Source Identifier: Stable Diffusion web UI

And the side-by-side comparison:

And here are the full size pictures: https://civitai.com/posts/20145938

Conclusion

Here, I went for a more difficult picture: a really anime-like character and an "evil smile" expression that can really mess up the realistic version. But this method is a good way to make a realistic picture out of any anime character. NB: it can also help to start with a semi-realistic checkpoint instead of a full-on anime one, but it was not the homework assigned in the bounty ;-)

![[Tutorial] Yet Another "Anime character to realistic version" method :D](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/585b500a-89cc-4a7f-ad65-afcfd8300d3f/width=1320/Screenshot 2025-07-28 at 06.10.55.jpeg)