Alibaba’s Qwen-Image is making waves in the AI art community by offering a free, open-source alternative to models like Midjourney and DALL·E. It's a 20-billion-parameter powerhouse that excels at tasks previously dominated by proprietary systems. In this post, we'll explore how Qwen-Image pushes the boundaries of open-source image generation – from its innovative architecture and ultra-high resolution, to its uncanny ability to draw actual text (in multiple languages!) inside images, and even edit images with fine-grained control. We'll also see where it stands against both open and closed competitors, and why its release is a game-changer for creators and developers alike.

Architecture Deep Dive: MMDiT + Qwen 2.5-VL

Qwen-Image is built on a Multimodal Diffusion Transformer (MMDiT) backbone, packing a whopping 20 billion parameters into a text-to-image model. Unlike typical diffusion models that rely on a small, fixed text encoder like CLIP, Qwen-Image pairs a full vision-language model for understanding the prompt with a diffusion transformer for drawing the image. Here's how it works:

• Language Model Backbone (Qwen 2.5-VL) – The system incorporates Alibaba's Qwen 2.5-VL as a language model to understand the prompt. This large vision-language model encodes the semantic meaning of the user’s prompt in great detail. Essentially, Qwen-Image has an LLM-grade brain interpreting your instructions, rather than a small text encoder.

• Diffusion Transformer & Visual Encoding – For generating images, Qwen-Image uses the MMDiT, a diffusion transformer (not an autoregressive model), as its denoiser. The prompt embedding from Qwen 2.5-VL guides this transformer as it progressively refines an image in a latent space (a compressed image representation). During editing tasks, a visual encoder can also process an input image into this latent space, so the model understands the original image’s content.

• VAE (Variational Autoencoder) – Finally, a VAE takes the completed latent representation and decodes it into the final image pixels [2]. The VAE also works in reverse for image editing: it can encode an existing image into latents that the transformer then modifies.

In simpler terms, Qwen-Image marries a big language model with a diffusion image generator, which lets it handle complex prompts and image edits in a unified framework. The Qwen team notes that this approach (an LLM + diffusion combo) was “last seen in OpenAI’s GPT-4” [3], hinting that Qwen-Image adopts ideas similar to what might power DALL·E 3 or GPT-4's image abilities. Because the model is trained on multiple tasks (image generation and editing), it can switch modes, for example generating a picture from scratch or altering a user-supplied image, all with the same neural network. This multi-task training is what gives Qwen-Image its precise image editing without losing the original image’s realism [4].
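To make the two modes concrete, here is a schematic Python sketch of the data flow described above. It is not Alibaba's code: the component names (`encode_prompt`, `vae_encode`, `denoise_step`, `vae_decode`) are hypothetical placeholders, and the toy arithmetic inside `make_toy_pipeline` only stands in for Qwen 2.5-VL, the MMDiT denoiser, and the VAE. The point is structural: one denoiser serves both generation and editing, and editing differs only in that an input image is first encoded into the same latent space and passed in as extra conditioning.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Vector = List[float]


@dataclass
class SchematicImagePipeline:
    """Hypothetical stand-in for the LLM encoder + diffusion transformer + VAE flow."""

    encode_prompt: Callable[[str], Vector]   # placeholder for Qwen 2.5-VL
    vae_encode: Callable[[Vector], Vector]   # placeholder: pixels -> latents
    vae_decode: Callable[[Vector], Vector]   # placeholder: latents -> pixels
    denoise_step: Callable[[Vector, Vector, Optional[Vector]], Vector]  # placeholder for the MMDiT

    def run(self, prompt: str, noise: Vector, steps: int,
            source_image: Optional[Vector] = None) -> Vector:
        cond = self.encode_prompt(prompt)
        # Editing mode: the input image is mapped into the same latent space first.
        source = self.vae_encode(source_image) if source_image is not None else None
        latents = list(noise)
        for _ in range(steps):
            # The same denoiser runs in both modes; only its conditioning differs.
            latents = self.denoise_step(latents, cond, source)
        return self.vae_decode(latents)


def make_toy_pipeline() -> SchematicImagePipeline:
    """Toy components so the control flow can execute; the math is meaningless."""

    def toy_denoise(z: Vector, cond: Vector, source: Optional[Vector]) -> Vector:
        # Move each latent halfway toward a target: the source latents when editing,
        # otherwise a value derived from the prompt embedding.
        target = source if source is not None else [cond[0]] * len(z)
        return [v + 0.5 * (t - v) for v, t in zip(z, target)]

    return SchematicImagePipeline(
        encode_prompt=lambda p: [float(len(p))],
        vae_encode=lambda px: [v / 2 for v in px],
        vae_decode=lambda z: [v * 2 for v in z],
        denoise_step=toy_denoise,
    )


if __name__ == "__main__":
    pipe = make_toy_pipeline()
    print(pipe.run("cat", noise=[0.0, 0.0], steps=20))                            # generation
    print(pipe.run("cat", noise=[0.0, 0.0], steps=20, source_image=[4.0, 8.0]))  # editing
```

In the real model the editing input conditions a full denoising run rather than simply being copied back out; the toy target-seeking step only keeps the example runnable.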

Resolution Capabilities: 3584×3584 Pixel Outputs

One of Qwen-Image’s standout features is the huge native resolution it supports. The model can generate images up to 3584×3584 pixels directly, without external upscalers [5]. To put that in perspective, 3584×3584 is about 12.8 million pixels, roughly twelve times the pixel count of a 1024×1024 image and far beyond the 512- or 1024-pixel grids that most open-source models typically produce. It rivals the output resolution of many professional, closed-source tools.
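The arithmetic behind those resolution figures is easy to check. The sketch below computes megapixels and, under an assumed 8× VAE downsampling with 2×2 latent patches (a common setup for MMDiT-style models, not a confirmed Qwen-Image detail), the number of image tokens the transformer would attend over.

```python
def megapixels(width: int, height: int) -> float:
    """Pixel count in millions."""
    return width * height / 1_000_000


def latent_tokens(width: int, height: int, vae_factor: int = 8, patch: int = 2) -> int:
    """Number of image tokens a latent diffusion transformer would attend over.

    ASSUMPTION: 8x VAE downsampling and 2x2 latent patches, a common MMDiT setup;
    the exact factors for Qwen-Image should be checked against its model card.
    """
    stride = vae_factor * patch
    return (width // stride) * (height // stride)


for w, h in [(512, 512), (1024, 1024), (3584, 3584)]:
    print(f"{w}x{h}: {megapixels(w, h):.2f} MP, {latent_tokens(w, h)} latent tokens")
```

Under these assumptions, 3584×3584 yields 50,176 image tokens versus 4,096 at 1024×1024, 12.25 times as many. If every layer used full self-attention over those tokens, the attention work per layer would grow roughly 150-fold (12.25² ≈ 150), which helps explain why maximum-resolution runs are so demanding.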

How does this compare to other models? Most current open-weight models operate at much lower resolutions: for example, Stable Diffusion XL (SDXL) usually works around 1024×1024 pixels by default, and earlier Stable Diffusion versions were often trained on 512px images (relying on secondary upscaling for larger outputs). Other open models like HiDream or Flux have made strides toward higher-res generation, but Qwen-Image’s roughly 12.8-megapixel native canvas is in a league of its own. Even proprietary systems often employ trickery (like multi-pass upscaling) to achieve high-res images. Midjourney and Adobe Firefly, for instance, produce stunning high-resolution results, but typically through internal pipelines that upscale or refine smaller images. In contrast, Qwen-Image can paint in high definition in one go, which is a major milestone for open models.

Why does resolution matter? For use cases like printing posters, design mockups, or detailed artwork, starting with a larger native image means crisper details and less reliance on post-processing. Qwen-Image users have the freedom to specify custom aspect ratios and sizes; the model’s interface even allows selecting the “frame size” before generation [6]. So whether you need a 16:9 banner or an Instagram square, Qwen-Image can directly render the “right-sized” image for your project. This is a boon for content creators who would otherwise generate a smaller image and then upscale it (often losing some quality).

Of course, pushing so many pixels demands more computation. Running Qwen-Image at maximum resolution requires a powerful GPU (the model’s FP16 weights are ~41 GB, though 8-bit compressed versions at ~20 GB exist) [7]. In practice, generating a full 3584×3584 image may be overkill for many scenarios. But the fact that Qwen-Image was trained to handle that scale demonstrates the potential for future open models.
We can expect upcoming open-source systems to similarly aim for higher native resolutions or to integrate efficient upscalers. Qwen-Image has effectively raised the bar, showing that ultra-high-res output is no longer the exclusive domain of closed labs.
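Two practical questions follow from the points about custom frame sizes and GPU memory: what width and height give a chosen aspect ratio at a given pixel budget, and how much memory the weights alone take. The sketch below answers both. Snapping dimensions to a multiple of 16 is an assumption (a common constraint for latent diffusion transformers), and the memory figure counts only the parameters.

```python
import math


def size_for_aspect(ratio_w: int, ratio_h: int, target_pixels: int, multiple: int = 16):
    """Width/height with the given aspect ratio and roughly target_pixels pixels.

    ASSUMPTION: dimensions are snapped to a multiple of 16; Qwen-Image's actual
    constraint may differ.
    """
    height = math.sqrt(target_pixels * ratio_h / ratio_w)
    width = height * ratio_w / ratio_h

    def snap(x: float) -> int:
        return max(multiple, int(round(x / multiple)) * multiple)

    return snap(width), snap(height)


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal GB (activations not included)."""
    return num_params * bytes_per_param / 1e9


print(size_for_aspect(16, 9, 1024 * 1024))  # (1360, 768): a 16:9 banner at ~1 MP
print(size_for_aspect(1, 1, 3584 * 3584))   # (3584, 3584): the maximum square canvas
print(weight_memory_gb(20e9, 2))            # FP16/BF16: 2 bytes per parameter
print(weight_memory_gb(20e9, 1))            # 8-bit: 1 byte per parameter
```

At 20 billion parameters, two bytes each gives 40 GB and one byte gives 20 GB, in line with the ~41 GB and ~20 GB figures once you allow for the parameter count being rounded. Activations and any other components loaded alongside the model come on top of that.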

Prompt Token Handling: Super-Sized Prompts (Up to 32K tokens!)

If you’ve ever tried crafting a prompt for Stable Diffusion, you know there's a limit to how much detail you can cram in before it gets truncated (77 tokens, start and end markers included, for the CLIP text encoder that Stable Diffusion uses). Qwen-Image blows past these limits by inheriting a 32,000-token prompt window from its Qwen 2.5-VL language backbone. That’s the equivalent of a short story or an entire design spec that you could feed into the model! In theory, you could write a multi-page description of an image, and Qwen-Image will attempt to parse and use all of it.

In practical terms, prompts of several thousand tokens are now viable, whereas other open models typically accept a few hundred at best. This huge context capacity means you can be extremely precise and verbose in describing your desired image. For example, you might input a detailed scene description with multiple requirements, or even paste in formatted text (like a poem or a list of RPG character stats) for the model to render visually. Qwen-Image’s transformer will encode all that information without dropping the end of your prompt.

That said, using all 32K tokens at once is overkill for most single images, and it can be computationally heavy. Current practical usage tends to stay in the 1–2k token range for very detailed prompts, simply because extremely long prompts can become unwieldy or confuse the image generation. But even a 1,000-token prompt (roughly 750 words) carries more than ten times the detail that fits in the ~75-token budget of Stable Diffusion’s CLIP encoder. This enables richer storytelling and complex instructions in the prompt.

Comparison with other models: SDXL and similar diffusion models have tried to relax the 77-token limit a bit (some use tricks like segmenting text or using larger text encoders), but none come close to Qwen-Image’s lengthy prompt support. For instance, many Stable Diffusion pipelines still effectively cap at 77 tokens, with any extra words beyond that often ignored or given less weight [10]. A few research models (e.g., DeepFloyd IF) experimented with a few hundred tokens by using a large T5 encoder, but even those fall far short of Qwen’s 32K. In short, Qwen-Image currently stands alone in letting your imagination run wild in paragraph form.

For users, this means you can include backstory, context, and multiple instructions in one go. Want an image of a fantasy city with a written lore blurb and labels on the map? Qwen-Image is far more likely to handle that multi-part prompt in one shot, thanks to its LLM-grade prompt understanding. The model treats prompt text almost like a script or HTML for an image – you can specify sections (header, subtext, footnote in the image, etc.) and Qwen will do its best to lay them out accordingly. This is a completely new level of prompt design freedom that we haven’t seen in open-source image generators before.

(Of course, with great power comes great responsibility – or at least the need for some prompt engineering finesse. Extremely long prompts might still produce unexpected results if the model struggles to prioritize among many details. But the key is, the length ceiling itself is no longer a shackle. You won’t hit a hard cutoff and lose the tail end of your instruction as with other models.)
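The hard cutoff described in this section can be illustrated with a small sketch. It treats each list item as one token, which is a simplification (real tokenizers split words into sub-word pieces, so real counts differ), and it reserves two slots in the 77-token CLIP window for CLIP's start and end markers. The `estimate_tokens` helper simply applies the rough "1,000 tokens ≈ 750 words" rule of thumb used above.

```python
import math
from typing import List, Tuple


def fit_to_window(tokens: List[str], max_tokens: int,
                  special_tokens: int = 0) -> Tuple[List[str], List[str]]:
    """Split tokens into the part an encoder sees and the part it silently drops.

    special_tokens reserves room for markers the encoder adds itself
    (CLIP's 77-token window includes a start and an end marker, leaving 75).
    """
    budget = max(0, max_tokens - special_tokens)
    return tokens[:budget], tokens[budget:]


def estimate_tokens(num_words: int, words_per_token: float = 0.75) -> int:
    """Rough token estimate from a word count (about 750 words per 1,000 tokens)."""
    return math.ceil(num_words / words_per_token)


# Hypothetical 100-token prompt whose last instruction sits at the very end.
prompt_tokens = [f"detail{i}" for i in range(99)] + ["footnote"]

kept, dropped = fit_to_window(prompt_tokens, max_tokens=77, special_tokens=2)
print(len(kept), len(dropped), dropped[-1])  # 75 25 footnote: the tail is lost

kept, dropped = fit_to_window(prompt_tokens, max_tokens=32_000)
print(len(kept), len(dropped))               # 100 0: a 32K window keeps everything
```

The instruction placed last in the prompt is exactly what a short window discards, which is why long prompts for CLIP-based models tend to front-load the important details.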

Text Rendering & Multilingual Magic

Perhaps the most jaw-dropping capability of Qwen-Image is its ability to generate legible, complex text within images. This has long been a pain point for generative models: ask DALL·E 2 or Stable Diffusion to write "OpenAI" on a sign, and you’d likely get gibberish or broken letters. Qwen-Image, by contrast, was explicitly designed for native text rendering in both English and Chinese, and it shows. The model can handle multi-line paragraphs, different fonts, and even mixed languages with impressive fidelity.

Qwen-Image can generate complex scenes with seamlessly integrated text, as shown in this Miyazaki-inspired market street: the Chinese characters on the shop signs and the calligraphy on the jar are all rendered accurately, with proper depth of field and style.

In tests, Qwen-Image has demonstrated it can produce clean, readable text in scenarios ranging from storefront signage to book covers and PowerPoint-like slides [11][14]. It supports both alphabetic scripts (like English) and logographic scripts (like Chinese), maintaining correct characters and layout for each [12][11]. The model doesn't get tripped up by multi-byte characters or complex fonts: it can generate Chinese calligraphy on a hanging scroll just as readily as neat English block letters on a poster. For example, in one demo it rendered Chinese couplets in an artistic calligraphic style and got all the characters correct [15]. In another, it produced an English bookstore window display with book titles clearly written on the covers [16]. These are feats that would have seemed almost science-fictional for an image generator a year or two ago!

So how does Qwen-Image pull off this magic? A few factors are at play:

Specialized Training Data: Alibaba’s team heavily curated the training set to include a lot of images containing text. They report focusing on design content like posters, infographics, and slides (about 27% of training data) and also synthetic images specifically created for text rendering tasks [17][18]. They even used different strategies like "Pure Rendering" (simple text on plain backgrounds), "Compositional Rendering" (text in real scenes), and "Complex Rendering" (structured layouts) to teach the model how text should look in all kinds of contexts [18]. By avoiding any AI-generated text in the training and using only clean, controlled examples, Qwen-Image learned to map written content to visuals with high accuracy.

Innovative Positional Encoding (MSRoPE): Qwen-Image introduces a new approach called Multimodal Scalable RoPE to better align text tokens within images [19]. In essence, instead of treating text as a simple 1D sequence, MSRoPE encodes text in a 2D spatial manner (arranging embeddings along a diagonal grid in the image space). This helps the model understand where to place each word or character when drawing the image, even at different resolutions [19]. The result is improved placement and coherence of rendered text: letters appear in the right order and orientation, with consistent spacing, far more often than in previous models. In other words, Qwen-Image actually knows it's writing words on an image and keeps those words spatially organized, whereas older diffusion models largely faked text without true spatial awareness.

Multilingual Support: Because the Qwen 2.5-VL backbone and training data were bilingual, Qwen-Image can seamlessly switch languages or even mix them. One example prompt had a woman holding a sign with a paragraph of text that included both English and Chinese sentences, one after the other; the model reproduced the entire bilingual paragraph in a neat handwritten style on the sign [20]. You could imagine making a poster that has an English title and a Chinese subtitle, and Qwen will handle both scripts appropriately in one image. This multilingual text rendering strength is particularly useful for global users (and notably, Alibaba’s focus on Chinese means it excels at Chinese text in images, where Western models tend to falter [21]).

The bottom line: Qwen-Image turns text-in-image from a weakness into a strength for open AI art. It “excels at complex text rendering, including multi-line layouts, paragraph-level semantics, and fine-grained details” [22]. In benchmarks, it outperformed previous state-of-the-art models by a wide margin on text-heavy image tests like LongText-Bench (both English and Chinese) and TextCraft [23].
For Chinese text, it's essentially unchallenged: it leads by a significant margin on Chinese text rendering benchmarks [24][21]. Even for English, it is among the top performers, only slightly behind a proprietary model on certain metrics [21].

For creators, this means new possibilities. You can generate an image that includes a quote, a title, labels, or instructions directly within the image. No more Photoshopping text in afterward: Qwen-Image can do it in one pass. Make a fantasy novel cover complete with the title and author name, a comic panel with dialogue balloons, or an infographic with several text boxes, and have the AI draw all the visuals and text cohesively. There may still be occasional spelling quirks or font oddities (it’s not 100% perfect yet; small errors like a missing letter can occur [25]), but the advancement is dramatic. As one early reviewer noted, “Qwen-Image models are exceptional at incorporating complex texts... It works equally well with English and Chinese with the same ease.” [26] This level of text rendering was basically unheard of in open models before Qwen.
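The MSRoPE idea described above can be sketched in a few lines, with heavy caveats: this is our reading of the published description, not Qwen-Image's implementation. The assumptions are that image patches get 2D (row, column) indices centred on the middle of the grid (so the central region keeps the same indices when resolution changes), that text tokens get identical row and column indices so they sit on a diagonal just past the image grid, and that half of each rotary embedding rotates with the row index and half with the column index. Exact offsets, channel split, and base frequency are all assumptions.

```python
from typing import List, Tuple

Pos = Tuple[int, int]


def image_positions(rows: int, cols: int) -> List[Pos]:
    """(row, col) indices for a grid of image patches, centred on (0, 0)."""
    r0, c0 = rows // 2, cols // 2
    return [(r - r0, c - c0) for r in range(rows) for c in range(cols)]


def text_positions(num_tokens: int, rows: int, cols: int) -> List[Pos]:
    """Text tokens get identical row and column indices (a diagonal), starting
    just past the largest image index so no position is shared with a patch."""
    start = max(rows - rows // 2, cols - cols // 2)
    return [(start + k, start + k) for k in range(num_tokens)]


def rope_angles(pos: int, dim: int, base: float = 10000.0) -> List[float]:
    """Standard 1D rotary angles for one axis: pos * base^(-2i/dim)."""
    return [pos * base ** (-2 * i / dim) for i in range(dim // 2)]


def rope_angles_2d(pos: Pos, dim: int) -> List[float]:
    """Half of the channels rotate with the row index, half with the column index."""
    row, col = pos
    return rope_angles(row, dim // 2) + rope_angles(col, dim // 2)


if __name__ == "__main__":
    img = image_positions(4, 6)
    txt = text_positions(3, 4, 6)
    print(txt)                  # [(3, 3), (4, 4), (5, 5)]
    print(set(img) & set(txt))  # set(): text and image positions never collide
```

Because a text token's row and column indices are equal, its two halves of rotary angles match, so text behaves like an ordinary 1D sequence while still living in the same 2D coordinate system as the image patches.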

Multimodal Editing & Semantic Control

Beyond generating images from scratch, Qwen-Image also shines in image editing and controlled generation. It’s not just a text-to-image model; it’s a multimodal tool that you can use to modify existing images or guide creations with additional inputs. Alibaba achieved this by training Qwen-Image on various editing operations, giving it a sort of built-in “Photoshop” ability where you simply tell it what to change. Impressively, these edits maintain the semantic meaning and visual realism of the original image [27], meaning the output looks like the same image but edited, rather than a completely different or glitchy result.

Here are some of the editing and control capabilities Qwen-Image offers out-of-the-box:

Style Transfer and Visual Filters: You can instruct Qwen-Image to apply a different style to an image (e.g., “make this photo look like an oil painting” or “turn this daytime scene into a neon cyberpunk version”). The model will change the stylistic attributes while keeping the underlying scene content consistent [28]. The developers report that Qwen-Image supports a wide range of artistic styles, from photorealism to impressionist art to anime, which it can apply or mix as needed.

Additions and Deletions (Object-Level Editing): Qwen can add new objects or remove existing ones in an image via prompt. For example, if you have a photo of a person in a room, you could say “add a vase of flowers on the table” or “remove the lamp from the background,” and Qwen-Image will edit the image to include or exclude those elements [28]. It does so while respecting lighting and perspective, so the added objects look like they naturally belong. One user test asked Qwen-Image to replace a cat with a puppy in a photo (as well as change the person’s clothing and time of day); the model pulled it off near-perfectly, changing the pet, clothes, and lighting as instructed [30].

Text Editing in Images: Not only can Qwen generate new text, it can also edit existing text in images [28]. This is super useful for things like correcting a sign in a photo or updating a poster’s date. Traditional image models have no concept of editing text; Qwen-Image does, thanks to its text rendering skills. So you could tell it “change the sign’s words to ...” and it will re-draw that part of the image.

Character Pose Manipulation: Qwen-Image can adjust the pose or position of characters in an image if asked [28]. This suggests it has some understanding of the 3D structure and pose of people/objects in the scene. If you want an arm raised or a head turned, Qwen might be able to re-imagine the subject in that new pose (within reason) while keeping the character identity the same.

Depth and Perspective Control: In a nod to advanced ControlNet-like features, Qwen-Image covers classic computer vision tasks such as depth estimation and viewpoint change [31]. That means the model can internally gauge the depth map of an image and use it to maintain consistency when editing or even generate a new perspective of the scene. For example, you might provide a single image and ask Qwen-Image to generate a view of that scene from a different angle, something it can attempt by leveraging depth understanding. This is cutting-edge stuff that blurs the line between 2D generation and 3D scene modeling.

• Sketch/Edge Guidance: Although not explicitly mentioned in the initial announcements, multimodal models like this often support taking an edge sketch or outline as input, using it as a structural guide for generation. Given Qwen-Image’s focus on preserving layout and structure, it wouldn’t be surprising if you could feed it a simple wireframe or pose skeleton and have it fill in the rest in the requested style. (The model’s open release means the community can experiment with such inputs via tools like ComfyUI.)

Importantly, all these edits are done via natural language instructions, with no need for manual masking or layer tweaking. For instance, “make the sky sunny instead of cloudy” or “turn this person’s shirt red” are commands Qwen-Image can follow. The model’s multi-task training teaches it to localize the changes to the regions/objects specified while keeping the rest of the image intact [27].

This consistency is a big deal. Earlier AI image editors often produced artifacts or altered unintended parts of the image. Qwen-Image, however, has been shown to preserve background details, overall style, and image quality while making the specific edits requested [27]. As one report noted, it achieves “exceptional performance in preserving both semantic meaning and visual realism during editing operations” [27]. In plain terms, your photo won’t get weirdly warped just because you edited one element; it still looks authentic.
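If you want to check the "rest of the image stays intact" claim on your own edits, a crude first test is to measure how many pixels changed outside the region the instruction targeted. The sketch below does that for grayscale images stored as nested lists; the box format and the `tol` threshold are our own choices, not part of any Qwen-Image tooling. A non-zero `tol` matters in practice because a VAE encode/decode round trip can perturb pixels slightly even where nothing was edited, and perceptual metrics are a better fit for real photos.

```python
from typing import List, Tuple

Image = List[List[float]]         # grayscale, row-major
Box = Tuple[int, int, int, int]   # (top, left, bottom, right); bottom/right exclusive


def changed_fraction_outside(before: Image, after: Image, edit_box: Box,
                             tol: float = 0.0) -> float:
    """Fraction of pixels outside edit_box whose value moved by more than tol."""
    top, left, bottom, right = edit_box
    total = changed = 0
    for y, (row_b, row_a) in enumerate(zip(before, after)):
        for x, (b, a) in enumerate(zip(row_b, row_a)):
            if top <= y < bottom and left <= x < right:
                continue  # inside the region the instruction was meant to change
            total += 1
            if abs(a - b) > tol:
                changed += 1
    return changed / total if total else 0.0


before = [[0.0] * 4 for _ in range(4)]
local_edit = [row[:] for row in before]
local_edit[1][1] = 255.0                  # change only inside the box
leaky_edit = [row[:] for row in local_edit]
leaky_edit[3][3] = 255.0                  # an unintended change elsewhere

print(changed_fraction_outside(before, local_edit, (0, 0, 2, 2)))  # 0.0
print(changed_fraction_outside(before, leaky_edit, (0, 0, 2, 2)))  # 1 of 12 outside pixels changed
```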

This level of control opens up many creative workflows. You can generate a base image with Qwen-Image and then iteratively refine it: add an element here, change a color there, increase the detail of a section, etc., all through dialogue-like interactions. It’s akin to having an AI art director that can make tweaks on the fly. Users have integrated Qwen-Image into node-based tools like ComfyUI, where it can be used in pipelines with other modules. For example, you might do a rough sketch, feed it to Qwen for enhancement, then do an additional edit pass – all within a unified framework.

In summary, Qwen-Image isn’t just a text-to-image generator; it’s a flexible image lab. It brings many capabilities under one roof that previously required separate models or add-ons (like using Stable Diffusion with a dozen ControlNet extensions). Here, Alibaba baked these abilities directly into the model's training. This makes advanced image manipulation more accessible to everyday users – you just ask Qwen-Image to do it, and it does, no expert prompt hacking needed.

And given it's open-source, developers can further extend these controls. We might see community contributions that add more conditioning inputs (perhaps feeding segmentation maps, or doing outpainting beyond original image bounds) since the model architecture can likely support those with some tweaks. Qwen-Image provides a strong foundation for semantic image editing, heralding a future where creating and editing images is as easy as having a conversation.

Use Cases: From RPG Sheets to UI Mockups

With its unique mix of high resolution, long prompts, text rendering, and editing skills, Qwen-Image unlocks a host of high-impact use cases that were challenging for earlier models. Let's explore a few scenarios where Qwen-Image particularly shines, and how you might use it in each:

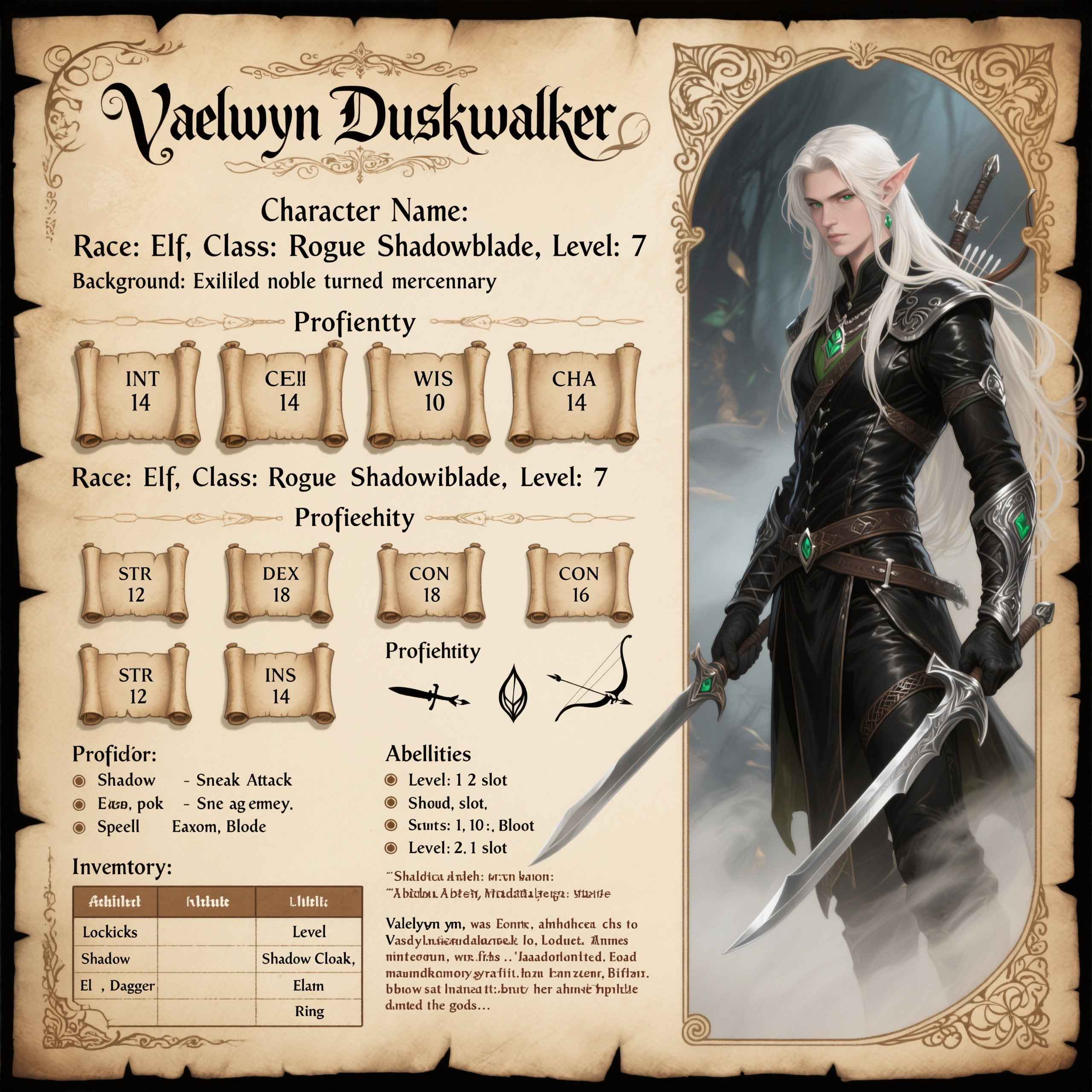

• RPG Character Sheets & Game Assets: Imagine generating a full Dungeons & Dragons character sheet – complete with the character’s portrait, name, and stats all in one image. Qwen-Image can create a fantasy character illustration and embed textual information like strength, agility, backstory paragraphs, etc., right on the parchment-style background. This is a dream for tabletop gamers and game developers. You could also design trading cards or game item icons that include written descriptions. Previously, an AI might give you cool art but you'd have to manually add text. Now the AI can do both, potentially even in a specific font or style matching the game theme.

Infographics and Educational Diagrams: Qwen-Image is well-suited for making infographics, flowcharts, and diagrams that combine graphics with explanatory text. Whether it's a process flowchart for an article or a visual instruction manual, the model can handle generating icons, arrows, and placing multi-line text labels. It was literally tested on creating an infographic about its own architecture, a task that proved challenging but within the model’s capability [32][33]. As the model improves, we can expect clearer and more accurate infographics. The key advantage is saving designers time: you can get a draft layout of a complex infographic from Qwen-Image and then fine-tune it, rather than starting from scratch.

UI Mockups and Web Design: Need a quick UI mockup for a website or app? Qwen-Image can generate images that resemble real web page screenshots or mobile app interfaces, including readable interface text. For example, you could prompt: “Design a modern login screen with app name at top, two text fields, and a login button labeled ‘Sign In’”. Qwen can produce a plausible UI design image with those elements and labels (and you can specify styling like "material design" or "dark mode"). This is incredibly helpful for prototyping visuals. In one experiment, users asked Qwen-Image to create a landing page for a shampoo product, and it returned a comprehensive page design with hero image, headlines, feature sections, and a call-to-action button; the layout and theme were on point, and most text was correctly rendered (only a couple of minor word omissions) [34]. While it’s not going to replace a UI designer for final polish, it’s a great starting point to discuss ideas or illustrate a concept to a client.
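For layouts with several text elements, like the login-screen example above, it can help to assemble the prompt programmatically so every on-image string is spelled out exactly once. The sketch below is a hypothetical helper, not part of any Qwen tooling; wrapping each exact string in double quotes is a prompting habit worth trying, not a documented requirement, and "Acme Notes" is a made-up app name.

```python
from typing import Iterable, Optional, Tuple


def build_layout_prompt(description: str,
                        elements: Iterable[Tuple[str, str]],
                        style: Optional[str] = None) -> str:
    """Assemble a prompt that lists each on-image text element with its exact wording.

    elements: (role, exact_text) pairs, e.g. ("Button label", "Sign In").
    """
    lines = [description.rstrip(".") + "."]
    for role, text in elements:
        lines.append(f'{role}: the exact text "{text}".')
    if style:
        lines.append(f"Style: {style}.")
    return "\n".join(lines)


prompt = build_layout_prompt(
    "Design a modern login screen for a mobile app",
    [
        ("App name at the top", "Acme Notes"),   # hypothetical app name
        ("First text field placeholder", "Email"),
        ("Second text field placeholder", "Password"),
        ("Button label", "Sign In"),
    ],
    style="material design, dark mode",
)
print(prompt)
```

Keeping the wording in a list of pairs also makes it easy to diff the rendered image against what you asked for, and the same pattern carries over to character sheets, posters, or map labels.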

Multilingual Visual Storytelling: Because Qwen-Image handles bilingual text so well, it’s a fantastic tool for storytelling across languages. Think of a children’s storybook page where the story is written in English and Chinese side by side, accompanied by an illustration. Qwen can generate the illustrated scene and embed both language texts properly. Another example is comic strips or graphic novels: you could have speech bubbles in different languages for different characters, or captions translated for a bilingual audience. Qwen-Image empowers creators to easily produce content that bridges language barriers, all in one image. This could be especially useful for educational content (e.g., posters that teach vocabulary by showing words in two languages next to pictures) [20]. The ability to accurately render non-Latin scripts means we might also see community use for other languages like Japanese, Korean, Arabic, etc., in visual media.

Fantasy Worldbuilding & Concept Art with Labels: Worldbuilders and authors can leverage Qwen-Image to visualize their worlds with the relevant lore included. For instance, you might generate a map of a fantasy land where each city name is actually written on the map in a decorative font, or a creature concept art with its name and attributes labeled (like a bestiary page). Qwen-Image can create the art for the creature and also write a paragraph of lore next to it in the image (as if the AI is producing the page of a fantasy encyclopedia). This is perfect for generating flavorful content for novels, tabletop games, or just for fun. No open model before could reliably produce the text labels on a map or the descriptive text on a concept sketch; now we have a tool that can, bringing us closer to one-shot generation of entire content pieces.

Posters, Ads, and Graphic Design: Of course, any scenario requiring a mix of imagery and text (movie posters, event flyers, social media graphics) is squarely in Qwen-Image’s domain. You can generate a movie poster with a title, tagline, credits, and central artwork all via AI. Or a business flyer with headline, bullet points, and contact info rendered correctly. One of Qwen-Image’s highlights is precisely this kind of poster creation: it not only reproduces design styles but “precisely generates user-specified Chinese and English text content” in the layout [35]. This makes it extremely valuable for advertisers and content marketers who need quick drafts of visuals with text. No more blank filler text (or lorem ipsum): you get the actual content on the design.

These examples just scratch the surface. The community is already experimenting with Qwen-Image for things like book page generation, magazine covers, UI themes, and even meme creation (finally, an AI that can generate the caption text on memes correctly!). The ability to trust the model with writing means a single prompt can yield a pretty complete piece of content.

It's worth noting that while Qwen-Image is groundbreaking in these areas, it’s not without limits. Very complex multi-part layouts (like a full multi-slide presentation automatically generated) are still challenging and might require manual adjustment. In the Analytics Vidhya review, Qwen-Image struggled with a highly detailed infographic request, producing a somewhat unclear result that needed improvement [33]. So, for mission-critical design work, human oversight is still needed. But the initial output Qwen provides can save hours of work and serve as a solid template to build on.

Overall, Qwen-Image’s versatility means creators can spend more time on ideas and less on the tedious setup. Want to illustrate your D&D campaign guide? Generate all the pages with Qwen and then fine-tune them. Need concept art with annotations for your sci-fi novel? Qwen’s got you. It lowers the barrier to create richly annotated visuals – a valuable ability as content becomes more multimodal.

Benchmarking & Performance: Standing with the Giants

When it comes to objective performance evaluations, Qwen-Image has quickly proven itself as a top-tier contender. Various benchmarks and competitions in 2025 have shown that this open model doesn’t just hold its own – it often outperforms both its open-source peers and even some commercial models in key areas.

One highlight is Qwen-Image’s ranking on the Artificial Analysis Image Arena (AAA) leaderboard. As of its release, Qwen-Image is sitting at 5th place globally on this competitive benchmark, and notably it’s the only open-weight model in the top 10 [36]. (All the others ahead of it are closed models or internal systems.) This is a big deal: it means an openly available model has broken into what was previously a closed-model stronghold. It’s a signal that open-source is catching up with the very best.

Let's break down some of the benchmark results that have been reported:

General Image Generation: Across tests like GenEval (which measures how well models generate various objects) and DPG-Bench (which tests how well models follow long, dense prompts), Qwen-Image has achieved state-of-the-art scores [37]. For instance, on GenEval Qwen-Image scored 0.91 (after fine-tuning), edging out all other models evaluated [38]. It shows strength in creating a wide variety of content accurately.

Image Editing Benchmarks: Qwen-Image was evaluated on editing benchmarks like GEdit and ImgEdit, which involve making specific edits to input images, as well as on novel view synthesis with GSO (the Google Scanned Objects dataset). In these, Qwen-Image again ranks among the best, often topping the charts [39]. Its ability to preserve image fidelity while editing gave it an advantage. For example, in one comparison Qwen achieved an ImgEdit score of 4.27 vs. around 4.0 for other high-end models [40], a solid lead indicating more faithful edits.

Text Rendering Benchmarks: This is where Qwen-Image truly shines. On evaluations designed to test how well an AI can render text in images, such as LongText-Bench (for lengthy strings of text), ChineseWord (for Chinese characters), and TextCraft (for English text in images), Qwen-Image took the top spot in almost all categories [23][39]. Particularly for Chinese text, it wasn’t even close: Qwen-Image’s accuracy scores were far above the next best model [41]. For English text, Qwen also led in most tests, though OpenAI’s proprietary GPT-Image-1 slightly outperformed Qwen on one English text benchmark [21]. Still, Qwen-Image essentially set a new high score for bilingual text rendering in images.

Human Evaluation & Arena Tests: Alibaba conducted an interesting head-to-head arena where human evaluators compared images from different models (without knowing which was which). After 10,000+ comparisons, Qwen-Image ranked #3 overall, outperforming well-known commercial models like GPT-Image-1 and Flux v1 in aggregate [42]. A radar chart of the results showed Qwen-Image leading in categories like image generation quality, image processing (editing), and Chinese/English text rendering [43]. In Chinese text, it was firmly the best; in English text it was on par with the leaders [43]. This kind of blind comparison demonstrates that Qwen-Image’s outputs are genuinely competitive in visual appeal and accuracy, not just scoring well numerically on niche tests.
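How do thousands of blind pairwise votes turn into a ranking? We don't know the exact method Alibaba's arena used; many arenas use Elo-style ratings or the closely related Bradley-Terry fit. The sketch below shows the classic sequential Elo update on hypothetical votes between anonymised models "A", "B", and "C", with the conventional K-factor of 32 and a starting rating of 1000 as assumed defaults.

```python
from typing import Dict, Iterable, Tuple


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_ratings(matches: Iterable[Tuple[str, str]], k: float = 32.0,
                initial: float = 1000.0) -> Dict[str, float]:
    """Sequential Elo updates from (winner, loser) pairs of blind comparisons."""
    ratings: Dict[str, float] = {}
    for winner, loser in matches:
        r_w = ratings.setdefault(winner, initial)
        r_l = ratings.setdefault(loser, initial)
        gain = k * (1.0 - expected_score(r_w, r_l))  # upsets move ratings more
        ratings[winner] = r_w + gain
        ratings[loser] = r_l - gain
    return ratings


# Hypothetical votes between anonymised models.
votes = [("A", "B")] * 3 + [("B", "C")] * 3 + [("A", "C")]
ratings = elo_ratings(votes)
for name, score in sorted(ratings.items(), key=lambda kv: kv[1], reverse=True):
    print(name, round(score, 1))
```

With these votes A ends highest, then B, then C. Each update is zero-sum, so the ratings always total 1000 per model; with thousands of votes, order effects in the sequential update become small, and a batch Bradley-Terry fit removes them entirely.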

It’s important to note that these benchmarks cover a range of criteria, from how detailed and coherent the images are, to how well they follow text instructions, to how aligned they are with the prompts. Qwen-Image performing strongly across the board means it doesn’t have a major weak spot. It’s a well-rounded model. In fact, the Qwen team calls it “a leading image generation model that combines broad general capability with exceptional text rendering precision” [44]. That sums it up nicely: it’s not a one-trick pony; it’s both a jack-of-all-trades and master of the critical skill of text-in-image.

How does it fare against the big names like Midjourney, DALL·E, etc.? Direct benchmark data for those models isn’t always available (since they’re not open), but we can infer from Qwen-Image’s rankings that it is right up there. If Qwen is 5th on a global leaderboard, the ones above it are likely the likes of the latest Midjourney or possibly Google’s and OpenAI’s newest. The gap between open and closed has dramatically narrowed. In areas like text rendering, Qwen-Image arguably leapfrogs the closed models – Midjourney, for all its artistic prowess, still produces jumbled text, whereas Qwen writes actual words. On pure photorealistic quality, Midjourney v5/v6 might still have an edge due to massive proprietary training, but Qwen-Image isn’t far behind and will only get better as the community fine-tunes and extends it.

One minor area where Qwen-Image lags is English text fidelity compared with the very best closed model, OpenAI’s GPT-Image-1 21 . On one English text benchmark, Qwen-Image scored slightly lower (0.829 vs. 0.857 on TextCraft) 45 . This suggests that while Qwen-Image is excellent at English text, there is still room to reach parity with, or surpass, the top English-focused model. It’s a small gap, but worth noting; it may stem from that model’s larger volume of English training data or some extra OCR-based fine-tuning. Given Qwen-Image’s rapid development, updates may close this gap soon. In any case, being second-best in the world at English text rendering and first at Chinese is nothing short of astonishing for an openly released model.
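Text-rendering scores like these are typically produced by running OCR on each generated image and comparing the recognized text with the text the prompt requested. The article doesn’t say exactly how TextCraft computes its score, so the function below is a generic word-accuracy sketch under that assumption: the fraction of requested words that show up in the OCR output, with repeated words counted separately. The example strings are hypothetical.

```python
from collections import Counter


def word_accuracy(target: str, ocr_output: str) -> float:
    """Fraction of target words recovered in the OCR text.

    Case-insensitive, whitespace-tokenized, multiset matching (each OCR word
    can satisfy at most one target word). No punctuation normalization.
    """
    target_words = target.lower().split()
    if not target_words:
        return 1.0
    ocr_counts = Counter(ocr_output.lower().split())
    hits = 0
    for word in target_words:
        if ocr_counts[word] > 0:
            hits += 1
            ocr_counts[word] -= 1  # consume the match so repeats must appear twice
    return hits / len(target_words)


if __name__ == "__main__":
    # Hypothetical: the text requested in the prompt vs. what OCR read off the image.
    target = "Grand Opening Sale Today Only"
    ocr = "Grand Openng Sale Today Only"
    print(f"word accuracy: {word_accuracy(target, ocr):.2f}")  # prints word accuracy: 0.80
```

A single dropped letter costs a whole word under this metric, which is why a gap of a few hundredths can reflect just a handful of misspellings across a benchmark.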

In summary, the numbers and rankings confirm what the demos suggest: Qwen-Image is a state-of-the-art image generator on multiple fronts, proving that open-source models can achieve world-class performance. For researchers and enthusiasts, this is vindicating: an open model is competing head-to-head with the likes of OpenAI and Adobe. For end users, it means you can use a freely available model to get nearly the same quality you’d expect from the priciest AI services. It’s hard to overstate how significant that is in the current AI landscape; Qwen-Image has essentially thrown down the gauntlet to proprietary providers, showing that open models can catch up with them and even surpass them in key domains.

Ecosystem Integration & Accessibility

One of the best parts about Qwen-Image is that it’s not locked behind any platform – it’s released under an Apache 2.0 open-source license, which means anyone can use it, integrate it, and even commercialize products with it 46 . Alibaba has made the model very accessible across different channels, ensuring that both developers and casual users can give it a try.

Here’s how you can access and use Qwen-Image:

• Hugging Face – The model’s weights and an official model card are available on Hugging Face 47 . This makes it easy to download Qwen-Image or run it in your own code using the Hugging Face Diffusers library. There’s also a hosted demo (often via Hugging Face Spaces) for those who just want to test prompts in a web interface 48 . The Hugging Face community has embraced Qwen-Image quickly, and you can find example notebooks, Diffusers pipeline integrations, and discussions on how to optimize it.

• ModelScope – As an Alibaba model, Qwen-Image is also on ModelScope 47 , Alibaba’s own platform for AI models. There is even a live demo on ModelScope where you can generate images in the browser without any setup 49 . This is handy for users in regions where ModelScope may be faster to reach. ModelScope also often provides API endpoints, so developers could call Qwen-Image via API from their applications.

• Qwen Chat Web Interface – Alibaba integrated Qwen-Image into its Qwen Chat app (chat.qwen.ai). If you go to the chat website and select one of the non-coding Qwen models, there’s an “Image Generation” option where you can simply type your prompt and get an image 50 . This is the easiest way for a non-technical user to try Qwen-Image, with no installation required; it effectively turns Qwen-Image into a friendly chatbot that returns pictures. According to the instructions, you just choose a model in the interface (for example, Qwen-7B Image), enter your prompt, optionally adjust the frame size, and it generates the image.

• ComfyUI and Other Local UI Tools – The community has rapidly integrated Qwen-Image into popular open-source visual interfaces. ComfyUI, a powerful node-based GUI for diffusion models, now supports Qwen-Image with a native workflow 51 . The ComfyUI team provided a template that loads Qwen-Image’s diffusion model, text encoder (Qwen2.5-VL), and VAE, and lets you enter prompts, set image dimensions, and generate results 52 . They also give some reference stats: on a 24GB GPU, the 8-bit model takes ~70 seconds for the first image and ~20 seconds for each subsequent one at default settings 53 . This integration is significant because ComfyUI also lets you incorporate ControlNet nodes or image preprocessing, so you could do advanced things like feed Qwen-Image a depth map or combine it with other models in a pipeline. Automatic1111 (another popular UI) may also gain Qwen-Image support via extensions, given the buzz around the model.

• GitHub Repository – Alibaba maintains a GitHub repository for Qwen-Image (under the Qwen team organization) with the code, links to the model weights, and examples 54 . Because the code is public, developers can read how the model is implemented, tweak it, or use it to fine-tune on new data. It’s also helpful for anyone looking to deploy Qwen-Image on their own servers or cloud setups.
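The ComfyUI reference numbers quoted in the list above (about 70 seconds for the first image on a 24GB GPU, about 20 seconds for each one after) imply that the one-time warm-up cost gets amortized over a session. A quick back-of-the-envelope sketch, assuming those two figures stay constant across a run (real timings will vary with resolution, step count, and hardware):

```python
def session_seconds(n_images: int, first: float = 70.0, subsequent: float = 20.0) -> float:
    """Total wall-clock time for n images when the first run pays a one-time load cost."""
    if n_images <= 0:
        return 0.0
    return first + subsequent * (n_images - 1)


def average_seconds(n_images: int, first: float = 70.0, subsequent: float = 20.0) -> float:
    """Mean time per image over a session of n images."""
    return session_seconds(n_images, first, subsequent) / n_images


if __name__ == "__main__":
    for n in (1, 10, 50):
        print(f"{n:>3} images: {session_seconds(n):6.0f} s total, {average_seconds(n):5.1f} s/image")
```

Under these assumptions, ten images take 250 seconds (25 s each on average) and fifty take 1,050 seconds (21 s each), so the slow first image matters mostly for one-off tests rather than batch work.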

All of these outlets underscore Alibaba’s approach: they didn’t just drop a model and call it a day; they made sure it’s easy for everyone to use. This ease of use was highlighted as a key feature: “the model is easy to use and works well even with simple prompts” 55 . If you don’t want to write a novel-length prompt, that’s fine; Qwen-Image can produce great results from straightforward prompts too, thanks to its robust training.

The Apache 2.0 license is particularly important. It means the model is truly open: you can integrate it into commercial products without use-based restrictions, as long as you follow the license’s standard notice and attribution terms. By contrast, some other “open” models like Stable Diffusion came with more restrictive licenses (for example, ones prohibiting certain uses). Apache 2.0 is very permissive, which invites startups, researchers, and hobbyists alike to adopt Qwen-Image freely. We might soon see Qwen-Image powering new AI art apps, serving as the base for fine-tuned specialized models, or even running inside design software, all because the licensing allows it.

Of course, running Qwen-Image at full capacity does require serious hardware. Not everyone has a spare 24GB GPU in their PC. The good news is that the model supports quantization (8-bit, etc.), which halves the memory footprint compared with 16-bit weights while causing minimal quality loss 56 . And thanks to the cloud demos, you don’t need local hardware just to experiment. For heavier use, you could deploy it on cloud GPU services (e.g., AWS or Alibaba Cloud). Over time, as hardware gets cheaper and optimization libraries (like DeepSpeed and Accelerate) add support for Qwen-Image, the barrier to running it locally will drop. It’s similar to how running a large language model at home was impossible a few years ago, whereas 13B or 30B models are now feasible on high-end PCs; Qwen-Image, at 20B, is on the cusp of being practical for enthusiasts.
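To see why quantization matters, here is a rough estimate of the memory needed just to hold 20 billion weights at different precisions. It counts the weights only (no activations, text encoder, or VAE), so treat the results as a lower bound. The bytes-per-parameter values are the standard sizes for each numeric format; the 20B figure is the one cited in this article.

```python
PARAMS = 20e9  # parameter count cited in this article

# Standard storage size per parameter for each format.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16/fp16": 2.0,
    "8-bit": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gib(n_params: float, bytes_per_param: float) -> float:
    """Memory for the raw weights only, in GiB (1 GiB = 2**30 bytes)."""
    return n_params * bytes_per_param / 2**30


if __name__ == "__main__":
    for fmt, nbytes in BYTES_PER_PARAM.items():
        print(f"{fmt:>9}: {weight_memory_gib(PARAMS, nbytes):6.1f} GiB")
```

At 16-bit precision the weights alone come to roughly 37 GiB, more than a 24GB card holds; at 8-bit they drop to roughly 18.6 GiB, which is consistent with ComfyUI’s 8-bit setup on a 24GB GPU, although activations, the text encoder, and the VAE still need room (or CPU offloading).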

Integration into the AI art ecosystem is already happening. We’ve mentioned ComfyUI; another likely step is integration with Stable Diffusion’s vast ecosystem of tools. There’s potential for mixing Qwen-Image with existing pipelines, for example using Qwen’s text encoder with other diffusion backbones (or vice versa), given the modular design. Some users might experiment with ControlNet-style conditioning on Qwen’s diffusion model (existing Stable Diffusion ControlNets would need to be retrained for Qwen’s architecture), or use Qwen-Image as a backend on services like NightCafe or Artbreeder.

It’s also being shared in Discord communities and will likely become available through bot interfaces (just as there are Stable Diffusion Discord bots, we may see Qwen-Image bots). All this means Qwen-Image is not just a research model; it’s being treated like a product that people can readily use.

To sum up: Qwen-Image is out in the wild and easy to access. Alibaba’s multi-platform release strategy ensures that whether you’re a programmer who wants to dig into the code, an artist who wants a one-click tool, or a researcher aiming to evaluate or extend the model, there’s an option for you. This broad availability is essential for an open model to actually make an impact, and Qwen-Image is already inspiring a wave of community engagement.

Impact and Outlook: Shifting the Open-Source AI Art Landscape

The release of Qwen-Image marks a significant milestone in the ongoing “open vs closed” AI arms race. In many ways, it redefines what open-source models are capable of, and its arrival will likely ripple through the industry in the following ways:

Democratizing Advanced Creative Tools: By providing capabilities on par with commercial systems for free, Qwen-Image lowers the barrier for artists, designers, and developers around the world. Small studios or indie creators who can’t afford enterprise API fees now have a powerful tool at their fingertips. As Alibaba put it, open-sourcing Qwen-Image is aimed at “lowering the technical barriers to visual content creation” 57 . This empowerment can lead to a surge in creativity and innovation from the grassroots. We’ll see more community-driven projects, plugins, and artworks that leverage Qwen-Image, much like Stable Diffusion sparked a creative revolution in 2022. The difference is, Qwen-Image expands that revolution into domains that were previously tough for open models (high-res graphics, text-heavy designs, etc.).

Pressure on Proprietary Services: Qwen-Image is a statement that open models can catch up with the tech giants. This competitive pressure could benefit consumers in multiple ways. Commercial providers like OpenAI, Midjourney, or Adobe will need to continually up their game and possibly reconsider pricing when an open model offers a compelling alternative. It might also push them to be more transparent or even consider open-sourcing parts of their tech (one can dream!). At the very least, it invalidates any notion that “you must use a closed API for the best results” – clearly, you do not. Open-source is now at a point where it can rival closed systems in quality, which will keep the AI ecosystem more open and competitive.

Catalyst for More Open Research: Qwen-Image’s success will likely inspire other organizations to invest in large multimodal open models. Just as Meta released Llama 2 for language, we might see a trend of open image models coming out. Google or another research lab might answer with its own open text-to-image model with different strengths. This back-and-forth drives the field forward. Qwen-Image also provides a reference architecture (LLM + diffusion) that others can build on. Researchers might take the idea and apply it to other modalities (video generation next, or perhaps 3D). In the technical report, Alibaba hinted that they view Qwen-Image as a step toward “vision-language user interfaces” that integrate text and image more tightly 58 . This suggests a future where models seamlessly handle any mix of text and visuals, and Qwen-Image is a concrete leap in that direction.

Room for Improvement: Despite all the praise, Qwen-Image is not the be-all and end-all. There are areas where it can grow, and because it’s open, the community can help it grow in those areas:
- Fine-tuning and Custom Models: Currently, Qwen-Image is a general model. It might not have a specific art style that someone wants, or it might not know a niche domain (say, medical images or a particular game’s art style). The open weights allow for fine-tuning or LoRA adapters, but as of now Alibaba hasn’t released specialized fine-tunes. The community will likely start producing LoRAs (lightweight fine-tunes) to teach Qwen-Image new tricks or styles. However, fine-tuning a 20B model is non-trivial and requires a lot of VRAM and data, so tools and techniques that make this easier will be needed. Over time, we may see Qwen-Image models fine-tuned for anime, or a Qwen-Image v2 with even better capabilities (perhaps shaped by user feedback).
- Training Data Transparency: Alibaba gave a broad overview of the training data composition 17 , but details on the exact dataset are scarce. For the open-source community, knowing what data was used (and its licensing) matters for both ethical and practical reasons. If Qwen-Image was trained on certain public datasets, acknowledging them helps community work continue. Moreover, if there are biases or gaps in the data, transparency helps address them. For instance, is Qwen-Image equally capable in all languages, or is it strongest in English and Chinese? The mention of some Japanese/Korean support suggests a bit of data there 59 , but likely much less than for English and Chinese. An open dialogue on data would be healthy; perhaps Alibaba or others will publish more in-depth data audits. Regardless, because the model is open, the community can partially probe it (e.g., by testing how it responds to various cultural prompts) to identify biases.
- Compute and Accessibility: As discussed, running Qwen-Image needs heavy compute, which is currently a limiting factor for many hobbyists. We might see optimizations such as int4 quantization (trading some quality for a smaller size) or splitting the model across multiple GPUs. There’s also the possibility of distilling Qwen-Image into a smaller model: for example, one could train a 7B or 10B model to imitate Qwen-Image’s outputs, producing a lighter version. It wouldn’t match the full model’s quality, but it could be useful for those who want faster, cheaper generation with some text rendering capability. It will be interesting to see whether Alibaba releases smaller variants or others attempt it.
- Subtle Quality Improvements: Based on early testers’ feedback, Qwen-Image is amazing at what it does, but there’s room to polish. For instance, in very dense text scenarios (like an infographic with lots of small text), the model sometimes misses a few words or letters 25 33 . Its English spelling is generally correct but not infallible under pressure. Future training or fine-tuning could improve that consistency (perhaps by using an auxiliary spell-checker or OCR model to guide it during training). Another aspect is coherence in complex scenes: if you ask for a scene with many objects and relationships, the model might not nail every detail (which is expected; even humans struggle there). A larger context helps, but the model still has to juggle it all. Ongoing research on planning or decomposition for image generation could push these boundaries further.
- Safety and Moderation: Alibaba, like other providers, will have to consider content safety. An open model can be used to generate disallowed content (e.g., realistic fake signage with harmful messages). That’s the double-edged sword of open access. The model card likely includes some guidelines, and Alibaba’s demo will have usage policies. But unlike closed APIs, which have filters, an open model relies on user responsibility. This means the community will need to self-regulate or build tools to detect misuse. Over time, open models might incorporate techniques to reduce harmful outputs (without hard-coded filters, which many users dislike). This is an area of ongoing discussion in open-source AI: how to balance openness with social responsibility. Qwen-Image’s impact will be part of that conversation as well.
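The LoRA adapters mentioned in the fine-tuning item above are what make customizing a 20B model more approachable. Instead of updating a full weight matrix W of shape (d_out × d_in), LoRA freezes W and trains two small matrices, B (d_out × r) and A (r × d_in), whose scaled product is added to W’s output. The NumPy sketch below illustrates that idea; the layer width of 3072 and rank of 16 are hypothetical values chosen for illustration, since the article doesn’t give Qwen-Image’s layer dimensions.

```python
import numpy as np


def lora_param_counts(d_out: int, d_in: int, r: int) -> tuple[int, int]:
    """Trainable parameters: full fine-tune of W vs. a rank-r LoRA adapter (A and B)."""
    return d_out * d_in, r * (d_in + d_out)


def lora_forward(x: np.ndarray, W: np.ndarray, A: np.ndarray, B: np.ndarray, alpha: float) -> np.ndarray:
    """y = x W^T + (alpha / r) * x (B A)^T. W stays frozen; only A and B would be trained."""
    r = A.shape[0]
    return x @ W.T + (alpha / r) * (x @ A.T @ B.T)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d_in, d_out, r = 3072, 3072, 16  # hypothetical layer width and LoRA rank
    W = rng.standard_normal((d_out, d_in)) * 0.02  # pretrained weight (frozen)
    A = rng.standard_normal((r, d_in)) * 0.01      # LoRA down-projection, random init
    B = np.zeros((d_out, r))                       # LoRA up-projection, zero init
    x = rng.standard_normal((4, d_in))             # a batch of 4 input vectors

    # With B initialized to zero, the adapter starts as an exact no-op.
    assert np.allclose(lora_forward(x, W, A, B, alpha=16.0), x @ W.T)

    full, lora = lora_param_counts(d_out, d_in, r)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```

In this hypothetical layer, the adapter trains about 1% of the parameters a full fine-tune would, which shrinks gradient and optimizer memory dramatically. The frozen 20B base model still has to fit in memory, though, which is why LoRA training on Qwen-Image remains demanding.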

In terms of symbolic importance, Qwen-Image feels like a culmination of trends. It’s as if the open-source movement in AI said, “Let’s take everything we’ve learned – diffusion models, transformers, large language models, multimodal training – and put it together into one super-model for images.” And they did, and succeeded. This may well be remembered as a turning point where open models started leading in innovation, not just following. For instance, the MSRoPE technique for text positioning 19 is an innovation that others might now adopt. The integrated design might also inspire future Stable Diffusion releases or Google’s next image models to incorporate LLMs natively.

Alibaba’s open strategy also fosters a more transparent and sustainable ecosystem (as they themselves stated) 60 . Instead of siloing the advances, they put them out for collective benefit. If more companies do this, we’ll have a rich commons of AI models that anyone can build on – which is good for society, to counterbalance the dominance of a few AI monopolies.

Finally, what does this mean for everyday users and artists? It means more freedom. Freedom to use an AI model offline, on your own data, without censorship or fees. Freedom to tweak the model to better suit your needs. And freedom from the whims of a company that might otherwise decide how you can use AI. Qwen-Image users can create without constraint, and that unleashes a lot of creative potential. We’ve already seen how artists used open models to create styles and art that were unique to them. Now they can do so with text and design elements included – opening up new genres of AI-assisted art (like AI-generated graphic design, comic books, etc.).

In conclusion, Qwen-Image is more than just a new model; it’s a flag planted in the ground for open-source AI. It demonstrates that with collaboration and a commitment to openness, we can have AI tools as powerful as those behind closed doors. There’s still work to be done and improvements to be made, but the trajectory is clear. As one reviewer punningly asked: is Qwen-Image “Qwen-tastic”? The answer seems to be a resounding yes. It has redefined what’s possible in 2025 for open-source image generation, and its ripple effects will continue to shape the future of AI art, making it more inclusive, innovative, and fun for all of us 61 .