Update 9/5/25

I've added V2, which cleans up unnecessary nodes and uses the fantastic Wan MOE KSampler to automatically determine when to switch from the high-noise model to the low-noise model.
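Roughly, the idea behind automatic switching is to run the high-noise model while the noise level (sigma) is still above some boundary and hand over to the low-noise model once it drops below. The sketch below is a hypothetical illustration, not the node's actual code. It assumes a linearly spaced sigma schedule with the usual flow-matching shift applied (`shift=8.0` here), and a boundary of `0.9`, which I believe is close to what the official Wan 2.2 I2V setup uses. Treat both numbers as assumptions.

```python
def shifted_sigmas(steps: int, shift: float = 8.0) -> list[float]:
    """Hypothetical helper: sigmas spaced linearly from 1.0 to 0.0,
    then remapped with the flow-matching shift s' = shift*s / (1 + (shift-1)*s).
    Real schedulers may space sigmas differently."""
    sigmas = []
    for i in range(steps + 1):
        s = 1.0 - i / steps  # linear from 1.0 down to 0.0
        sigmas.append(shift * s / (1.0 + (shift - 1.0) * s))
    return sigmas


def switch_step(sigmas: list[float], boundary: float = 0.9) -> int:
    """Return the first step whose starting sigma is below `boundary`.
    Steps before this index would go to the high-noise model,
    steps from this index onward to the low-noise model."""
    for i, sigma in enumerate(sigmas[:-1]):
        if sigma < boundary:
            return i
    return len(sigmas) - 1  # never crossed: high-noise model for every step


sigmas = shifted_sigmas(20, shift=8.0)
print(switch_step(sigmas, boundary=0.9))  # 10
```

A higher shift keeps sigma high for longer, so the handover happens later in the run; with 20 steps and these assumed values the split lands at step 10.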

I've also added a version that uses the Lightning speedup LoRAs, which you can download here.

I've been playing with Wan 2.2 over the past few days and, WOW, I'm impressed. I generate anime content, and the quality improvement over 2.1 is incredible.

I've tweaked my workflow to work with 2.2, and it's now at a point where I think it's good enough to share. Hopefully it helps others in the single-consumer-grade-GPU bracket :)

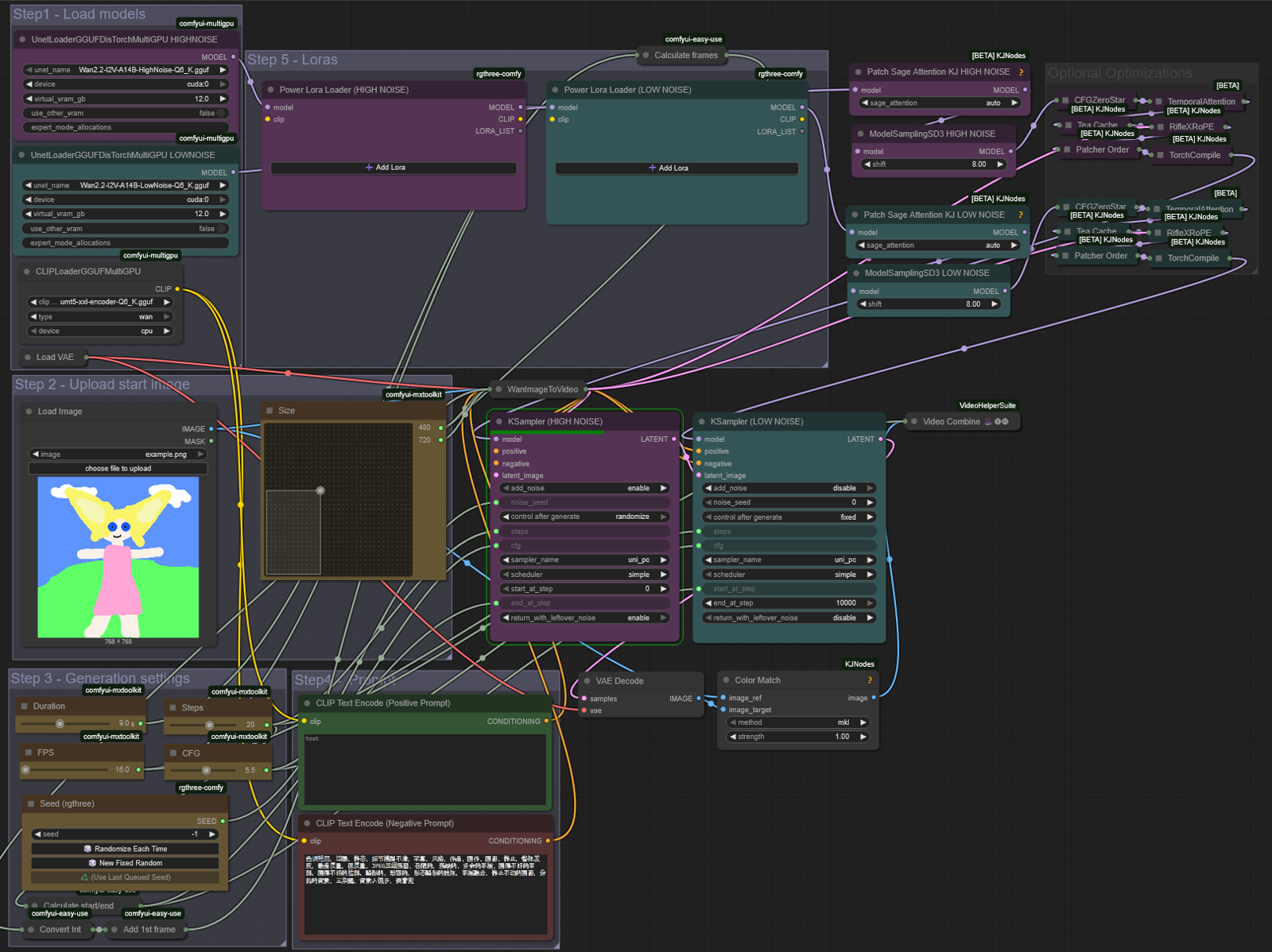

In this workflow, the purple nodes are for the high-noise model and the teal nodes are for the low-noise model. I think it's pretty easy to plug and play, so give it a go and let me know if you run into any trouble.

(The optimizations in the top-right corner were very helpful for Wan 2.1 but don't seem to make as much of a difference in 2.2, so if you're having trouble with torch.compile or anything else in that group, you can probably just bypass the whole group.)

Models (\models\diffusion_models\)

HIGH NOISE: https://huggingface.co/QuantStack/Wan2.2-I2V-A14B-GGUF/tree/main/HighNoise

LOW NOISE: https://huggingface.co/QuantStack/Wan2.2-I2V-A14B-GGUF/tree/main/LowNoise

VAE (\models\vae\)

https://huggingface.co/QuantStack/Wan2.2-I2V-A14B-GGUF/tree/main/VAE

CLIP (\models\text_encoders\)

https://huggingface.co/city96/umt5-xxl-encoder-gguf/tree/main
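If the workflow complains about missing models, a quick sanity check is to confirm each of the folders above actually contains weight files. Here's a small sketch of that, assuming a standard ComfyUI folder layout and that the downloads are `.gguf` or `.safetensors` files; the function name and the "at least two diffusion models" rule (one high-noise, one low-noise) are my own illustrative choices, not part of the workflow.

```python
from pathlib import Path

# Assumed layout: (folder relative to the ComfyUI root, minimum weight files expected).
EXPECTED = {
    "diffusion models (high + low noise)": (Path("models") / "diffusion_models", 2),
    "VAE": (Path("models") / "vae", 1),
    "CLIP / text encoder": (Path("models") / "text_encoders", 1),
}

# Assumption: the downloaded weights use one of these extensions.
WEIGHT_SUFFIXES = {".gguf", ".safetensors"}


def missing_components(comfy_root: Path) -> list[str]:
    """Hypothetical helper: list components whose folder is missing
    or holds fewer weight files than expected."""
    missing = []
    for name, (rel, min_files) in EXPECTED.items():
        folder = comfy_root / rel
        count = 0
        if folder.is_dir():
            count = sum(
                1 for p in folder.iterdir()
                if p.is_file() and p.suffix.lower() in WEIGHT_SUFFIXES
            )
        if count < min_files:
            missing.append(name)
    return missing


if __name__ == "__main__":
    import sys

    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    problems = missing_components(root)
    print("All model folders look populated." if not problems
          else "Check these folders: " + ", ".join(problems))
```

Run it with your ComfyUI folder as the argument (e.g. `python check_models.py C:\ComfyUI`). It only checks that files exist, not that they're the right quant or version.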