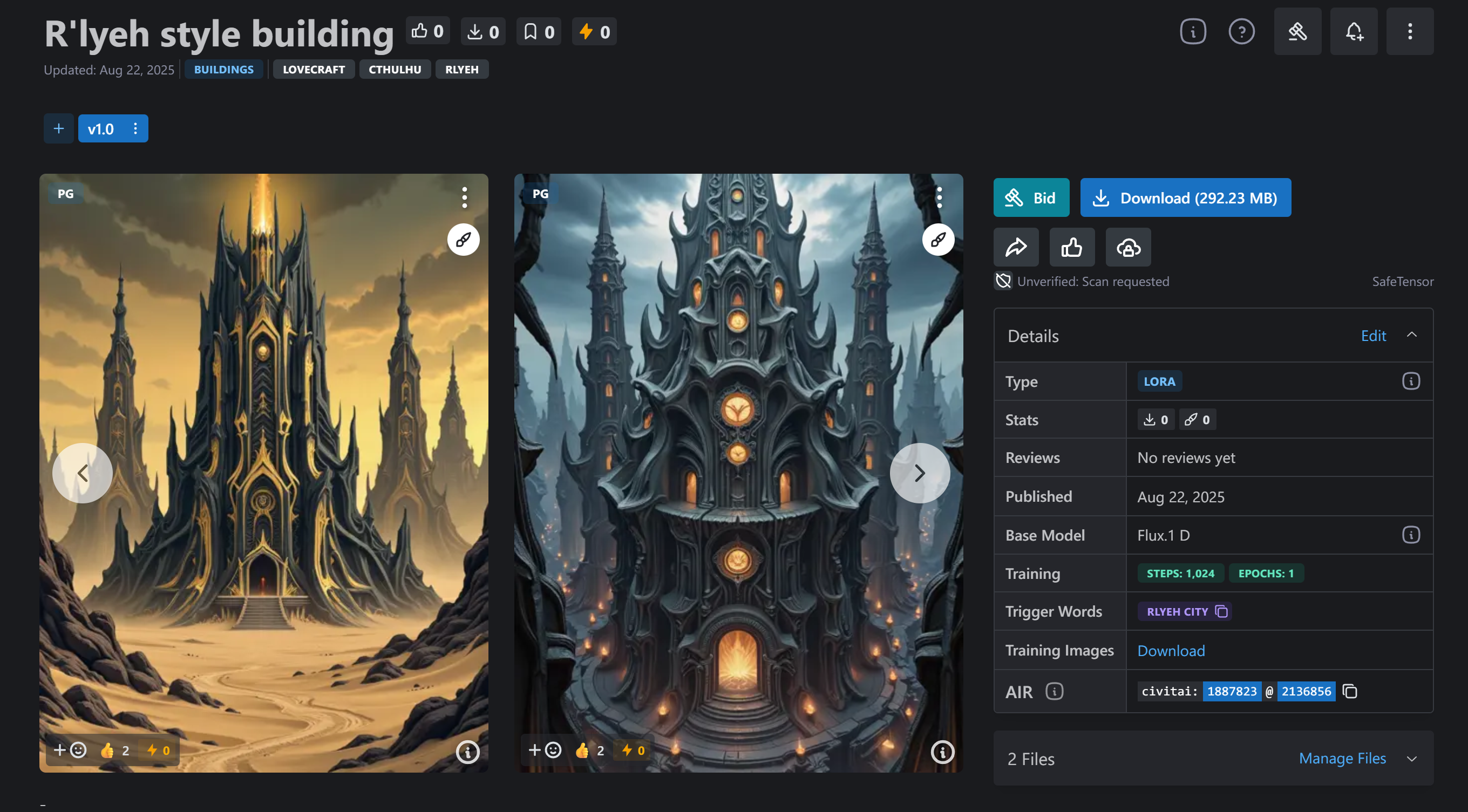

Recently, I've been learning how to train FLUX LoRAs. Because my own training skills are limited, my attempts often fail. So I frequently download, test, and analyze the training parameters of excellent community models, and I have learned a great deal from them.

However, I have also come across many overfitted LoRAs. From a purely functional perspective, these LoRAs can work very well in certain scenarios, but the trade-off is extremely poor generalization. For single-purpose use cases, they are often good enough. Below, we will reconstruct the method used to create them.

I. Theme Selection

This step matters because this brute-force training method only suits specific themes. The first group is a single object, or a style built around a foreground subject, such as a specific character, an outfit, or a simple object. The second group is themes with a strong stylistic signature, such as manga, illustration, or a particular visual style, where the strength of the style can mask the overfitting. Avoid complex, high-dimensional subjects.

II. Dataset Image Preparation

Open two browser tabs: Pinterest and Google Images. Then start copying and pasting images, and rely heavily on their similar-image search. The more similar the images are, the better. Ideally, all of them come from the same creator and share a consistent style, lighting, and background. At this stage, 20-50 images are enough. Crop all images to a single resolution with a GUI tool, a Python script, or ffmpeg. This reduces the number of aspect-ratio buckets, which can speed up training.
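The sketch below illustrates the Python-script route. It center-crops each image to a square and resizes it to 1024x1024, so every image lands in a single bucket. It makes three assumptions:

- Pillow is installed.
- A centered square crop keeps the subject in frame.
- The function names and folder paths are placeholders. This is not a Kohya SS tool.

```python
from pathlib import Path

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}


def center_square_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Return the (left, top, right, bottom) box of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def crop_folder(src: str, dst: str, size: int = 1024) -> int:
    """Center-crop every image in `src` to a square, resize to size x size, save to `dst`.

    Requires Pillow (pip install pillow). Returns the number of images written.
    """
    from PIL import Image  # imported lazily so center_square_box works without Pillow

    out_dir = Path(dst)
    out_dir.mkdir(parents=True, exist_ok=True)
    count = 0
    for path in sorted(Path(src).iterdir()):
        if path.suffix.lower() not in IMAGE_EXTS:
            continue  # skip caption files and anything else that is not an image
        with Image.open(path) as img:
            rgb = img.convert("RGB")
            square = rgb.crop(center_square_box(*rgb.size))
            square = square.resize((size, size), Image.LANCZOS)
            square.save(out_dir / f"{path.stem}.png")
        count += 1
    return count
```

Usage would look like `crop_folder("raw_images", "cropped")`, where both folder names are hypothetical. If a subject sits near the edge of a wide or tall image, crop that image by hand instead.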

III. Tagging

This part needs no special care. Kohya SS's built-in WD14 tagger is enough. Its tags are booru-style and sometimes inaccurate, but it works well. You don't even need to set a specific trigger word (I'll explain why later).
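After tagging, it is worth checking that every image actually received a caption file. The sketch below makes two assumptions:

- Each caption is saved next to its image with the same file name and a `.txt` extension. This is the usual default, but the extension is configurable.
- The tags are comma-separated.

The helper names are hypothetical.

```python
from pathlib import Path

IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".webp"}


def missing_captions(folder: str, caption_ext: str = ".txt") -> list[str]:
    """List image files in `folder` that have no matching, non-empty caption file."""
    missing = []
    for path in sorted(Path(folder).iterdir()):
        if path.suffix.lower() not in IMAGE_EXTS:
            continue
        caption = path.with_suffix(caption_ext)
        if not caption.exists() or not caption.read_text(encoding="utf-8").strip():
            missing.append(path.name)
    return missing


def split_tags(text: str) -> list[str]:
    """Split a comma-separated booru-style caption into individual, trimmed tags."""
    return [tag.strip() for tag in text.split(",") if tag.strip()]
```

An empty result from `missing_captions` means every image is paired with a caption. `split_tags` is handy for eyeballing which tags the tagger produced most often.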

IV. Training Parameter Settings - Kohya SS

Remember, our goal is "extreme" overfitting. Community users mainly ask two questions: "Does the character look like the original?" and "Is the style good?" (These criteria aren't inherently bad, but they can't be applied to every LoRA.) Therefore, the more closely a LoRA reproduces its dataset, the more successful it is considered to be.

For this purpose, we need to focus on the following key parameters:

Training Resolution: 1024x1024. This is crucial as it determines the level of detail the model can learn.

Image Count: 20-50. This quantity is sufficient for certain single objects and styles.

Image Repeats: 30-50. This forces the model to "memorize" the dataset in a short period. It ensures the model can reproduce all the details of the training images with extreme accuracy, satisfying the pursuit of visual perfection.

Number of Epochs: 1. We are not pursuing breadth (epochs) and generalization, but depth (repeats) and overfitting.

LR (Learning Rate): 0.0001

Dim/Alpha: 32/32. This ensures the model can learn fine details.

Total Steps: 1000-2000. The step count doesn't need to be very high. After all, the themes we're training are not complex; we just need to achieve overfitting.

timestep_sampling: shift. The difference between `raw` and `shift` sampling is that `raw` trains the model to be 'all-encompassing,' while `shift` trains it to be 'purposeful'.

In practice, for some simple, low-dimensional themes, a 512x512 training resolution and just a few hundred training steps are enough to cause overfitting, and overfitting is all that is needed.
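The step count follows from the other numbers. In Kohya SS, the repeat count is usually encoded in the dataset folder name (for example `30_mychar`). At batch size 1, each epoch is roughly images x repeats steps.

The sketch below does that arithmetic. It also counts image tokens per sample to compare 1024x1024 with 512x512. It makes these assumptions:

- The folder name `mychar` is hypothetical.
- Batch size is 1.
- FLUX uses 8x VAE downsampling plus 2x2 patch packing, so one token covers 16x16 pixels.
- Bucketing can shift the real step count slightly.

```python
import math
import re


def repeats_from_folder(name: str) -> int:
    """Read the repeat count from a Kohya-style folder name such as '30_mychar'."""
    match = re.match(r"(\d+)_", name)
    if match is None:
        raise ValueError(f"folder name {name!r} has no '<repeats>_' prefix")
    return int(match.group(1))


def total_steps(num_images: int, repeats: int, epochs: int = 1, batch_size: int = 1) -> int:
    """Approximate step count: (images x repeats) split into batches, once per epoch."""
    return math.ceil(num_images * repeats / batch_size) * epochs


def flux_image_tokens(width: int, height: int) -> int:
    """Image tokens per sample, assuming 8x VAE downsampling plus 2x2 patch packing."""
    return (width // 16) * (height // 16)


for num_images, folder in [(20, "30_mychar"), (20, "50_mychar"), (40, "50_mychar")]:
    reps = repeats_from_folder(folder)
    print(f"{num_images} images x {reps} repeats -> {total_steps(num_images, reps)} steps")

print(f"1024x1024: {flux_image_tokens(1024, 1024)} image tokens per sample")
print(f"512x512:   {flux_image_tokens(512, 512)} image tokens per sample")
```

At batch size 1, the image and repeat ranges above give these totals:

- 20 images at 50 repeats: 1000 steps.
- 40 images at 50 repeats: 2000 steps.
- 20 images at 30 repeats: only 600 steps.

Dropping from 1024x1024 to 512x512 cuts each sample from 4096 to 1024 image tokens, so every step is considerably cheaper.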

V. Publishing on Civitai

There are many tricks we need to pay attention to here.

First, select 6-7 good images from the training set. Run them through a captioning tool such as JoyCaption or Florence to get LLM-style prompts. Then use those prompts with your trained LoRA to generate the model's preview images.

The JoyCaption output (usually detailed sentences) describes the same content the model learned from the booru tags during training. It therefore strongly activates the heavily overfitted LoRA weights and produces a good result. This close match between "training input" and "generation input" lets the model create high-quality images that are almost identical to the training images, or that share their style and composition very closely. (Because of the overfitting, though, the preview images sometimes all look the same.)

The trigger word on Civitai can be anything since you didn't actually set one.

From the LLM-style output of JoyCaption/Florence, pick a few effective, general, and representative keywords and give them to users as suggested prompts. This works because those keywords are still highly correlated with what the model learned during training.
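Picking general, representative keywords can be partly automated. The sketch below drops a small stopword list, then counts how many captions each remaining word appears in, so words shared by most captions rise to the top. The stopword list and the sample captions are made up for illustration. Treat the result as a shortlist to review by hand, not a replacement for judgment.

```python
import re
from collections import Counter

# Hypothetical stopword list for illustration; extend it for real captions.
STOPWORDS = {
    "the", "and", "or", "of", "in", "on", "with", "to", "is", "are", "an",
    "her", "his", "its", "this", "that", "at", "by", "for", "from",
    "image", "shows", "features", "appears",
}


def top_keywords(captions: list[str], k: int = 10) -> list[tuple[str, int]]:
    """Rank words by how many captions contain them (ties broken alphabetically)."""
    counts = Counter()
    for caption in captions:
        # Words of two or more letters; each word counts once per caption.
        words = set(re.findall(r"[a-z][a-z'-]+", caption.lower()))
        counts.update(w for w in words if w not in STOPWORDS)
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:k]


# Made-up captions standing in for JoyCaption/Florence output.
captions = [
    "A watercolor illustration of a red fox sitting in a snowy forest.",
    "Watercolor painting of a red fox curled up in the snow.",
    "A soft watercolor illustration of a fox beside snowy pine trees.",
]

for word, n in top_keywords(captions, k=5):
    print(f"{word}: appears in {n} of {len(captions)} captions")
```

In this toy example, "fox" and "watercolor" appear in all three captions, so they would be the first candidates to list as suggested prompt keywords.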

VI. Summary

With this method, you don't need to:

- use inpainting to fix dataset details,
- watch loss charts,
- repeatedly compare sample images generated during training to check for overfitting,
- set different learning rates for different stages,
- spend time writing captions.

This method lets LoRAs be "produced" quickly and in batches. The process is even more convenient with a Pinterest scraping script, or if someone has already organized and packaged image sets for you.

If the LoRA's theme happens to be a strong style, certain methods can still achieve limited generalization even under this high-risk training strategy (which is a valid approach for some style LoRAs). Otherwise, this type of model is essentially a "dataset image copier."

This training strategy isn't inherently bad. The prompting methods described above are also a legitimate way to supply prompts once a LoRA is trained, and they work for specific LoRA themes. But if every LoRA, regardless of style or category, is trained this way, then the strategy is fundamentally a form of "cheating." It relies on overfitting and a precise input-output match to create the illusion that the model truly generalizes.