Mastering Prompting in Qwen-Image: A Plain-Language Guide to Reliable Image Generation

1. How Qwen-Image Understands Prompts

Qwen-Image is built with a powerful language-model backbone (Qwen2.5-VL) that interprets your prompt as natural language, not just as isolated keywords[1][2]. This means you can write prompts in full sentences or detailed phrases, and the model will grasp the context, relationships, and even layout cues from your description. Unlike older image generators that responded best to terse keyword lists, Qwen-Image understands structure and semantics in your request. It “reads” your prompt almost like a person would – considering the subject, actions, and modifiers – rather than simply counting keywords[2]. The training data for Qwen-Image included many well-written text-image pairs (e.g. photographs with descriptive captions, design layouts with annotations), which helps it follow detailed, natural prompts accurately[3]. In practice, this means you don’t need special prompt syntax or lots of | separators; just describe what you want in plain English (or Chinese) and Qwen-Image will do its best to fulfill it.

· Example Prompt: "A black cat sleeping on a sunlit balcony, photorealistic, morning light." – Uses a full sentence style to describe the subject (“a black cat”), setting (“on a sunlit balcony”), and style (“photorealistic, morning light”). Qwen-Image will understand this as one coherent scene, not just disconnected tags.

· Example Prompt: "Illustration of a wizard in a library, shelves of glowing books behind him, fantasy style." – This prompt reads naturally, and the model will interpret it accordingly (wizard as subject, library setting with glowing books, in a fantasy art style).

These examples show how writing a prompt as a descriptive sentence (with commas separating aspects) leverages Qwen-Image’s language understanding. The model can parse complex sentences and follow multi-part instructions better than most, even if you mention multiple elements or clauses[2]. In short, talk to Qwen-Image in normal language – it’s designed to follow along.

2. Writing Effective Prompts (Prompt Anatomy)

While there’s no one mandatory format for prompts, it helps to include key elements in a logical order. A simple prompt anatomy to consider is: Subject → Setting → Style → Text (optional) → Layout hints. By organizing your prompt roughly in this sequence, you ensure the model gets all the important details:

· Subject: Who or what is the image mainly about. (E.g. “Photo of a cyclist”, “Illustration of a wizard”, “A dragon flying over mountains”.)

· Setting/Context: Where or in what situation the subject is, including background elements or atmosphere. (E.g. “on a mountain road at sunrise, dramatic clouds in the background”, “in a library, shelves of glowing books behind him”.)

· Style/Genre: Any specific art style, genre, or visual tone. (E.g. “cinematic lighting”, “fantasy style”, “photorealistic”, “anime-style”, “in the style of Rembrandt”.)

· Text (optional): If you want written text to appear in the image, specify the exact words in quotes and where they go. (E.g. “bold headline at the top: 'SUMMER NIGHTS 2025'”, “a wooden sign that reads 'BAKERY & CAFE'”.)

· Layout hints: Any composition or positioning instructions to guide the model. (E.g. “central image of a crowd under fireworks”, “title text at the top, tagline at the bottom”, “two text fields labeled 'Email' and 'Password'”.)

Putting these together, you can craft a prompt that covers all aspects of the desired image. Qwen-Image’s understanding is robust, so you can be fairly specific. For instance, if you say “a portrait photo, head and shoulders centered, soft blurry background”, the model will interpret the composition hint (“head and shoulders centered”) and the depth-of-field hint (“soft blurry background”) appropriately. The order of information can matter: usually start with the main subject and scene so the model knows what the primary focus is, then add style or extra details. Avoid extremely long, rambling prompts beyond a few sentences. Qwen-Image can handle very lengthy descriptions (its context is large), but keeping prompts focused tends to yield more coherent results.

· Example Prompt: "Photo of a cyclist on a mountain road at sunrise, dramatic clouds in the background, cinematic lighting." – This prompt follows a clear structure: it introduces the subject (a cyclist), the setting (mountain road at sunrise with dramatic clouds), and the style (cinematic lighting). The model will likely produce a dramatic wide-shot photograph of a cyclist climbing a mountain road at dawn, with rich lighting and clouds, because the prompt cleanly provided those elements.

· Example Prompt: "Poster of a summer music festival, central image of a crowd under fireworks, bold headline at the top: 'SUMMER NIGHTS 2025'." – Here we specify a format (poster), the subject/scene (crowd under fireworks), a layout cue (headline text at the top) with exact text content. Qwen-Image will understand that the output should resemble an event poster: probably with the crowd and fireworks as the background image and the title text “SUMMER NIGHTS 2025” prominently at the top. Because we described it in one sentence, the model grasps it as a single concept (not separate unrelated pieces).
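The Subject → Setting → Style → Text → Layout order can be sketched as a small Python helper that assembles a prompt string. `build_prompt` and its parameters are hypothetical names for illustration, not part of any Qwen-Image API; the output is simply the text you would type into your generation tool.

```python
def build_prompt(subject: str, setting: str = "", style: str = "",
                 text: str = "", layout: str = "") -> str:
    """Assemble a prompt in Subject -> Setting -> Style -> Text -> Layout order.

    Hypothetical helper: empty parts are skipped, subject and setting form one
    phrase, and the remaining parts follow as comma-separated clauses.
    """
    # Subject and setting read as a single phrase ("Photo of a cyclist on a road").
    head = " ".join(p.strip() for p in (subject, setting) if p.strip())
    # Style, quoted in-image text, and layout hints follow in that order.
    clauses = [p.strip() for p in (head, style, text, layout) if p.strip()]
    # End with exactly one period so the prompt reads as a sentence.
    return ", ".join(clauses).rstrip(".") + "."


print(build_prompt(
    subject="Photo of a cyclist",
    setting="on a mountain road at sunrise, dramatic clouds in the background",
    style="cinematic lighting",
))
```

Running it prints the cyclist prompt from the first example above. Because empty parts are skipped, the same helper also works for short prompts that only name a subject and a style.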

In summary, think of writing a mini scene description. Be clear and concise about each aspect (subject, setting, style, text) in your prompt. Qwen-Image excels when you paint a picture with words in a structured way, rather than just throwing random keywords around.

3. Text-in-Image Prompting

One of Qwen-Image’s standout talents is generating readable text within images. It can produce English and Chinese text (and other languages to some extent) with surprising fidelity – a capability that sets it apart from most image models[4][5]. This means you can ask for signs, posters, book covers, UI labels, and more, and expect the words to actually be legible and correct in the image. The model doesn’t just slap text on top; it integrates the text naturally into the scene with appropriate font styling and perspective[5]. To leverage this:

· Always quote the exact text you want in the image and mention where or how it should appear. For example: “a wooden sign that reads 'BAKERY & CAFE', hanging above a shop door” or “title text at the top in gold letters: 'THE LAST FLIGHT'”. Quoting the text in your prompt leaves no ambiguity about what letters/words should be generated.

· Provide context for the text so the model knows it’s part of the scene. Say “a sign reads '...'” or “a magazine cover titled '...'” rather than just putting the text alone. Qwen-Image uses the context to place and style the text appropriately[2].

· You can mix languages in one image. For instance, Qwen-Image can do bilingual text (English and Chinese together) without issues – it was specifically trained for high-fidelity rendering in both alphabetic and logographic languages[4]. If you include, say, an English title and a Chinese subtitle in the prompt, it will attempt to render both in the image. Always specify where each goes (e.g. “English text at the top: 'Hello'; Chinese text at the bottom: '你好'”) for clarity.
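The three rules above (quote the exact words, give them a carrier in the scene, and say where each language goes) are mechanical enough to sketch in Python. The helpers `quote`, `text_element`, and `bilingual_caption` are hypothetical, and switching to double quotes when the text itself contains an apostrophe is our own convention, not something Qwen-Image is documented to require.

```python
def quote(text: str) -> str:
    """Wrap exact in-image text in quote characters it does not itself contain."""
    if "'" not in text:
        return f"'{text}'"
    if '"' not in text:
        return f'"{text}"'
    raise ValueError(f"text contains both quote characters: {text!r}")


def text_element(carrier: str, text: str, placement: str = "") -> str:
    """Give the text a carrier in the scene, e.g. a sign that reads '...'."""
    clause = f"{carrier} that reads {quote(text)}"
    return f"{clause}, {placement}" if placement else clause


def bilingual_caption(lines: list[tuple[str, str, str]]) -> str:
    """Join (language, placement, text) triples into one explicit instruction."""
    return "; ".join(f"{lang} text {place}: {quote(text)}" for lang, place, text in lines)


print(text_element("a wooden sign", "BAKERY & CAFE", "hanging above a shop door"))
print(bilingual_caption([("English", "at the top", "Hello"),
                         ("Chinese", "at the bottom", "你好")]))
```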

Figure: Qwen-Image can seamlessly render multiple lines of text in an image. In this example, the model produced a realistic bookstore window display with various textual elements. Notice the sign reading “New Arrivals This Week”, the poster advertising an event (“Author Meet And Greet on Saturday”), and the book titles on the shelf. Qwen-Image keeps the fonts consistent and the layout coherent, integrating the text as part of the scene rather than as random floating text.

To get the best results with text, it also helps to keep the text relatively short and use standard characters. Qwen-Image is excellent at multi-line text and even paragraphs[4][6], but very long blocks of text might become less accurate. If you need a long phrase or several sentences, consider breaking it into shorter chunks in the prompt (e.g. separate lines, each in its own quotes). The model’s specialized training (including high-resolution documents and designs) means it usually renders even small fonts clearly[6], something most image models struggle with. Still, a misspelling or an oddly shaped letter can occasionally appear; if you notice one, try re-running the generation or use the edit feature to correct it.
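One way to apply the chunking advice is to split a long phrase into short lines and quote each line separately. This sketch splits purely by word count, and `chunk_words`, `multiline_text_clause`, and the default `max_words=4` are hypothetical choices; splitting at natural phrase boundaries by hand will usually read better.

```python
def chunk_words(text: str, max_words: int = 4) -> list[str]:
    """Split a phrase into consecutive lines of at most `max_words` words."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def multiline_text_clause(text: str, max_words: int = 4) -> str:
    """Describe each chunk as its own quoted line, numbered from the top."""
    lines = chunk_words(text, max_words)
    return ", ".join(f"line {n}: '{line}'" for n, line in enumerate(lines, start=1))


print(multiline_text_clause("Author Meet And Greet on Saturday"))
```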

· Example Prompt: "A storefront with a wooden sign that reads 'BAKERY & CAFE', handwritten style, morning street scene." – This prompt tells the model explicitly that there’s a sign and what it says. We even added “handwritten style” to guide the font appearance. Qwen-Image will likely produce an image of a bakery café shop in morning light, with a rustic wooden sign above the door clearly showing “BAKERY & CAFE” in a script-like font, fitting the scene.

· Example Prompt: "A fantasy book cover: dragon flying over mountains, title at the top in gold letters: 'THE LAST FLIGHT', author name at the bottom." – Here we ask for a book cover layout. The model will attempt to draw a dragon in a fantasy style and place the title text “THE LAST FLIGHT” prominently at the top of the image in a gold, book-cover-appropriate font. Because “author name at the bottom” gives no actual name, the model will still try to put smaller text at the bottom, as typically seen on book covers, but it has to invent the wording, so the result may be a made-up or garbled name. To control it, add the exact name in quotes.

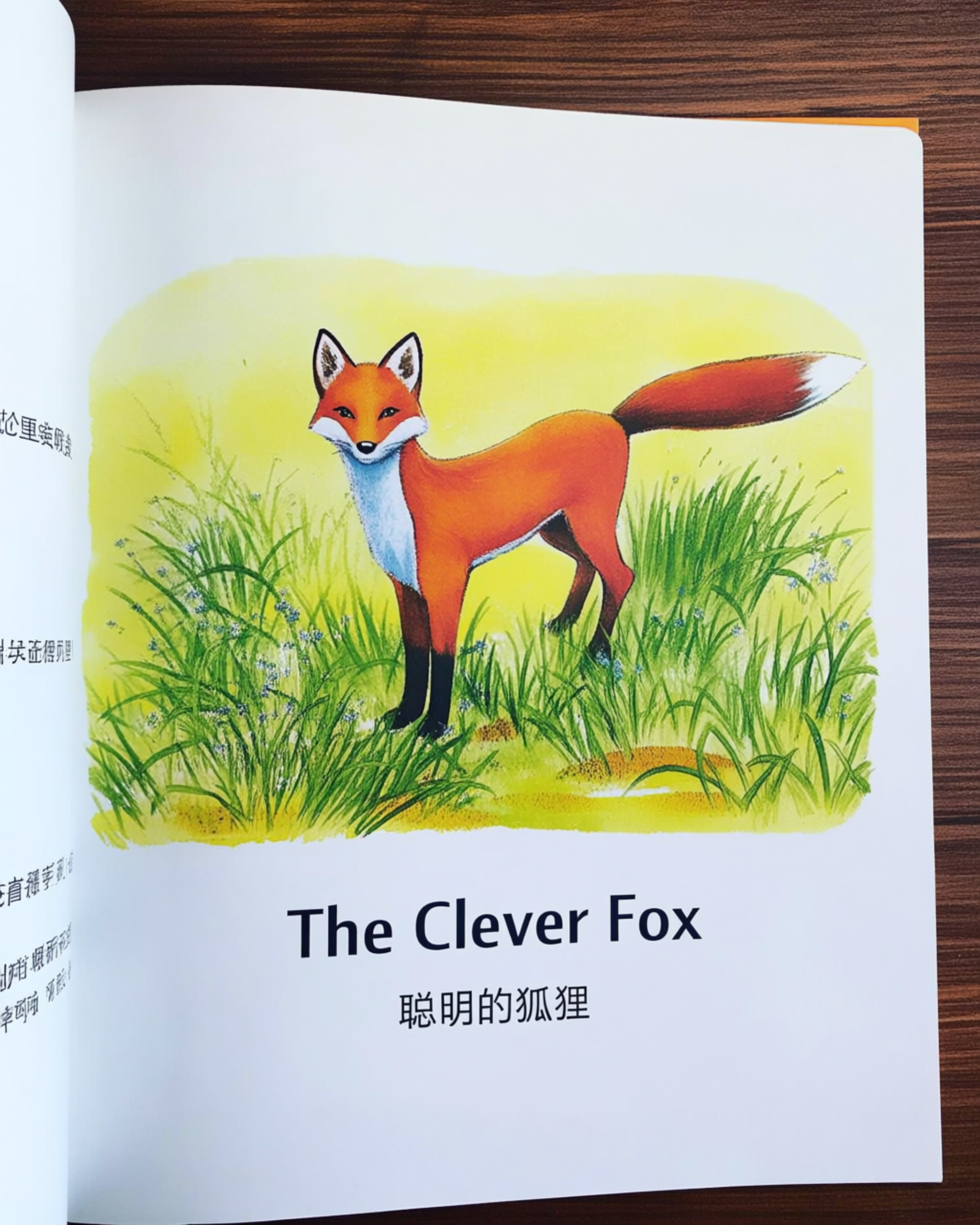

· Example Prompt (Bilingual): "Children’s storybook page with an illustration of a fox in a meadow. Text at the bottom: 'The Clever Fox' in English and '聪明的狐狸' in Chinese." – This prompt mixes languages. Qwen-Image can handle this: you’d likely get a cute illustration of a fox in a field, with the caption “The Clever Fox” at the bottom and the Chinese translation “聪明的狐狸” right below or above it. By specifying which text is in which language and where to put it, you help the model lay out both lines properly. The result should resemble a bilingual storybook page, with both English and Chinese text clearly readable.

Overall, be explicit about any text you want in the image. Qwen-Image is exceptionally good at putting your words into the picture (even complex ones like signs with prices, posters with dates, etc.), as long as it knows exactly what to write and where. This opens up possibilities for creating things like flyers, UI labels, fantasy scrolls, and educational infographics directly via text prompt – a capability that Qwen-Image delivers with stunning accuracy in typography and layout[5].

4. Editing Prompts

Qwen-Image isn’t only for generating images from scratch – it also has a powerful image editing mode (called Qwen-Image-Edit) to modify existing images[7]. You can feed in an image and a prompt describing changes, and the model will alter the image accordingly. The key to effective editing prompts is to clearly state what to keep and what to change. Qwen-Image’s editing understands instructions very well, especially when you phrase them as “Keep X, but change Y to Z.” The model was trained to preserve the original image’s content and style except where you specifically instruct an edit, so it excels at making targeted changes while leaving the rest untouched[8][9].

Here are some guidelines for editing prompts:

· Specify the elements that should remain unchanged. For example: “Keep the person and background” or “Keep everything the same except...”. This tells Qwen-Image-Edit to treat those parts as sacrosanct reference – it will do its best to not alter them beyond minor necessary adjustments.

· Describe the change or new element clearly. If you want to replace or add something, be direct: “Change the cat on the chair to a small brown dog.” Or “Add a red hat on the person’s head.” Or “Remove the text on the sign.” The model will interpret this as an editing instruction. Because Qwen-Image uses its language model to parse the prompt, it can follow even fairly complex edit instructions (like “flip the orientation of the car to face left” or “make the sky nighttime with stars”) with a good degree of understanding.

· Maintain context and style in your wording. If the image is a photo and you want the edit to blend in, you can mention style: e.g. “... in the same style” or “... realistic integration”. Qwen-Image strives to preserve visual realism and semantic consistency during edits[9], so usually you don’t have to explicitly say “make it look natural,” but it can help to reinforce if the edit is tricky.
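The “Keep X, but change Y to Z” pattern can be captured in a tiny helper that refuses to build an instruction unless both halves are present. `edit_prompt` is a hypothetical name; it only produces the instruction text that you would pair with your input image in whatever editing tool you use.

```python
def edit_prompt(keep: str, change: str) -> str:
    """Build a 'Keep X. Change Y.' instruction for an image-editing model."""
    keep = keep.strip().rstrip(".")
    change = change.strip().rstrip(".")
    if not keep or not change:
        raise ValueError("state both what to keep and what to change")
    # Capitalize the change so it reads as its own sentence.
    return f"Keep {keep}. {change[0].upper()}{change[1:]}."


print(edit_prompt("the person and background",
                  "change the cat on the chair to a small brown dog"))
```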

Qwen-Image’s editing capability is advanced: it can insert objects, remove objects, change colors or styles, and even edit text within images (like changing what a sign or poster says) while keeping the original font/style of that text[10]. It uses a dual mechanism under the hood (feeding the original image both into the Qwen2.5-VL language model for semantics and into the VAE encoder for appearance) to achieve this, which helps it maintain the identity of subjects and the overall realism even after multiple edits[11]. In practice, that means if you edit a picture of a person to change their clothes or background, Qwen-Image typically keeps the person’s face and identity consistent through the change, a notoriously hard task that this model handles better than most.

· Example Prompt (Editing): "Keep the person and background. Change the cat on the chair to a small brown dog." – If you supply an image that, say, shows a person and a cat on a chair, this instruction tells Qwen-Image-Edit exactly what to do. It will leave the person and the rest of the scene untouched, but where the cat was, it will generate a small brown dog in the same position. The new dog will be integrated to match the lighting and perspective of the original image. The output should look like the original photo never had a cat – it had a dog there from the start.

· Example Prompt (Editing): "Keep everything the same. Replace the poster text on the wall so it reads: 'Grand Opening – March 15'." – In this case, you might have an image of a store interior with a poster on the wall. This prompt tells the model not to alter anything except the text on that poster. Qwen-Image will edit the text on the poster to say “Grand Opening – March 15” while preserving the poster’s design, font style, and the wall around it. The result is precise text editing: the new text looks like it was originally part of the image[10].

· Example Prompt (Editing/Style Change): "Keep the composition the same. Make the same portrait but in the style of an oil painting, with warm brush strokes." – Here you provide an original image (perhaps a photographic portrait) and instruct Qwen to apply a style change. The model will aim to re-render the image as an oil painting, maintaining the person’s likeness and pose (composition remains) but altering the visual style to look like a classic painting with textured, warm-toned brush strokes. This showcases semantic preservation (same person, same pose) combined with appearance change (artistic style)[8]. Qwen-Image is particularly good at style transfer edits like this due to its training on multi-task editing.

When doing edits, be patient and specific. If the first edit isn’t perfect, you can chain another edit prompt on the output. Qwen-Image-Edit supports iterative editing: e.g., first “remove object A”, then on the result “now change background color”, etc. It was designed to maintain consistency across such iterations, especially for the main subjects[12][13]. Still, drastic multiple edits might eventually introduce some artifacts or drift (see Open Questions below), so it’s wise to check each step. Overall, Qwen-Image makes it possible to do complex image edits with plain language instructions – essentially doing Photoshop-level changes just by describing them.
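Iterative editing amounts to feeding each output back in as the next input and reviewing every step. The sketch below assumes you wrap your own Qwen-Image-Edit call in a function `edit_fn(image, prompt)`; that wrapper and the optional `check_fn` review hook are hypothetical stand-ins, so the control flow can be shown (and tested with plain strings) without a GPU.

```python
from typing import Callable, List, Optional, TypeVar

Img = TypeVar("Img")


def run_edit_chain(
    image: Img,
    prompts: List[str],
    edit_fn: Callable[[Img, str], Img],
    check_fn: Optional[Callable[[Img, str], bool]] = None,
) -> List[Img]:
    """Apply edit prompts one after another, keeping every intermediate result.

    `edit_fn` is a hypothetical wrapper around your editing model;
    `check_fn` is an optional per-step review hook.
    """
    history = [image]
    for prompt in prompts:
        image = edit_fn(image, prompt)  # each edit starts from the previous output
        if check_fn is not None and not check_fn(image, prompt):
            # Stop early so drift or artifacts don't compound over later steps.
            raise RuntimeError(f"edit step rejected: {prompt!r}")
        history.append(image)
    return history


steps = ["Remove the lamp.", "Make the sky nighttime with stars."]
print(run_edit_chain("photo", steps, lambda img, p: f"{img} | {p}")[-1])
```

Keeping the whole `history` list makes it easy to return to the last good step if a later edit starts to drift.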

5. Style & Control

One of the fun parts of prompting is guiding the artistic style of the output. Qwen-Image is a versatile model that supports a wide range of styles and genres – from ultra-realistic photography to anime illustrations, from classical paintings to surreal concept art[14]. To control style, you simply describe the style in your prompt, as one of the elements usually toward the end of the prompt (after describing the subject/scene). Here are some tips on style prompting:

· Use style adjectives or genre keywords. Words like “photorealistic”, “cinematic lighting”, “watercolor painting”, “comic-book style”, “dark fantasy art”, “minimalist vector graphic” etc., will influence the look of the image. Qwen-Image’s language understanding will associate those words with visual patterns (thanks to the large training set). For example, “photorealistic” will push it to generate something that looks like a real photo[14], whereas “anime-style” will yield a more illustrated, cel-shaded look.

· Refer to artists or specific styles if you want a very particular aesthetic. For instance, “in the style of Rembrandt” or “Surrealist painting in the style of Salvador Dalí” can make the output emulate those artists’ characteristics. Qwen-Image has been exposed to many art styles and can often capture the essence when an artist name or art movement is mentioned[14].

· Order can matter when stacking styles. If you include multiple style descriptors, the later ones often seem to dominate the final look, though this is not guaranteed. For example, prompting “a landscape, pencil sketch, vibrant oil painting” might lean more toward an oil painting because that came last. If you swapped the order (oil painting then pencil sketch), you might get a flatter, sketchier look. So list your most desired style last, check whether it actually dominates, and separate incompatible styles into different attempts. It’s generally best not to overload the prompt with too many style cues; pick a primary style or two that complement each other.

· Use negative prompts for unwanted styles or elements. Qwen-Image supports negative prompting (like Stable Diffusion does): a separate prompt that lists what you do not want. Write the unwanted things themselves rather than “no ...” phrases. For example, for a clean illustration you might use a negative prompt like “watermark, text” to avoid extraneous lettering, or “blurry” if you want sharp detail. This is an advanced control, but worth knowing it exists. Often Qwen-Image doesn’t need heavy negative prompting unless you see a specific artifact recurring.
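Two of these tips can be sketched as helpers: ordering style cues so the primary one comes last (following the heuristic above, which is not guaranteed to hold for every prompt), and turning “no X” phrases into the bare terms a negative prompt is usually written with. `style_clause`, `negative_prompt`, and the two-style cap are hypothetical choices for illustration.

```python
def style_clause(styles: list[str], primary: str, max_styles: int = 2) -> str:
    """Put the primary style last and cap the total number of style cues."""
    secondary = [s for s in styles if s != primary]
    # Keep at most (max_styles - 1) secondary cues, then the primary one.
    kept = secondary[: max(max_styles - 1, 0)] + [primary]
    return ", ".join(kept)


def negative_prompt(unwanted: list[str]) -> str:
    """Strip a leading 'no ' so each entry names the unwanted thing directly."""
    terms = []
    for item in unwanted:
        item = item.strip()
        if item.lower().startswith("no "):
            item = item[3:].strip()
        if item:
            terms.append(item)
    return ", ".join(terms)


print(style_clause(["vibrant oil painting", "pencil sketch"], primary="vibrant oil painting"))
print(negative_prompt(["no watermarks", "no text", "blurry"]))
```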

· Example Prompt: "Anime-style illustration of a futuristic city skyline at night, glowing neon signs." – This clearly asks for an anime-style image. The model will likely produce something reminiscent of a frame from a sci-fi anime: a city at night with stylized neon lights, possibly high contrast and clean lines, given the “illustration” cue. The term “anime-style” strongly influences the character design (if any) and overall look (expect bold colors, sharp outlines, etc.).

· Example Prompt: "Photorealistic portrait of a woman wearing traditional Renaissance clothing, painted in the style of Rembrandt." – Here we mixed descriptors: “Photorealistic portrait” and “painted in the style of Rembrandt.” This is a case of combining a general quality (photorealism) with a specific art style reference. The model will try to satisfy both – possibly yielding a very life-like face (photographic detail) but with Rembrandt-esque lighting and color tones (rich shadows, warm light) as if it were an oil painting. The mention of Rembrandt, being last, might give the image a strong classical painting vibe despite the word “photorealistic.” The result could look like an extremely detailed oil painting that almost feels like a photo.

· Example Prompt: "Surrealist painting of an apple floating in the sky, in the style of Salvador Dalí." – We explicitly indicate Surrealist genre and Dalí’s style, so Qwen-Image will likely lean into that (think The Persistence of Memory mood, or Magritte-like imagery). An apple floating in the sky is a bizarre concept that fits surrealism. The model should produce an artsy image with the apple perhaps casting a shadow on clouds, with Dalí’s melting or dreamlike visual touches. Style terms like “Surrealist” and “in the style of Dalí” will make the output quite different from what “apple floating in the sky” alone would give; they add a controlled weirdness and painterly quality.

In summary, include style instructions in your prompt to steer the image’s look. Qwen-Image is very good at adapting to different styles on the fly[14]. If one style isn’t coming out strongly enough, try emphasizing it (for example, “highly detailed watercolor” instead of just “watercolor”). And remember that because the model truly understands the prompt as language, you can be creative in describing style, even metaphorically. It might grasp phrases like “in dreamy, pastel tones” or “with the gritty realism of a 1970s documentary photo”. Don’t be afraid to experiment – Qwen-Image’s strength is in following your described vision as closely as it can.

6. Resolution & Aspect Ratios

When it comes to image size, Qwen-Image gives you a lot of flexibility. It can generate images at very high resolutions – up to around 3584×3584 pixels (roughly 12.8 million pixels) in a single pass[15]. This is substantially larger than many earlier text-to-image models which often max out around 1024×1024. In practical terms, you can get print-quality or presentation-ready images directly, without needing to upscale afterwards. However, there are some considerations and best practices for using different resolutions and aspect ratios:

· Start with a smaller draft, then go larger. High resolutions mean more computation and sometimes different compositions. It’s often efficient to generate a quick preview at, say, 512×512 or 1024×1024 to make sure the content and composition look good. Then, once you’re happy, you can request a larger version (2048 or 3584 px square, for example) using the same prompt (and the same seed, if your tool exposes one) for a high-detail result. Expect the subject, style, and overall look to carry over, but not necessarily the exact composition, because the starting noise changes with the image size. Since Qwen-Image was trained on a lot of high-resolution imagery, it tends to fill in details nicely at larger sizes.

· Use aspect ratio presets or grid-aligned dimensions. The model accepts custom width and height, not just square. Common aspect ratios like 16:9, 3:4, etc., are supported; you just provide the desired width and height. The Qwen team suggests using dimensions that are multiples of certain values, because the underlying architecture works with grids of pixels. For simplicity, stick to standard resolutions, for example 1024×1024, 1280×720 (exactly 16:9), or 1344×1792 (exactly 3:4). In code examples, they use specific sizes like 1664×928 for 16:9[16]. You don’t have to calculate these exactly; just know that if you pick something like 1600×900 the model will auto-adjust to the nearest valid dimensions internally.

· Remember the maximum size. If you request something above ~3584 in either dimension, the system will likely downscale it to the max it supports[15]. Also extremely small images below ~56×56 are not allowed (not that you’d usually go that low). So keep within the range. If you need a giant poster beyond 3584px, you might generate in tiles or use an external upscaler on Qwen’s output.
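The size adjustment described above can be approximated in a few lines, which is handy for checking dimensions before you generate. This is a sketch under stated assumptions: the 16-pixel step (`multiple=16`) is our assumption about the grid the pipeline rounds to, the 56 px minimum and 3584 px maximum are taken from the limits above, and `snap`, `snap_dimensions`, and `dims_for_ratio` are hypothetical helpers; your tool may apply different rules.

```python
def snap(value: int, multiple: int = 16, min_side: int = 56, max_side: int = 3584) -> int:
    """Round one side to the nearest multiple (halves round up), then clamp."""
    if multiple < 1:
        raise ValueError("multiple must be positive")
    rounded = (value + multiple // 2) // multiple * multiple
    lower = -(-min_side // multiple) * multiple  # smallest multiple >= min_side
    upper = max_side // multiple * multiple      # largest multiple <= max_side
    return min(max(rounded, lower), upper)


def snap_dimensions(width: int, height: int, **limits: int) -> tuple[int, int]:
    """Apply `snap` to both sides with the same limits."""
    return snap(width, **limits), snap(height, **limits)


def dims_for_ratio(ratio_w: int, ratio_h: int, long_side: int, **limits: int) -> tuple[int, int]:
    """Width and height for an aspect ratio, with the longer side near `long_side`."""
    if ratio_w >= ratio_h:
        width, height = long_side, long_side * ratio_h / ratio_w
    else:
        width, height = long_side * ratio_w / ratio_h, long_side
    return snap_dimensions(round(width), round(height), **limits)


print(snap_dimensions(1600, 900))
print(dims_for_ratio(3, 4, 1792))
```

With these defaults, 1600×900 snaps to 1600×896, 1664×928 is left unchanged, and a 3:4 frame with a 1792 px long side comes out as 1344×1792.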

As a rule of thumb, 1024×1024 is a good starting point for many prompts: it’s fast and detailed. For wide or tall compositions (like a landscape banner or a portrait-orientation book cover), you can use 16:9, 9:16, etc. Qwen-Image was trained on various aspect ratios (its dataset included a lot of design content, such as posters and slides, that isn’t square[17]), so it usually handles framing well. Just be aware that extremely panoramic or unusual ratios might crop some content oddly; you might need to tweak the prompt to ensure important subjects appear fully in frame.

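· Example Prompt (Draft size): "Illustration of a cozy kitchen interior, warm lighting." – Generate this with the width and height settings at 1024 for a square draft. In most tools the resolution comes from those settings rather than from the prompt text, so writing “1024×1024” into the prompt itself does not set the size. Qwen-Image will produce a reasonably detailed square illustration. If the result looks good, keep the prompt as-is and simply raise the width and height for the final render.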

· Example Prompt (High-res final): "Poster of a concert stage at night, title text 'LIVE IN TOKYO 2025' across the top." – With the width and height settings at 3584, the maximum resolution, the model will generate a very detailed poster image, likely with crisp text “LIVE IN TOKYO 2025” at the top and plenty of clarity in the crowd, stage, and lighting. This could be print-ready. It will take longer to generate than a smaller image, of course. If it’s too slow or if you run into memory issues, try a slightly lower size (e.g. 2048×2048, which is still quite high). But assuming resources allow, Qwen-Image can directly produce the final high-res artwork.

In short, use resolution strategically: low-res for speed and testing, high-res for final output. Qwen-Image’s ability to go large is a big advantage for professional use cases (no need for separate upscaling software in many cases). Just keep in mind the limits and the fact that the composition can shift at a different size or aspect ratio. If something is off in a high-res image that looked fine in low-res (or vice versa), you might adjust the prompt or try a different random seed. Often, though, the larger render keeps the same overall look with more detail and sharper text.
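The draft-then-final workflow boils down to changing only the size settings while reusing the prompt and seed. The `GenerationSettings` dataclass and `draft_and_final` helper below are hypothetical; the field names mirror arguments that diffusers pipelines commonly accept (`prompt`, `width`, `height`, plus a seeded generator), but check what your own tool calls them. A fixed seed makes results repeatable at a given size; it does not guarantee the same composition at a new size.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical bundle of generation parameters (field names are assumptions)."""
    prompt: str
    width: int
    height: int
    seed: int


def draft_and_final(prompt: str, seed: int, draft_side: int = 1024,
                    final_side: int = 2048) -> tuple[GenerationSettings, GenerationSettings]:
    """Return a square draft and a square final render that differ only in size."""
    draft = GenerationSettings(prompt=prompt, width=draft_side, height=draft_side, seed=seed)
    # The resolution lives in the settings; the prompt text is reused unchanged.
    final = replace(draft, width=final_side, height=final_side)
    return draft, final


draft, final = draft_and_final("Illustration of a cozy kitchen interior, warm lighting.", seed=42)
print(draft)
print(final)
```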

7. Prompt Patterns and Use Cases

Now that we’ve covered general techniques, let’s look at specific creative use cases and how to prompt for them. Qwen-Image is capable of handling quite diverse tasks – from making professional posters with formatted text, to mocking up user interfaces, to generating game assets like character sheets, to producing storybook pages with illustrations and captions. Below we explore each with examples and tips:

Poster and Advertisement Prompts

Creating posters, flyers, or ads is an area where Qwen-Image shines, thanks to its strength in text rendering and layout understanding. When prompting for a poster or ad:

· Make sure to specify it is a poster/flyer (this cues the model to expect a designed layout).

· Include the main image or theme of the poster, and any headline or tagline text with placement hints (e.g. title at top, tagline at bottom).

· Mention style or mood to match the event or topic (e.g. a horror movie poster vs. a cheerful fair flyer will be very different in tone).

Figure: Qwen-Image can produce high-quality poster-style images with crisp text and well-composed design elements. In this example poster (for an imaginary art gallery exhibition), the model has accurately placed a title “CHROMATIC REALMS” at the top in bold font, included event details in smaller text below, and even an artist’s name in script at the bottom, all integrated over an abstract painted background. This demonstrates Qwen-Image’s layout skills – it understood the prompt’s instructions for multiple text fields and arranged them in a visually pleasing way.

For a poster, it’s helpful to think of the visual hierarchy: what’s the eye-catching element, what’s the title, what supplementary info is included. You can describe that hierarchy in the prompt. Qwen-Image will attempt to honor it by sizing and placing elements accordingly (for example, making the title text larger than a tagline). It uses its internal spatial modeling to align text and imagery as instructed[2][18].

· Prompt Example (Movie Poster): "Movie poster of a detective standing under a streetlamp, foggy night. Title text at the top: 'SHADOW CITY'." – This prompt defines a genre (film noir detective poster) and gives a clear scene plus a title. The model will likely produce a cinematic composition: the detective in trench coat under a lamppost in misty darkness, and at the top, the title “SHADOW CITY” in an appropriate font (maybe stark, bold letters). Because we said “movie poster,” Qwen might also include subtle details like credit text at the bottom or a tagline automatically, but if you want those you could explicitly add them. In any case, you should get a very polished-looking poster where the text is nicely integrated with the artwork.

· Prompt Example (Event Flyer): "Event flyer: colorful background of balloons, central headline 'SUMMER FAIR', small tagline at the bottom: 'July 20 – Riverside Park'." – Here we call it a flyer (which implies a certain layout style, often letter or A4 size). We describe a festive background (balloons, colorful) and we specify the main headline text and a smaller tagline with date/location. Qwen-Image will try to generate a vibrant, cheerful design – probably with balloons and confetti in the image – with “SUMMER FAIR” in big letters in the center or top, and the date/place “July 20 – Riverside Park” in a smaller font at the bottom. The result should look like a real flyer you could print out for a community event, demonstrating Qwen’s knack for combining graphics with text in a design-savvy way.

Tip: If you have specific color schemes or graphic styles in mind for a poster, include them in the prompt (e.g. “retro 80s style neon colors” or “minimalist design with lots of white space”). Qwen-Image will incorporate those into the design. And don’t forget you can ask for things like “with a blank area for QR code” or “with space for sponsors’ logos at the bottom” if you need them. The model is unlikely to generate real QR codes or logos (especially if none are provided), but it will usually leave appropriate space or placeholder elements that you can customize later.
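The visual-hierarchy idea can be encoded by listing text elements from most to least prominent. `poster_prompt` is a hypothetical helper; the element descriptions (such as “title text at the top”) are still ordinary prose that the model interprets, so the code only guarantees the ordering and the exact quoted wording.

```python
def poster_prompt(kind: str, visual: str, elements: list[tuple[str, str]]) -> str:
    """Describe the main visual, then text elements from most to least prominent.

    Each element is (description, exact_text), e.g. ("title text at the top", "SHADOW CITY").
    """
    prompt = f"{kind} of {visual}."
    if not elements:
        return prompt
    text = ", ".join(f"{desc}: '{words}'" for desc, words in elements)
    # Start the text sentence with a capital letter.
    return f"{prompt} {text[0].upper()}{text[1:]}."


print(poster_prompt("Movie poster", "a detective standing under a streetlamp, foggy night",
                    [("title text at the top", "SHADOW CITY")]))
```

Running it reproduces the movie-poster prompt above; adding more `(description, text)` pairs appends smaller elements such as a tagline or date line after the title.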

UI Mockup Prompts

Qwen-Image can even be used to generate user interface mockups or app screen designs from a prompt. This is a more niche use, but thanks to the model’s training on design and slide layouts[17] and its spatial reasoning, it handles structured UI elements decently. When prompting for a UI:

· Be explicit about the type of interface (mobile app screen, website layout, dashboard, etc.).

· List the key UI components and any text on them. For example, mention headers, buttons, icons, fields by name.

· It helps to use words like “screen” or “UI design” so the model knows you want a flat design-ish image rather than a 3D scene.

Keep in mind that Qwen-Image will generate a static image of the UI – it’s great for concept visuals but it won’t produce functional code (of course). It’s more like asking a designer to sketch a prototype based on your description.

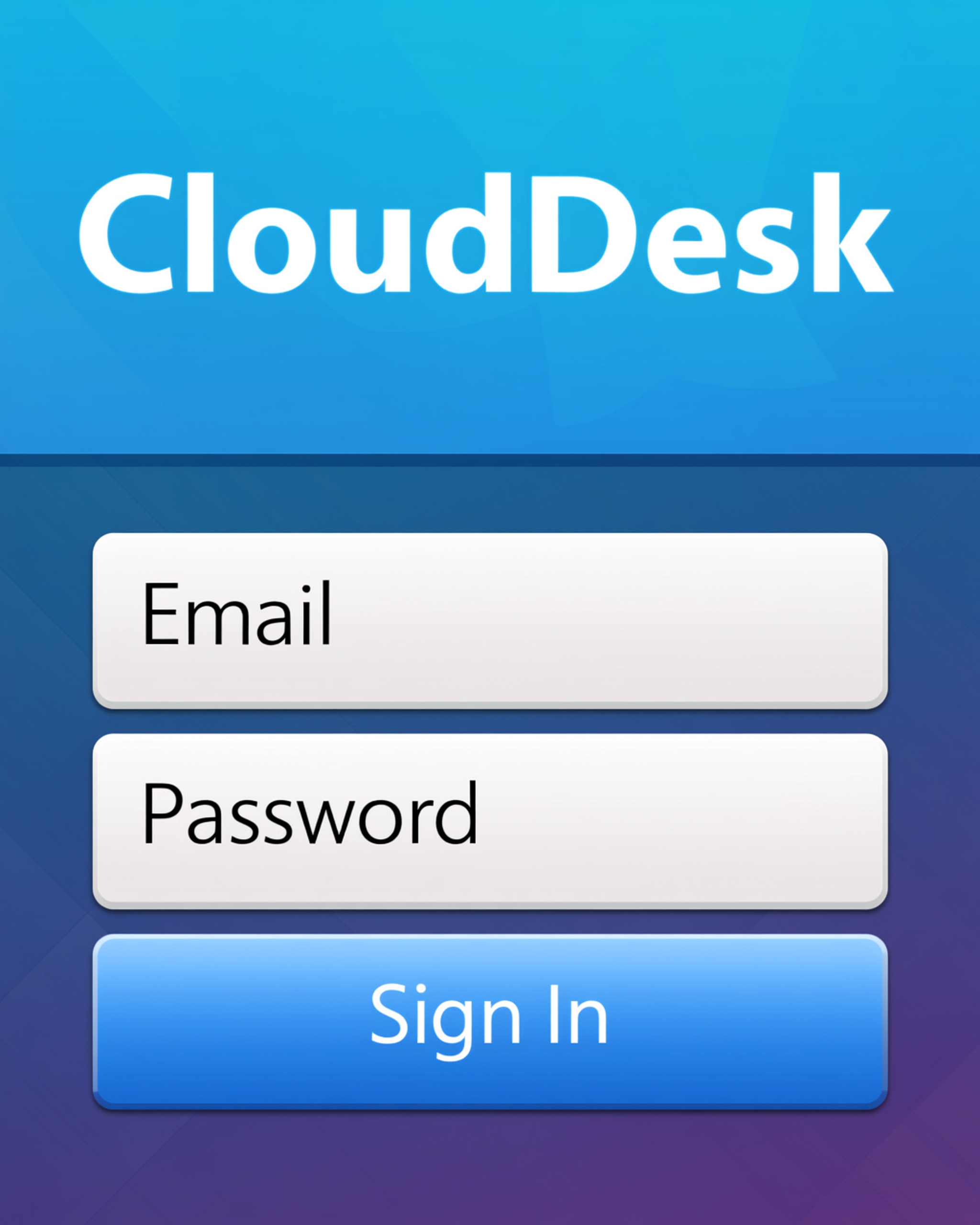

· Prompt Example: "Design a modern login screen with app name at the top: 'CloudDesk', two text fields labeled 'Email' and 'Password', and a button labeled 'Sign In'." – This prompt reads like requirements for a login UI. The model will likely imagine a clean login form: perhaps a centered layout on a background, the app name “CloudDesk” at the top (maybe stylized as a logo or just text), two input boxes labeled “Email” and “Password” (it might even render placeholder text inside them), and a prominent “Sign In” button. The word “modern” implies it might use flat design, soft colors, etc. The result would be an image that looks like a screenshot of a login page. Qwen’s understanding of UI structure plus its text rendering means the words on the fields and button should be correct and legible. This showcases the model’s capability in non-photographic, structured outputs[18].

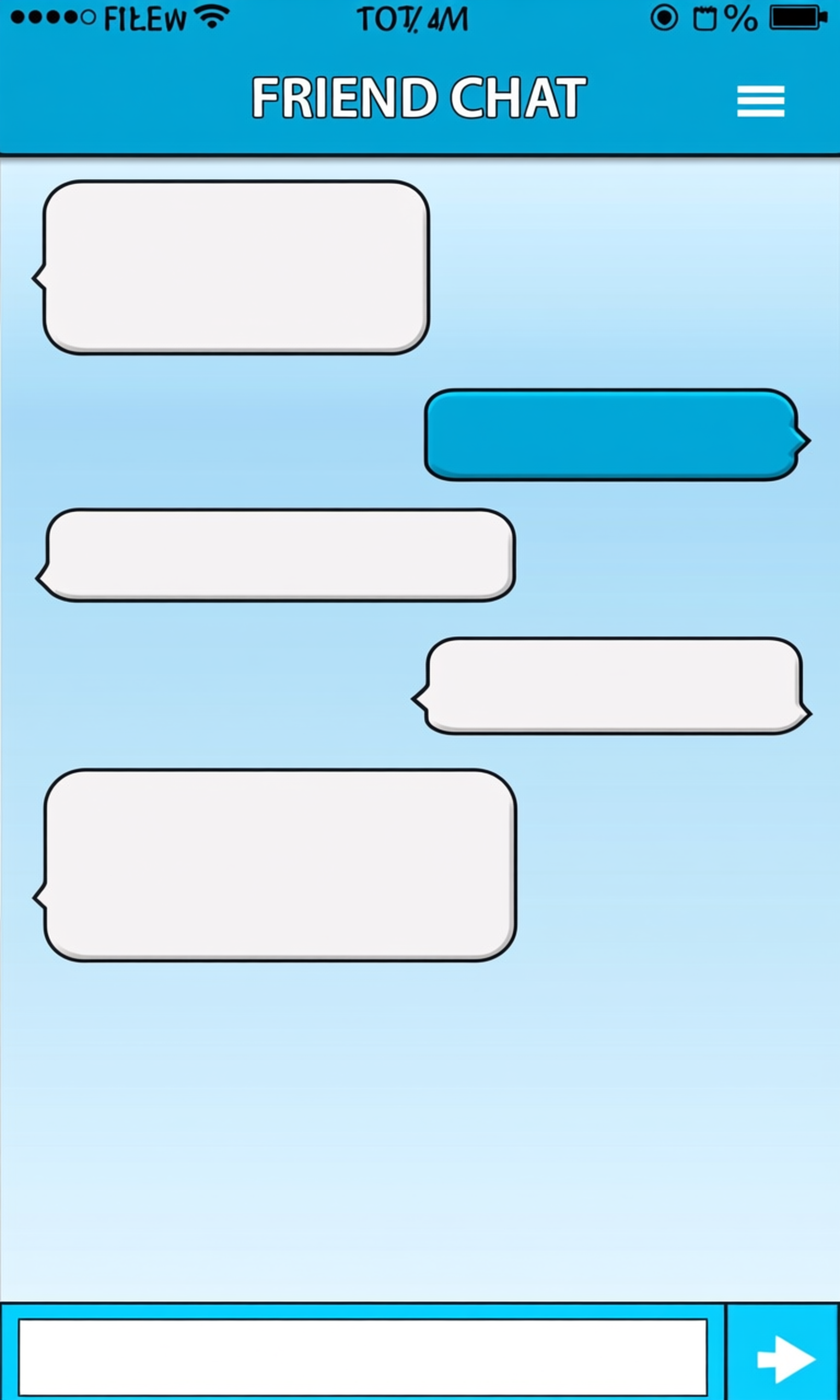

· Prompt Example: "Mobile app interface mockup: chat window with messages, title bar reading 'FRIEND CHAT', input box at bottom." – In this prompt we target a mobile chat app design. By saying “mobile app interface mockup,” we set the expectation it should resemble a smartphone screen. We describe a chat window (so it should have message bubbles or a conversation), and we explicitly state the title bar text (“FRIEND CHAT”) and that there’s an input box at the bottom (where you type new messages). Qwen-Image will attempt to draw something akin to a messaging app. You might get a phone-like frame or at least a rectangular screen with a top bar labeled “FRIEND CHAT,” some dummy chat bubbles (likely with short lorem ipsum or random text, or maybe even coherent short messages since it can generate text), and a text entry box above the on-screen keyboard. The visual might not be pixel-perfect as a real app, but it should convey the concept accurately, which is excellent for early-stage UI brainstorming or illustration purposes.

Because Qwen-Image’s training data included slides and possibly GUI-like images, it knows about common interface elements (buttons, icons, menus). Its positional encoding scheme (MSRoPE) is also credited with helping it arrange items as instructed[18]. That said, complex UIs with many small text elements may show gibberish filler text in minor areas, which is normal for AI-generated images. For critical text such as titles or labels, always specify it exactly, as in the examples above. If you need a very sharp UI image, generating at a higher resolution improves the clarity of small text: you could render a low-resolution draft to confirm the layout, then regenerate at a higher resolution (or upscale) to get crisp lettering.
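For the draft-then-refine workflow, it helps to pick both sizes up front so the draft and the final image share the same aspect ratio. The helper below is a minimal sketch under stated assumptions: the pixel budgets (0.25 and 1.5 megapixels) are illustrative, and rounding each side to a multiple of 16 is a conservative choice common for latent diffusion models, not a documented Qwen-Image requirement. Check what width and height values your pipeline actually accepts.

```python
import math


def size_for(aspect_w: int, aspect_h: int, megapixels: float, multiple: int = 16) -> tuple[int, int]:
    """Return (width, height) with roughly the given aspect ratio and pixel count.

    Each side is rounded to a multiple of `multiple`; 16 is an assumed,
    conservative choice, not a documented Qwen-Image requirement.
    """
    target_pixels = megapixels * 1_000_000
    height = math.sqrt(target_pixels * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h

    def snap(x: float) -> int:
        return max(multiple, round(x / multiple) * multiple)

    return snap(width), snap(height)


# Illustrative budgets: a cheap portrait draft to check the layout,
# then a larger pass so small UI text has more pixels to work with.
draft = size_for(9, 16, 0.25)
final = size_for(9, 16, 1.5)
print(draft, final)
```

For a 9:16 phone mockup this gives a draft of 368×672 and a final size of 912×1632; rounding shifts the ratio slightly, which matters little for layout checks.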

RPG Character Sheet or Trading Card Prompts

If you’re a game designer or hobbyist, Qwen-Image can assist in creating things like fantasy character sheets or trading card images, complete with both artwork and stats text. The key here is to combine an illustration with formatted text in your prompt:

· Mention the visual theme or character for the image portion.
· Mention the format (character sheet, card) so the model expects some text fields or tables.
· Provide the exact stats or text you want displayed, and where (e.g. “on the side” or “below the image”).

The model will try to produce a layout that matches a typical character sheet or card: usually an image plus a text block. It might even add decorative borders or icons if the prompt suggests a themed design.
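The three ingredients above (format, visual, exact text plus placement) map naturally onto a small record. The sketch below is hypothetical (`GameAssetSpec` is an illustrative name, not part of any Qwen-Image tooling); it assembles the same kind of prompt used in the character sheet example that follows.

```python
from dataclasses import dataclass


@dataclass
class GameAssetSpec:
    """Hypothetical container for the ingredients of a game-asset prompt."""

    fmt: str          # the format, e.g. "Fantasy character sheet" or "Trading card design"
    visual: str       # the illustration part
    text: str         # exact text to render
    placement: str    # where the text goes, e.g. "on the side" or "below the image"
    extras: str = ""  # optional styling, e.g. "parchment background"

    def to_prompt(self) -> str:
        pieces = [f"{self.fmt}: {self.visual}"]
        if self.extras:
            pieces.append(self.extras)
        # The exact text is quoted so it is clearly separated from the description.
        pieces.append(f"text block {self.placement}: '{self.text}'")
        return ", ".join(pieces) + "."


sheet = GameAssetSpec(
    fmt="Fantasy character sheet",
    visual="portrait of an elf ranger",
    text="Strength 12, Agility 18, Wisdom 14",
    placement="on the side",
    extras="parchment background",
)
print(sheet.to_prompt())
```

Keeping the stats in a separate field makes it easy to swap in a new character while leaving the format and styling untouched.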

· Prompt Example (Character Sheet): "Fantasy character sheet: portrait of an elf ranger, parchment background, text block on the side: 'Strength 12, Agility 18, Wisdom 14'." – Here we ask for a character sheet style image. We specify a portrait of an elf ranger as the visual focus and a parchment background to give it that old-timey RPG document feel. Then we explicitly say there’s a text block with some stats. Qwen-Image will likely produce an image where the elf ranger’s portrait is prominent (maybe on one side) and next to it (on the side, as instructed) it will write the stats Strength 12, Agility 18, Wisdom 14. The parchment background and overall composition should resemble a page from a role-playing game manual or a D&D character sheet, possibly even with lines or a fancy border. The text for the stats should be clear – the numbers and words should come out exactly as given (Qwen is good at copying short text sequences like that). If you provided more stats or a list, it could list them vertically or in a decorative box.

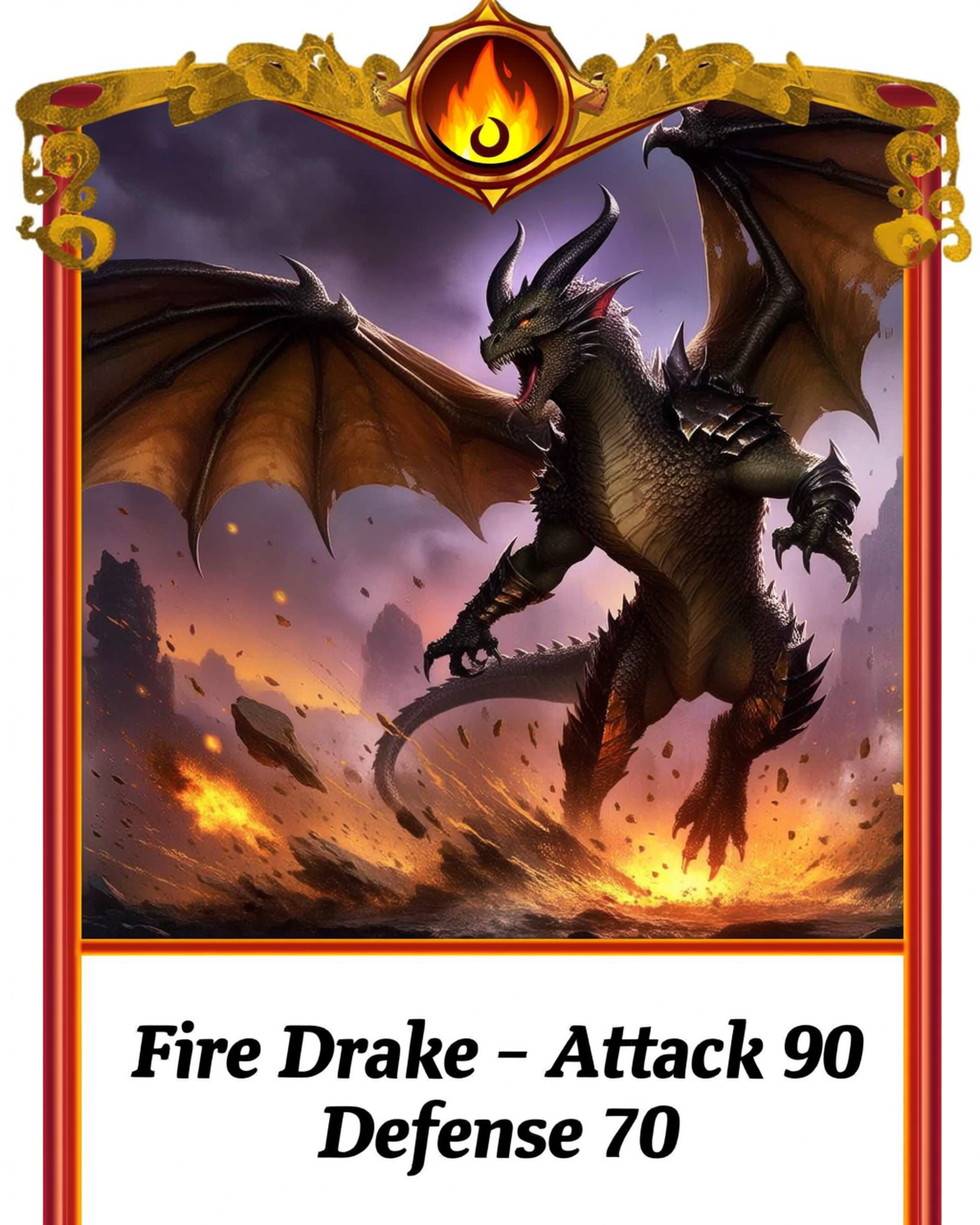

· Prompt Example (Trading Card): "Trading card design: image of a dragon in battle, text below the image: 'Fire Drake – Attack 90, Defense 70'." – This prompt sets up a scenario for a card. We have an image (dragon in battle) and some text stats below it. By saying “trading card design,” we cue the model to perhaps include a card border or at least to structure it like a card (often they have an image area and a text area). The model will draw a fierce-looking dragon and underneath, it will write “Fire Drake – Attack 90, Defense 70.” Likely it will stylize “Fire Drake” as the name and then the stats following. The use of an en-dash or dash and commas should be fine – Qwen can render punctuation and numbers consistently. The final image might even resemble something like a Magic: The Gathering or Pokémon card style (depending on what it infers), possibly with additional ornamentation. If you had specific colors or a template in mind, you could describe them (e.g. “in the style of a Yu-Gi-Oh card” or “with a gold frame”). But even without that, Qwen-Image will understand the general concept: picture on top, stats text below.

When doing these types of structured game assets, be aware that if the text block is very long or if there are many fields, the smaller ones might not all be perfectly legible. Qwen’s VAE is tuned for fine detail, so small text often comes out readable[6], but extremely tiny font (like a full paragraph of lore text on a small card) could blur. It’s best to focus on a few key lines of text (like name and a couple stats) for optimal clarity. If needed, generate at a higher resolution to capture small details.
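To follow the “few key lines” advice consistently, you can run a quick check on the text before it goes into a prompt. The sketch below is hypothetical: the default limits (3 lines, 40 characters per line) are illustrative guesses, not documented Qwen-Image thresholds, so tune them to what you observe at your chosen resolution.

```python
def check_text_budget(lines: list[str], max_lines: int = 3, max_chars: int = 40) -> list[str]:
    """Return warnings for text that may be too long to stay legible.

    The default limits are illustrative guesses, not documented Qwen-Image limits.
    """
    warnings = []
    if len(lines) > max_lines:
        warnings.append(f"{len(lines)} text lines; consider keeping only {max_lines}")
    for line in lines:
        if len(line) > max_chars:
            warnings.append(f"line of {len(line)} chars may blur: {line[:20]}...")
    return warnings


card_text = ["Fire Drake – Attack 90, Defense 70"]
print(check_text_budget(card_text))
```

A short name-and-stats line passes, while a block of lore text triggers one warning for the line count and one per overlong line.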

Storybook Page Prompts

Lastly, Qwen-Image can be used to create storybook-style pages, which combine an illustration with a caption or short narrative text. This is similar to posters or cards but usually in a more free-form artistic style (watercolor, cartoon, etc.), often with the text in a distinct area, such as below the image or overlaid in a text box. To prompt for a storybook page:

· Describe the scene or character illustration you want (the visual part).
· Indicate that it’s a storybook or children’s book style if you want that look (soft colors, simple shapes, etc., depending on the target age).
· Provide the caption or story text and specify its placement (commonly at the bottom or top of the image).

Qwen-Image’s ability to handle bilingual text can be particularly useful if you want dual-language story captions.
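A storybook prompt is one illustration plus one or more captions pinned to positions, so a single template can cover both single-language and bilingual pages. The helper below is a hypothetical sketch (`storybook_prompt` is an illustrative name); it reproduces the rabbit-family prompt shown next and can also produce a two-caption bilingual variant.

```python
def storybook_prompt(scene: str, captions: list[tuple[str, str]],
                     style: str = "Children's storybook page") -> str:
    """Hypothetical helper: one illustration plus captions pinned to positions.

    Each caption is (exact text, position), e.g. ("A Happy Morning", "bottom").
    """
    sentences = [f"{style}: illustration of {scene}."]
    for text, position in captions:
        sentences.append(f"Caption at the {position}: '{text}'.")
    return " ".join(sentences)


single = storybook_prompt("a rabbit family in a meadow", [("A Happy Morning", "bottom")])
bilingual = storybook_prompt(
    "a fox sitting under a tree",
    [("The Clever Fox", "top"), ("聪明的狐狸", "bottom")],
    style="Illustrated story page with bilingual text",
)
print(single)
print(bilingual)
```

Giving each caption its own sentence and position keeps the placement instruction attached to the right text, which matters most when two languages share the page.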

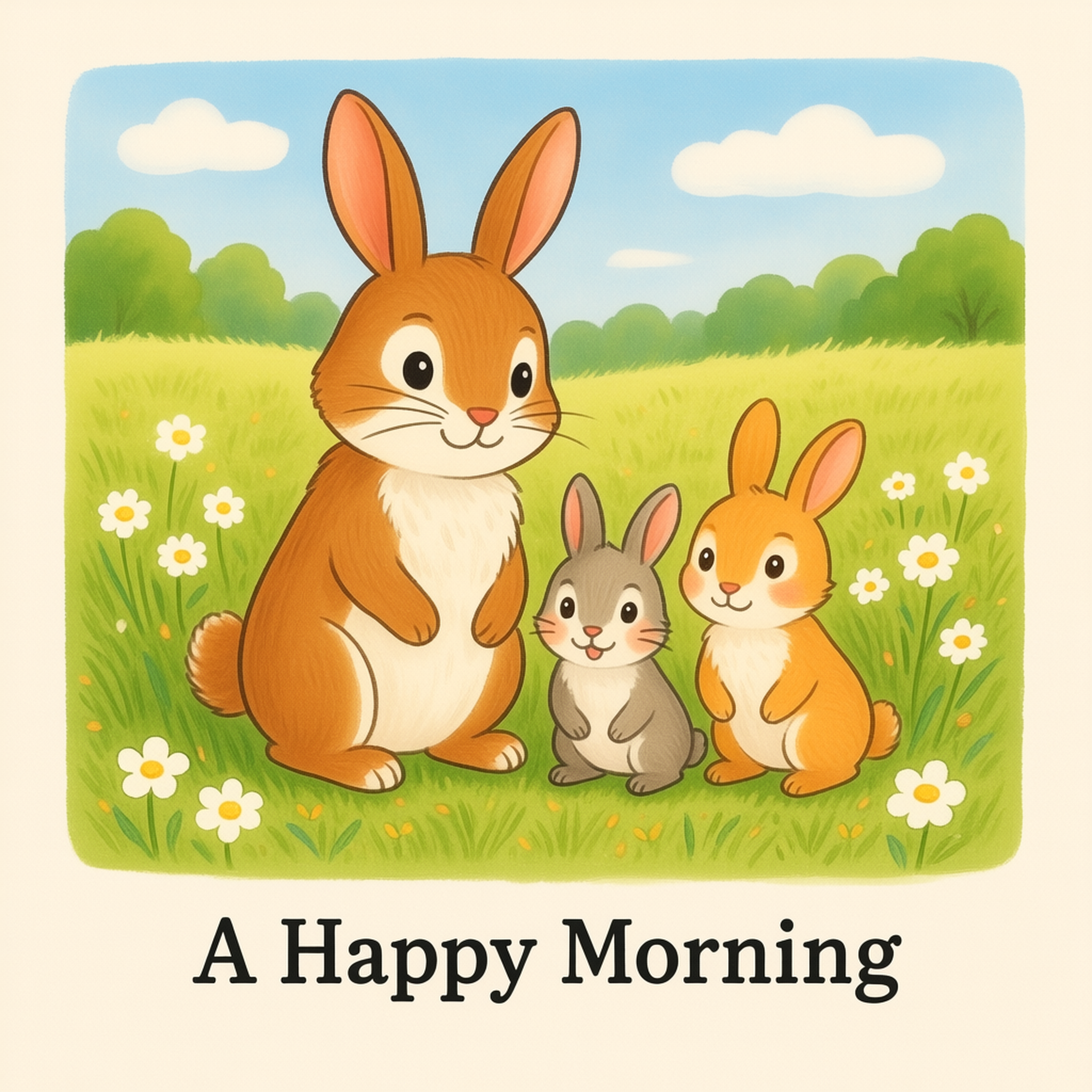

· Prompt Example: "Children’s storybook page: illustration of a rabbit family in a meadow. Caption at the bottom: 'A Happy Morning'." – This sets a clear expectation: an illustration suitable for a children’s book, showing a rabbit family in a meadow, with a caption. Because we call it a “storybook page,” the model will likely produce a friendly, colorful illustration (perhaps in a painted or cartoon style) and write “A Happy Morning” at the bottom in a clear, easy-to-read font, possibly a playful one. The output should look like a page from a picture book, where the image tells the story and the caption narrates it. If the style isn’t exactly what you want (say you prefer a watercolor look or a crayon drawing), add that to the prompt. Either way, the key elements (bunnies in a meadow, text caption) will be there.

· Prompt Example (Bilingual story page): "Illustrated story page with bilingual text: a fox sitting under a tree. English text at the top: 'The Clever Fox'. Chinese text at the bottom: '聪明的狐狸'." – In this prompt, we explicitly request a bilingual layout: an illustration (a fox under a tree, likely in a storybook art style), an English title at the top, and its Chinese translation at the bottom. Qwen-Image handles this kind of layout well: expect the fox illustration with “The Clever Fox” written clearly at the top of the frame and “聪明的狐狸” at the bottom. The two fonts might differ (one serif and one handwritten, for example) or match, but both texts should be rendered correctly. The Chinese characters should appear properly, since Qwen is very strong at Chinese text rendering[4]. This kind of output would be great for a bilingual children’s book or educational material.

If you need more than two languages, you could try prompting them as well (e.g. add a French line). Qwen was mainly focused on English and Chinese in training, so those are the most reliable; other languages may work, especially those written in the Latin alphabet or other common scripts, but heavily mixed multilingual content is less tested. It remains an area to experiment with (see Open Research Questions).
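Since the risk comes mainly from mixing different writing systems rather than from the number of Latin-script languages, it can be useful to count scripts before sending a prompt. The sketch below uses Python’s standard `unicodedata` module and takes the first word of each letter’s Unicode name (“LATIN”, “CJK”, “ARABIC”, …) as a rough script label. This is a heuristic, not a full Unicode Script property lookup, and the two-script threshold simply reflects the English/Chinese focus described above.

```python
import unicodedata


def scripts_in(text: str) -> set[str]:
    """Rough script detection: the first word of each letter's Unicode name.

    e.g. "LATIN" for "Fox", "CJK" for "狐". A heuristic, not a full
    Unicode Script property lookup (Japanese kana, for instance, count separately).
    """
    found = set()
    for ch in text:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name:
                found.add(name.split()[0])
    return found


captions = ["The Clever Fox", "聪明的狐狸", "Le renard rusé"]
all_scripts = set().union(*(scripts_in(c) for c in captions))
if len(all_scripts) > 2:
    print("More than two scripts; expect less reliable rendering:", sorted(all_scripts))
else:
    print("Scripts:", sorted(all_scripts))
```

Here the French line adds a third language but no third script, so the check stays quiet; adding an Arabic caption would trigger the warning.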

One more tip for storybook pages: you can specify the style of the text if you want, such as “in a whimsical curly font” or “handwritten font”. Qwen-Image does attempt to mimic font styles (the training included a lot of typographic variety[19]), so you might get a neat effect. Just be cautious that specifying a very ornate font might sometimes reduce legibility, as the model has to balance style and clarity.

With these examples, you can see how to tailor prompts for various scenarios. The common thread is: be clear about format and include the needed text and layout details in the prompt. Qwen-Image will do a lot of heavy lifting in arranging and styling the output to meet that description, effectively acting like a graphic designer or illustrator that “gets” your requirements.

Open Research Questions

As powerful as Qwen-Image is, there are still some open questions and frontiers in using it most effectively. These are areas worth exploring further:

Very Long Prompts (5,000+ tokens): Qwen-Image’s backbone (Qwen2.5-VL) technically supports an extremely long prompt (the model has a large context window, up to tens of thousands of tokens in some versions). However, in practice, what is the coherence limit? At what point does a super lengthy prompt start to confuse the image generation? The training employed a curriculum learning approach that gradually increased prompt complexity to paragraph-level[20]. That suggests it handles multi-sentence or paragraph descriptions well. But if you feed 5,000 tokens (which is like several pages of text), the model might struggle to maintain focus on every detail, potentially leading to muddled results. This hasn’t been fully documented yet. Users may need to experiment with truncating prompts or focusing on the most important details if extremely long prompts yield odd images. The practical advice until more is known: keep prompts concise and targeted, even though the model can technically read a lot. We expect there is a diminishing return beyond a certain prompt length (perhaps a few hundred tokens) where additional text might not improve the image and could even introduce contradictions.
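Until the coherence limit is better documented, one practical habit is to write prompt details in priority order and cut from the end once a budget is reached. The sketch below is hypothetical on two counts: the 1.3 tokens-per-word ratio is an assumed rule of thumb for English (Chinese tokenizes very differently), and the 300-token default only reflects the “few hundred tokens” guess above. For exact counts, use the tokenizer of the model’s text encoder instead.

```python
def estimate_tokens(prompt: str, tokens_per_word: float = 1.3) -> int:
    """Crude token estimate for English text; the ratio is an assumed rule of thumb."""
    return round(len(prompt.split()) * tokens_per_word)


def trim_to_budget(sentences: list[str], budget: int = 300) -> list[str]:
    """Keep sentences (most important first) until the estimated budget would be exceeded."""
    kept, used = [], 0
    for sentence in sentences:
        cost = estimate_tokens(sentence)
        if used + cost > budget:
            break
        kept.append(sentence)
        used += cost
    return kept


details = [
    "A black cat sleeping on a sunlit balcony, photorealistic, morning light.",
    "Potted herbs line the railing.",
    "A faint city skyline is visible in the background haze.",
]
print(trim_to_budget(details, budget=20))
```

Because trimming stops at the first sentence that does not fit, ordering details by importance decides what survives.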

Instructional Cues vs. Plain Description: Does starting a prompt with an explicit instruction (e.g. “Draw a poster of...”, “Create an image of...”) improve or change the outcome compared to just describing the scene (“poster of...”)? Since Qwen-Image is built on an Instruct-tuned language model, it is designed to follow commands, so phrasing things as commands shouldn’t hurt. Initial usage suggests that saying “Imagine...” or “Draw...” doesn’t significantly alter the image content; the model focuses on the nouns and adjectives that follow. However, it might set a slight context – for example, “Draw a logo for a cafe” might yield a cleaner, more logo-like image than just “a logo for a cafe” due to the imperative mood cue. This area hasn’t been systematically tested in public, so it’s an open question. You can try both styles. It’s likely that for most cases, plain descriptive prompting is sufficient (and is what the model was mostly trained on), but adding a verb like “design”, “draw”, or “create” might help emphasize the creative intent or format. Future community findings will tell us if one consistently works better. Until then, use whichever feels natural – Qwen-Image will understand either way.
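If you want to test this yourself, compare phrasings under identical conditions: the same description, with and without a leading verb, each run with the same set of seeds so differences are not just random variation. The sketch below only builds that run plan; the helper names are hypothetical, and the actual generation call (and how it takes a seed) depends on the pipeline you use.

```python
import itertools


def ab_variants(description: str, verbs: tuple[str, ...] = ("Draw", "Create", "Design")) -> list[str]:
    """Plain description first, then the same description led by each imperative verb.

    `description` should start in lowercase (e.g. "a logo for a cafe") so it reads
    naturally after a verb.
    """
    base = description.strip().rstrip(".")
    variants = [base[:1].upper() + base[1:] + "."]
    variants += [f"{verb} {base}." for verb in verbs]
    return variants


def build_run_plan(description: str, seeds: list[int]) -> list[tuple[str, int]]:
    """Pair every variant with every seed so differences aren't just seed noise."""
    return list(itertools.product(ab_variants(description), seeds))


plan = build_run_plan("a logo for a cafe", seeds=[0, 1, 2])
for prompt, seed in plan:
    print(seed, prompt)
```

Four phrasings times three seeds gives twelve images; looking at them side by side per seed makes any consistent effect of the verb easier to spot.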

Multilingual Text Beyond Two Languages: We know Qwen-Image handles English and Chinese text exceptionally well[4], and likely other common languages too (the model was trained on a globally sourced dataset, so it has some exposure to languages like Spanish and French, although these are not highlighted as much). The open question is whether it renders all of them correctly when a single image contains more than two languages, for example a poster with English, Chinese, and Arabic text together. Mixing Latin-script languages (English, French, Spanish) should be fine, since they share an alphabet, and Chinese is known to work. Other writing systems (Arabic, Cyrillic, Devanagari, etc.) may be hit-or-miss: the model might fall back to a more familiar script or make mistakes, especially when three or more different scripts appear together, since that is a complex rendering task. The tech report and demos focus on English and Chinese, so images with more than two languages are not well documented yet. If you need them, experiment in small steps: try bilingual first, then add a third language in a simple prompt and see how it does. Qwen-Image may well surprise us with competence in other scripts if they were sufficiently represented in training, but caution is warranted. Whether the model keeps multiple languages separate (rather than jumbled) within one image is something the community is likely still testing.
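The “small steps” approach can be scripted so each run adds exactly one language to an otherwise identical prompt, which makes it clear which addition caused a problem. The helper below is a hypothetical sketch; the poster text and positions are illustrative placeholders, not tested prompts.

```python
def language_ladder(scene: str, lines: list[tuple[str, str, str]]) -> list[str]:
    """Hypothetical helper: one prompt per step, adding one language at a time.

    Each line is (language, exact text, position). The first prompt holds the
    first two lines (the bilingual baseline); each later prompt adds one more.
    """
    prompts = []
    for k in range(2, len(lines) + 1):
        parts = [f"Poster of {scene}."]
        for language, text, position in lines[:k]:
            parts.append(f"{language} text at the {position}: '{text}'.")
        prompts.append(" ".join(parts))
    return prompts


ladder = language_ladder(
    "a lantern festival at night",
    [
        ("English", "Welcome", "top"),
        ("Chinese", "欢迎", "center"),
        ("Arabic", "مرحبا", "bottom"),
    ],
)
for step in ladder:
    print(step)
```

If the bilingual baseline renders cleanly and the three-language version does not, the third script is the likely culprit rather than the layout.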

Consistency in Iterative Edits: Qwen-Image-Edit was designed with consistency in mind, to preserve the subject and overall look through edits[21]. In single-step edits it’s clearly very good: for example, it can change clothing or the background while the person’s identity stays the same, as discussed earlier. The open question is how well this holds up over several edits in sequence. For instance, you generate an image, edit it to add something, edit again to change the lighting, then edit once more to remove an object. After multiple such iterations, does the main subject (say a person’s face or a mascot character) still look identical to the first image? The Qwen team’s blog showcased some chained edits (like correcting text step by step, and transforming their Capybara mascot into multiple styles) with good results[12][13], which suggests a high degree of consistency across edits. Nonetheless, every generation step has some variability, so subtle drift may accumulate over many edits, especially ones involving big changes (changing the setting or camera angle might start to alter the subject slightly). The model’s dual-encoding approach (feeding the original image both to Qwen2.5-VL for semantics and to the VAE encoder for appearance) should help keep it on track[22]. Our advice: for critical identity preservation, combine edits where possible or use masks to limit changes, and keep an eye on details across iterations. This is an evolving area; as of a late-August 2025 update, the Qwen team even tweaked the diffusers implementation to improve identity preservation[23], so Qwen-Image-Edit is actively being refined. We expect it to be among the best in class for iterative editing consistency, but how far you can push it (5, 10, 20 edits?) is something to test against your project’s needs.
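One way to act on the “combine edits” advice is to merge several small changes into a single instruction, so the subject goes through one edit pass instead of several. The sketch below is hypothetical: `combine_edits` only builds the instruction string, and appending an explicit preservation sentence is an assumption that may or may not help; compare results with and without it. Pass the resulting string as the edit prompt in whatever Qwen-Image-Edit pipeline you use.

```python
def combine_edits(edits: list[str],
                  preserve: str = "Keep the main subject's identity and appearance unchanged.") -> str:
    """Merge several edit instructions into one sentence plus a preservation note.

    The preservation sentence is an assumed aid, not a documented Qwen-Image-Edit feature.
    """
    cleaned = [e.strip().rstrip(".") for e in edits if e.strip()]
    if not cleaned:
        raise ValueError("no edits given")
    first = cleaned[0]
    # Lowercase the first letter of later instructions so they read as one sentence.
    rest = [c[0].lower() + c[1:] for c in cleaned[1:]]
    if not rest:
        sentence = first
    elif len(rest) == 1:
        sentence = f"{first} and {rest[0]}"
    else:
        sentence = f"{first}, {', '.join(rest[:-1])}, and {rest[-1]}"
    return f"{sentence}. {preserve}"


instruction = combine_edits(["Add a red scarf", "Change the lighting to golden hour", "Remove the bicycle."])
print(instruction)
```

Fewer passes means fewer chances for drift to accumulate; when an edit must stay separate (for example, because it depends on seeing the previous result), keep the earlier outputs so you can compare the subject across steps.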

In conclusion, Qwen-Image is a cutting-edge model that offers a new level of control and fidelity in image generation. By understanding natural language prompts deeply, it allows creators to simply describe what they envision and see it come to life – whether it’s a basic photo, a complex poster with multiple texts, a UI concept, or an edited version of an existing image. This guide covered how to write effective prompts, include text, perform edits, apply styles, and handle resolution, with plenty of examples you can copy and tweak. As you experiment with Qwen-Image, you’ll likely discover even more tricks and capabilities. Remember to iterate and refine your prompts; even though the model is very advanced, a well-crafted prompt is still key to getting the best results. Happy prompting, and we can’t wait to see what you create with Qwen-Image! [5][14]

[1] Qwen Image Model — New Open Source Leader? | by Chris Green | Diffusion Doodles | Aug, 2025 | Medium

https://medium.com/diffusion-doodles/qwen-image-model-new-open-source-leader-8da3bed95e41

[2] [3] [6] [17] [18] [19] What is Qwen Image? Meet The AI Model Built for Text-Heavy Prompts | getimg.ai

https://getimg.ai/blog/what-is-qwen-ai-image-generation-model

[4] [9] Qwen-Image: Crafting with Native Text Rendering | Qwen

https://qwenlm.github.io/blog/qwen-image/

[5] [14] [16] Qwen/Qwen-Image · Hugging Face

https://huggingface.co/Qwen/Qwen-Image

[7] [8] [10] [12] [13] Qwen-Image-Edit: Image Editing with Higher Quality and Efficiency | Qwen

https://qwenlm.github.io/blog/qwen-image-edit/

[11] [20] [21] [22] [2508.02324] Qwen-Image Technical Report

https://arxiv.org/abs/2508.02324

[15] Vision - SiliconFlow

https://docs.siliconflow.cn/en/userguide/capabilities/vision

[23] GitHub - QwenLM/Qwen-Image: Qwen-Image is a powerful image generation foundation model capable of complex text rendering and precise image editing.