SillyTavern Character Expression Generators

Inspired by SillyTavern Expressions Workflow v2

To get started, download the workflows from the "attachments" for this article.

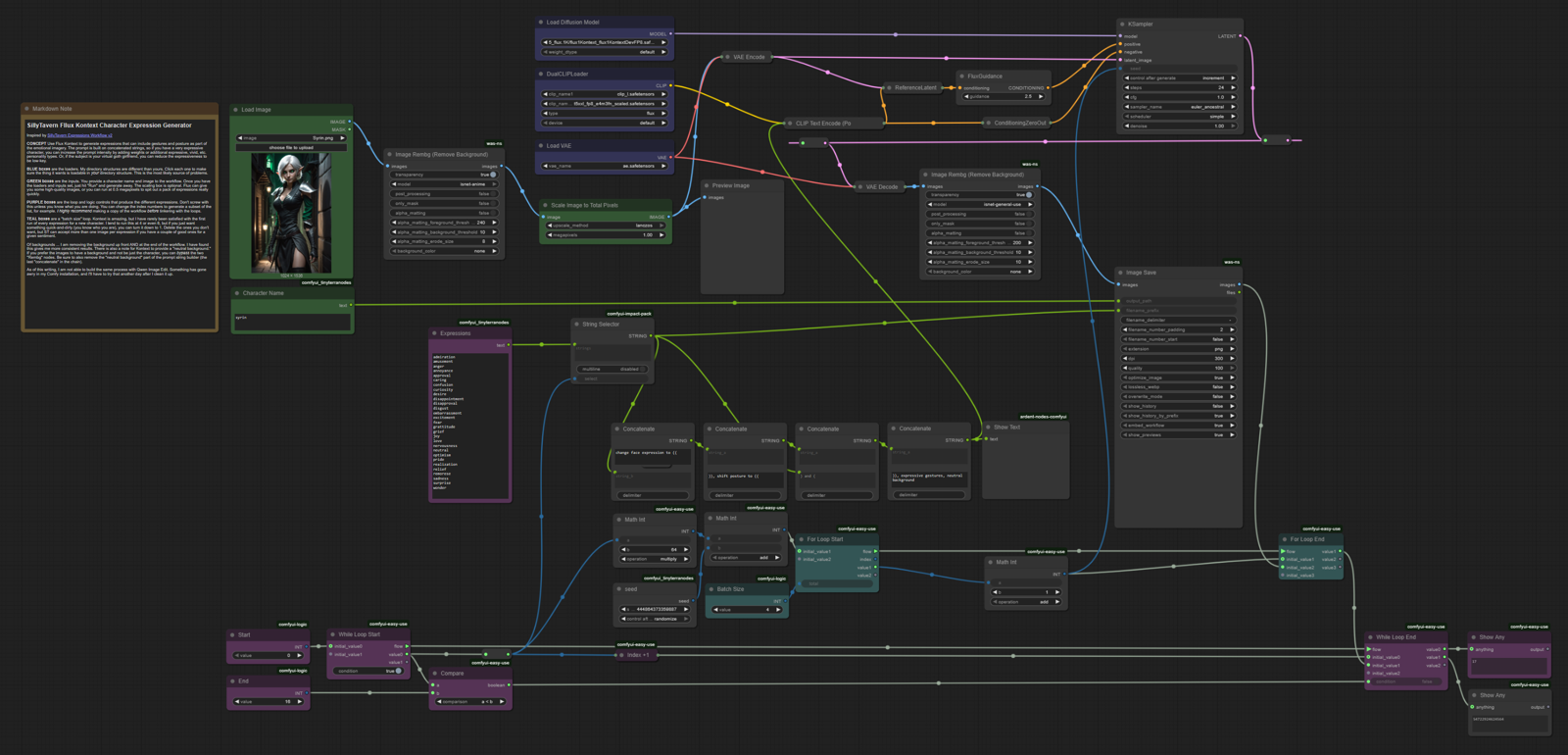

CONCEPT

Use Flux Kontext or Qwen Image Edit to generate expressions that can include gestures and posture as part of the emotional imagery. The "Simplified" workflows build the prompt from concatenated strings, so if you have a very expressive character, you can increase the prompt intensity by adding weights or extra personality descriptors (expressive, vivid, etc.). Or, if the subject is your virtual goth girlfriend, you can tone the expressiveness down to keep things low-key. The "LLM" workflows are based on the OpenRouter node. These use an LLM to describe how a person expresses the given emotion through facial expression and body language.
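As a rough illustration of the string-concatenation idea behind the "Simplified" workflows, here is a minimal Python sketch. It is not the workflow's actual node graph: the function name `build_prompt` and the wording of every fragment are assumptions made up for this example.

```python
from typing import List, Optional


def build_prompt(emotion: str,
                 modifiers: Optional[List[str]] = None,
                 background: Optional[str] = "neutral background") -> str:
    """Join prompt fragments in order, skipping the optional ones when unset."""
    # Fragment wording is hypothetical; the real workflows use their own text.
    fragments = [f"The character expresses {emotion} through face, gesture, and posture."]
    if modifiers:
        # More (or stronger) descriptors push the result toward a bigger expression.
        fragments.append("Personality: " + ", ".join(modifiers) + ".")
    if background:
        # Pass background=None to drop this fragment entirely.
        fragments.append(f"Use a {background}.")
    return " ".join(fragments)


print(build_prompt("joy", ["expressive", "vivid"]))
print(build_prompt("joy", ["reserved", "low-key"], background=None))
```

Because each piece is a separate string, dialing a character up or down only means swapping the descriptor list; the rest of the prompt stays the same.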

Either workflow should import and load missing nodes rather easily in ComfyUI. If you don't know how to do that, there are many videos and articles here that can help you set up ComfyUI.

BLUE boxes are the loaders. My directory structure is different from yours. Click each one to make sure the thing it wants is loadable in your directory structure. This is the most likely source of problems.

GREEN boxes are the inputs. You provide a character name and image to the workflow. Once you have the loaders and inputs set, just hit "Run" and generate away. The scaling box is optional. These models can give you higher resolution images, or you can run at 0.5 megapixels to spit out a pack of expressions really quickly.

PURPLE boxes are the loop and logic controls that produce the different expressions. Don't screw with this unless you know what you are doing. You can change the index numbers to generate a subset of the list, for example. I highly recommend making a copy of the workflow before tinkering with the loops.

TEAL boxes are a "batch size" loop. While these models are amazing, I have rarely been satisfied with the first run of every expression for a new character. I tend to run this as batches between 2 and 6 per run, but if you just want something quick-and-dirty (you know who you are), you can turn it down to 1. Delete the ones you don't want, but ST can accept more than one image per expression if you have a couple of good ones for a given sentiment.
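The PURPLE index range and the TEAL batch size can be pictured as two nested loops. The sketch below is only a mental model, not the workflow's actual logic: the short label list, the inclusive `start`/`end` indices, and the two-digit file suffix are all assumptions for illustration.

```python
from typing import Iterator, List, Tuple

# Illustrative labels only; the workflow carries its own full list.
EXPRESSIONS = ["admiration", "amusement", "anger", "joy", "neutral", "sadness"]


def plan_runs(expressions: List[str], start: int, end: int,
              batch_size: int) -> Iterator[Tuple[str, int]]:
    """Yield (expression, take) pairs for expressions[start..end], inclusive."""
    for expression in expressions[start:end + 1]:   # outer loop: which expressions
        for take in range(1, batch_size + 1):       # inner loop: images per expression
            yield expression, take


# Re-run only "anger" and "joy", two attempts each.
for expression, take in plan_runs(EXPRESSIONS, start=2, end=3, batch_size=2):
    print(f"{expression}-{take:02d}.png")
```

Narrowing the index range like this is handy when most of a pack came out fine and only a few expressions need another attempt.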

Additional notes

Of backgrounds ... I am removing the background up front AND at the end of the workflow. I have found this gives me more consistent results. There are also some anti-background tricks hidden in these workflows. If you prefer the images to have a background and not be just the character, you can bypass the two "Rembg" nodes. For Kontext, the "simplified" prompt asks for a "neutral background." Be sure to also remove the "neutral background" part of the prompt string builder (the last "concatenate" in the chain). For Qwen, you will have to expand the negative prompt (red box) and remove "background" from the text.

These models can change the character a bit more than I would like on certain input pictures. If you feed some of the same images back through a few times, they will trend toward "Flux Face" or "Qwen Same Face." If you are super-particular about the facial structure and features of your character, you may have to train and add a Lora to the mix. Or perhaps you can use IP Adapter to solve this issue. For me, the "drift" from the original character is minimal and rarely enough for me to be concerned. I just wanted to note that it does happen, and sometimes, quite severely.

EDIT: By request, what do you do with the images once you have them?

In SillyTavern, there's an extension called "Character Expressions." This workflow was designed with the purpose of filling in the missing images. To do that, you'll want to ...

I will assume you already have a running version of SillyTavern, somewhere. You can find lots of tutorials elsewhere on how to get it going, if you do not. Once it is set up, make sure you have the extension for "Character Expressions." (You can manage extensions under the "three box icon" at the top of the ST UI.)

I am also going to assume you have a running copy of ComfyUI, somewhere. This is also beyond the scope of this article, but once you have it started, you can come back and download the "attachment" on this article (a JSON file). In ComfyUI, choose "File | Open" and select the workflow from where you downloaded it.

If you have a character avatar you like, you can start there for consistency in the chat. Use that avatar as the picture input for this workflow. Fill in the name, and hit "Run" in ComfyUI. That should be all you need to start generating expressions. This workflow will run for over an hour even on a beefy setup.

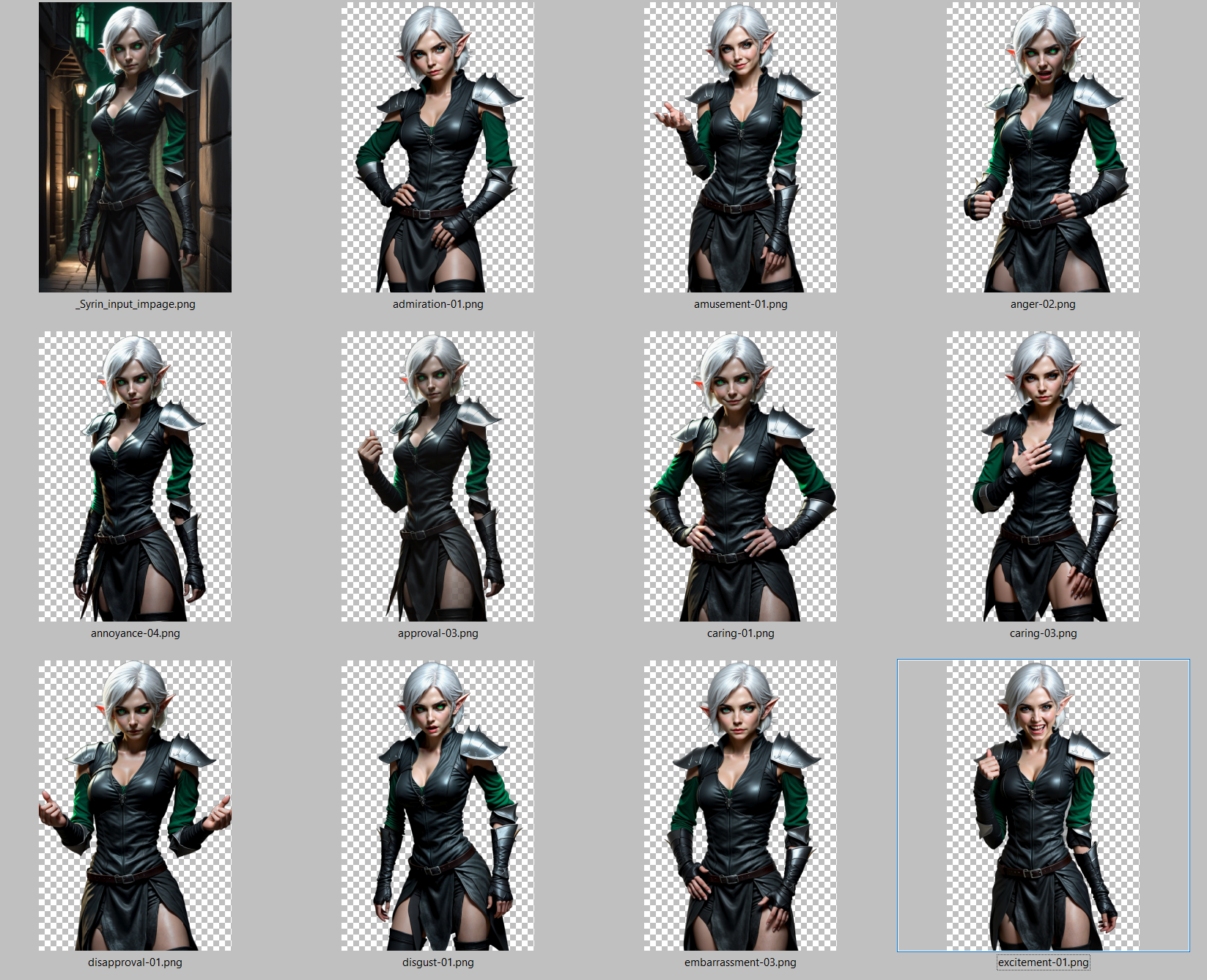

When it is done, you'll have a bunch of files that look like your original input character expressing various emotions. Some of them may have extra arms. Some of them may be angry when they should be happy. Delete the ones you do not want. Hopefully, you have at least one per expression that you can keep.

The files will be in the ComfyUI directory under:

outputs\<character name>\<expression>-##.png

Put all of your new expressions into a ZIP file with the character's name for easy storage and transfer.
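If you would rather script the ZIP step than do it by hand, something like the following Python sketch should work. The function name `zip_expressions`, the `MyCharacter` folder, and the choice to store the images at the top level of the archive (no subfolders) are assumptions for this example; adjust the paths to your own install.

```python
import zipfile
from pathlib import Path


def zip_expressions(character_dir: Path, zip_path: Path) -> int:
    """Pack every PNG directly inside character_dir into zip_path; return the count."""
    pngs = sorted(character_dir.glob("*.png"))
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for png in pngs:
            # arcname=png.name stores the file at the archive root (no folders).
            archive.write(png, arcname=png.name)
    return len(pngs)


if __name__ == "__main__":
    # Hypothetical location and character name; change these to match your setup.
    source = Path("ComfyUI") / "outputs" / "MyCharacter"
    if source.is_dir():
        count = zip_expressions(source, Path("MyCharacter.zip"))
        print(f"Packed {count} images")
```

Run it after you have deleted the rejects, so only the images you want to keep end up in the archive.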

Go back to the "three box icon" in ST. Open the drop-down for "Character Expressions." You should see a bunch of blank images, but don't worry: You can just upload your whole ZIP file at once! After a few seconds, you should see all the expressions populated in the extension.

Examples: