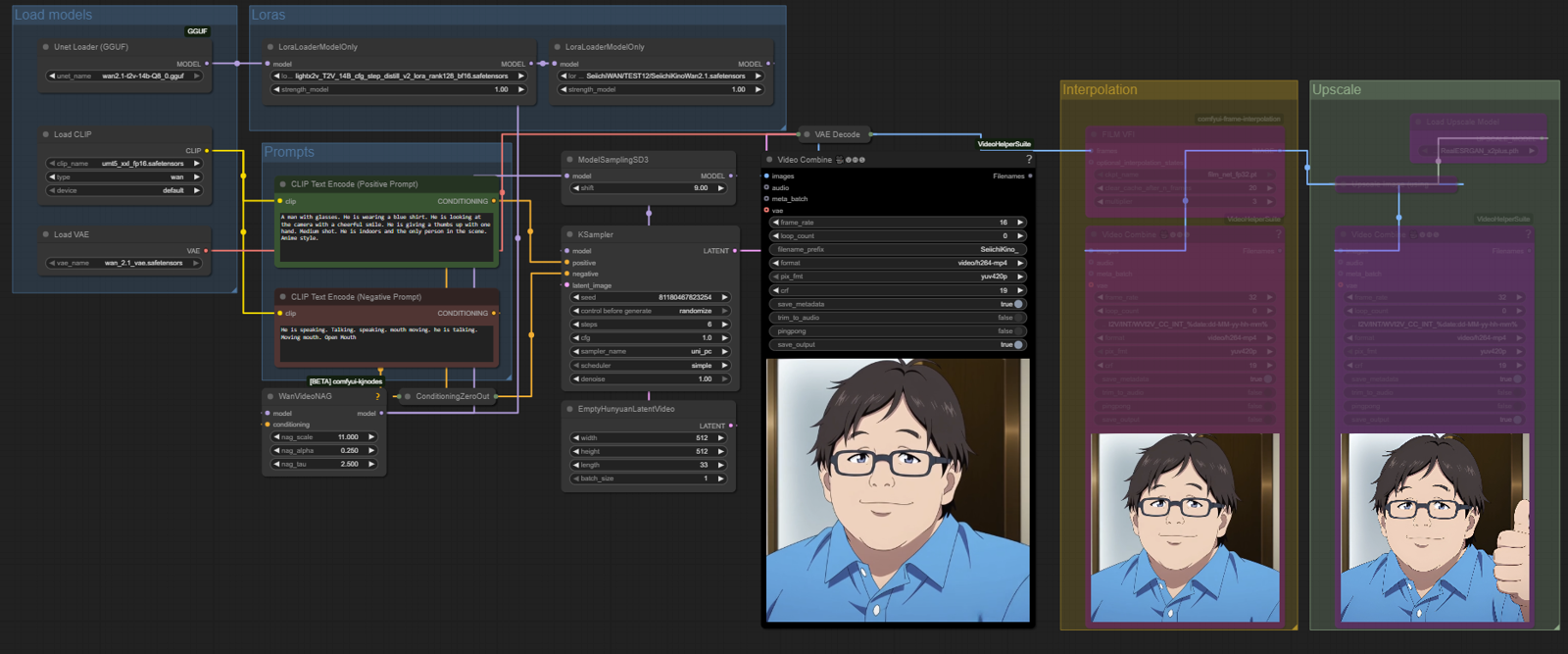

This is a supplementary page for my Seiichi Kinoshita WAN character LoRA. It collects WAN workflows for ComfyUI that can be used with the LoRA right away.

The following workflows are attached:

- WAN 2.1 Text2Video
- WAN 2.2 Image2Video
- WAN 2.2 Text2Video - 2x Lightx2v LoRAs (medium quality but fast)
- WAN 2.2 Text2Video - 1x Lightx2v LoRA (high quality but slow)

NOTE: Some custom nodes need to be installed. ComfyUI Manager should take care of this.

The workflows include:

- GGUF quants
- Lightning LoRAs
- WanVideoNAG
- Optional interpolation + upscaling

Explanation of the included features:

Instead of the base models, I use the GGUF quantized versions. This helps with VRAM usage and speed.

(If you want to use the base model files, you will need to swap the Unet Loader nodes for the regular .safetensors model loaders.)
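To show roughly why GGUF quants help with VRAM, here is a back-of-the-envelope sketch. It assumes a 14B-parameter model and uses the bits per weight of the GGUF Q8_0 and Q4_0 block formats. It only counts the diffusion model weights (not the text encoder, VAE or activations), and real GGUF files keep some tensors at higher precision, so treat the numbers as approximate.

```python
# Rough weight-memory estimate for different storage formats.
# Assumptions: a 14B-parameter model; bits per weight taken from the GGUF
# block layouts (Q8_0: 34 bytes per 32 weights, Q4_0: 18 bytes per 32 weights).
# Only the model weights are counted, nothing else that lives in VRAM.

BITS_PER_WEIGHT = {
    "fp16": 16.0,
    "Q8_0": 34 * 8 / 32,  # 8.5 bits per weight
    "Q4_0": 18 * 8 / 32,  # 4.5 bits per weight
}


def weight_gb(num_params: float, fmt: str) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


if __name__ == "__main__":
    params = 14e9  # assumed model size
    for fmt in BITS_PER_WEIGHT:
        print(f"{fmt}: ~{weight_gb(params, fmt):.1f} GB")
```

Under these assumptions Q8_0 needs a bit more than half of what fp16 needs, and Q4_0 a bit more than a quarter.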

All my workflows include the Lightx2v LoRAs. They speed up generation tremendously, and I have used and tested these LoRAs exclusively in all my generations. However, with WAN 2.2 the Lightning LoRAs can limit motion. If you want maximum quality, only use the Lightning LoRA in the low-noise sampler, or don't use it at all (but WAN 2.2 will then be very slow).

I've included WanVideoNAG in my workflows. Lightning LoRAs require CFG 1.0, and at CFG 1.0 the sampler ignores negative prompts. NAG forces the model to take negative prompts into account anyway. This helps control things like characters constantly talking, by using negative prompts such as "He is speaking. Talking. speaking. mouth moving. he is talking. Moving mouth. Open Mouth".
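Why CFG 1.0 makes negative prompts ineffective follows from the usual classifier-free guidance formula, guided = negative + cfg * (positive - negative). At cfg = 1.0 this reduces to the positive prediction, so the negative prompt cancels out entirely (which is also why samplers can skip the negative pass at CFG 1.0). The lists below are toy numbers standing in for model predictions, not real WAN outputs.

```python
# Classifier-free guidance (CFG), element-wise:
#   guided = uncond + cfg * (cond - uncond)
# "uncond" is the model's prediction for the negative prompt.


def cfg_combine(cond: list[float], uncond: list[float], cfg: float) -> list[float]:
    """Blend positive (cond) and negative (uncond) predictions."""
    return [u + cfg * (c - u) for c, u in zip(cond, uncond)]


if __name__ == "__main__":
    cond = [0.5, -1.0, 2.0]   # toy prediction for the positive prompt
    neg_a = [0.0, 0.0, 0.0]   # toy prediction for one negative prompt
    neg_b = [3.0, 1.0, -2.0]  # toy prediction for a different negative prompt

    # At cfg=1.0 both results equal cond: the negative prompt has no effect.
    print(cfg_combine(cond, neg_a, 1.0))
    print(cfg_combine(cond, neg_b, 1.0))

    # At cfg=3.5 the negative prompt does change the result.
    print(cfg_combine(cond, neg_b, 3.5))
```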

I've added interpolation + upscaling as optional nodes. They are disabled by default and can be enabled by simply toggling the node bypass. NOTE: If you only want upscaling without interpolation, you need to change frame_rate on the last Video Combine node from 32 to 16 fps.
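The frame_rate note comes down to simple arithmetic: interpolation (assumed here to be 2x, matching the 16 to 32 fps setup) doubles the number of frames, so the frame rate has to double too to keep the clip's duration. Upscaling only changes resolution, so the frame rate stays at 16. A small sketch, with hypothetical helper names:

```python
BASE_FPS = 16  # frame rate of the generated WAN clips in these workflows


def output_frame_rate(interpolation_multiplier: int = 1, base_fps: int = BASE_FPS) -> int:
    """frame_rate for the final Video Combine node.

    Assumption: interpolation multiplies the frame count by
    `interpolation_multiplier`; upscaling leaves the frame count unchanged.
    """
    return base_fps * interpolation_multiplier


def playback_seconds(num_frames: int, fps: int) -> float:
    """How long a clip with `num_frames` frames plays at `fps`."""
    return num_frames / fps


if __name__ == "__main__":
    print(output_frame_rate(2))  # interpolation + upscale: 32
    print(output_frame_rate(1))  # upscale only: 16

    # Leaving 32 fps on an upscale-only run plays the same 49 frames
    # in about half the time:
    print(playback_seconds(49, 16))  # 3.0625
    print(playback_seconds(49, 32))  # 1.53125
```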

Parameter recommendations:

Step count: Generally speaking, more steps mean more detail and quality. But too many steps lead to long generation times and can cause issues with the Lightning LoRA.

Recommended: 4-8

CFG: When using Lightning LoRAs, it's always best to keep CFG at 1.0. Without a Lightning LoRA, a good CFG is 3.5 (with 20+ steps).

Recommended: 1.0
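A rough way to see why the Lightning LoRAs speed things up so much is to count how often the diffusion model has to run. This sketch assumes the sampler skips the negative pass at CFG 1.0 (it cancels out of the guidance formula) and ignores everything else that affects speed, such as resolution, model offloading and the extra attention work NAG does. The step counts 6 and 20 are picked from the ranges above.

```python
import math


def model_evaluations(steps: int, cfg: float) -> int:
    """Number of diffusion-model forward passes for one sampling run.

    Assumption: at cfg == 1.0 only the positive pass runs; at any other cfg
    each step needs both a positive and a negative pass.
    """
    passes_per_step = 1 if math.isclose(cfg, 1.0) else 2
    return steps * passes_per_step


if __name__ == "__main__":
    with_lightning = model_evaluations(steps=6, cfg=1.0)
    without_lightning = model_evaluations(steps=20, cfg=3.5)
    print(with_lightning, without_lightning)
    print(f"~{without_lightning / with_lightning:.1f}x fewer model passes")
```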

Shift (ModelSamplingSD3): determines how much "room for movement" the latents can have. Recommended: 8-10

However, for Image2Video sometimes using lower values (5-6) can help retain some of the original facial expressions from the source image.
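One way to picture "room for movement": in flow-matching models like WAN, shift remaps each timestep t to a noise level (sigma). The sketch below assumes the time-shift formula sigma = shift * t / (1 + (shift - 1) * t) and evenly spaced timesteps, which is a simplification of ComfyUI's actual schedulers. A higher shift keeps more of the steps at high noise, where the overall layout and motion are still being decided; that would fit the observation above that lower values keep more of the source image in I2V.

```python
def shifted_sigma(t: float, shift: float) -> float:
    """Map a normalized timestep t in [0, 1] to a noise level (sigma).

    Assumed flow-matching time shift: shift * t / (1 + (shift - 1) * t).
    t = 1 stays at sigma 1 (pure noise) and t = 0 stays at sigma 0.
    """
    return shift * t / (1 + (shift - 1) * t)


def schedule(steps: int, shift: float) -> list[float]:
    """Sigmas for evenly spaced timesteps from t = 1 down to t = 0."""
    return [shifted_sigma(1 - i / steps, shift) for i in range(steps + 1)]


if __name__ == "__main__":
    # Compare no shift with the I2V-friendly and default ranges from above.
    for shift in (1.0, 5.0, 8.0):
        print(shift, [round(s, 3) for s in schedule(6, shift)])
```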

Resolution: Higher is better, but it obviously costs generation speed and VRAM. My default is 640x640, but I've also been able to generate up to 1024x1024 on an RTX 4090 using my workflows. I also tend to stick with a 1:1 aspect ratio.

Recommended: 512x512 or higher

Length (frames)

Recommended: 16 frames per second of video, plus 1 frame. For example, 3 seconds of video = 16 × 3 + 1 = 49 frames.
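The length rule and the cost of resolution can both be sketched in a few lines. frames_for_duration applies the 16 frames per second + 1 rule. latent_tokens is only a rough estimate of how many tokens the model works on; it assumes WAN's VAE compresses 8x spatially and 4x in time (encoding the first frame on its own, which is where the extra frame comes from) and that the model then groups latents into 2x2 patches. These are assumptions about the architecture, not settings the workflows expose, and both helper names are hypothetical.

```python
def frames_for_duration(seconds: float, fps: int = 16) -> int:
    """16 frames per second of video, plus 1 frame (e.g. 3 s -> 49 frames)."""
    return round(fps * seconds) + 1


def latent_tokens(width: int, height: int, frames: int) -> int:
    """Rough token count for one clip.

    Assumes 8x spatial / 4x temporal VAE compression followed by 2x2 spatial
    patches, i.e. one token per 16x16 pixels per latent frame.
    """
    latent_frames = (frames - 1) // 4 + 1  # first frame + one latent per 4 frames
    return (width // 16) * (height // 16) * latent_frames


if __name__ == "__main__":
    n = frames_for_duration(3)
    print(n)
    for side in (512, 640, 1024):
        print(f"{side}x{side}: {latent_tokens(side, side, n)} tokens")
```

Under these assumptions, going from 640x640 to 1024x1024 gives about 2.6x as many tokens, and attention cost grows faster than the token count.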

WanVideoNAG parameters: I couldn't find documentation on exactly what these parameters do, but from my short testing, nag_alpha and nag_scale determine the strength of the negative prompts. For T2V the defaults should be fine; for I2V I had to increase nag_alpha to 0.750 for the sampler to follow my negative prompts.

Recommended: nag_scale 11.0 / nag_alpha 0.250 / nag_tau 2.500 (default)

If negative prompts are not followed, increase nag_alpha to 0.500-0.750.
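For a rough idea of what the three parameters might do, here is a sketch based on the Normalized Attention Guidance (NAG) formulation as understood here; the actual WanVideoNAG node may differ in details. NAG works on attention outputs rather than on the final prediction: nag_scale pushes the positive-prompt attention output away from the negative one, nag_tau caps how far it can move (as a ratio of L1 norms), and nag_alpha blends the guided result back with the plain positive output. The vectors are toy values, not real attention outputs.

```python
def l1_norm(v: list[float]) -> float:
    return sum(abs(x) for x in v)


def nag(pos: list[float], neg: list[float], scale: float = 11.0,
        tau: float = 2.5, alpha: float = 0.25) -> list[float]:
    """Sketch of NAG on a single attention-output vector.

    1. Extrapolate away from the negative output: pos*scale - neg*(scale - 1).
    2. If the L1 norm of that exceeds tau times the norm of pos, rescale it
       so the ratio equals tau.
    3. Blend with the unguided output: alpha*guided + (1 - alpha)*pos.
    """
    guided = [p * scale - n * (scale - 1) for p, n in zip(pos, neg)]
    pos_norm = l1_norm(pos)
    if pos_norm > 0:  # skip clipping for an all-zero vector (avoids dividing by 0)
        ratio = l1_norm(guided) / pos_norm
        if ratio > tau:
            guided = [g * tau / ratio for g in guided]
    return [alpha * g + (1 - alpha) * p for g, p in zip(guided, pos)]


if __name__ == "__main__":
    pos = [1.0, 0.0]  # toy attention output for the positive prompt
    neg = [0.0, 1.0]  # toy attention output for the negative prompt
    for alpha in (0.25, 0.5, 0.75):
        print(alpha, [round(x, 3) for x in nag(pos, neg, alpha=alpha)])
```

In this sketch the output moves away from the unguided one in proportion to nag_alpha, which fits the observation that raising it makes negative prompts stick more reliably.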

Note: These workflows will of course work for anything WAN 2.1/2.2 related, not just my character LoRA. In that case, just delete or bypass the character LoRA node.