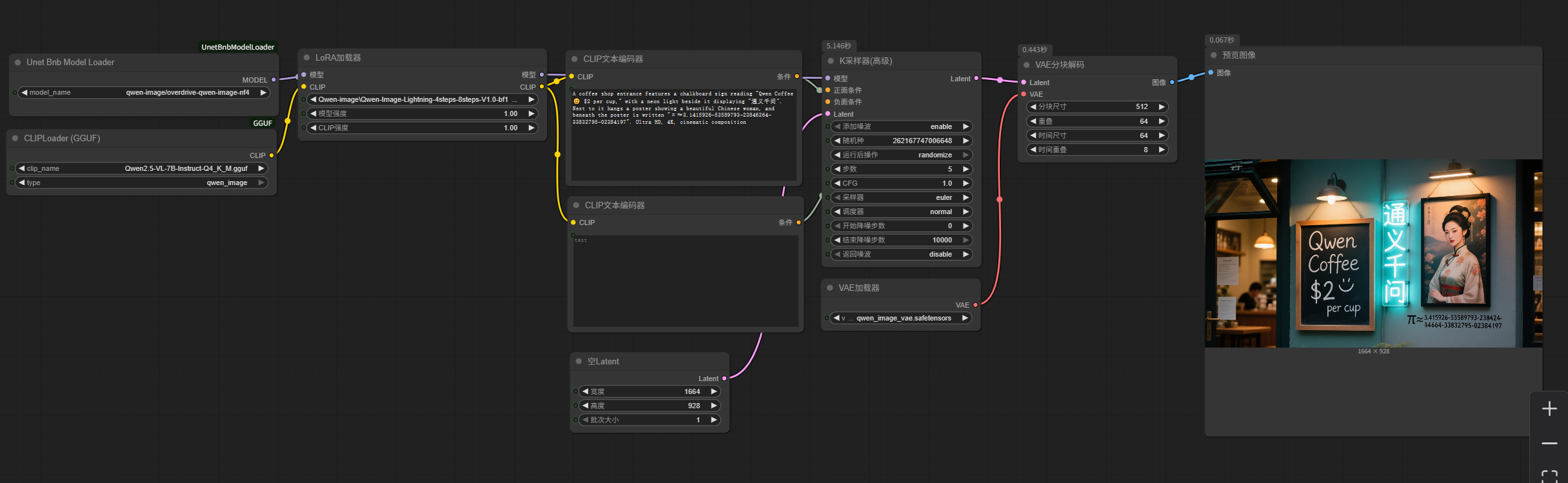

This workflow uses the latest bitsandbytes (bnb) 4-bit model loader plugin to load the Qwen-Image model quantized in bnb NF4 format.

The plugin must be installed manually in ComfyUI's `custom_nodes` directory.

Model used: `ovedrive/qwen-image-4bit` (Hugging Face)

Note that this is a sharded model, but you don't need to merge the shards manually. Simply put them in a directory, such as `qwen-image-edit-4bit`, and place that directory inside ComfyUI's `unet` model directory. The plugin recognizes and loads the sharded model, and the loader's drop-down menu lists it under the name of the directory that contains it.
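The placement step above can be sketched in Python. This is a minimal sketch, not part of the plugin: the function name `place_sharded_model` is hypothetical, and it assumes the downloaded shards are `.safetensors` files accompanied by `.json` metadata (index/config), which are copied unmerged into a subdirectory of `unet`.

```python
import shutil
from pathlib import Path


def place_sharded_model(shard_dir: Path, unet_dir: Path, model_name: str) -> Path:
    """Copy all shards (and JSON metadata) into unet_dir/model_name.

    Shards are copied as-is; nothing is merged. The loader is expected to
    treat the whole subdirectory as one model named after the directory.
    """
    target = unet_dir / model_name
    target.mkdir(parents=True, exist_ok=True)
    for f in sorted(shard_dir.iterdir()):
        # Assumption: shards are .safetensors files plus .json index/config files;
        # anything else (README, images, etc.) is skipped.
        if f.is_file() and f.suffix in {".safetensors", ".json"}:
            shutil.copy2(f, target / f.name)
    return target


if __name__ == "__main__":
    # Hypothetical paths: adjust to your download folder and ComfyUI install.
    place_sharded_model(
        Path("downloads/qwen-image-4bit"),
        Path("ComfyUI/models/unet"),
        "qwen-image-edit-4bit",
    )
```

Copying rather than moving keeps the original download intact; swap `shutil.copy2` for `shutil.move` if disk space is tight.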

For accelerated generation, use the following LoRA: `PJMixers-Images/lightx2v_Qwen-Image-Lightning-4step-8step-Merge` (Hugging Face)

Use the following text_encoder (requires the GGUF plugin): https://huggingface.co/unsloth/Qwen2.5-VL-7B-Instruct-GGUF/resolve/main/Qwen2.5-VL-7B-Instruct-Q4_K_M.gguf?download=true
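A minimal download sketch for the text encoder, using only the Python standard library. The helper names are hypothetical, and the destination directory is an assumption: pass whichever folder your ComfyUI install and the GGUF plugin read text encoders from (for example `ComfyUI/models/text_encoders`; check the plugin's documentation). The file name is taken from the URL path so the `?download=true` query does not end up in it.

```python
import urllib.request
from pathlib import Path
from urllib.parse import urlparse

TEXT_ENCODER_URL = (
    "https://huggingface.co/unsloth/Qwen2.5-VL-7B-Instruct-GGUF/resolve/main/"
    "Qwen2.5-VL-7B-Instruct-Q4_K_M.gguf?download=true"
)


def filename_from_url(url: str) -> str:
    """Return the last path component of the URL, ignoring any query string."""
    return Path(urlparse(url).path).name


def download_text_encoder(dest_dir: Path, url: str = TEXT_ENCODER_URL) -> Path:
    """Download the GGUF file into dest_dir, skipping it if already present."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    target = dest_dir / filename_from_url(url)
    if not target.exists():
        # Download to a .part file first so an interrupted transfer
        # never leaves a truncated .gguf that looks complete.
        partial = target.with_name(target.name + ".part")
        urllib.request.urlretrieve(url, str(partial))
        partial.replace(target)
    return target


if __name__ == "__main__":
    # Assumed location; adjust to your install.
    print(download_text_encoder(Path("ComfyUI/models/text_encoders")))
```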

Overall, image generation is roughly twice as fast as with the GGUF model, and the results are comparable to GGUF Q4. Peak memory usage is around 15 GB, settling at around 14 GB when generating images repeatedly.

Sampling runs at about 1 it/s, and 5 to 6 steps are recommended. Because it relies on the bitsandbytes library, this workflow only supports NVIDIA graphics cards.
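Before loading the workflow, a quick pre-flight check can confirm that PyTorch sees an NVIDIA GPU, and the 1 it/s figure gives a rough sampling-time estimate. This is a sketch: the function names are hypothetical, it assumes PyTorch is the backend ComfyUI is running on, and the estimate covers only the sampling steps (text encoding and VAE decoding add extra time).

```python
def nvidia_cuda_available() -> bool:
    """Return True only if PyTorch reports a CUDA (NVIDIA) GPU.

    ROCm builds of PyTorch also expose AMD GPUs through torch.cuda,
    so torch.version.hip is checked to rule those out.
    """
    try:
        import torch
    except ImportError:
        return False
    return torch.cuda.is_available() and torch.version.hip is None


def estimated_sampling_seconds(steps: int, its_per_second: float = 1.0) -> float:
    """Sampling time only: steps divided by iterations per second."""
    return steps / its_per_second


if __name__ == "__main__":
    print("NVIDIA CUDA GPU detected:", nvidia_cuda_available())
    for steps in (5, 6):
        print(f"{steps} steps: about {estimated_sampling_seconds(steps):.0f} s of sampling")
```

At the recommended 5 to 6 steps and about 1 it/s, sampling takes roughly 5 to 6 seconds per image.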