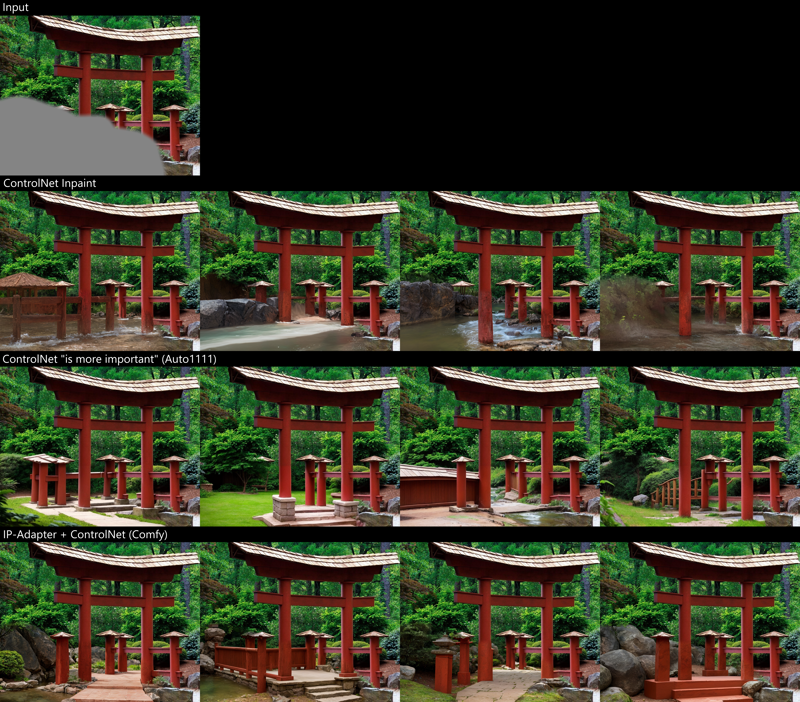

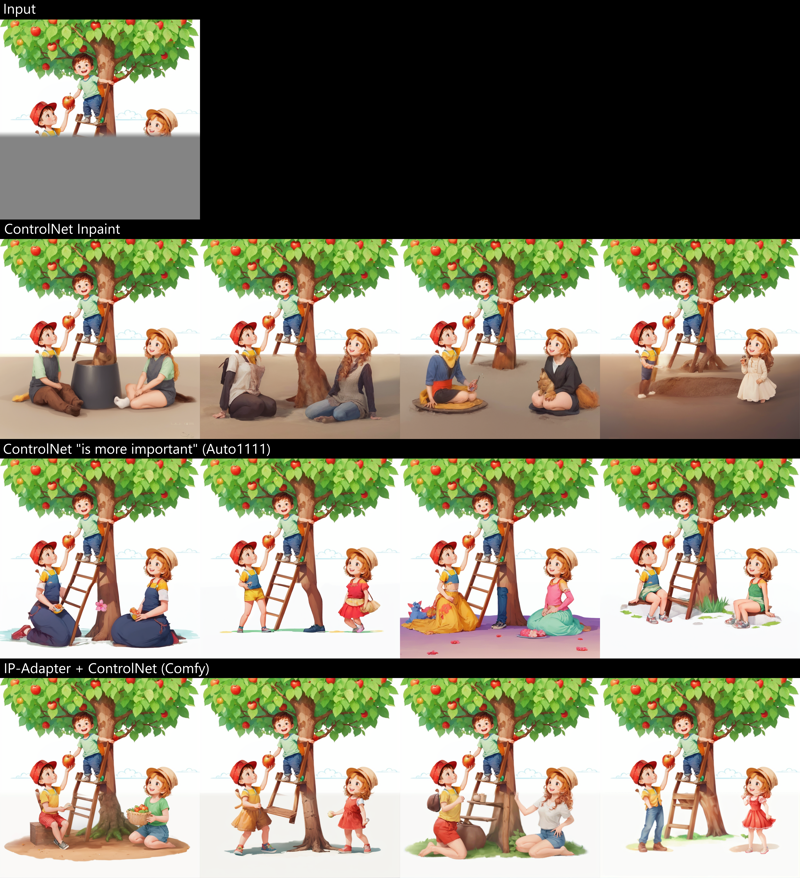

Promptless Inpainting Comparison

Promptless inpainting (also known as "Generative Fill" in Adobe land) refers to:

Generating content for a masked region of an existing image (inpaint)

100% denoising strength (complete replacement of masked content)

No text prompt! - short text prompt can be added, but is optional

Because outpainting is essentially enlarging the canvas and then inpainting the newly added region, it is covered here as well. This can be a very powerful tool to erase unwanted objects, exchange parts of an image or quickly extend the canvas with the press of a button.

The results are used to improve inpainting & outpainting in Krita by selecting a region and pressing a button!

Content

This document presents some old and new workflows for promptless inpaiting in Automatic1111 and ComfyUI and compares them in various scenarios.

Methods overview

"Naive" inpaint: The most basic workflow just masks an area and generates new content for it. Because SD does not really work well without a text prompt, the results are usually quite random and don't fit into the image at all.

ControlNet inpaint: Image and mask are preprocessed using inpaint_only or inpaint_only+lama pre-processors and the output sent to the inpaint ControlNet. This shows considerable improvement and makes newly generated content fit better into the existing image at borders. However without guiding text prompt, SD is still unable to pick up image style and content.

ControlNet image-based guidance (Auto1111): This method is adapted straight from this discussion. By choosing ControlNet is more important option, the inpaint ControlNet can influence generation better based on image content. Results are pretty good, and this has been my favored method for the past months. It is not implemented in ComfyUI though (afaik).

IP-Adapter + ControlNet (ComfyUI): This method uses CLIP-Vision to encode the existing image in conjunction with IP-Adapter to guide generation of new content. It can be combined with existing checkpoints and the ControlNet inpaint model. Results are very convincing!

Honorary mention, but not tested here: Inpaint Models. There is an inpaint version of the base SD1.5 model which improves results for inpaint scenarios. However this doesn't scale well for the hundreds of custom checkpoints out there, only very few of them come with an inpaint model. IMO this is too inflexible.

Comparison

I chose three reasonably challenging scenarios with large inpaint areas to compare the various methods. Smaller areas usually work better.

Torii - photo taken from prior promptless inpaint discussion.

Resolution: 1280x1024

Model: PhotonIllustration - outpainting scenario for illustration artwork (generated with SD).

Resolution: 1024x1024

Model: DreamShaper 7Bruges - background inpainting on photographic content (generated with SD).

Resolution: 1280x832

Model: Photon

Note that choice of SD model, sampler, etc. is not particularly important here. All workflows first generate an initial image at low resolution (512) and then do a "high-res" pass on the inpainted content (including suitable large parts of surrounding image). Detailed workflows are attached / at the end of this document.

Results

Non-cherry-picked, first 4 generations for all methods.

https://civitai.com/images/2159121?postId=527734 (full resolution)

Workflows

"Naive" inpaint: naive-inpaint.json

ControlNet inpaint: controlnet-inpaint-v3.json

ControlNet image-based guidance:

Negative prompt: bad quality, low resolution Steps: 20, Sampler: DDIM, CFG scale: 5.0, Seed: 1261144780, Size: 640x416, Model hash: ec41bd2a82, Model: photon_v1, Denoising strength: 0.4, NGMS: 0.2, ControlNet 0: "preprocessor: inpaint_only+lama, model: control_v11p_sd15_inpaint [ebff9138], weight: 1.0, starting/ending: (0.0, 1.0), resize mode: ResizeMode.INNER_FIT, pixel perfect: True, control mode: ControlNet is more important, preprocessor params: (-1, -1, -1)", Hires sampler: DPM++ 2M Karras, Hires prompt: "highres, 8k uhd", Hires upscale: 2.0, Hires steps: 20, Hires upscaler: Lanczos, Version: v1.5.0IP-Adapter inpaint: ip-adapter-inpaint-v4.json

Required custom ComfyUI nodes: