If you find our articles informative, please follow me to receive updates. It would be even better if you could also follow our ko-fi, where there are many more articles and tutorials that I believe would be very beneficial for you!

如果你觉得我们的文章有料,请关注我获得更新通知,

如果能同时关注我们的 ko-fi 就更好了,

那里有多得多的文章和教程! 相信能使您获益良多.

For collaboration and article reprint inquiries, please send an email to [email protected]

合作和文章转载 请发送邮件至 [email protected]

by: ash0080

Preface

I have been hesitating about whether to write a LoRa training series. One reason is that when I started learning about the training, I came across many related articles, and to be honest, the experience was very unpleasant. Most of the articles or videos were filled with errors and empiricism (usually only applicable to certain specific types), or even nonsense. However, looking at it from another perspective, this also indicates that describing LoRa training is inherently difficult, and the tools and algorithms are constantly evolving, so what may be the correct parameters today might not be applicable tomorrow. Despite this, these experiences have made me doubt whether it is possible to teach LoRa training effectively through tutorials, and that is why I have been hesitating to start writing.

However, as the Top-Ranked writing team on civitai, how could AIGARLIC not touch on the topic of LoRa Training? Therefore, a professional trainer will be joining us soon.She will bring us a comprehensive professional course on business-level model training, in-depth analysis, and optimization. Currently, this series is being updated on our Ko-fi page, and we welcome you to follow us there for the latest updates.

There have been many articles on how to organize training datasets, but in Hazel's article, she shares a method for constructing a scientific tagging system. What sets it apart is the highly rational approach used to explore the balance issue of image quantity. It is a professional and insightful contribution.

As an AIGC beginner, I also plan to shamelessly share a completely different perspective on the LoRa training series. If previous tutorials were like classroom lectures, this series will be more like your laboratory operation guide. I don't plan to provide too many summaries or tell you definite conclusions. I only want to share how I conducted experiments, hoping that it will be a meaningful attempt.

————————————————————————————

basic_shape_grey

The training set consists of three grayscale shapes on a white background, with only one tag: "myshape"

Batch generation, with only the trigger word:

Several observations can be made:

Gray color has been learned.

Circular shape has been learned.

White background has not been learned.

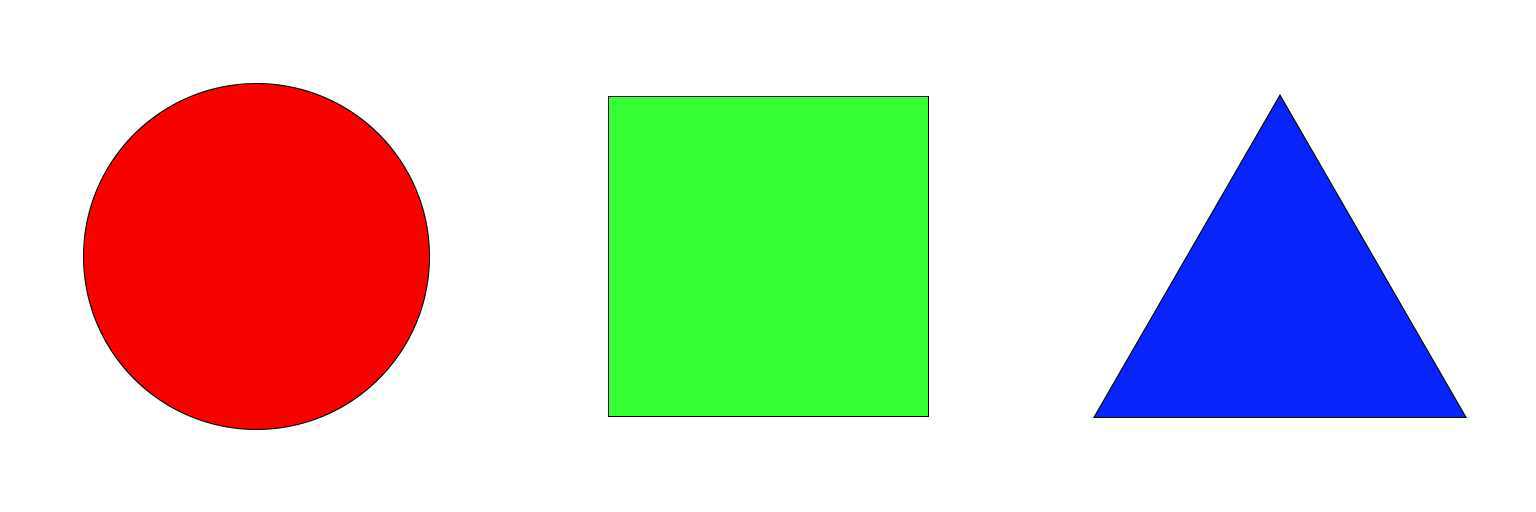

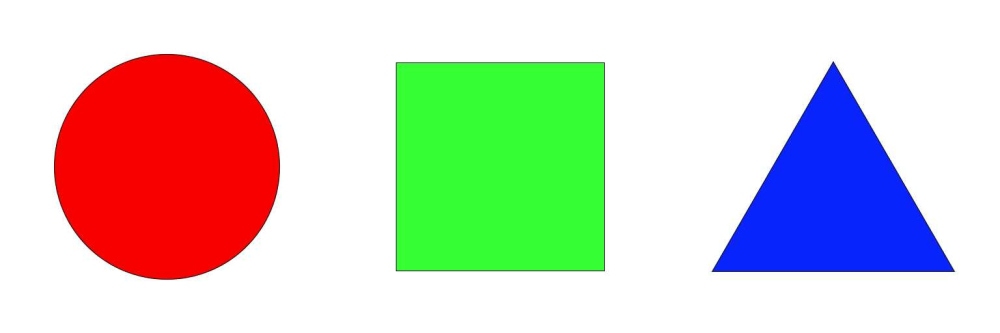

base_shape

This time, the training set consists of three primary-colored geometric objects, where each image has three elements: shape, outline, and color.

Compared to the previous example, the most significant difference is the introduction of color.

base_monster

Using the same training set, the only change is the keyword, which is now "monster."

It's difficult to discern any specific patterns. It appears that the training intensity may not be sufficient to distort the original word adequately.

However, we can still observe the presence of circles, squares, and triangles (composition).

base_monster_colored

In this variation, the tags are expanded to include color: red/green/blue monsters.

When using only the keyword "monster," there is little difference compared to the previous model. However,

red monster

green monster

blue monster

Something interesting happens when more specific tags are used. The generated content undergoes significant changes, especially in the case of the green square. It seems that SD (StyleGAN Discriminator) understands perspective, and in its understanding, squares and triangles may be the same thing in perspective relationship.

base_blur

Let's try using blur, and the only tag: "monster"

Compared to "base_monster," the edges of the characters do not become blurry. Instead, an atmospheric and depth-of-field effect is introduced. This might come as a surprise, as blur can be quite useful in adding ambiance to an image.

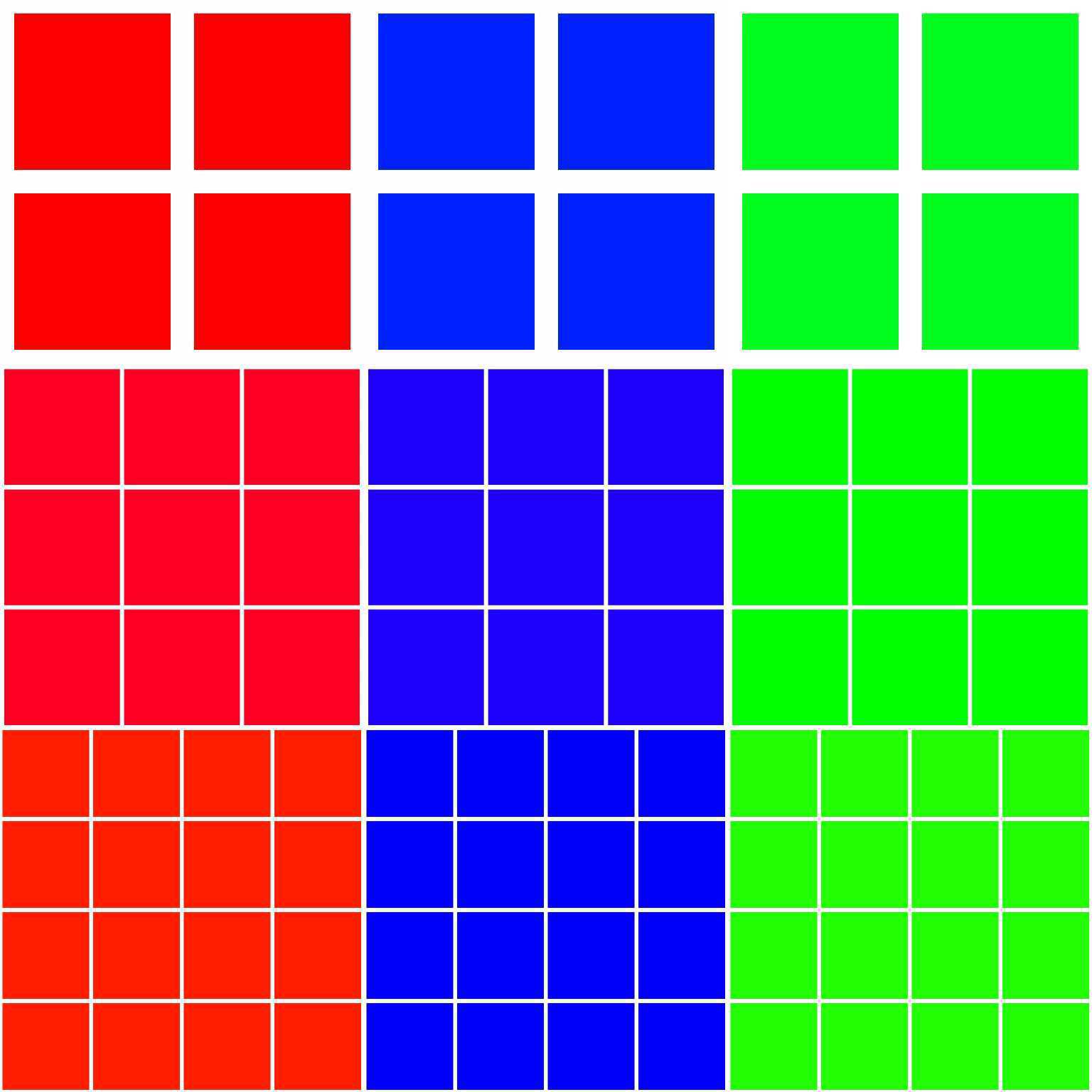

base_grid

The only tag here is "grid layout."

Interestingly, LoRa has not only drawn 2x2, 3x3, and 4x4 grids but even generated 5x5 and 6x6 grids. It's a fascinating experiment.

However, in most cases, unless the model is overfitting, the learned features are unlikely to be represented in the exact way we imagine. Instead, they become integrated with existing features.