This text was translated using AI.

09.20 - Some details in the Note and How to use sections have been revised.

1. Introduction

This workflow uses Attention Couple for regional prompting.

It runs in two phases, and the masks must be set manually.

I understand that not running everything in a single queue is less convenient, but splitting the process is meant to produce better results.

If you have any ideas for improvements to this workflow, please let me know.

2. Note

This workflow was created with ComfyUI version 0.3.59.

This workflow may require a relatively large amount of VRAM. If you don't have enough, try launching ComfyUI with an option such as --lowvram.

The workflow must be executed in two phases.

3. How To Use

3-1. Initial Setup (Shortcut 0)

Press the 0 key to go to the nodes that load the ControlNet, Detailer, and other required models.

Please check the screenshot and set each item accordingly.

3-2. Main Settings (Shortcut 1)

This group handles the basic setup for image generation.

Here you choose the model, write your prompts, adjust various values, and select the LoRAs that will be applied to the whole image.

Set the image size in the Aspect Ratio node. To enter the width and height manually, set its third dropdown (Preset) to Custom.
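The Aspect Ratio node computes the size for you; the sketch below only illustrates, under stated assumptions, how a width/height pair for a given ratio might be derived if you enter values manually. The roughly 1-megapixel target and the rounding to multiples of 8 (Stable Diffusion latents are 1/8 of the pixel resolution) are assumptions, not the node's actual algorithm, and `size_for_ratio` is a hypothetical helper.

```python
import math


def size_for_ratio(ratio_w: int, ratio_h: int,
                   target_pixels: int = 1024 * 1024,
                   multiple: int = 8) -> tuple[int, int]:
    """Hypothetical helper: width/height near target_pixels for a given aspect ratio."""
    # Solve width * height = target_pixels subject to width / height = ratio_w / ratio_h.
    height = math.sqrt(target_pixels * ratio_h / ratio_w)
    width = height * ratio_w / ratio_h

    # Snap each side to the nearest multiple of `multiple` (assumed latent constraint).
    def snap(x: float) -> int:
        return max(multiple, int(round(x / multiple)) * multiple)

    return snap(width), snap(height)


print(size_for_ratio(1, 1))   # (1024, 1024)
print(size_for_ratio(16, 9))  # (1368, 768)
```

If you switch Preset to Custom, values that are multiples of 8 are a safe choice for SD-family models.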

3-3. Generate or Load Base Image (Shortcut 2)

Next to the Main Settings, you’ll find nodes for generating or loading a base image.

First, bypass all groups in Phases 2 and 3. Then choose one of the two methods below:

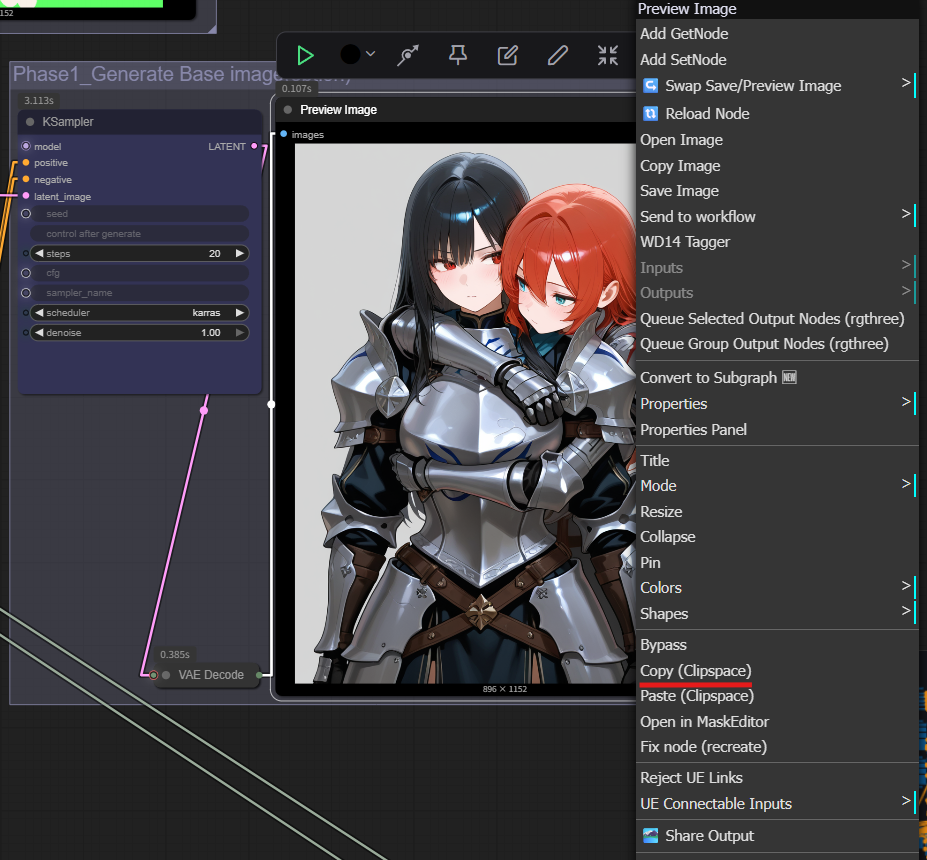

Generate a base image: Enable the “generate base image” group (which includes a KSampler) and run the queue once. A base image will appear in the preview next to the KSampler.

If you want to use ControlNet, activate the “Base image ControlNet (option)” group and load the reference image for ControlNet through its Load Image node.

Load an image: Keep the “generate base image” group bypassed and run the queue once. The loaded image will be resized to the size set in Main Settings and shown in the preview.

3-4. Copy & Paste Base Image (Shortcuts 4 and 5)

Right-click the preview image node of the base image → choose Copy (clipspace).

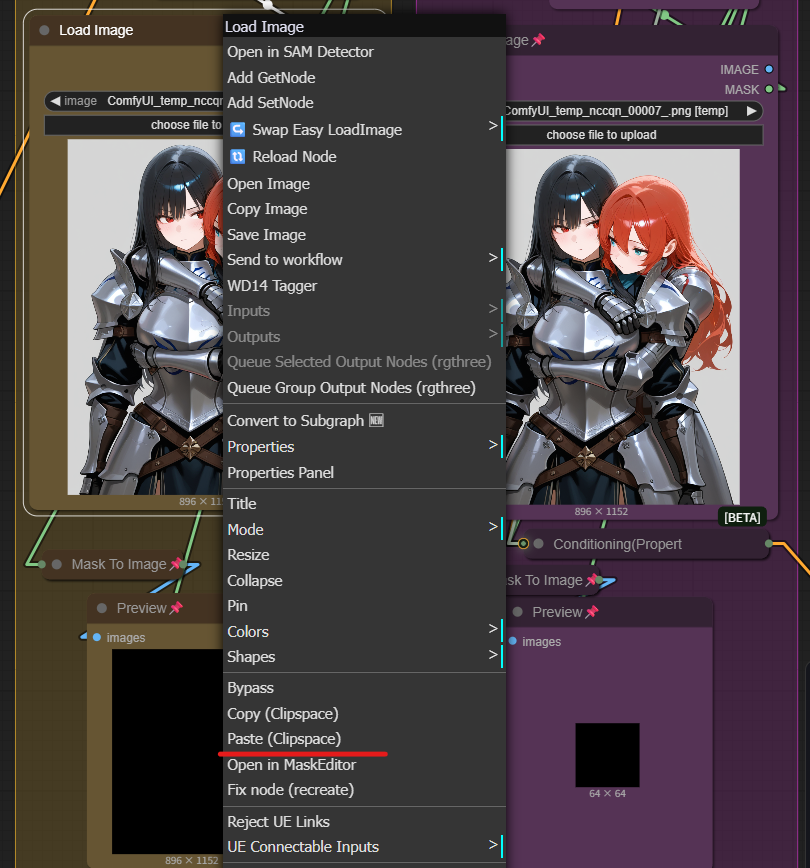

Then, right-click the Load Image nodes inside the character groups (Phase 2) and the “generate regional image” group → choose Paste (clipspace).

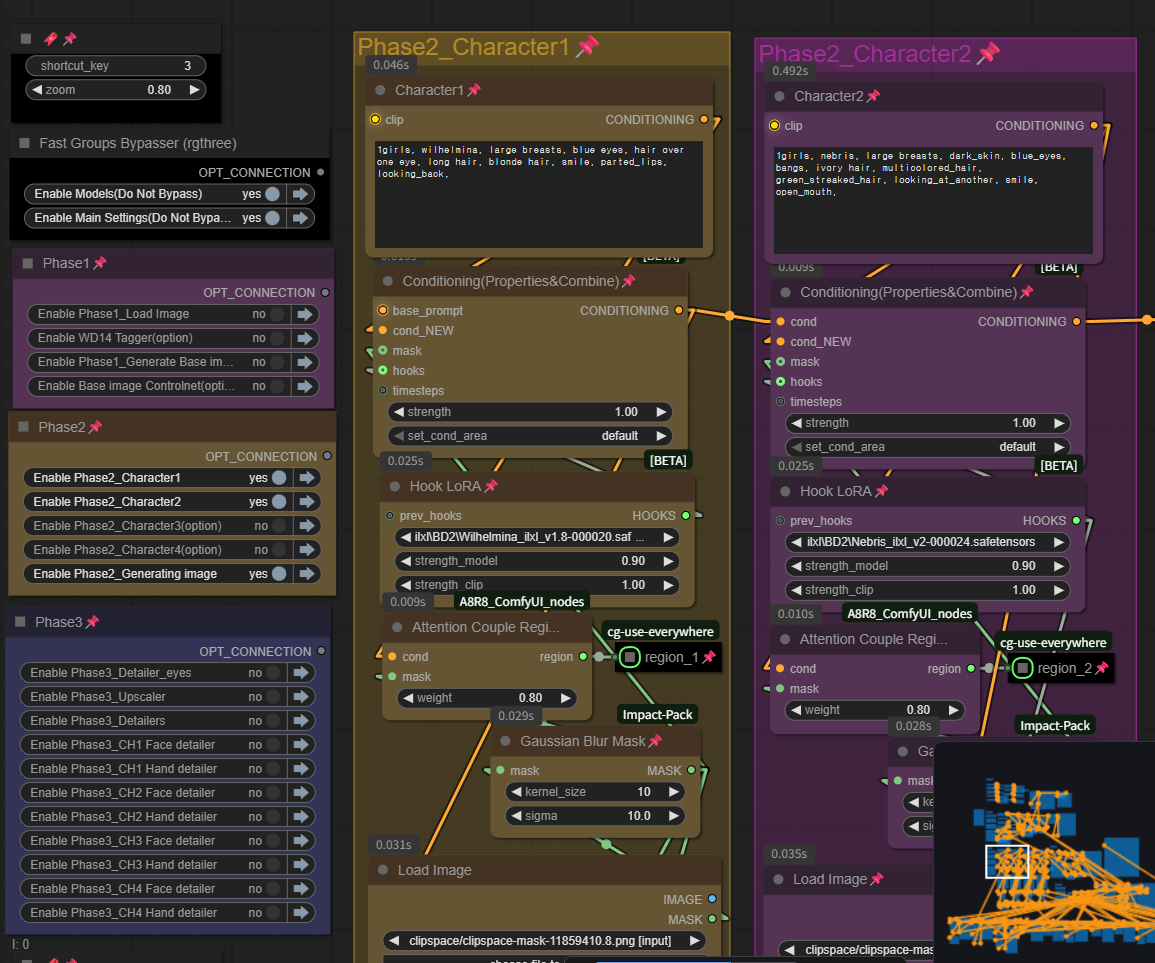

3-5. Character LoRA & Prompt Setup (Shortcut 3)

Bypass all groups in Phase 1.

In Phase 2, activate as many character groups as you need, plus the “generate image” group.

For each character group, enter the character prompt and apply the LoRA you want.

If you don’t want to use a character LoRA, or if VRAM is limited, bypass the hook LoRA node.

3-6. Mask Editing for Each Character (Shortcut 4)

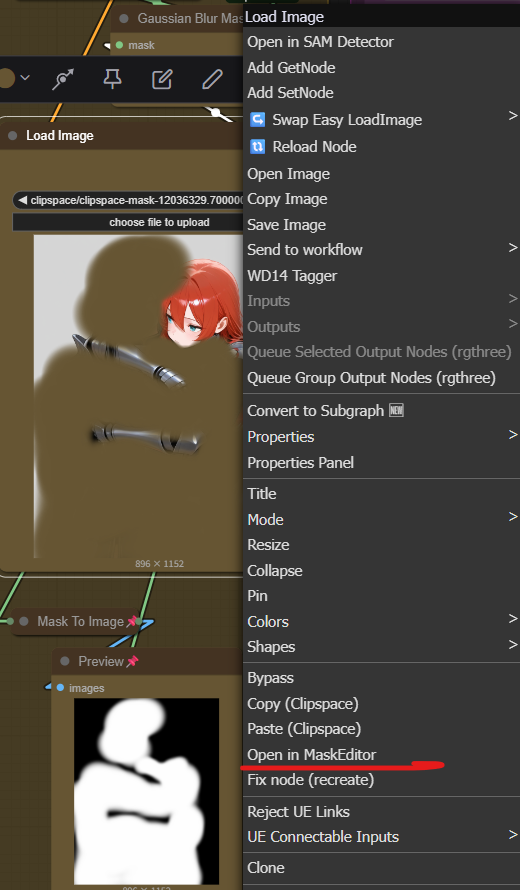

Right-click the Load Image node in each character group → select Open in MaskEditor and set up the mask for that character.

Masks don’t need to be precise; a rough outline of each character’s region is enough.

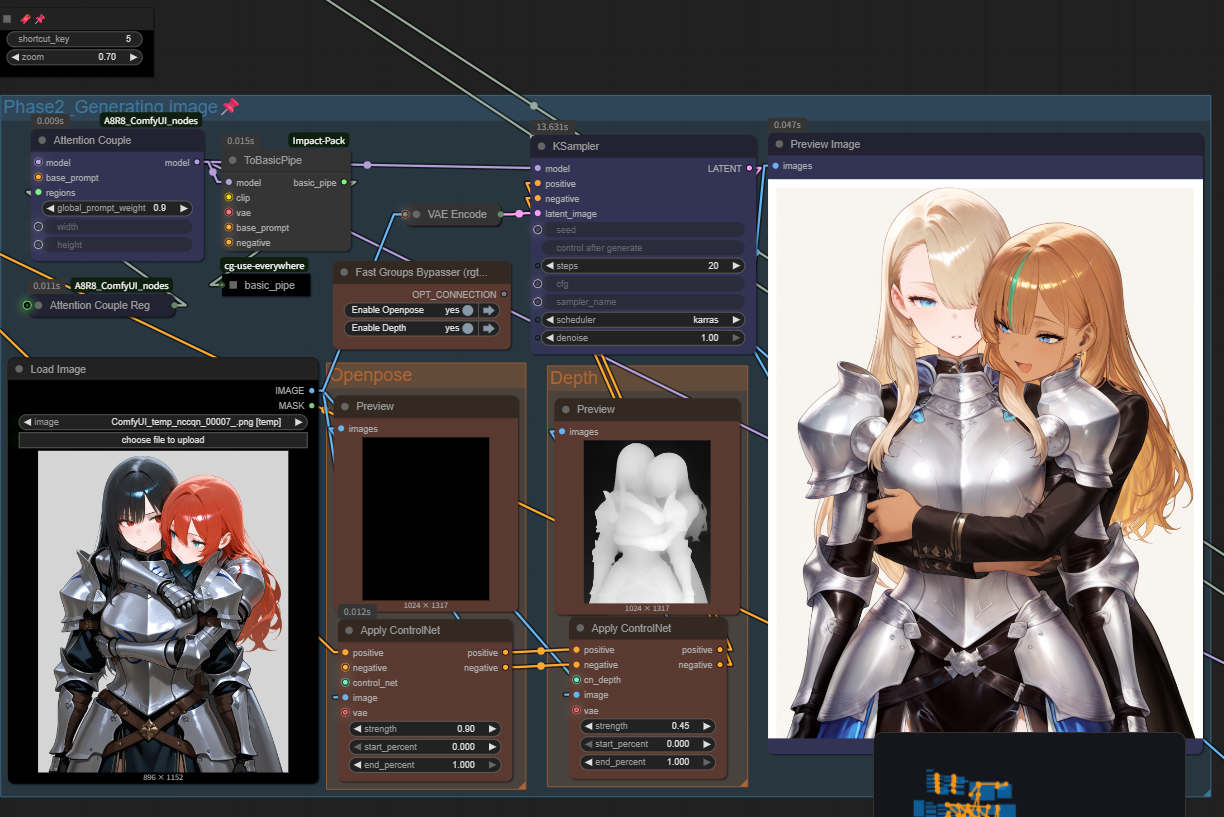

3-7. Generate Regional Images (Shortcut 5)

Once you’ve set prompts, LoRAs, and masks, you’re ready to generate regional images.

Run the queue to generate images. You can adjust the ControlNets and the denoise values of the KSampler to achieve the results you want.

If you want to use the upscaler and detailers, enable Phase 3 as well.

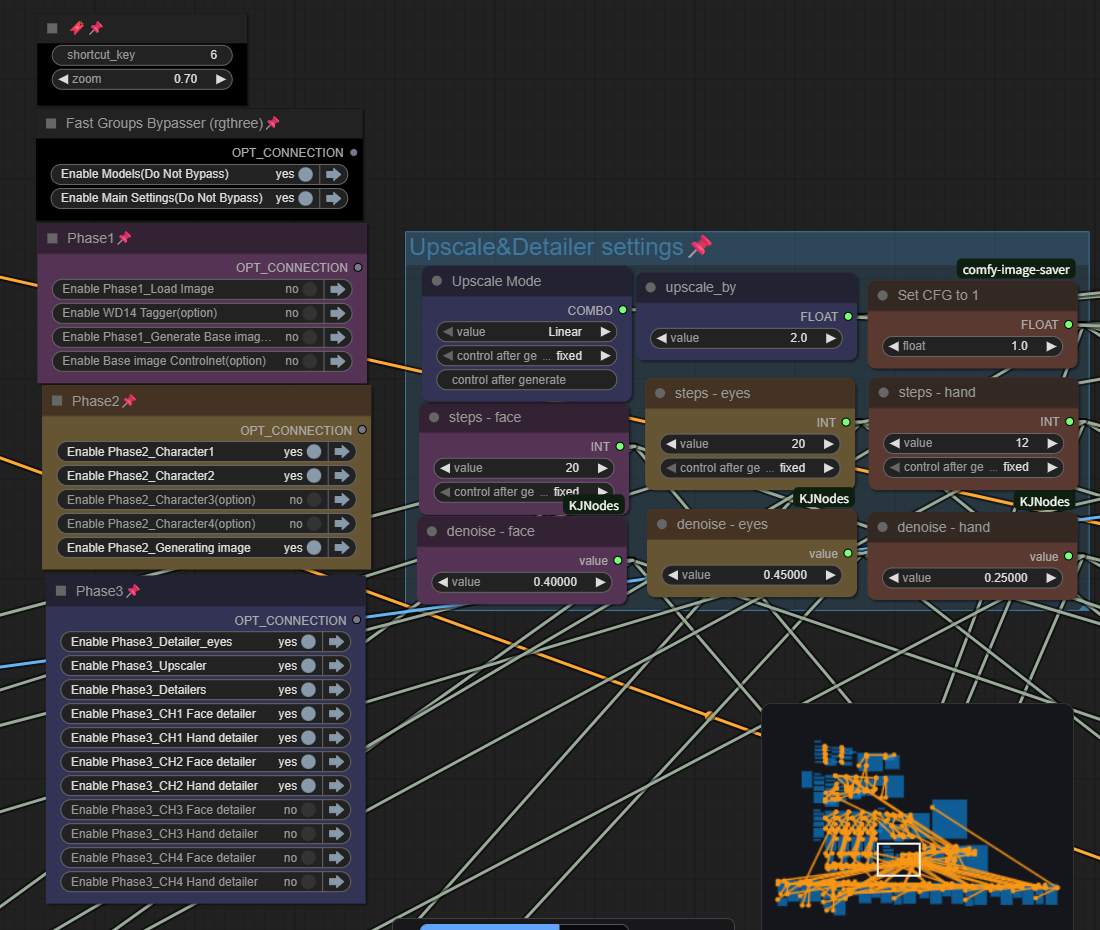

3-8. Detailer & Upscaler Settings (Shortcut 6)

The upscaler is set to 2× upscale by default.
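To give a rough idea of what the default 2× setting implies, the sketch below computes the upscaled size and approximately how many tiles a tiled upscaler such as Ultimate SD Upscale would redraw in Linear mode. The 512×512 tile size is an assumption (check the tile width/height values in the workflow), padding and seam fixing are ignored, and `upscale_plan` is a hypothetical helper.

```python
import math


def upscale_plan(width: int, height: int, scale: float = 2.0,
                 tile_w: int = 512, tile_h: int = 512) -> dict:
    """Hypothetical helper: output size and approximate tile grid for a tiled upscale."""
    out_w, out_h = int(width * scale), int(height * scale)
    # Tiles needed to cover the upscaled image (assumed Linear layout, no padding).
    cols = math.ceil(out_w / tile_w)
    rows = math.ceil(out_h / tile_h)
    return {"size": (out_w, out_h), "grid": (cols, rows), "tiles": cols * rows}


print(upscale_plan(1024, 1024))
# {'size': (2048, 2048), 'grid': (4, 4), 'tiles': 16}
```

More tiles mean more sampling passes, so the tile count is a quick way to estimate how much longer Phase 3 will take.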

If you only want to upscale, without Ultimate SD Upscale redrawing the image, set Upscale Mode to None.

Other nodes are arranged so you can easily control steps, denoise, and related settings in one place.

4. Sample images