Idea

I've had this idea for a while, but AI stuff is way beyond me, and I don't even know how I would begin to implement it properly. For the time being, I've created a crude "next best thing" to demonstrate my idea.

As we all know, LoRAs (and their cousins) are the go-to way to create customized content, whether it be characters, outfits, concepts, etc. But while they are known for their versatility, they aren't known for being easy to train, or for their precision and accuracy when it comes to the finest details. (Unless I've just been training them wrong lol.)

So, my idea: 'Texture Cards', a fancy name for 'an external image you can attach to your generation that, instead of relying on memory (like a trained LoRA), pulls directly from the source image during generation'.

It's basically just ControlNet, but instead of poses, it's clothing.

Hypothetically, because the diffusion process would somehow (I have no clue how) pull pixels directly from an imported image, the end result could be exactly as precise and detailed as the input.

This also means you can create custom outfits/textures without having to train an entire LoRA on them, similar to how you can use ControlNet to create custom poses without having to train an entire LoRA on them.

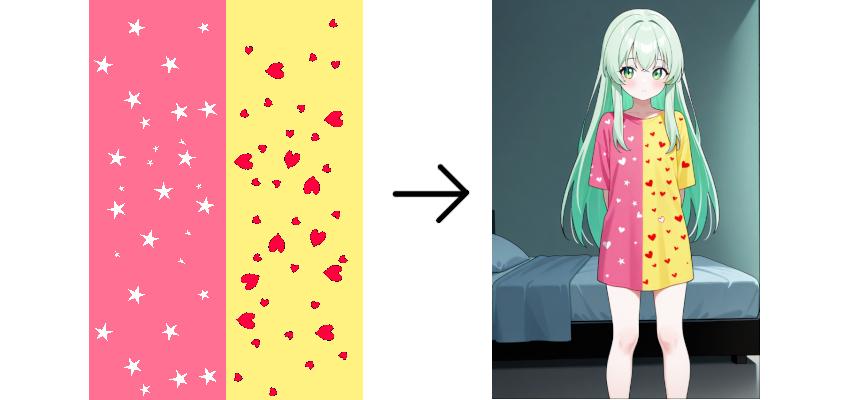

Demonstrations

So, what does that mean in practice? Well, let me demonstrate.

I want a hyper-specific shirt: half pink, half yellow, with red hearts on the yellow side and white stars on the pink side.

Normally, you wouldn't be able to create this easily, even with Regional Prompting.

left half prompt: Score_9, score_8_up, score_7_up, source_anime, cute, green hair, green eyes, pink shirt with white stars, [[pink shirt]], [[white stars]], [[star texture]], standing upright

right half prompt: Score_9, score_8_up, score_7_up, source_anime, cute, green hair, green eyes, yellow shirt with red hearts, [[yellow shirt]], [[red hearts]], [[heart texture]], standing upright

global prompt: bed, source_anime, score_9, score_8, score_7, 1girl, solo, standing upright

I will admit I didn't expect it to split the shirt perfectly; I was expecting the two colours to blend together. Regardless, notice how there are zero stars anywhere, only one big heart, and where did "SCRE" come from? (Also, why is she not in bed lol. Not relevant at the moment.)

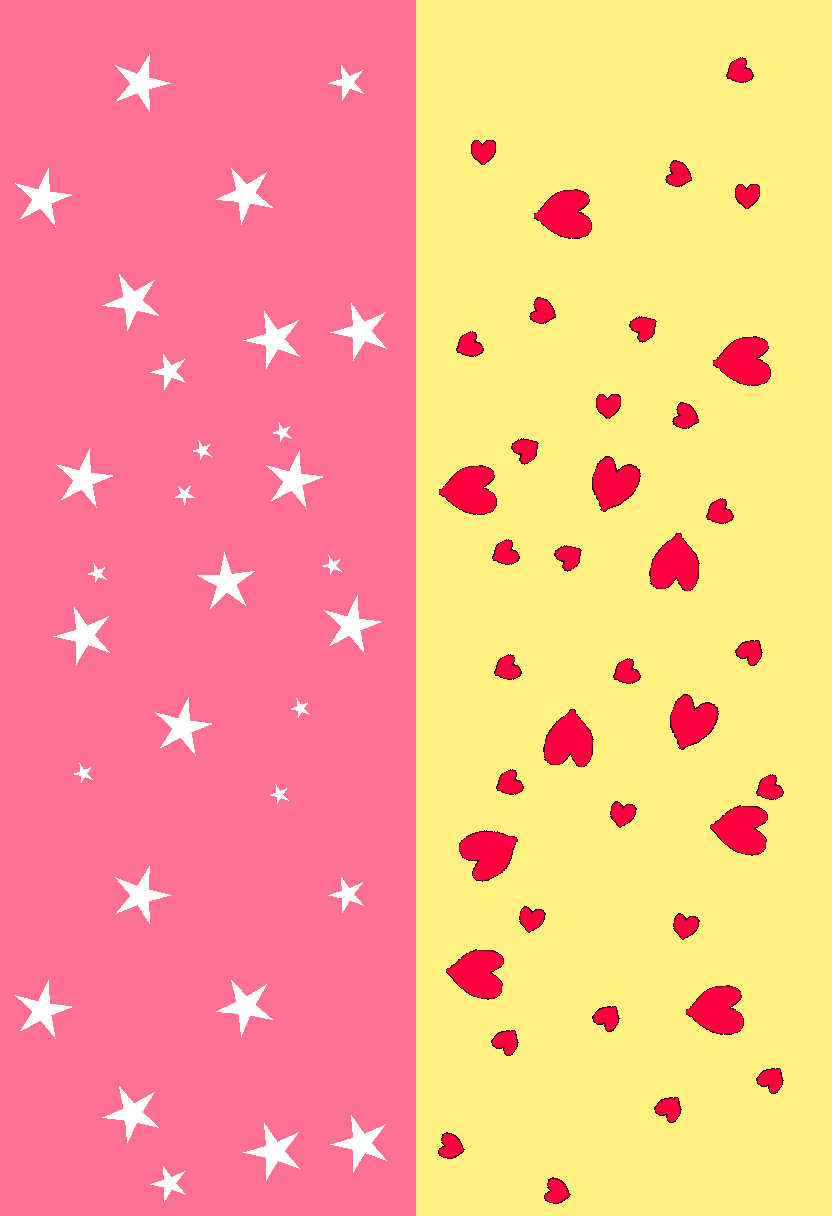

Now, what if I try the same thing without regional prompting, using my (crude imitation of a) Texture Card instead:

Score_9, score_8_up, score_7_up, source_anime, cute, pale green hair, green eyes, 1girl, solo, standing upright, bed, [[[pink shirt]]], [[[yellow shirt]]], [[[stars]]], [[[hearts]]]

Along with this PNG:

The problem with this being a prototype is that there are two possible outcomes. Either it looks like garbage:

(Notice how it's hardly shaded at all and looks flat.) Or it starts losing its precision:

(Which defeats the entire purpose of the texture card. Though once again, this is a demonstration, not even on the same planet as the final product.)

But you get what I'm going for, right? Trying to make a LoRA for such a specific outfit would take ages, since you'd have to manually create all the data, or spend seven decades hunting for random posts that, for some reason, use this exact design.

This doesn't only work with outfits, though. Let's say I wanted to generate a girl in front of the CN Tower (woo Canada!). There are no CN Tower background LoRAs as far as I can tell. So...

I took this image I 'borrowed' from Expedia, slapped it into the crude Texture Card workflow, and badda bing, badda boom:

Score_9, score_8_up, score_7_up, source_anime, cute, red hair, blue eyes, 1girl, solo, standing upright, peace signs, finger v, arms up, smiling, cityscape

Close enough. And yes, I had to edit the image to move the CN Tower over; the workflow does not do that by itself.

Other

So yeah, that's basically my idea. I do feel there should be additional settings if someone actually implements Texture Cards properly (more like ControlNet).

Basically, just a strength slider. A strength of 1 means 'copy it pixel for pixel', 0.9 is closer to 'take the image, but make it anime', 0.8 is 'take the image, make it anime, but also let it vary from the source image', etc.

In other words, what a normal strength slider does.

A few more notes on my ideal vision of a Texture Card: like a LoRA, it should still work with shading and whatnot. For example, at a strength of 1, the result might look more like the first demo image. The second image isn't a strength of 0.9, though; I'd say it's more like 0.95: very minimal shading, and it looks less like a shirt. At a strength of 0.9, you shouldn't even be able to tell a Texture Card has been used. It should look exactly like a normal generation (shading, composition, etc.), but with a specific texture.

Crude Demo Workflow

As you can probably guess from the overall green theme, my prototype literally just uses a greenscreen.

The workflow, as well as the custom node, has been attached to this article; the rest of the custom nodes and such you can get from ComfyUI Manager. And the LoRA is here

Using an Advanced K-Sampler, generate 15 of 25 steps with a greenscreen LoRA and greenscreen tags. Decode the image, replace the greenscreen, and re-encode the image. Then feed it into a second Advanced K-Sampler with the prompt you actually want, starting at step 12 and ending at step 25.
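For anyone wiring this up, here is a rough sketch of just the step and noise settings for the two passes, written as Python dicts keyed by the input names of ComfyUI's built-in KSamplerAdvanced node. The step numbers (15 and 12 of 25) come from the paragraph above. The `add_noise` / `return_with_leftover_noise` choices are an assumption about a sensible setup, not necessarily what the attached workflow uses, and `two_pass_settings` is a hypothetical helper name. Model, prompts, cfg, sampler, scheduler, and latent inputs are left out.

```python
def two_pass_settings(total_steps=25, first_end=15, second_start=12, seed=0):
    """Hypothetical helper: step/noise inputs for the two KSamplerAdvanced passes.

    Only step- and noise-related inputs are included; model, positive/negative
    prompts, cfg, sampler_name, scheduler and latent_image are wired separately.
    """
    if not 0 <= second_start <= first_end <= total_steps:
        raise ValueError("expected 0 <= second_start <= first_end <= total_steps")

    # Pass 1: greenscreen LoRA + greenscreen tags, stopped early.
    greenscreen_pass = {
        "add_noise": "enable",                    # start from fresh noise
        "noise_seed": seed,
        "steps": total_steps,
        "start_at_step": 0,
        "end_at_step": first_end,
        "return_with_leftover_noise": "disable",  # last step goes to zero noise, so the decode is clean to key
    }

    # Pass 2: the prompt you actually want, on the re-encoded, texture-swapped latent.
    final_pass = {
        "add_noise": "enable",                    # re-noise to the level of second_start
        "noise_seed": seed,
        "steps": total_steps,
        "start_at_step": second_start,            # overlaps pass 1 so the pasted texture gets reworked
        "end_at_step": total_steps,
        "return_with_leftover_noise": "disable",
    }
    return greenscreen_pass, final_pass


first, second = two_pass_settings()
print(first["end_at_step"], second["start_at_step"])  # 15 12
```

Keeping `steps` identical in both passes matters: `start_at_step` and `end_at_step` index into the same noise schedule, so the second pass picks up at a noise level the first pass actually went through.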

The biggest problem with this prototype is that the greenscreen replacement has no context of what's in the image, so it can't adapt to perspective, poses, etc. The reason I specified "standing upright" was so the shirt would face forward. If I had left that out and SD randomly generated her leaning to one side, the dividing line in the final image would run straight down relative to the camera, but at an angle relative to the character. Once again, this shouldn't be an issue with a properly coded, properly implemented Texture Card system, since it, like a standard LoRA, would have the context of the image and be able to adjust accordingly.
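To make the "no context" problem concrete, here is a minimal chroma-key sketch in plain Python. It is not the attached custom node; it is a generic greenscreen replacement under a few assumptions: images are nested lists of (r, g, b) tuples with 0-255 channels, the texture is already the same size as the render, and a pixel counts as greenscreen when green beats both red and blue by a hypothetical `margin` of 40. Each pixel is decided purely by its own colour, which is exactly why it can't follow a pose or perspective.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (r, g, b), each 0-255
Image = List[List[Pixel]]      # rows of pixels


def is_green(pixel: Pixel, margin: int = 40) -> bool:
    """A pixel counts as greenscreen if green beats both red and blue by `margin`."""
    r, g, b = pixel
    return g - max(r, b) > margin


def replace_greenscreen(render: Image, texture: Image, margin: int = 40) -> Image:
    """Swap in texture pixels wherever the render is green; keep everything else.

    Purely per-pixel: it has no idea what a pixel belongs to, so there is no
    perspective or pose handling. The texture must already match the render's size.
    """
    if len(render) != len(texture) or any(len(a) != len(b) for a, b in zip(render, texture)):
        raise ValueError("render and texture must have the same dimensions")
    return [
        [tex if is_green(px, margin) else px for px, tex in zip(render_row, texture_row)]
        for render_row, texture_row in zip(render, texture)
    ]


render = [[(20, 200, 30), (200, 180, 150)]]     # greenscreen pixel, skin-tone pixel
texture = [[(255, 192, 203), (255, 255, 0)]]    # pink, yellow
print(replace_greenscreen(render, texture))     # [[(255, 192, 203), (200, 180, 150)]]
```

A key this simple will also replace anything green on the character itself (hair, eyes) if it clears the margin, so the threshold is worth tuning against the prompt.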

A very, very crude implementation of the 'Strength' slider is simply choosing which step the first pass ends at.

For example, a strength of 1 would just be: generate steps 0-23, replace the greenscreen, then finish with steps 22-25.

There is a minimum step count, though, since the greenscreen LoRA needs to kick in before you can detect and replace the greenscreen. This would not be an issue with Texture Cards, since the diffuser itself would 'know' from step 0 (like ControlNet).
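One way to picture that minimum: after the first pass, decode the image and measure how much of it is actually green. The sketch below does that in plain Python. It is a hypothetical check, not part of the workflow described here; the 40 `margin` and 0.2 `min_fraction` values are arbitrary assumptions, and images are assumed to be nested lists of (r, g, b) tuples.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (r, g, b), each 0-255


def green_fraction(image: List[List[Pixel]], margin: int = 40) -> float:
    """Fraction of pixels whose green channel beats both red and blue by `margin`."""
    pixels = [px for row in image for px in row]
    if not pixels:
        return 0.0
    green = sum(1 for r, g, b in pixels if g - max(r, b) > margin)
    return green / len(pixels)


def greenscreen_ready(image: List[List[Pixel]], min_fraction: float = 0.2, margin: int = 40) -> bool:
    """Hypothetical check: has enough greenscreen formed to be worth replacing?"""
    return green_fraction(image, margin) >= min_fraction


early = [[(90, 100, 95), (80, 85, 90)], [(100, 95, 90), (20, 210, 40)]]  # mostly muddy, one green pixel
print(green_fraction(early), greenscreen_ready(early))  # 0.25 True
```

If the first pass stops too early, most pixels are still muddy and the fraction stays low, which is the practical reason a minimum step count exists.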

By default, I just slapped in some math nodes: the first K-Sampler generates 70% of the steps, and the second K-Sampler starts from 60%.
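The percentage math amounts to something like the sketch below, with `steps_from_percent` as a hypothetical helper name. It assumes integer percentages and floor rounding; the actual math nodes may round differently, so treat the exact step indices as illustrative.

```python
def steps_from_percent(total_steps: int, first_end_pct: int = 70, second_start_pct: int = 60):
    """Turn the two percentages into KSamplerAdvanced step indices.

    Uses integer floor division, so fractional steps round down (an assumption;
    the real math nodes may round differently).
    """
    first_end = total_steps * first_end_pct // 100
    second_start = total_steps * second_start_pct // 100
    if second_start > first_end:
        raise ValueError("second pass should not start after the first pass ends")
    return first_end, second_start


print(steps_from_percent(25))  # (17, 15)
print(steps_from_percent(30))  # (21, 18)
```

Working in integer percentages avoids float surprises such as a product landing just under a whole number and flooring one step too low.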