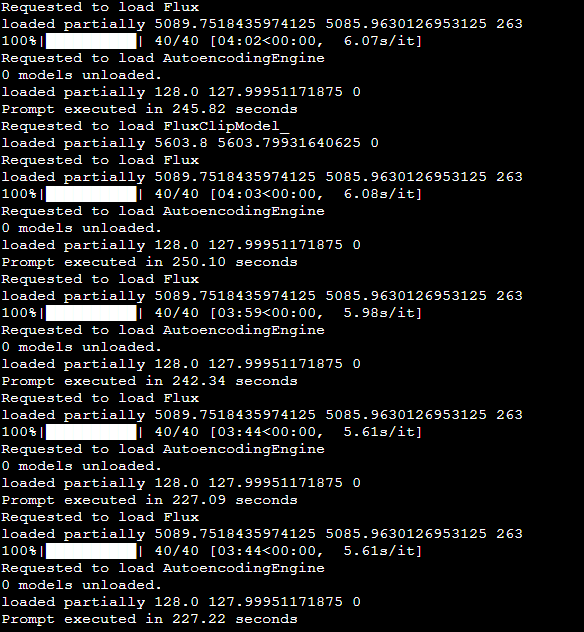

***With help and advice from the community, I’m currently experimenting with 2–3 new nodes on top of this simple Comfy + Flux workflow. The goal hasn’t changed: stick with the big model to get a strong baseline, and then layer in nodes or settings that boost detail and sharpness—without blowing up generation times. That balance is critical when you’re on low-spec hardware (8GB VRAM in my case), where every extra second counts. Once I’m done testing, I’ll put together a follow-up article and share the updated workflow as a clean, ready-to-download package.***

Intro

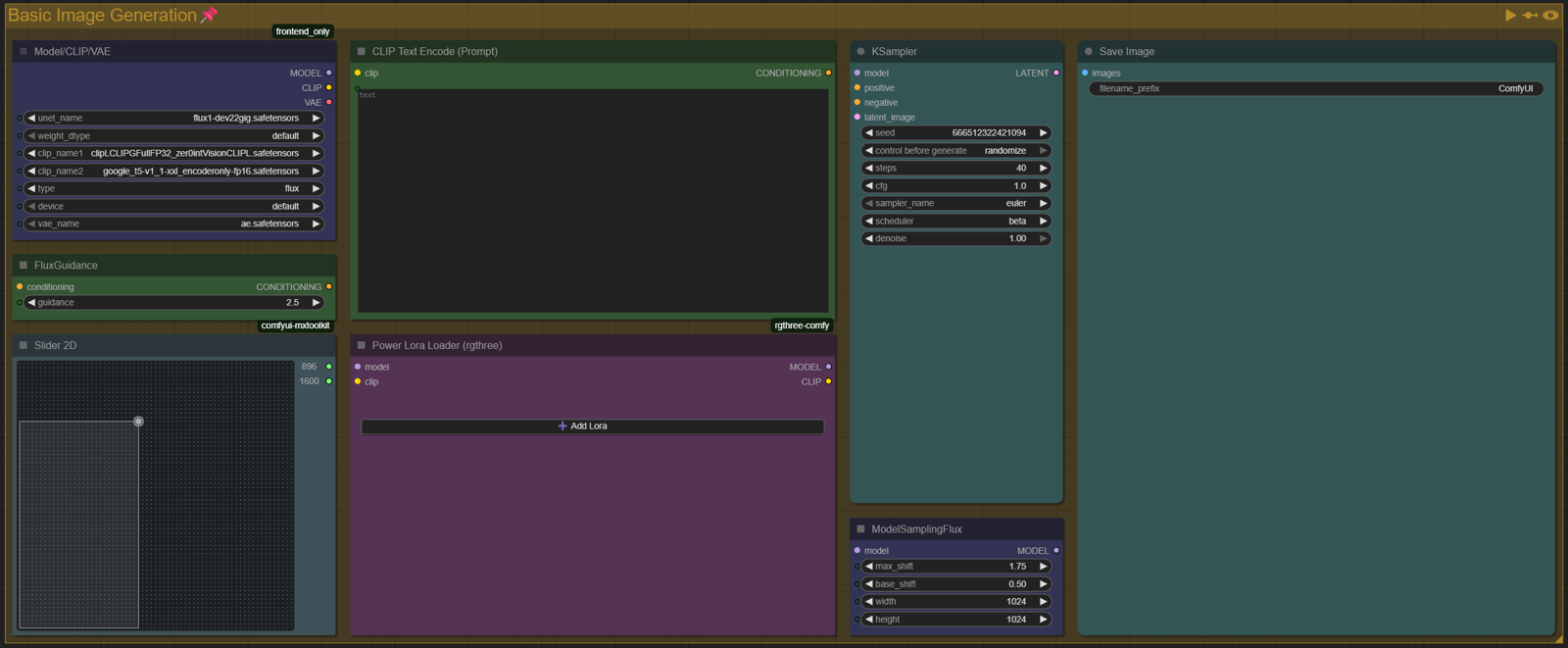

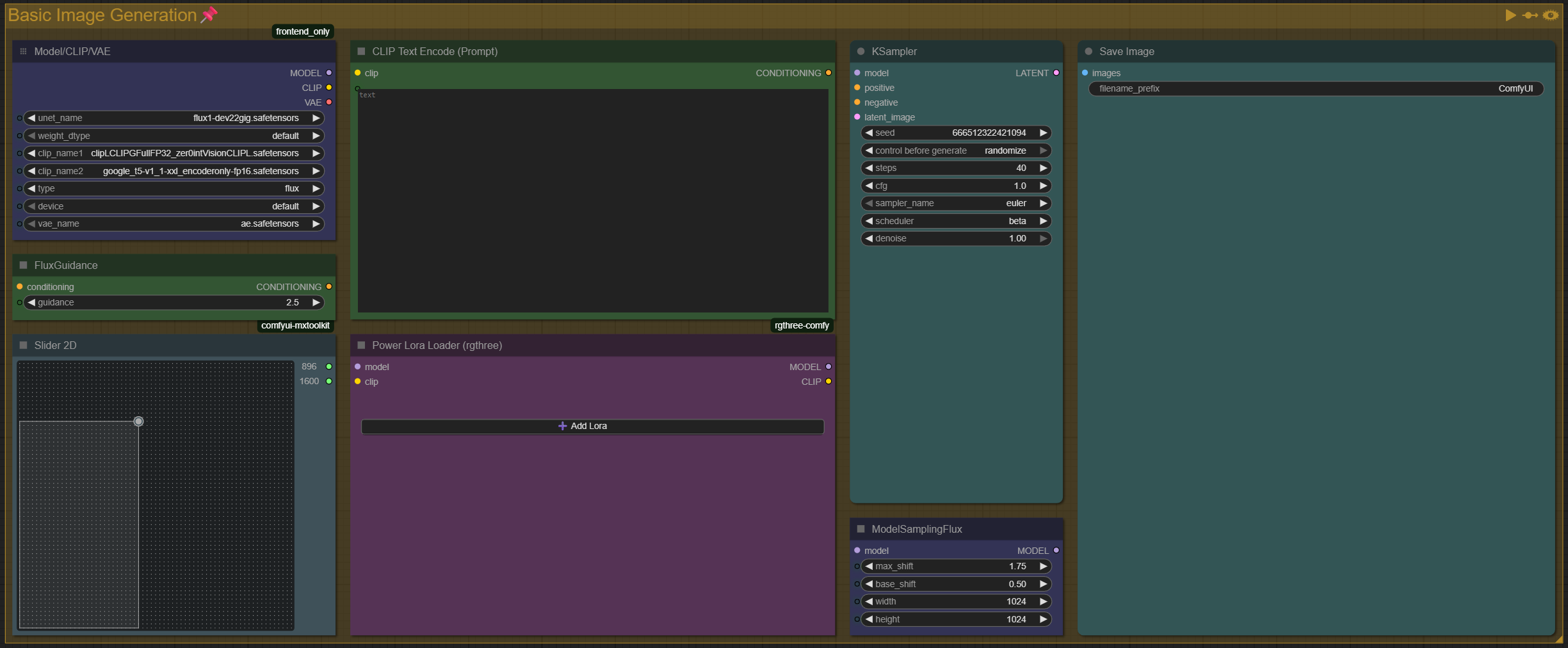

Here’s how I generate my images, plain and simple.

I run everything locally in ComfyUI with a minimal Text2Img workflow. No upscalers, no ADetailer, no post-processing. What you see in my showcases is always the raw output.

System specs:

Processor: Intel(R) Core(TM) i7-9700K CPU @ 3.60GHz

Motherboard: Gigabyte Z390 AORUS MASTER

GPU: NVIDIA GeForce RTX 2080 SUPER (8GB VRAM)

RAM: 64.0 GB DDR4

Model, ClipL, T5 XXL, Sampler, Scheduler, CFG, Flux Guidance and ModelSamplingFlux:

Sampler: Euler

Scheduler: Beta

CFG = 1

Flux Guidance: 2.3 to 3.5+

max_shift = 1.75

base_shift = 0.50

That’s the backbone. From there, it’s just prompts, LoRAs, and a lot of trial and error until things click.
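For anyone who drives ComfyUI from a script, here is a minimal sketch of how these values could be written into a workflow exported in API format. It assumes the workflow uses the KSampler, FluxGuidance and ModelSamplingFlux nodes. The node IDs, the trimmed example workflow and the `apply_settings` helper are made up for illustration, and a real export carries many more inputs.

```python
import copy

# Backbone values from the list above, keyed by ComfyUI node class name.
# Guidance is set to 2.5 here; anything in the 2.3 to 3.5+ range fits the list.
BACKBONE = {
    "KSampler": {"sampler_name": "euler", "scheduler": "beta", "cfg": 1.0},
    "FluxGuidance": {"guidance": 2.5},
    "ModelSamplingFlux": {"max_shift": 1.75, "base_shift": 0.5},
}


def apply_settings(workflow, settings):
    """Return a copy of an API-format workflow with matching inputs overridden."""
    patched = copy.deepcopy(workflow)
    for node in patched.values():
        overrides = settings.get(node.get("class_type"), {})
        node.setdefault("inputs", {}).update(overrides)
    return patched


# Hypothetical, heavily trimmed workflow: real exports also hold links, seed, steps, etc.
example_workflow = {
    "1": {"class_type": "KSampler",
          "inputs": {"sampler_name": "dpmpp_2m", "scheduler": "normal", "cfg": 3.5}},
    "2": {"class_type": "FluxGuidance", "inputs": {"guidance": 3.5}},
    "3": {"class_type": "ModelSamplingFlux",
          "inputs": {"max_shift": 1.15, "base_shift": 0.5, "width": 1024, "height": 1024}},
}

patched = apply_settings(example_workflow, BACKBONE)
for node_id, node in patched.items():
    print(node_id, node["class_type"], node["inputs"])
```

Inputs that are not part of the backbone (width and height here) are left alone, and the original workflow dict is not modified.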

Flux Guidance (2.5 vs 3.0 vs 3.5)

One of the first things I like to play with is Flux Guidance. The default recommendation for Flux Dev is 3.5 and yeah, it works fine... but I actually prefer running it lower, usually around 2.5.

Here’s why:

3.5 (default) → Sticks closer to the prompt, but often makes skin look glossy and a little “AI polished.”

3.0 → A balance point, some freedom, but still pretty prompt-adherent.

2.5 (my favorite) → Gives Flux more room to “breathe.” It injects unexpected details, follows the prompt less rigidly, and in exchange you get richer realism, less glossy skin, more natural textures, and finer small details.

The trade-off is prompt adherence: at 2.5, Flux can start adding things you didn’t ask for, or interpret the prompt loosely. But personally, I find that chaos worth it... the images just feel more real.

ModelSamplingFlux & Why I Use Higher Max Shift

What is it, simply put

The ModelSamplingFlux node in ComfyUI adjusts the noise schedule Flux is sampled with by applying a shift to it. It has two main parameters: base_shift and max_shift.

base_shift = the shift used for small images (around 256×256).

max_shift = the shift used at around 1024×1024. Sizes in between get a value interpolated from the two, based on the width and height set on the node.

A higher shift keeps sampling at high noise levels for more of its steps. In practice, using a higher max shift gives the model more flexibility during sampling to deviate, inject variety, and find interesting detail paths.
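To make the effect of the two parameters concrete, here is a small sketch of the arithmetic as ComfyUI's ModelSamplingFlux node appears to do it: the shift is interpolated linearly from the image size, then used to bend the noise schedule. The constants (256 and 4096 image tokens, one token per 16×16 pixels) and the sigma formula are written from my reading of the ComfyUI source, so treat them as an assumption and check them against your installed version. The function names are my own.

```python
import math


def flux_shift(width, height, max_shift=1.15, base_shift=0.5):
    """Interpolate the shift from image size (assumed to match ComfyUI's node)."""
    tokens_low, tokens_high = 256, 4096           # roughly 256x256 and 1024x1024 pixels
    slope = (max_shift - base_shift) / (tokens_high - tokens_low)
    intercept = base_shift - slope * tokens_low
    tokens = width * height / (8 * 8 * 2 * 2)     # one token per 16x16 pixel patch
    return tokens * slope + intercept


def shifted_sigma(t, shift):
    """Noise level at schedule position t (0 < t <= 1) for a given shift."""
    return math.exp(shift) / (math.exp(shift) + (1.0 / t - 1.0))


standard = flux_shift(1024, 1024, max_shift=1.15)
higher = flux_shift(1024, 1024, max_shift=1.75)

# Compare the noise level halfway through the schedule for both settings.
print(round(shifted_sigma(0.5, standard), 2), round(shifted_sigma(0.5, higher), 2))
```

At 1024×1024 the shift equals max_shift, so going from 1.15 to 1.75 raises the mid-schedule noise level from about 0.76 to about 0.85. Under these assumptions, more of the steps are spent at high noise, which is one plausible reason the renders drift further from each other.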

Standard setting vs what I use

Standard/common values: max_shift = 1.15, base_shift = 0.50

What I prefer: max_shift = 1.75, base_shift = 0.50

I chose 1.75 because in my tests I saw it pushed the image more toward lifelike realism:

More subtle detail variations

Less glossy / over-polished surfaces

Sometimes weird detail removal (it “fixes” noise I don’t like)

Color shifts (sometimes surprising, but often interesting)

The trade-off is control: since the shift is more aggressive, Flux can drift from strict prompt adherence and occasionally inject things you didn’t ask for. But for my style, that’s a welcome chaos.

Render Comparisons

BASE Flux

Here’s a video showing how Flux Guidance and Max Shift interact using the BASE Flux package (Flux Dev, ClipL, and T5 XXL). I kept the prompt pretty simple so it’s easier to see how the settings themselves affect the render.

"Shoulder-up portrait of a rockabilly woman"

As you can see, the setting that makes the biggest impact on the render is Flux Guidance. Even with small 0.5-step changes (3.5 → 3.0 → 2.5), the images come out totally different... new elements appear, others disappear. That’s the direct result of giving Flux more ‘wiggle room’ at lower guidance values. The effect of Max Shift is easiest to see when you compare 1.75 against the standard 1.15 setting: hair, earrings, clothing, and other small details shift subtly, adding variation and realism.
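If you want to run the same kind of comparison yourself, a sketch like the following lists the setting combinations to render. The exact runs behind the video are not listed in this article, so the full 3×2 grid is an assumption, and `comparison_grid` is a hypothetical helper rather than anything from ComfyUI.

```python
from itertools import product

GUIDANCE_VALUES = [3.5, 3.0, 2.5]   # the three guidance levels compared above
MAX_SHIFT_VALUES = [1.15, 1.75]     # standard setting vs the higher one
PROMPT = "Shoulder-up portrait of a rockabilly woman"


def comparison_grid(guidances, max_shifts, base_shift=0.5):
    """Build one settings dict per (guidance, max_shift) combination."""
    return [
        {"guidance": g, "max_shift": m, "base_shift": base_shift}
        for g, m in product(guidances, max_shifts)
    ]


grid = comparison_grid(GUIDANCE_VALUES, MAX_SHIFT_VALUES)
for run in grid:
    print(PROMPT, run)
```

Changing only one value between renders and keeping everything else identical makes it much easier to tell which setting caused a difference.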

Zer0intVisionClipL + Google T5 XXL

So here again, I kept the same simple prompt, but this time the renders were done with Zer0IntVisionClipL and Google T5 XXL. The idea is the same: to show how Flux Guidance and Max Shift interact, and how the results shift compared to the base Flux package.

"Shoulder-up portrait of a rockabilly woman"

Flux Guidance still changes the image a lot, especially at the lower setting (2.5). Max Shift still does its little clean-up magic. The big difference here is consistency, because even though the images change, they stay much more similar overall compared to the base setup.

With the base package renders you can see how drastically things shift. At 3.0 Guidance the outfit turns into an entirely different piece of clothing and a tattoo appears out of nowhere. At 2.5 Guidance the background changes completely and becomes much blurrier. The window disappears and the whole scene turns into a bokeh fest.

Longer, more complex prompt

"A photorealistic shoulder-up portrait of a rockabilly woman with jet black victory rolls hairstyle, bright red lipstick, winged eyeliner, and subtle freckles. She wears a red polka-dot halter top with a folded collar, a vintage black leather jacket draped over her shoulders, and a cherry pendant necklace. Her expression is confident, with a slight smirk. Behind her glows a soft pastel teal backdrop with faint neon signage blurred in the distance. Her skin is naturally textured, with warm highlights and matte shadows. Lighting is cinematic, with a gentle rim light catching the curls in her hair."

BASE Flux

Zer0intVisionClipL + Google T5 XXL

Outro

So that’s it, that’s how I use Flux in ComfyUI. I hope it helps some of you with local generations, or at least gives some perspective that might connect with how the Civitai generator works. I don’t really use the online generator myself, so I would really appreciate it if people who get good results with it could share their settings and explain how they got there.

If you have tips, tricks, or that little secret sauce that makes your generations pop, please share it. The more we exchange knowledge, the easier it gets for everyone.

When I first started, I would have loved to have veteran advice or power-user tips to guide me. So let’s use this space to help each other out and make life easier for the next person.

Next I plan on doing a LoRA guide. Not really a guide, more like a ‘how I personally use LoRA’ write-up. If you liked or disliked this article, your feedback is always welcome. If you liked it, I’ll try to do even better next time. If you didn’t, I’ll work harder to win you back. Thank you all for the support, for reading, and for pushing me to keep improving.
