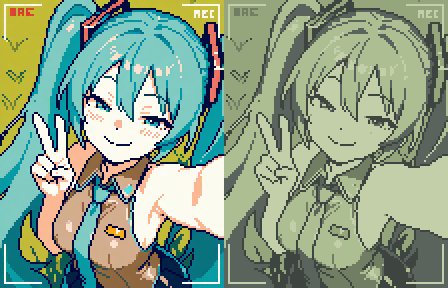

Resulting LoRA:

About:

This experiment explores whether SDXL (Illust XL in particular) can learn pixel discipline through dataset design alone. By aligning all samples to an invisible global grid and tagging based on distance, lighting, and palette conditions, the model learns to interpret pixel density as scale and structure rather than random detail.

The goal is to have the LoRA pick up on the following patterns:

a uniform invisible grid that always stays consistent across resolutions

a link between scale and pixel density for each entity in the pixel space

[optional] pixel-art lighting

UniPixel Animated Project 🎞:

I finally found a way to train LoRAs for WAN2.2 (kinda), so I had to give this a shot.

I curated a small subset (characters, environments, props) of the initial UniPixel dataset, then captioned it based on what I could gather about WAN2.2 prompting over the last two weeks (full-on Shakespeare mode 🎭).

The idea is to train a LoRA, using mostly images, on the Low Noise T2V model, so that it applies the style as a filter after the High Noise T2V model pass has created most of the motion,

or even to use it to create images with the Low Noise T2V model set to a single frame.

I'm currently training/testing this LoRA experiment [here] at tensorArt.

If you have an account there you can also try it; that goes a long way toward helping me.

Testing 🧪:

T2V Low Noise image (single frame)

"nv-p1xel4rt, a girl with short, bright green hair styled in a bob cut, wearing large red over-ear headphones and a sleeveless top. She is sitting at a wooden desk, working on a laptop thats emitting a soft glow. Her expression is focused and slightly tired, with her elbow resting on the desk and her chin resting on her hand.

a large window behind her, through which the night sky is visible, with a cityscape, the desk lamp on the desk illuminates the room."

While not as good as the Illust output, it's still worth noting that WAN2.2 is a higher-parameter model that's mostly designed for video.

T2V Low Noise video test

Now for the animated test: I'll be making two samples using the same prompt, with and without the LoRA loaded, to see how much of a difference it makes (shape- and motion-wise).

You can find the prompt in the metadata.

It seems the LoRA really acts as a sort of pixel-style layer on top of the video; it even gamified the background a bit xD

I think I'm gonna go and train a LoRA on the High Noise model after all, to see how much it changes things motion-wise . . .

Mini UniPixel Dataset 🖼:

curated from 12-bit game screenshots

more thematically limited than UniPixel

added some cropped items from UniPixel's dataset tagged as close-up

uniform grid scale (x16)

first things first, I manually resized every image in the dataset to its minimal pixel format (one visible pixel = one actual pixel)

then I fit them all (without resizing) into specific resolutions

(1152x896)/16 = 72x56

(896x1152)/16 = 56x72

(1024x1024)/16 = 64x64

the overall resolution distribution was 27 images per resolution, for a total of 81 images

WD3 auto-captioning was useless at this pixel scale, so I went with fully manual tagging on this one
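The grid arithmetic above is easy to double-check in a few lines. This is only a minimal sketch and not part of the actual tooling; the names `native_size` and `native_canvases` are made up for illustration. It divides each training resolution by the grid scale and refuses any resolution that would not land on whole pixels.

```python
# Training resolutions (width, height) quoted in this post.
TRAIN_RESOLUTIONS = [(1152, 896), (896, 1152), (1024, 1024)]


def native_size(resolution, grid_scale):
    """Return the true-pixel canvas size for one training resolution."""
    width, height = resolution
    if width % grid_scale or height % grid_scale:
        raise ValueError(f"{resolution} is not divisible by {grid_scale}")
    return width // grid_scale, height // grid_scale


def native_canvases(grid_scale):
    """Map every training resolution to its true-pixel canvas size."""
    return {res: native_size(res, grid_scale) for res in TRAIN_RESOLUTIONS}


if __name__ == "__main__":
    for res, native in native_canvases(16).items():
        print(res, "->", native)
```

Calling `native_canvases(8)` instead gives the canvases for a x8 grid scale.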

LoRA settings ⚙:

Rank = 32

steps = 3628

optimizer = AdamW

trigger word used = yes "nv-mp1xel4rt"

Testing 🧪:

at this point I'm really pushing it by training at this pixel scale, so any human-readable result is pretty much a win

there are multiple ways to influence the main subject's pixel density (aspect ratio, distance, stylization, outline padding)

base prompt: simple background, 1girl, pink hair, blue dress,

resolution (WxH) : 896 x 1152, 1152 x 896,

steps 24

while not intended, it even picked up some interesting variations when prompted with some game names

base prompt: simple background, 1girl, pink hair, blue dress, chibi,

resolution (WxH) : 896 x 1152

steps 24

so basically it's the prompt that decides what variation is possible within this tight pixel space

Extra 🧱:

I also updated the UniPixel tools to include a preset for the Mini UniPixel LoRA

all the examples above were processed directly with the web UI version of that tool

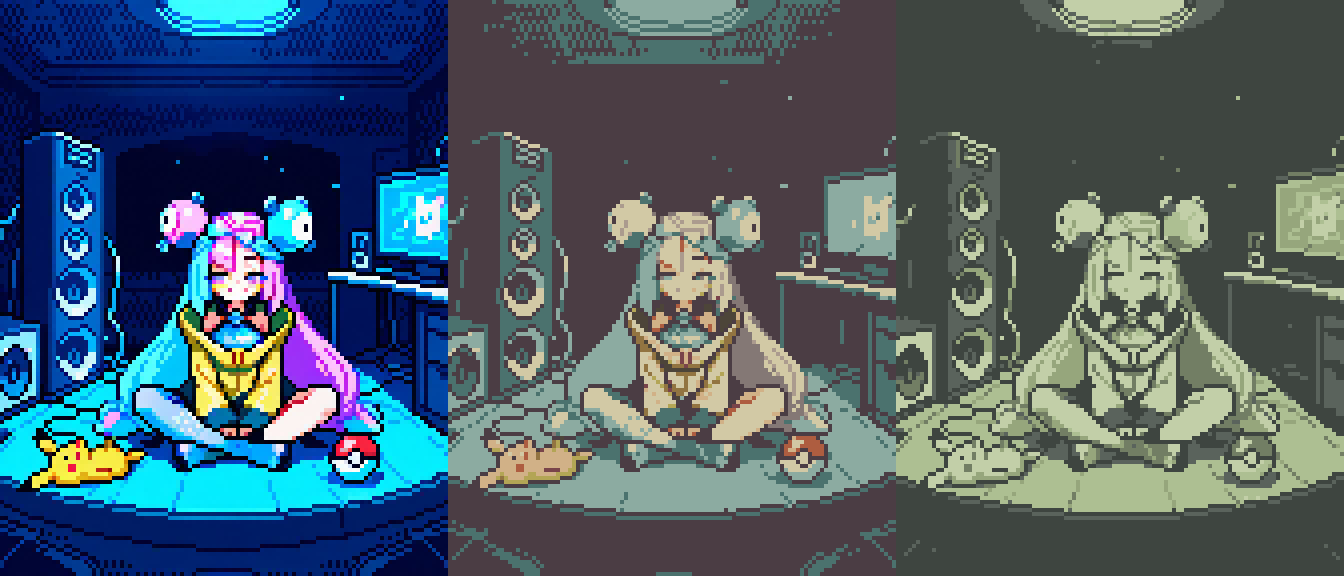

UniPixel Dataset 🖼:

curated from multiple styles and sources

has an almost uniform distribution across multiple themes (chara sfw, chara nsfw, scenery, creatures, sci-fi, medieval)

varying subject distance

uniform grid scale (x8)

first things first, I manually resized every image in the dataset to its minimal pixel format (one visible pixel = one actual pixel)

then I fit them all (without resizing) into specific resolutions

(1152x896)/8 = 144x112

(896x1152)/8 = 112x144

(1024x1024)/8 = 128x128

images that were bigger were cropped (manually)

images that were smaller were padded (with the same color as their existing bg)

I forgot to include "masterpiece" in the tag pool, but after testing the result it didn't interfere that much with the overall style

converted the images to indexed color mode with a max color limit of 32

scaled the images back up to trainable resolutions by multiplying the whole dataset by 8 again

ensured resolution distribution balance: rs1=40, rs2=40, rs3=40

manual re-captioning was done on top of a WD3 auto-caption pass

manual tagging took "close-up" and "far away" into consideration, based on the pixel density of the main subject

tagged even the props in these scenes, like bottles and rocks . . .

tagged expressions (even if they looked like two pixels most of the time)

tagged color palettes as lighting conditions (sunset, dark, foggy . . .)

added the "masterpiece" tag to the whole dataset to avoid its interference with the style
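The image-preparation steps in this section (fit each true-pixel image onto its canvas without resampling, then scale it back up by 8) can be sketched roughly as below. This is an illustration only, not the script actually used: images are plain lists of rows so the example runs without any library, the helper names `fit_to_canvas` and `upscale_nearest` are hypothetical, center-cropping stands in for the manual crops, and the 32-color indexed conversion is left out.

```python
def fit_to_canvas(pixels, canvas_w, canvas_h, fill):
    """Center an image on a canvas without resampling.

    `pixels` is a list of rows, each row a list of color values.
    Larger images are center-cropped (an assumption: the crops in the
    post were done by hand); smaller ones are padded with `fill`.
    """
    src_h, src_w = len(pixels), len(pixels[0])
    # Offset of the canvas origin inside the source image (negative when padding).
    off_x = (src_w - canvas_w) // 2
    off_y = (src_h - canvas_h) // 2
    canvas = []
    for y in range(canvas_h):
        row = []
        for x in range(canvas_w):
            sx, sy = x + off_x, y + off_y
            inside = 0 <= sx < src_w and 0 <= sy < src_h
            row.append(pixels[sy][sx] if inside else fill)
        canvas.append(row)
    return canvas


def upscale_nearest(pixels, factor):
    """Repeat every pixel `factor` times in both directions."""
    out = []
    for row in pixels:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


if __name__ == "__main__":
    sprite = [[1, 2], [3, 4]]                      # a tiny 2x2 "image"
    native = fit_to_canvas(sprite, 4, 4, fill=0)   # padded to a 4x4 canvas
    train = upscale_nearest(native, 8)             # scaled up by the grid factor
    print(len(train[0]), "x", len(train))
```

Because the upscale only repeats pixels, every visible pixel in the training image stays an exact 8x8 block, which is what keeps the grid uniform across the three resolutions.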

LoRA settings ⚙:

Rank = 32

was initially planning on doing a second run at rank 64, but 32 proved to be enough

steps = 4928

optimizer = AdamW

trigger word used = yes "nv-p1xel4rt"

Testing 🧪:

to be honest, I didn't imagine this would work from the first training session.

here's the list of what the LoRA managed to get:

thematic flexibility (characters, animals/creatures, scenes, scifi, medieval ... )

pixel consistency (amazing)

scene depth (both 3d scenes and side scrolling) by adding "from side"

lighting (metal shading, backlighting, time-of-day lighting, simple cast shadows)

the viewer grid and pixel size will always be consistent; knowing that, you can still play with the pixel density of the subject using two approaches

Depth: how far away the subject is from the viewer

Output Resolution: the space that subject can occupy

it tries to keep pixels consistent and plays nice with other LoRAs loaded in

all results reliably convert back to true pixel format by dividing the image size by 8

this is useful when any manual edits are needed, or just to remove the JPEG/AI noise from results completely
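As a rough illustration of that divide-by-8 conversion, here is a minimal sketch that keeps one sample per 8x8 block. It is not the actual tool: the image is a plain list of rows so the example runs on its own, the name `downscale_nearest` is hypothetical, and sampling the block center (rather than a corner) is an assumption.

```python
def downscale_nearest(pixels, factor=8):
    """Keep one sample per `factor` x `factor` block.

    Sampling the block center instead of its corner is an assumption
    made here, so that soft edges between blocks are less likely to be picked.
    """
    height, width = len(pixels), len(pixels[0])
    if height % factor or width % factor:
        raise ValueError("image size must be a multiple of the grid scale")
    half = factor // 2
    return [
        [pixels[y + half][x + half] for x in range(0, width, factor)]
        for y in range(0, height, factor)
    ]


if __name__ == "__main__":
    # A 1024x1024 output collapses to a 128x128 true-pixel image.
    generated = [[0] * 1024 for _ in range(1024)]
    native = downscale_nearest(generated)
    print(len(native[0]), "x", len(native))
```

Since only one pixel per block survives, any noise inside a block is discarded along with the other 63 samples.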

Extra 🧱:

For quicker processing I also made a small [Tool] that does just that xD (divide by 8 and resize using nearest neighbor)

as well as adding the option to apply quantization automatically or use custom palettes

for palettes I added predefined ones like (gameboi, nokia, horror, fantasy . . .)

or you can use custom ones by loading them as .png color strips
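For anyone curious how a color strip can drive the palette step, here is a small sketch under stated assumptions. It does not show how the tool itself is implemented: the strip is assumed to be already decoded into a list of RGB tuples (reading the .png would need an image library), the function names are hypothetical, and plain squared RGB distance is just one simple way to pick the closest color.

```python
def palette_from_strip(strip):
    """Collect the unique colors of a strip, keeping first-seen order."""
    palette = []
    for color in strip:
        if color not in palette:
            palette.append(color)
    return palette


def nearest_color(color, palette):
    """Pick the palette entry with the smallest squared RGB distance."""
    return min(
        palette,
        key=lambda entry: sum((a - b) ** 2 for a, b in zip(color, entry)),
    )


def apply_palette(pixels, palette):
    """Snap every pixel of an image (rows of RGB tuples) to the palette."""
    return [[nearest_color(px, palette) for px in row] for row in pixels]


if __name__ == "__main__":
    # Hypothetical 3-color strip with one repeated swatch.
    strip = [(0, 0, 0), (0, 0, 0), (255, 255, 255), (255, 0, 0)]
    palette = palette_from_strip(strip)
    image = [[(10, 5, 0), (250, 240, 245)], [(200, 30, 20), (0, 0, 0)]]
    for row in apply_palette(image, palette):
        print(row)
```

Running this on the true-pixel image (after the divide-by-8 step) keeps the work small, since there is only one sample per visible pixel.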

that's pretty much it for this experiment; I'll be uploading the LoRA and the pixel-art tool soon