I have posted the Chinese version as a Bilibili column; just search for the user ReroWintermoon.

I train with Akegarasu/lora-scripts on GitHub (LoRA training scripts that use kohya-ss's trainer for diffusion models), so this article is based on it and its default parameters:

lr 1e-4, batch size 1, 10 epochs, dim 32, alpha 32, NAI as the base model for training

This article does not apply to SDXL.

Pollution (Overbaking, Overlearning)

Chinese users, at least in the QQ groups I have joined, usually call all of these unwanted features "pollution". For convenience I will use "pollution" in the rest of this article.

For example, training a character/clothing lora with images from a single artist might cause "style pollution"; missing captions might bring unwanted clothing, hairstyles, poses, etc.

Data Preparation

Nothing much to say.

Avoid SD-generated images (they are learned very fast and their details are bad).

Avoid collecting data from a single artist if you do not want the style.

If you use NAI for training, do not use too many realistic images.

Steps and Repeats

With the default settings, 800 steps are enough for a character or clothing lora, but I prefer 1200+ steps. After all, if the lora turns out overlearned, you can still use it at 0.8 strength.

Our goal is a lora that works perfectly at strength 1 with as little pollution as possible, so do not give an image too many repeats. 8 is quite high, and 10 is very dangerous.

When you have lots of images, say 40, you can reach a high step count easily with only 3 or 4 repeats. Even if some captions are missing or wrong, pollution is unlikely because no single image gets many repeats.

But when you do not have many images of what you want to train, you have to give them more repeats or epochs. In that situation, managing the captions becomes important.
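The step counts in this section come down to simple arithmetic. Here is a rough sketch, assuming the usual kohya-style accounting in which one epoch shows every image `repeats` times and each step consumes one batch. The function names are made up for illustration, and the defaults mirror the settings listed at the top of this article.

```python
import math

def total_steps(num_images: int, repeats: int, epochs: int = 10, batch_size: int = 1) -> int:
    """Estimate training steps for a single-concept dataset.

    Assumption: one epoch = every image seen `repeats` times, one step = one batch.
    """
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs

def repeats_for_target(num_images: int, target_steps: int, epochs: int = 10, batch_size: int = 1) -> int:
    """Smallest repeat count whose estimated steps reach `target_steps`."""
    repeats = 1
    while total_steps(num_images, repeats, epochs, batch_size) < target_steps:
        repeats += 1
    return repeats

print(total_steps(40, 3))            # 1200
print(total_steps(40, 4))            # 1600
print(repeats_for_target(10, 1200))  # 12
```

With only 10 images, reaching 1200 steps would take 12 repeats, which is already past the "dangerous" range above. That is why small datasets need more careful captions.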

Missing Tags and Wrong Tags

Wd1.4 has improved a lot compared with deepdanbooru, but it is still not perfect. Missing and wrong tags are likely to bring pollution. The following are the ones you should pay attention to:

Things on Arms

off shoulder jacket, off shoulder kimono, shawl, open shirt, detached sleeves etc.

They are very "dangerous". Even when you think you have tagged them, you may find they still appear and override your "bare arms", since they may carry high weight in some models. Just one or two images with "things on arms" will make the AI "realize" that "well, I can put something on the arms!". Personally, I try to avoid using those images, and I would rather over-tag by adding every tag that seems to apply.

Twintails and Two Side Up

Dangerous hairstyles. The tagger sometimes mixes them up. Once the hairstyle is tagged wrongly, twintails or two side up will keep showing up. If you cannot tell the difference either, you had better tag the image with both.

Animal Ears and Hair Ornament

The same goes for animal ear fluff. Sometimes I even use Photoshop to remove animal ears or hair ornaments such as certain kinds of hair ribbon. Headgear or a hair bow can also be regarded by the AI as (fake) animal ears, as with Girls' Frontline's Tokarev (griffin's dancer).

Poses and Gestures

In fact, the tagger cannot recognize many poses and gestures, and neither can some xxbooru submitters. It often misses tags like hand_in_own_hair, arm_under_breasts, playing_with_own_hair... arm_under_breasts is especially likely to become pollution, particularly with huge_breasts. Tags are actually not isolated; some of them carry related weights with other tags. For example, cow-related tags like cow_print will bring cow_horns.

Views from ...

from_side, from_above, from_behind, from_below

Composition and …… Pussy?

cowboy_shot, upper_body, portrait, full_body, head_out_of_frame, etc.

Once missed, the output might be hard to control. You might have come across an image like this: a girl is spreading her legs, but the lower part is cropped so you cannot see the pussy. This kind of image will influence your lora's "spread_legs" tag if you do not have other spread_legs data. What's worse, her pussy does not show in the image (it may be out of frame or implied sex), but the tagger still gives "pussy". After training on that caption, it becomes hard to get the pussy to show.

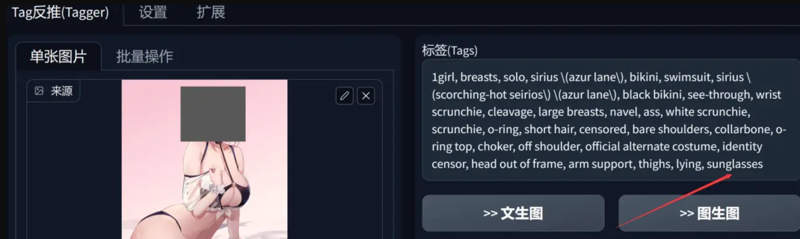

In fact, wd1.4 can "guess".

Sometimes the tagger "knows" what the subject probably is and guesses what it should have.

For example, Sirius (swimsuit) (Azur Lane) always has heart-shaped glasses on her head. Here I covered her head so you cannot see the glasses, yet the tagger still gives "glasses".
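A script cannot tell whether a tag is missing or guessed, but it can list the captions that already contain one of the risky tags from this section, so you know which images to check by hand. This is a minimal sketch, assuming comma-separated `.txt` caption files next to the images. The tag list and function names are illustrative only; extend the list for your own dataset.

```python
from pathlib import Path

# Illustrative subset of the tags this section warns about (not exhaustive).
RISKY_TAGS = {
    "detached sleeves", "off shoulder", "shawl", "open shirt",
    "twintails", "two side up", "animal ears", "hair ornament",
    "arm under breasts", "spread legs", "glasses",
}

def parse_caption(text: str) -> list[str]:
    """Split a comma-separated caption into normalized tags."""
    return [t.strip().lower().replace("_", " ") for t in text.split(",") if t.strip()]

def risky_tags_in(caption: str, risky: set[str] = RISKY_TAGS) -> list[str]:
    """Return the risky tags present in one caption, in caption order."""
    return [t for t in parse_caption(caption) if t in risky]

def audit_folder(folder: str) -> dict[str, list[str]]:
    """Map each caption file name to the risky tags it contains."""
    report = {}
    for path in sorted(Path(folder).glob("*.txt")):
        hits = risky_tags_in(path.read_text(encoding="utf-8"))
        if hits:
            report[path.name] = hits
    return report

print(risky_tags_in("1girl, detached_sleeves, glasses, smile"))
# ['detached sleeves', 'glasses']
```

Every file in the report still needs a human look: the script only tells you where a dangerous tag was written, not whether it is correct.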

Captions and Trigger Words

Is a trigger word a must? How should trigger words be set? Should we delete all the captions that describe the character's or clothing's features?

Before answering these questions, here is an example, or thought experiment.

First, what happens if you input "apple, white shirt"? Of course, both show up in the output image, but they have no relation to each other.

But suppose you want a special white shirt with an apple printed on it. You collect a bunch or two of images of this kind of shirt and throw them into the tagger. Of course, the tagger gives them the captions "apple, white shirt". Then you fire up the stove and get a lora. There are mainly 3 kinds of results:

Some apples are not drawn very well, or you have no plain white shirt images as regularization, or the lora is a little overbaked. You input white_shirt only and get an apple print shirt (maybe not a very standard apple).

Apple and white_shirt are separated well: only when you input apple and white_shirt together do you get an ideal apple shirt.

Underfitting: nothing happens.

For this "apple shirt" lora, the trigger words are "apple" and "white shirt" of course. But……

The apple shows up not only on the shirt but also in other places. After all, you cannot tell the AI the precise position.

You think it is inconvenient to input both apple and white shirt!

So you delete "apple" from the captions. Now the only trigger word is white_shirt.

But the AI no longer "knows" that the print on the shirt is an apple. The "apple" is now just a "round, red, maybe with a stem" pattern. If the training steps are high or the checkpoint you use is good at drawing apples, that is OK. But if you lower the lora's strength or use the lora on a model far from the one it was trained on, you may not get an ideal apple print.

You might have seen realistic review images of anime character loras. Many of them show the wrong hair and eye colors. That is because we usually delete the character's hair color and eye color tags when training (auto-training bots and beginners probably excepted). Most realistic models are overfitted on facial features, so without the guidance of text, the character's hair color gets overridden. Can we show some respect to the characters?

In short, lora training is the process of connecting image features with text. Tags that appear often are more likely to "absorb" features. Using trigger words means using tags to "activate" those features from the dataset. Loras give some tags a unique particularity, making shirt "a certain kind of shirt", dress "a certain kind of dress", etc. You can even regard a style lora as an X-in-1 lora, with "this kind of eyes", "this kind of nose", etc. So just memorize the "trigger words" and use them flexibly.

For example, say you know a character very well: she has grey hair, a red scarf, dog ears and white thighhighs. Unless the lora maker deleted all the feature captions and did strict regularization, red scarf and white thighhighs must be trigger words, right?

What to Delete: Caption Editing

What to delete? If you are training a character/clothing lora, first run the images you collected through the tagger.

For example here is Friedrich Der Grosse (Azur Lane) "Zeremonie of the Cradle" Wedding Dress.

For this lora, which I made several months ago, I used the "head" or "portrait" method to make changing clothes easier; just ignore that for now.

Back to the point. The tagger will give the following tags: wedding dress, dress, black dress...

Because I want to make changing clothes easier and, you know, keep the guidance of text, I had better keep one "dress" tag.

"Wedding_dress": pass. Most wedding dresses are white, so I am afraid it will not work well. "Dress": pass. It is too broad.

So I choose black_dress and replace every "wedding dress" and "dress" with "black dress". The same goes for the horns: I choose "mechanical horns" to replace the other "xx horns" and "horns" tags, because "mechanical" keeps the textures better.

The tagger sometimes wrongly tags bridal gauntlets or elbow gloves as detached sleeves; we had better fix that.

And Friedrich always has black hair and yellow eyes, so we can delete those tags for convenience.
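The edits described for this dress can be applied to every caption in one pass. Below is a minimal sketch, assuming comma-separated captions. `edit_caption` is a hypothetical helper, not part of lora-scripts or the tagger, and the sample caption is made up to match the tags mentioned above.

```python
def edit_caption(caption: str, replace: dict[str, str], delete: set[str]) -> str:
    """Apply tag replacements, drop deleted tags, and remove duplicates."""
    edited = []
    for raw in caption.split(","):
        tag = raw.strip()
        if not tag:
            continue
        tag = replace.get(tag, tag)          # merge broad tags into the chosen one
        if tag in delete or tag in edited:   # drop fixed features and duplicates
            continue
        edited.append(tag)
    return ", ".join(edited)

# Mapping taken from the Friedrich example in this section.
REPLACE = {
    "wedding dress": "black dress",
    "dress": "black dress",
    "horns": "mechanical horns",
}
DELETE = {"black hair", "yellow eyes"}

sample = "1girl, wedding dress, dress, black dress, horns, black hair, yellow eyes, smile"
print(edit_caption(sample, REPLACE, DELETE))
# 1girl, black dress, mechanical horns, smile
```

Replacement runs before deletion, so a tag can first be merged into the one you keep and duplicates collapse into a single entry.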

Tags that are not recommended to delete when training:

halterneck and criss-cross halter. They have already become pollution in some models.

special pattern like fishnets, striped, argyle.

small and unique things on head, like Kashino's small cow horns, Mudrock's pointy and long horns, ahoge and especially heart ahoge.

breast curtains and pasties.

gloves, elbow gloves/bridal gauntlets, animal hands/paw gloves, thighhighs and pantyhose, shoes and boots

wings and tails

certain hairstyles like twin braids (if you delete the tag, they might sometimes appear on top of the head)

necklace and earrings, especially specific kinds like cross_xx.

You might also keep a self-made tag as a trigger word for your concept. Whether or not it works, make sure the word does not carry other meanings you do not want. For example, I once gave Yuudachi (Christmas) (Azur Lane) the trigger word "poixmas", but to my surprise the AI recognized "xmas" in it and added Christmas elements.
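A quick sanity check can catch cases like "poixmas" before training. The sketch below only looks for known words hidden inside a candidate trigger word. It is a rough heuristic: the real text encoder splits unfamiliar words into subword pieces in its own way, so a clean result here is not a guarantee. The word list and the `hidden_words` helper are hypothetical.

```python
# Hypothetical list of concepts you would not want a trigger word to activate.
KNOWN_WORDS = ["xmas", "christmas", "santa", "halloween", "bunny", "maid", "cat", "dog"]

def hidden_words(trigger: str, known: list[str] = KNOWN_WORDS, min_len: int = 3) -> list[str]:
    """Return known words that occur inside `trigger` as substrings."""
    lowered = trigger.lower()
    return [w for w in known if len(w) >= min_len and w != lowered and w in lowered]

print(hidden_words("poixmas"))  # ['xmas']
print(hidden_words("frdg"))     # []
```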

"Manual Regularization"

You might have read my article https://civitai.com/articles/726/an-easy-way-to-make-a-cosplay-lora-cosplay-lora.

Now I would like to make some additions:

Images in which girls are completely nude have nearly zero influence on the clothing. Based on this conclusion, we can use unrelated images, such as scenery without humans, to reduce pollution.

For now we can call this the manual regularization method.

Another example is my Cow Print Naked Bodypaint lora. I could only find 8 images of Cerestia (Cow bodypaint). For the completely nude images I used only "completely nude" instead of "nude", added several images in which girls are not nude and wear different clothes, and censored some identity features.

You might have tried to train an X-in-1 lora but found that some concepts did not work. Yes, concepts with a lower amount × repeats get covered. And you know from Steps and Repeats that 1 repeat can hardly bring pollution. So... there is a more "radical" manual regularization:

Collect lots of high-quality images, do not delete any of their captions, and give them only 1 repeat. They had better not be very similar to the character/clothing you want to train. For example, if you want to train Hoshino Ai in her idol costume, these images had better not include other idol costumes.

This makes it easier to change your character's clothes. You can see the result as a unique 2-in-1 lora: concept A is "1girl" with varied appearances, and concept B is the character you want.
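To see how such a 2-in-1 dataset balances out, you can compare amount × repeats per concept. This sketch assumes the kohya-style folder naming `<repeats>_<name>`. The folder names and image counts are invented for illustration, not taken from a real dataset.

```python
def parse_folder(name: str) -> tuple[int, str]:
    """Split a '<repeats>_<name>' folder name into (repeats, concept)."""
    repeats, _, concept = name.partition("_")
    return int(repeats), concept

def exposure_share(folders: dict[str, int]) -> dict[str, float]:
    """Given folder name -> image count, return each concept's share of one epoch."""
    weighted: dict[str, int] = {}
    for name, num_images in folders.items():
        repeats, concept = parse_folder(name)
        weighted[concept] = weighted.get(concept, 0) + repeats * num_images
    total = sum(weighted.values())
    return {concept: w / total for concept, w in weighted.items()}

# Hypothetical layout: 20 character images at 6 repeats, 120 general images at 1 repeat.
print(exposure_share({"6_hoshino_ai": 20, "1_general": 120}))
# {'hoshino_ai': 0.5, 'general': 0.5}
```

In this made-up layout both concepts get the same share of each epoch, yet no single general image is seen more than once per epoch, which is the property the 1-repeat rule relies on.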

However, as I said in Captions and Trigger Words, when your dataset is small and comes from a single artist, the style might be bound to the clothing.