First of all: so far I've only had success with style LoRAs, not with content or faces yet.

Alright, chooms, here we are. After a bunch of experiments, I'm going to reveal the secret formula.

Also, I trained with Ostris's AI Toolkit, but you can adapt this to whatever trainer you use.

In case you don't know, there's an old article on this. It was written for SDXL, but surprise: it works in Qwen too!

For Qwen, though, there are some new things to keep in mind, plus a script that does the magic trick.

1st step.

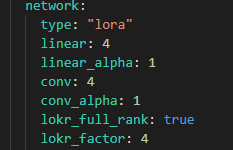

In a nutshell, you need to modify the advanced config of your training job like this:

linear: 4

linear_alpha: 1

conv: 4

conv_alpha: 1

Yes, this should make training faster and bring the file size down to around 70MB.
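Two quick numbers behind the 4/1/4/1 settings, as a rough sketch rather than anything taken from AI Toolkit itself. In the standard LoRA formulation the learned update B·A is multiplied by alpha / rank, so alpha 1 with rank 4 scales it by 0.25 instead of the 1.0 you get when alpha equals rank (my guess is this is related to why the finished LoRA feels weak at strength 1, but I haven't verified that). And one LoRA pair for an in × out linear layer adds rank × (in + out) parameters, so file size grows linearly with rank. The 3072 × 3072 layer below is a hypothetical example, not Qwen-Image's real layer list.

```python
def lora_scale(rank: int, alpha: float) -> float:
    """Multiplier applied to the low-rank update B @ A in standard LoRA."""
    return alpha / rank


def lora_params(in_features: int, out_features: int, rank: int) -> int:
    """Parameters in one LoRA pair: A is (rank x in), B is (out x rank)."""
    return rank * in_features + out_features * rank


def lora_bytes(layer_shapes: list[tuple[int, int]], rank: int, bytes_per_param: int = 2) -> int:
    """Approximate tensor bytes for (in, out) linear layers; 2 bytes per param = fp16/bf16.

    Ignores alpha scalars, the file header and metadata.
    """
    return sum(lora_params(i, o, rank) for i, o in layer_shapes) * bytes_per_param


print(lora_scale(rank=4, alpha=1))  # 0.25 (this guide's settings)
print(lora_scale(rank=4, alpha=4))  # 1.0  (alpha equal to rank)

# Hypothetical single 3072 x 3072 projection, just to show the linear growth with rank.
print(lora_params(3072, 3072, rank=4))      # 24576
print(lora_bytes([(3072, 3072)], rank=4))   # 49152
print(lora_bytes([(3072, 3072)], rank=16))  # 196608
```

Going from rank 16 to rank 4 cuts the adapter to a quarter of the size, whatever the real layer list is.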

2nd step.

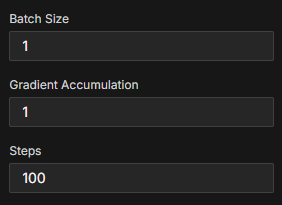

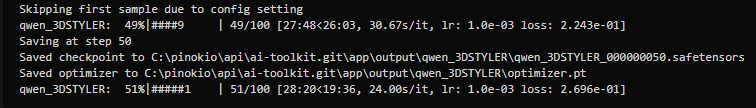

Now choose the number of steps: it should be 100 (about 20 minutes) or 150 (about 30 minutes). More than that makes the LoRA lose the context for no apparent reason. I don't understand why yet; it's kinda witchcraft.
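Those timings work out to about 12 seconds per step on my RTX 4060 8GB. Here's a tiny helper to estimate other step counts from a measured rate; your GPU and settings will give a different rate, so time a few steps yourself before trusting it.

```python
def seconds_per_step(steps: int, minutes: float) -> float:
    """Average time per step from a finished (or partial) run."""
    return minutes * 60 / steps


def estimate_minutes(steps: int, sec_per_step: float) -> float:
    """Projected wall-clock minutes for a run of `steps` at a known rate."""
    return steps * sec_per_step / 60


rate = seconds_per_step(100, 20)    # rate implied by the 100-step / 20-minute figure
print(rate)                         # 12.0
print(estimate_minutes(150, rate))  # 30.0, matching the 150-step figure
```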

3rd step.

My config is low noise, wavelet, and sigmoid, which is good for catching style, but you can play with that. Make sure not to set it to high noise: that pushes training toward content, and I haven't had any success with that so far.

3-bit with ARA is of course the only way on this GPU, but here's the catch: UNLOAD THE TE. Yes, no text encoder. This makes training faster and also makes it grab the style more than anything else.
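For anyone curious what "sigmoid" and "low noise" roughly mean, here's a hypothetical sketch, not AI Toolkit's actual code. A common reading of sigmoid timestep sampling is logit-normal: draw z from a normal distribution and squash it through a sigmoid, so timesteps cluster around the middle. Assuming the flow-matching convention where t near 0 is almost clean and t near 1 is almost pure noise, a low-noise bias can be illustrated by shifting the mean of z downward. The -1.0 shift is an arbitrary example value.

```python
import math
import random


def sample_sigmoid_timesteps(n: int, mean: float = 0.0, std: float = 1.0, seed: int = 0) -> list[float]:
    """Logit-normal sampling: draw z ~ N(mean, std) and squash it into (0, 1) with a sigmoid."""
    rng = random.Random(seed)
    return [1.0 / (1.0 + math.exp(-rng.gauss(mean, std))) for _ in range(n)]


# Assumed convention: t near 0 = little noise, t near 1 = mostly noise.
balanced = sample_sigmoid_timesteps(10_000)
low_noise = sample_sigmoid_timesteps(10_000, mean=-1.0)  # hypothetical "low noise" bias

print(round(sum(balanced) / len(balanced), 2))    # centred near the middle
print(round(sum(low_noise) / len(low_noise), 2))  # pulled toward the low-noise end
```

With the same seed, every biased sample sits below its balanced counterpart, so the shift only moves where training spends its steps, not how many it takes.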

4th step.

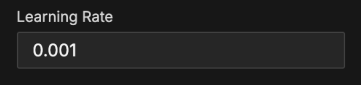

Yes, this is the craziest one: set the learning rate to 0.001. You didn't read that wrong. It will work, believe me.

5th step.

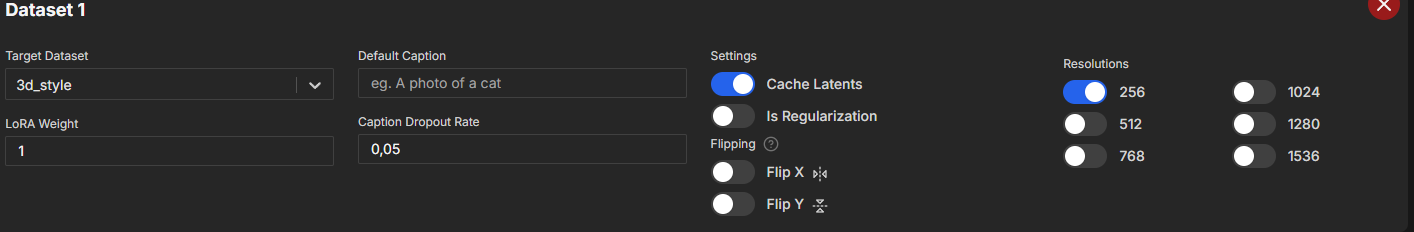

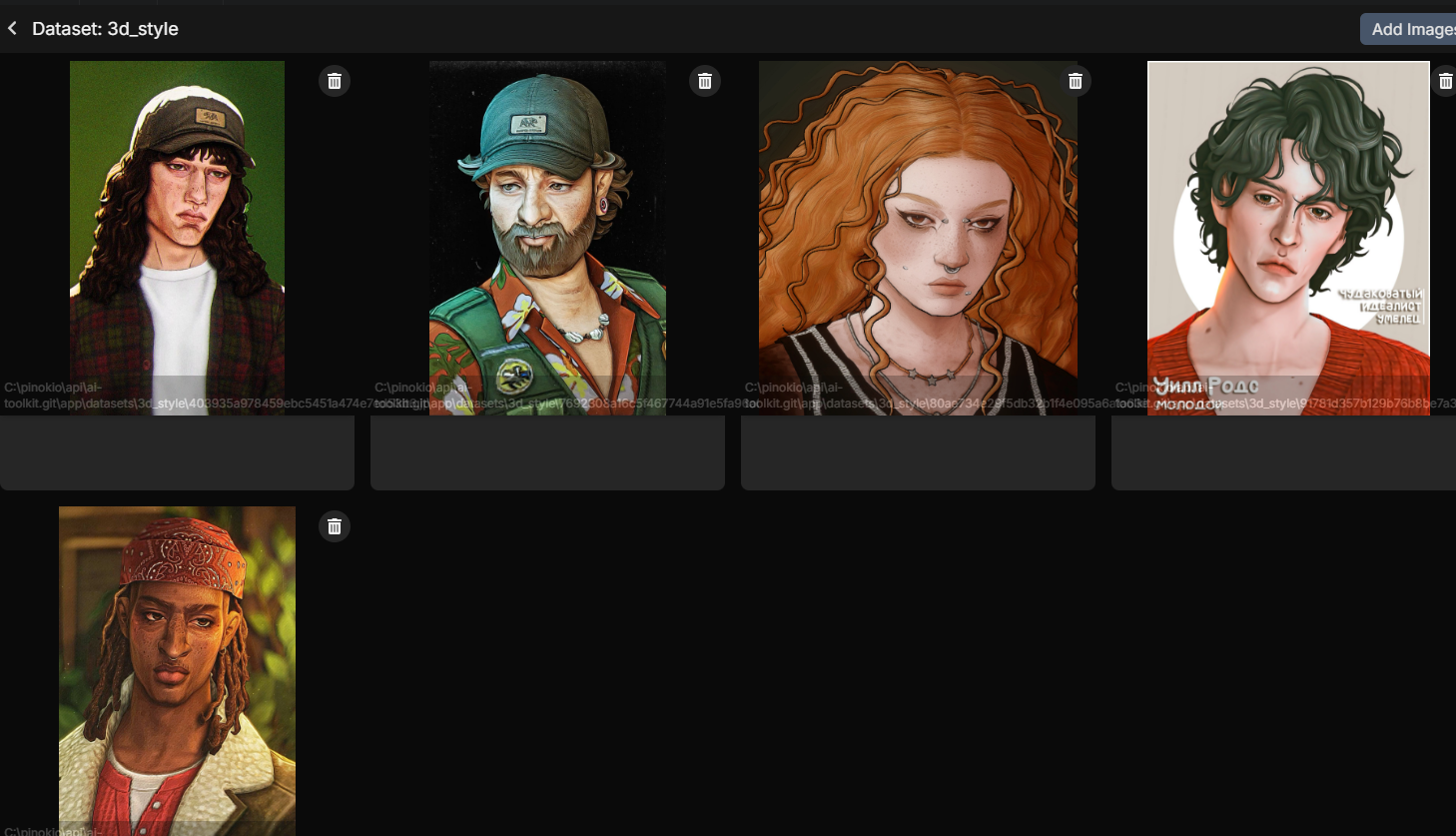

Now train, and be patient. At least, instead of long hours, it will take just 30 to 50 minutes. I recommend using a minimal number of images in the dataset, like the 4 from the old article: fewer images make it learn the style's content faster, and because of the 4/1/4/1 settings it's kinda hard to make a mess with less data. More data works too, though.
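To see why a tiny dataset still works, it helps to count how often each image gets seen. A rough sketch, assuming batch size 1 and no repeats or augmentation (both are assumptions, adjust for your own config):

```python
def epochs(steps: int, num_images: int, batch_size: int = 1) -> float:
    """How many times each image is seen: total samples drawn divided by dataset size."""
    return steps * batch_size / num_images


print(epochs(100, 4))   # 25.0
print(epochs(150, 4))   # 37.5
print(epochs(150, 20))  # 7.5
```

With 4 images, each one is seen dozens of times even in a 100-step run, while a bigger dataset spreads the same steps thinner.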

6th step: the script.

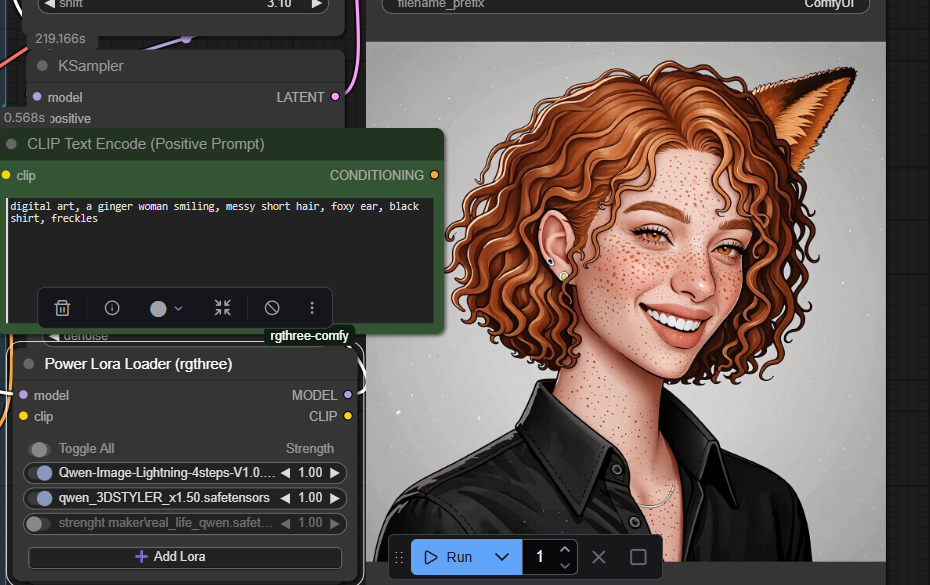

Yes, it's not ready yet. If you try to use the LoRA as it is now, you'll see that nothing happens. That's because the strength is too low. If you set the strength to 2, the LoRA produces a perfect style composition with no distortion, but you don't want to post a LoRA that needs strength 2 to kick in, huh?

That's why the attachments include a script that multiplies the LoRA's strength manually.

Open a command prompt (or the terminal in your code editor) and type something like this:

`python merge_strenght_lora.py strength yourlora.safetensors --multiplier 1.5`

You can adjust the multiplier; 1.5 is about perfect for most cases. This bakes the extra strength into the file, so there's no need to train again.
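I can't show what's inside the attached `merge_strenght_lora.py`, so here's a hypothetical stand-in (`scale_lora.py` is a made-up name) showing one way such a script can work. The LoRA update is strength × (alpha / rank) × B·A, which is linear in B, so multiplying only the up/B matrices by 1.5 is the same as loading the original at strength 1.5. Loading the result at about 1.33 then matches the original at strength 2 (1.5 × 1.33 ≈ 2). The key markers assume kohya-style (`lora_up`) or PEFT/diffusers-style (`lora_B`) names, so check your file's keys first. It needs `torch` and `safetensors` installed.

```python
import sys


def scale_lora_state_dict(state_dict: dict, multiplier: float) -> dict:
    """Return a copy where only the 'up'/'B' matrices are multiplied.

    The LoRA delta is proportional to B @ A, so scaling B alone scales the whole
    delta by `multiplier`. Scaling both A and B would apply it twice (multiplier**2).
    """
    up_markers = ("lora_up.", "lora_B.")  # kohya-style and PEFT/diffusers-style key names
    return {
        key: value * multiplier if any(m in key for m in up_markers) else value
        for key, value in state_dict.items()
    }


def main(path_in: str, path_out: str, multiplier: float) -> None:
    # Imported here so the helper above has no hard dependency on torch/safetensors.
    from safetensors import safe_open
    from safetensors.torch import load_file, save_file

    with safe_open(path_in, framework="pt") as f:
        metadata = f.metadata()  # keep the trainer's metadata (may be None)
    tensors = load_file(path_in)
    save_file(scale_lora_state_dict(tensors, multiplier), path_out, metadata=metadata)


if __name__ == "__main__":
    if len(sys.argv) == 4:
        main(sys.argv[1], sys.argv[2], float(sys.argv[3]))
    else:
        print("usage: python scale_lora.py IN.safetensors OUT.safetensors MULTIPLIER")
```

For example, `python scale_lora.py yourlora.safetensors yourlora_x1.5.safetensors 1.5` writes a new file and leaves the original untouched.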

Final thoughts.

I think this should work for video too. As for the text encoder: I've already trained a 150-step LoRA with a 2-bit text encoder; it's the clown girl LoRA.

Cons: this LoRA is a failed attempt at a Shadbase style. The face structure doesn't work, but the overall style is there (Shadbase failed experiment).

Also, here's the result of this training at 100 steps:

![[LuisaP💖] Qwen - How i trained 30 min of style loras in RTX 4060 8GB](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/80afd1cd-ed91-4a4a-9851-be260fd12455/width=1320/ComfyUI_01528_.jpeg)