If you find our articles informative, please follow me to receive updates. It would be even better if you could also follow our ko-fi, where there are many more articles and tutorials that I believe would be very beneficial for you!

如果你觉得我们的文章有料,请关注我获得更新通知,

如果能同时关注我们的 ko-fi 就更好了,

那里有多得多的文章和教程! 相信能使您获益良多.

For collaboration and article reprint inquiries, please send an email to [email protected]

合作和文章转载 请发送邮件至 [email protected]

by: ash0080

Last time we conducted various training experiments with three different shapes, but there was one problem that remained unresolved: what did Lora actually learn? In the series of experiments with the three shapes, most of the time we observed that Lora did not learn any specific shape from the dataset. For example, in the basic_grey experiment with minimal interference, the generated image with the highest probability was an incomplete gray circular line. Therefore, this time we will continue to eliminate interference and use only one shape for the experiment.

basic_round_rect_25

tag: monster, white background

Let's take a few steps first, with steps = 3x25x10 = 750.

Since the number of training steps is low, it is difficult to observe any learned features directly from the generated images. However, when comparing the generated image at step 1 (left 4: without Lora, right 4: with Lora),

we can clearly see a tendency for noise to converge towards the center of the image.

basic_round_rect_100

Keeping the training set the same, this time we simply increase the steps to 3x100x10 = 3000, which should be sufficient for training the style in most cases.

Using the trigger word "monster" to generate images, we obtain the following results:

What patterns can we observe from these images?

Despite using three rounded rectangles of the same shape as the training material, Lora does not draw any obvious rounded rectangles.

Compared to shapes, Lora seems to be more sensitive to colors in its training.

When our tag is unrelated to the actual image, with sufficient training, it is possible to completely distort the mapping of the tag and force it to point to different results. However, these results are typically neither part of our training content nor a direct representation of the original semantic content. Instead, they are often a combination of both, as Lora's use involves freezing the pre-trained model, so it cannot completely counteract the influence of the original model (unless trained for more than 8000 steps, achieving the "photocopier" effect).

Can Lora Learn Mathematics? (1) Single Word

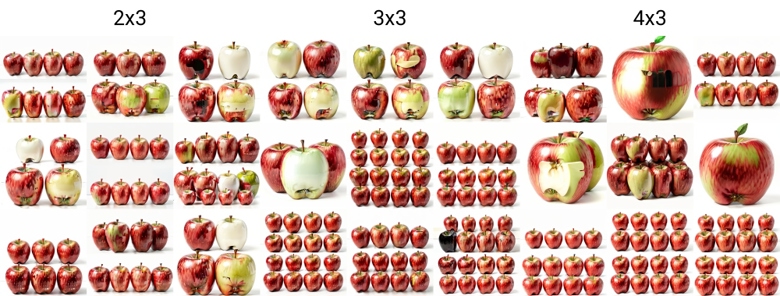

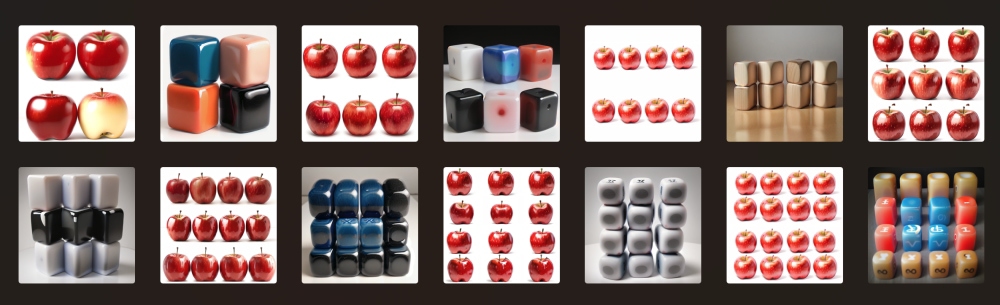

The tags for this experiment are 2x2, 2x3, 3x3, 3x4, 4x4 apple/cube, white background (note that intentionally I did not train 3x2).

Let's start by drawing apples from the dataset. The accuracy is less than 20%, but the trend can be confirmed.

Now, let's draw a cup, which is not present in the training dataset. The results are even worse.

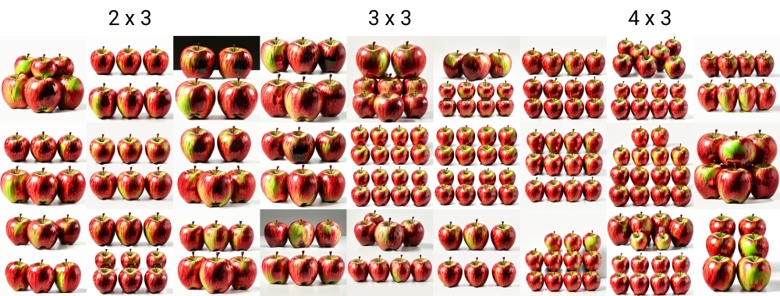

Can Lora Learn Mathematics? (2) Phrases

The dataset remains the same, but this time the tags are changed to 2 x 2, 3 x 3... (note the spaces).

Let's see what happens when we draw an apple. It seems that the accuracy is slightly better compared to single words. My guess is that the order of tokens enhances an additional layer of training parameters, leading to better performance on the training examples.

However, if we draw a cup (an object not present in the training set), we still cannot achieve good results. This means that the words "apple" or "cube" have absorbed certain features, and when we don't use these words, the performance is reduced by half!

It is worth noting that, at least in A1111, there is no apparent difference in the impact of using a single word or a phrase with spaces as the trigger word. Therefore, we can speculate that the significance of word spacing in the training process is different from its usage in the generation process.