If you find our articles informative, please follow me to receive updates. It would be even better if you could also follow our ko-fi, where there are many more articles and tutorials that I believe would be very beneficial for you!

如果你觉得我们的文章有料,请关注我获得更新通知,

如果能同时关注我们的 ko-fi 就更好了,

那里有多得多的文章和教程! 相信能使您获益良多.

For collaboration and article reprint inquiries, please send an email to [email protected]

合作和文章转载 请发送邮件至 [email protected]

by: ash0080

Last time, we conducted a study on single shapes and found that LoRa doesn't strictly learn the shape (without overfitting). It only has a general tendency to determine the distribution of pixels. Additionally, we found that LoRa is more sensitive to colors than shapes. So, today, I plan to investigate the influence of color distribution.

There is another important point to consider: GPT informed me that the stable diffusion algorithm is based on grayscale, so strictly speaking, the algorithm is not based on color. I'm thinking about how to design a few experiments to test this.

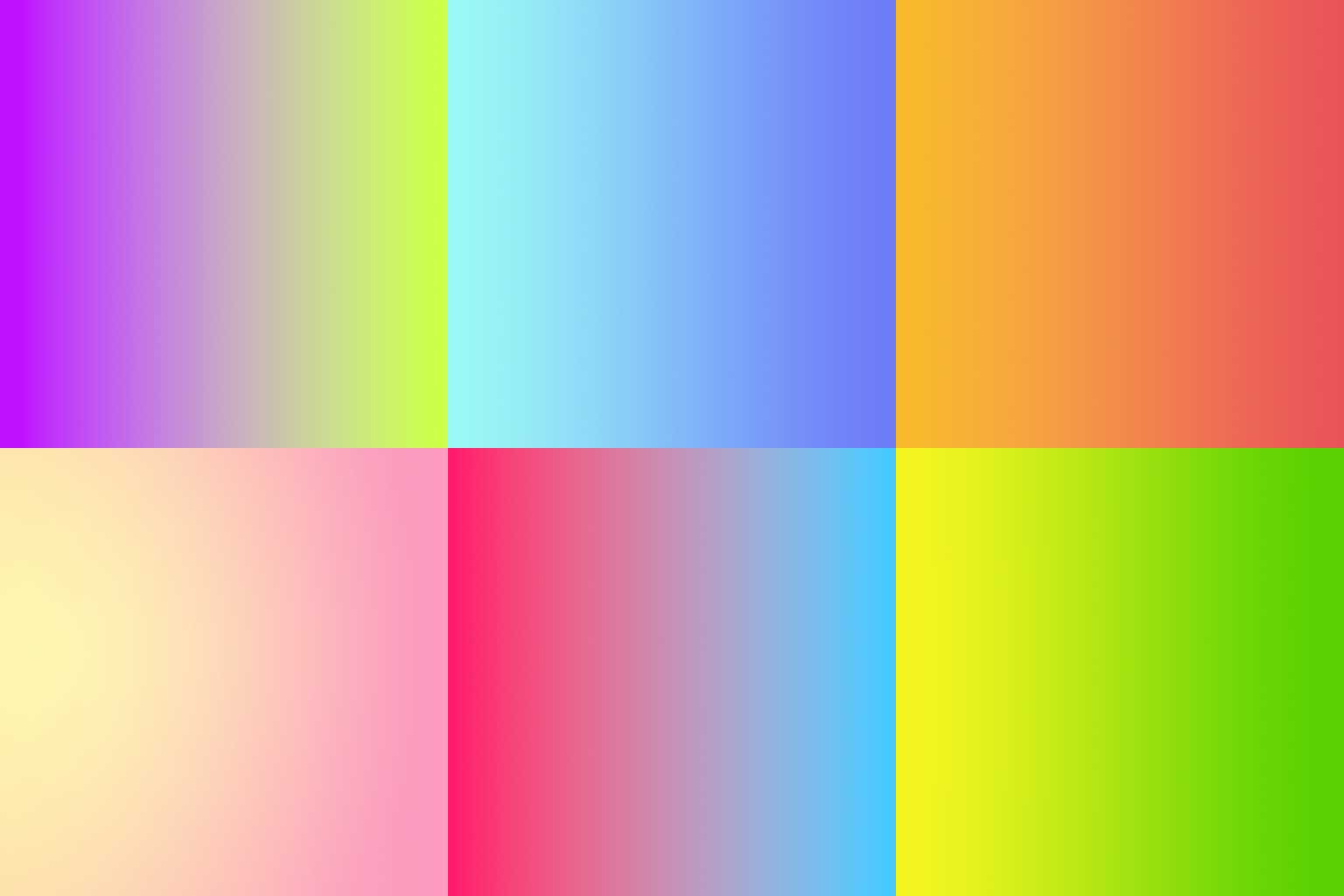

base_grad

This time, the training material consists of gradient images. Let's see if we can achieve some interesting effects.

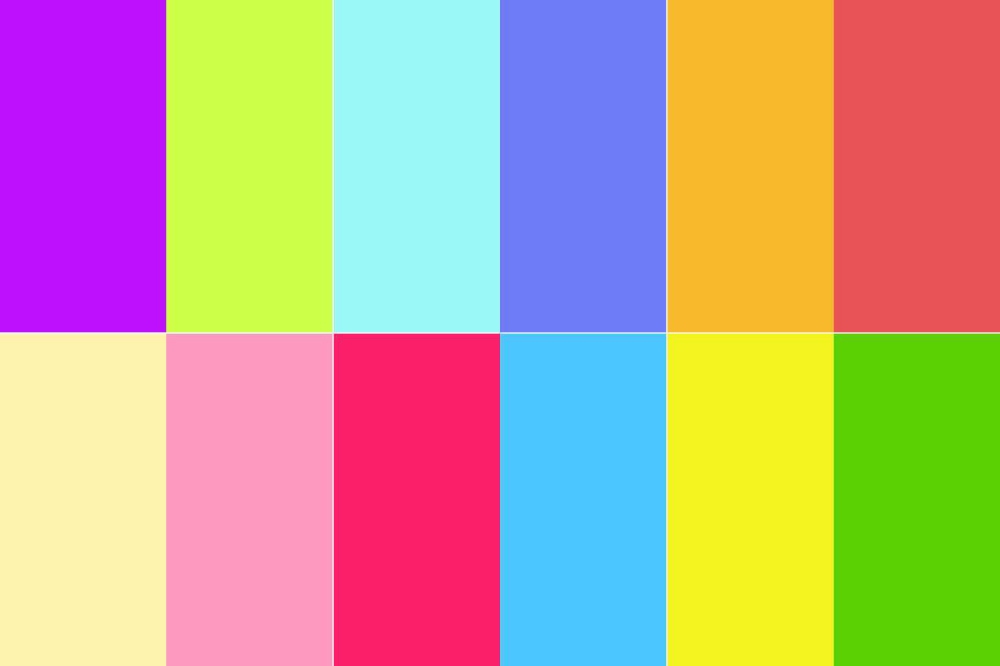

As a comparison, I created another dataset with the same colors but without the gradients.

base_block

prompts: {color} to {color} lighting, 1girl, portrait, bare_shoulders, looking_at_viewer,

Surprisingly, the generated results for gradients and blocks are very similar. This leads me to think that during the downsampling process, the learning focuses on retaining low-resolution features that are highly similar. It also reminds me of how I can train a model to generate realistic 2.5D or even photo-realistic images using simple 2D sketches. The smoothness of pixels in the training set doesn't have a significant impact on the results, but the distribution of pixels plays a more critical role.

For example, this model is trained entirely on very flat 2D images.