⚠️ Part 2 is here: [⚡WIP DRAFT⚡] [PISSA] [SVD] SDXL EPS to FLOW MATCH convert (part 2) [PROOF OF CONCEPT]

This guide covers a method for simulating full SDXL model training with SVD-based approaches, in particular the PiSSA method described at github.com/GraphPKU/PiSSA.

⚠️ This guide is a work in progress — information may be added or changed over time.

Problems this method solves:

Base LoRA training inefficiency: Training standard LoRA matrices on top of a base model starts almost from scratch, so adaptation is slow and limited by the size of the additional matrices (which reduces generalization).

SVD solves this because the extracted decomposition already holds the model's most important learned knowledge in compressed form, so training continues from that knowledge rather than from zero.

Lightweight full-model simulation: SVD decomposition enables near full-scale SDXL training simulation on any home GPU that can fit the SVD extract and the base null model.

In practice, all key information can be compressed into 16/32/64/128/256/inf dimensions with minimal coherence loss in generation.

No need to tune dim and alpha: They are fixed values in this approach.

Reduced dependence on learning rate tuning.

Stable training without large betas: Especially effective if your dataset is clean.

Post-training reconstruction: You can rebuild a full model after training or extract a highly accurate LoRA from it for use with other models.

Early-stop support: You can apply early stopping for the text encoder (just use the TE from early epochs, simple af).

Fast adaptation to new EQ latent spaces: For example, https://huggingface.co/KBlueLeaf/EQ-SDXL-VAE, or https://huggingface.co/Anzhc/MS-LC-EQ-D-VR_VAE, or even training SDXL through flow matching (the methods can be combined).
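The SVD point in the list above can be written out with the decomposition from the PiSSA paper. This is only a sketch of the general idea, not a description of what the GUI extraction computes internally. The symbols $W$, $r$, $A$, $B$ and $W^{res}$ follow the paper and are not used elsewhere in this guide.

$$
W = U S V^\top, \qquad
A = U_{[:, :r]}\, S_{[:r, :r]}^{1/2}, \qquad
B = S_{[:r, :r]}^{1/2}\, V_{[:, :r]}^\top, \qquad
W^{res} = W - AB
$$

The product $AB$ holds the top $r$ singular directions and is what gets trained, while the residual $W^{res}$ stays frozen. Roughly speaking, the fine-tuned extract in this guide plays the role of $AB$ and the diff model plays the role of $W^{res}$.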

Requirements:

SDXL base model you plan to fine-tune

(Optional) BLORA slicer script

ComfyUI

sd_scripts, or this fork, or any compatible variant

Preparing the extract

Step 1. Obtain an empty (null) model derived from your main SDXL model.

Name the output like model_null. I named the output BIG25NULL.

Step 2. Generate the LoRA extract against the null model in the GUI, with the original model set as the "Finetuned model". Higher ranks are better; the rank you can use depends on your RAM (for extraction) and VRAM (for training; for example, rank 64 fits nicely in 12GB of VRAM for UNet+TE training).

I named the output LoRA extract as ext_big25.safetensors.

Step 3. Fine-tune the extracted LoRA (in my case ext_big25.safetensors) using any optimizer with an extremely low learning rate (for example, 0.000000000000000000000001).

Both the text encoder and UNet should be enabled.

Load the extracted LoRA in network_weights.

Training is performed in a single step (literally one iteration), after which the result is saved.

For the dataset, something simple is enough, such as a single 1024px pink noise image in a folder named 1_noise, without captions.
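If you have no noise image at hand, here is a minimal sketch for making one with only the Python standard library. It is an approximation, not an exact 1/f spectrum: it sums equal-weight octaves of blocky white noise, which gives roughly equal energy per octave. The function names `pink_noise_pixels` and `encode_rgb_png` are made up for this example.

```python
import random
import struct
import zlib


def pink_noise_pixels(size=1024, octaves=8, seed=1):
    """Return size*size grayscale bytes built from equal-weight noise octaves."""
    rng = random.Random(seed)
    acc = [0.0] * (size * size)
    for o in range(octaves):
        cell = 2 ** o                       # block edge in pixels for this octave
        n = (size + cell - 1) // cell       # blocks per row
        grid = [rng.uniform(-1.0, 1.0) for _ in range(n * n)]
        for y in range(size):
            row = (y // cell) * n
            base = y * size
            for x in range(size):
                acc[base + x] += grid[row + x // cell]
    lo, hi = min(acc), max(acc)
    span = (hi - lo) or 1.0                 # avoid division by zero
    return bytes(int(255 * (v - lo) / span) for v in acc)


def encode_rgb_png(pixels, size):
    """Encode grayscale bytes as an 8-bit RGB PNG and return the file bytes."""
    def chunk(tag, data):
        body = tag + data
        crc = zlib.crc32(body) & 0xFFFFFFFF
        return struct.pack(">I", len(data)) + body + struct.pack(">I", crc)

    rows = []
    for y in range(size):
        gray = pixels[y * size:(y + 1) * size]
        # Filter byte 0, then each gray value repeated for R, G and B.
        rows.append(b"\x00" + bytes(v for p in gray for v in (p, p, p)))
    ihdr = struct.pack(">IIBBBBB", size, size, 8, 2, 0, 0, 0)
    return (b"\x89PNG\r\n\x1a\n" + chunk(b"IHDR", ihdr)
            + chunk(b"IDAT", zlib.compress(b"".join(rows)))
            + chunk(b"IEND", b""))
```

To use it, write `encode_rgb_png(pink_noise_pixels(1024), 1024)` to a file such as fix/1_noise/noise.png (matching train_data_dir="fix" in the config). Pure Python is slow here, so expect the 1024px version to take several seconds.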

Any SDXL model works as the pretrained model. For example, I use the original bigasp2.5 with this config:

accelerate launch --num_cpu_threads_per_process 8 sdxl_train_network.py ^
--pretrained_model_name_or_path="K:/Comfyui_033/ComfyUI/models/checkpoints/bigaspV25_v25.safetensors" ^
--train_data_dir="fix" ^
--output_dir="output_dir" ^
--output_name="ext_big25_fix" ^
--network_args "conv_dim=64" "conv_alpha=64" ^
--resolution="1024" ^
--save_model_as="safetensors" ^
--network_module="lycoris.kohya" ^
--shuffle_caption ^
--max_train_epochs=1000 ^
--save_every_n_steps=1 ^
--save_state_on_train_end ^
--save_precision=bf16 ^
--train_batch_size=1 ^
--gradient_accumulation_steps=1 ^
--max_data_loader_n_workers=1 ^
--enable_bucket ^
--bucket_reso_steps=64 ^
--min_bucket_reso=768 ^
--max_bucket_reso=1280 ^
--mixed_precision="bf16" ^
--caption_extension=".txt" ^
--gradient_checkpointing ^
--network_dim=64 ^
--network_alpha=64 ^
--optimizer_type="AdamW8bit" ^
--optimizer_args ^
--learning_rate=0.000000000000000000000001 ^
--loss_type="fft" ^
--xformers ^
--network_weights="K:/Comfyui_033/ComfyUI/models/loras/train/ext_big25.safetensors" ^
--full_bf16 ^
--mem_eff_attn ^
--lr_scheduler="constant" ^
--seed=1 ^
--no_metadata ^
--logging_dir="logs" ^
--log_with="tensorboard" ^
--persistent_data_loader_workers

I named the output ext_big25_fix.safetensors.

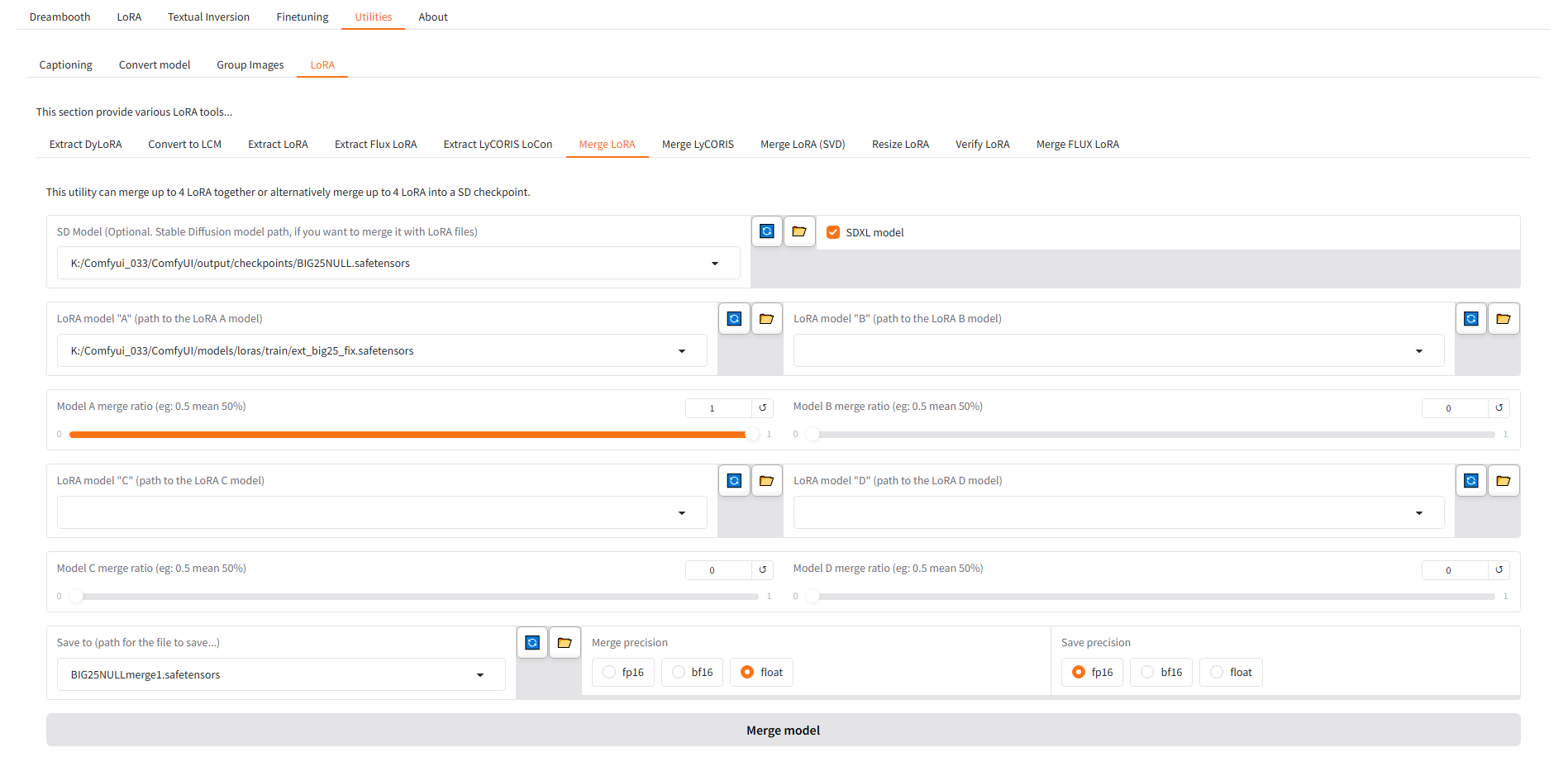

Step 4. In the GUI, merge the LoRA obtained after Step 3 with the null model.

In my case, ext_big25_fix.safetensors was merged with BIG25NULL.safetensors.

I named the resulting model BIG25NULLmerge1.safetensors.

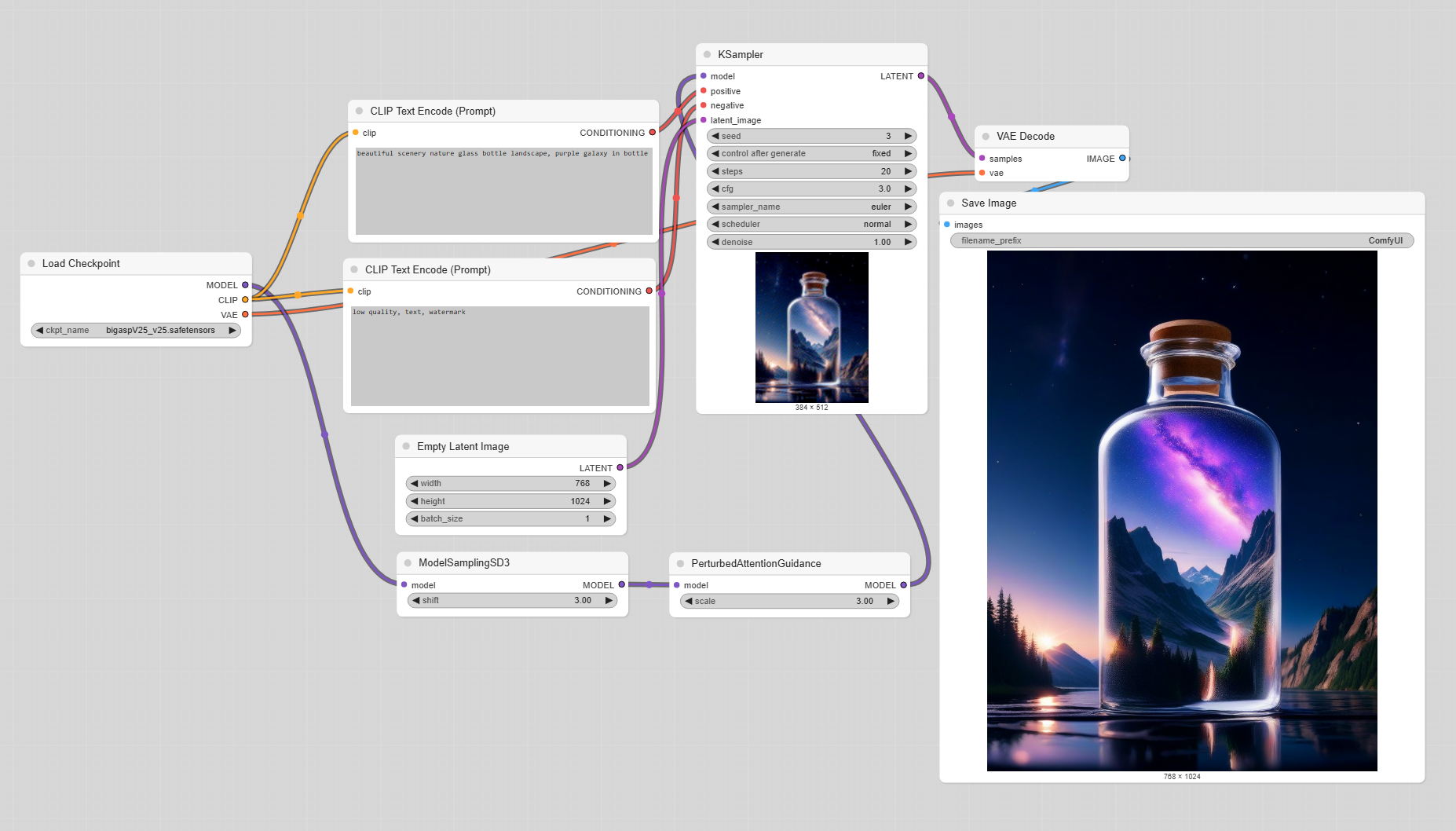

Step 5. In ComfyUI, calculate the difference between the original model and the “expanded” extract from Step 4.

Use the resulting model for further work.

I named this final model BIG25NULLdiff.safetensors.

✅ Preparation is complete.

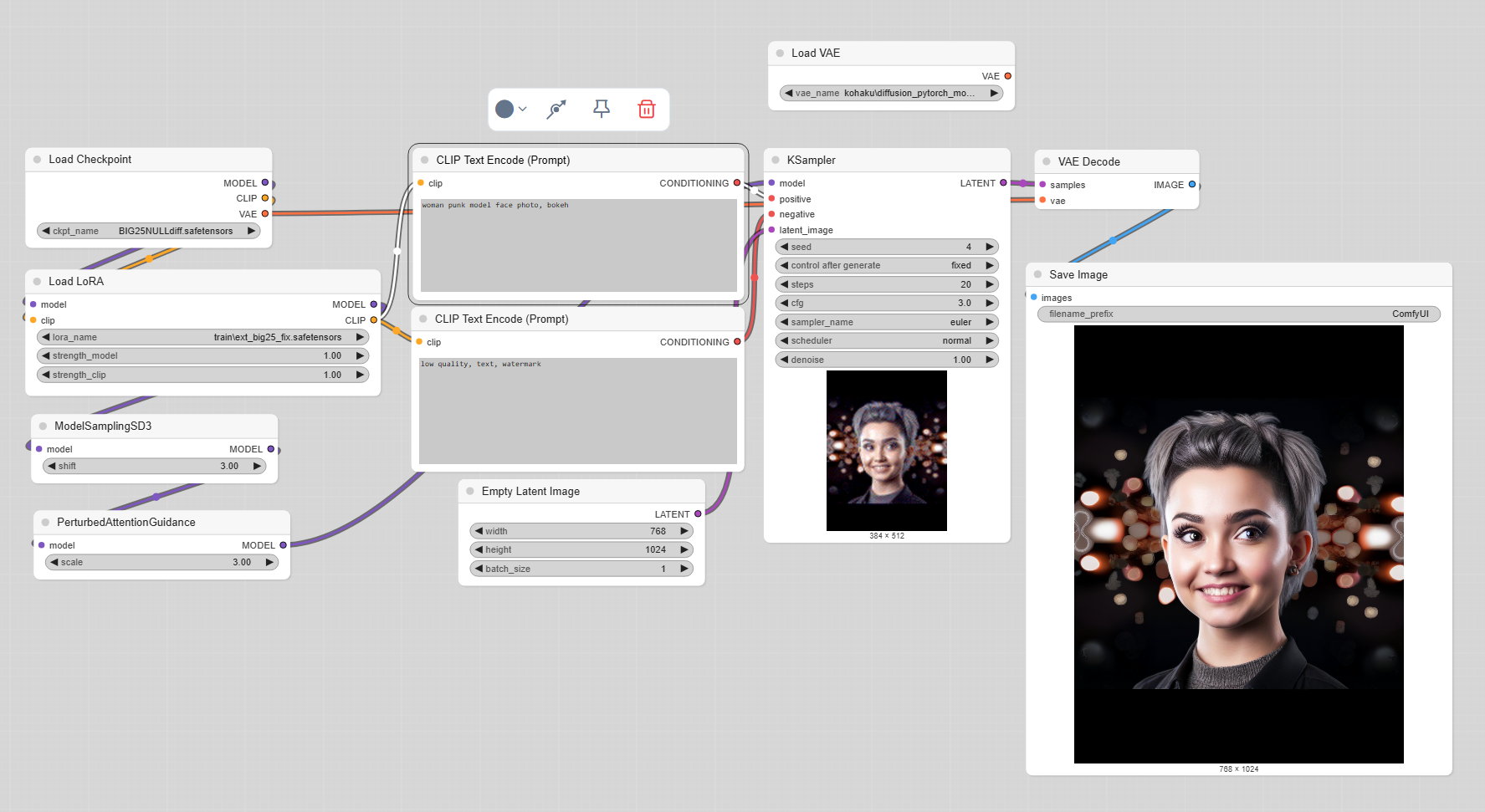

Validating the setup:

Generate any image using the original model.

Generate an image with the same settings using the pipeline with the diff model and the fine-tuned LoRA extract.

If the resulting images are identical, it means the compression and reconstruction process was successful.
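Why the images should match can be seen on a toy matrix. The sketch below assumes the null model's weights are all zeros and that the difference in Step 5 is "original minus merged". Both are assumptions about the setup, and all values are made up.

```python
def matmul(a, b):
    """Multiply two small matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def combine(a, b, sign=1.0):
    """Return a + sign * b element-wise."""
    return [[x + sign * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


# Toy "original model" weight. Real SDXL layers are far larger.
W_original = [[0.9, -0.2, 0.4],
              [0.1, 0.7, -0.5],
              [-0.3, 0.6, 0.8]]

# Hypothetical rank-1 extract (up: 3x1, down: 1x3), deliberately imperfect.
lora_up = [[0.8], [0.3], [-0.1]]
lora_down = [[1.0, -0.25, 0.5]]
lora_delta = matmul(lora_up, lora_down)

# Steps 4-5: merge the extract into the all-zero null model, then subtract
# that merged model from the original to get the diff model.
W_null = [[0.0] * 3 for _ in range(3)]
W_merged = combine(W_null, lora_delta)
W_diff = combine(W_original, W_merged, sign=-1.0)

# Validation: diff model + extract at strength 1 gives back the original.
W_rebuilt = combine(W_diff, lora_delta)
max_error = max(abs(x - y) for ra, rb in zip(W_rebuilt, W_original)
                for x, y in zip(ra, rb))
print(max_error)
```

Because the diff is taken against the merged extract itself, whatever the low-rank extract failed to capture ends up in the diff model, so the sum is exact up to floating-point rounding regardless of the rank.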

Training process

Load everything for training as if you were continuing a regular LoRA fine-tuning session.

In Network Weights, select the extract that was fine-tuned for one step.

In Pretrained Model, load the diff model (the same one used during the validation step).

Set Alpha = Rank.
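The reason for Alpha = Rank is that kohya-style LoRA networks scale the low-rank update by alpha / rank. The names $W_{up}$ and $W_{down}$ below are illustrative labels for the two LoRA matrices, and $r$ is the rank (network_dim).

$$
\Delta W = \frac{\alpha}{r}\, W_{up} W_{down}, \qquad \alpha = r \;\Rightarrow\; \Delta W = W_{up} W_{down}
$$

With both set to 64, as in the configs in this guide, the scale is 1, so the extract is applied at exactly the strength it was extracted at.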

An example config:

accelerate launch --num_cpu_threads_per_process 8 sdxl_train_network.py ^
--pretrained_model_name_or_path="K:/Comfyui_033/ComfyUI/output/checkpoints/BIG25NULLdiff.safetensors" ^
--train_data_dir="mydataset" ^
--output_dir="output_dir" ^
--output_name="NAME" ^
--network_args "algo=locon" "conv_dim=64" "conv_alpha=64" "preset=full" "train_norm=False" ^
--resolution="1024" ^
--save_model_as="safetensors" ^
--network_module="lycoris.kohya" ^
--shuffle_caption ^
--max_train_epochs=1000 ^
--save_every_n_steps=100 ^
--save_state_on_train_end ^
--save_precision=bf16 ^
--train_batch_size=1 ^
--gradient_accumulation_steps=1 ^
--max_data_loader_n_workers=1 ^
--mixed_precision="bf16" ^
--caption_extension=".txt" ^
--gradient_checkpointing ^
--network_dim=64 ^
--network_alpha=64 ^
--optimizer_type="prodigyplus.prodigy_plus_schedulefree.ProdigyPlusScheduleFree" ^
--optimizer_args "prodigy_steps=100" "betas=(0.8, 0.8)" "schedulefree_c=10" "weight_decay_by_lr=False" "d0=1e-5" "use_stableadamw=False" "d_limiter=False" "d_coef=1" "use_speed=False" ^
--learning_rate=1 ^
--loss_type="fft" ^
--flow_matching ^
--flow_matching_objective="vector_field" ^
--flow_matching_shift=2.5 ^
--color_aug ^
--flip_aug ^
--random_crop ^
--caption_dropout_rate=0.5 ^
--network_dropout=0.1 ^
--enable_bucket ^
--bucket_reso_steps=64 ^
--min_bucket_reso=768 ^
--max_bucket_reso=1280 ^
--xformers ^
--vae="K:/ComfyUI_p022/ComfyUI/models/vae/kohaku" ^
--network_weights="K:/Comfyui_033/ComfyUI/models/loras/train/ext_big25_fix.safetensors" ^
--full_bf16 ^
--mem_eff_attn ^
--lr_scheduler="constant" ^
--seed=1 ^
--no_metadata ^
--logging_dir="logs" ^
--log_with="tensorboard" ^
--persistent_data_loader_workers

That’s it: training proceeds as a normal LoRA continuation, but the model now effectively simulates full SDXL training while maintaining very high coherence and efficiency.
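For readers unfamiliar with the flow-matching flags in the config above, here is a minimal sketch of what a velocity ("vector field") objective with a timestep shift usually looks like. It assumes the rectified-flow convention used by SD3-style trainers; the fork's --flow_matching options may be implemented differently, and the function names are made up for this example.

```python
import random


def shift_timestep(t, shift=2.5):
    """Warp a uniform t in [0, 1] toward the noisy end (SD3-style shift)."""
    return shift * t / (1.0 + (shift - 1.0) * t)


def flow_matching_pair(x0, noise, t):
    """Return the noisy input x_t and the velocity target for one sample."""
    x_t = [(1.0 - t) * a + t * n for a, n in zip(x0, noise)]
    target = [n - a for a, n in zip(x0, noise)]
    return x_t, target


rng = random.Random(1)
x0 = [rng.uniform(-1.0, 1.0) for _ in range(4)]   # stand-in for a clean latent
noise = [rng.gauss(0.0, 1.0) for _ in range(4)]   # Gaussian noise
t = shift_timestep(rng.random(), shift=2.5)
x_t, target = flow_matching_pair(x0, noise, t)
```

In this convention t = 0 is the clean latent and t = 1 is pure noise, so a shift above 1 spends more training steps on the noisier timesteps. The model is trained to predict `target` from `x_t`.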

Output LoRA models

The resulting LoRA models directly replace the LoRA used in the pipeline with the diff model — simply substitute your existing LoRA file (e.g., ext_big25_fix.safetensors) with the newly trained one.

For example:

Base test:

Finetuned an SVD extract on a 100-image dataset for 10 epochs using only the class token, via the EQ latent space:

Tips

When training an EPS checkpoint:

Use an SNR-based loss that adapts to the timesteps,

or use EDM2 as a guideline for dynamic learning behavior.

When training a FLOW checkpoint:

You can use practically any loss function,

since noise-based methods do not interact with FLOW training in any meaningful way.

If you don't need to train the text encoder, you can disable it via --network_train_unet_only and load the existing TE from ext_big25_fix.safetensors in the generation pipeline (strength clip = 1, strength model = 0).

You can also swap the pretrained TE for another model's TE (or train on it): slice the extracted LoRA with the slicer script, using this blora_traits.json config:

{
  "te": {
    "whitelist": ["lora_te1_text_model_encoder_layers_", "lora_te2_text_model_encoder_layers_"],
    "blacklist": []
  }
}

Use it via blora_slicer.bat:

python blora_slicer.py --loras C:/Users/user/Desktop/blora_for_kohya-main/model-step00000200.safetensors --traits te --debug
pause

In this case, you’ll get separate text encoder modules that you can add to another LoRA model where the TEs were removed in the same way (adjust blora_traits.json and blora_slicer.bat accordingly).
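To get a feel for what the whitelist in blora_traits.json selects, here is a small sketch of substring-based key filtering. This is an assumption about how such a filter could work, not the slicer script's actual code; `select_keys` and the sample key names are made up for illustration.

```python
def select_keys(keys, trait):
    """Keep keys matching any whitelist entry and no blacklist entry."""
    white = trait["whitelist"]
    black = trait["blacklist"]
    return [k for k in keys
            if any(w in k for w in white) and not any(b in k for b in black)]


traits = {
    "te": {
        "whitelist": ["lora_te1_text_model_encoder_layers_",
                      "lora_te2_text_model_encoder_layers_"],
        "blacklist": [],
    }
}

# Illustrative key names in the usual kohya naming style.
sample_keys = [
    "lora_te1_text_model_encoder_layers_0_mlp_fc1.lora_down.weight",
    "lora_te2_text_model_encoder_layers_5_self_attn_q_proj.lora_up.weight",
    "lora_unet_input_blocks_4_1_proj_in.lora_down.weight",
]

te_keys = select_keys(sample_keys, traits["te"])
print(te_keys)
```

Under this assumption only the two text encoder entries are kept and the UNet entry is dropped. That matches the stated goal of getting separate TE modules.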

![[⚡WIP DRAFT⚡] [PISSA] [SVD] Fast full finetune simulation at home on any GPU (part 1)](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/4c575b4a-007f-433d-a92b-51ebacc7b19d/width=1320/024382502810159.jpeg)