UPDATE:

Part 2 is out - check it out here.

Do not use the files attached to this article. The Part 2 article provides an updated notebook and workflows that are much better, so you should switch to those.

The instructions in this article should help you through your first set up using the files in the Part 2 article.

"If you can sign up for a Gmail account, and have a computer with a web browser, you can generate video for free on Colab!"

(Massive props go to OGMustard, whose Colab guides and notebook I'm basing this on)

This is Part 1 of a series on running ComfyUI on Google Colab to generate images and video at no cost. Part 1 covers getting video generation set up and running a test text-to-video generation. Part 2 will cover more advanced techniques like image-to-video and the 2-phase video generation for added quality.

Hey, everyone! I'm sharing my workflows, colab notebooks, and instructions on how you can generate video using Google Colab's free tier. You will not need a GPU, and you will not need to sign up for anything other than a Google account to get access to Google Drive and Colab. Furthermore, once set up, you can access and run this from anywhere you have a computer with a web browser and Internet connectivity. Everything should be automated, so you shouldn't need any coding knowledge or ComfyUI experience (but it always helps) - It should be as easy as clicking a button and waiting a few times, then inputting a prompt and getting video out. Let's get started.

Step 1: Get a Google account.

This should be self-explanatory. If you already have a Google account, you can use it, but you are only allowed 15GB total of storage for free, and your Gmail takes up that space, too. You will be using 10GB by following these instructions, so plan accordingly (or sign up for a second Google account).
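If you want to check whether an existing account has enough room, the arithmetic is simple. Here's a minimal sketch using the 15GB and 10GB figures from above; the function name and the example Gmail usage are just made up for illustration:

```python
FREE_TIER_GB = 15    # total free Google storage (figure from this article)
SETUP_USAGE_GB = 10  # space this guide's install takes up (figure from this article)


def remaining_after_setup(already_used_gb: float) -> float:
    """Return the free space (in GB) left after the install.

    A negative result means the install will not fit in this account.
    """
    return FREE_TIER_GB - SETUP_USAGE_GB - already_used_gb


# Hypothetical example: an account whose Gmail already uses 3 GB.
print(remaining_after_setup(3.0))  # 2.0
```

If the number comes out negative, that's your cue to clean up or use a second account.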

Step 2: Download workflows and notebook.

Download 'ComfyUI_WAN5B.zip' and 'WAN5B.json' to your computer (they are both attached to this article). Unzip 'ComfyUI_WAN5B.zip' and get the 'ComfyUI_WAN5B.ipynb' file out of it. You'll use these to set things up. We'll be saving both of these to your Google Drive, so you'll be able to access them from anywhere in the future.

Step 3: Import notebook and set up Colab.

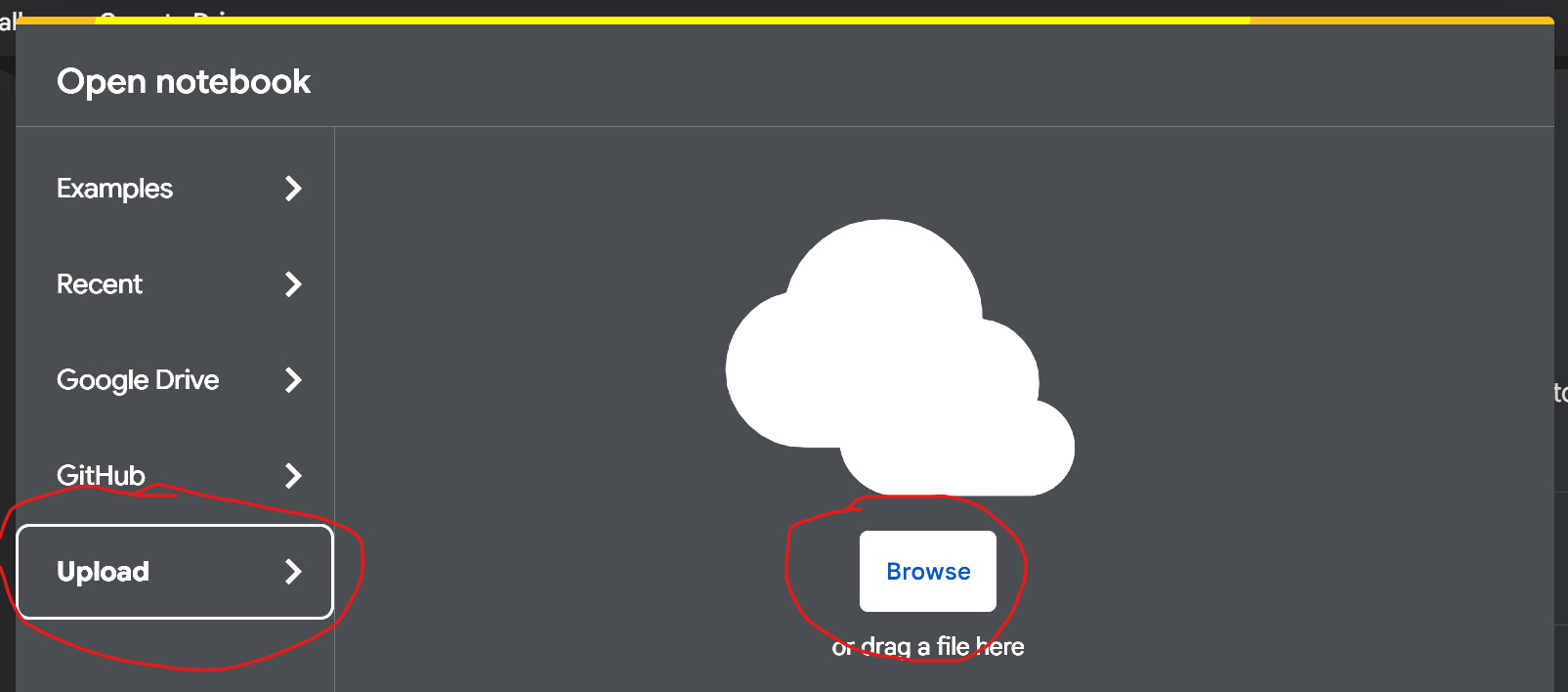

Go to https://colab.research.google.com. Log in with the Google account you created or chose in Step 1. You should choose 'Upload' on the 'Open Notebook' screen:

Click 'Browse' and choose the 'ComfyUI_WAN5B.ipynb' file you unzipped in Step 2:

You should now have a copy of the WAN5B colab notebook. Close the "Release Notes" tab if it's open, and let's have a tour:

In the upper right, you'll see your instance (this should mention a 'T4' instance - that's the one with the GPU you'll need):

Once you start running things, this connects to an 'instance' or virtual machine on Google's cloud (it's like a computer in a datacenter that you are renting, but the rent is free, because this is pretty outdated hardware - the GPU you'll be using is 7 years old as of 2025).

It looks like this when running, and if you click on the graphs, you'll see graphs of how much RAM you are currently using on the computer you are borrowing, and a note about how much free time you have left (you get about 4-5 hours per day):

MAKE SURE to disconnect and delete your runtime when you are done using it, or it will continue to run and use up all your time credits (click the down-arrow next to the graphs and choose the option):

Now on to the actual notebook:

On the right, under the 'Notes' section (which has some helpful reminders for you), you'll see 3 more sections corresponding to the steps you will take to install and run ComfyUI (the framework you will use to generate videos). Each of these has a hidden cell underneath it that contains the Python code that you'll run in that step.

On your first time through - you'll be running all 3 steps, and configuring them using checkboxes that will appear to the left of them once you expand them.

When you come back to colab in the future - you'll only need to run the first ('Install Dependencies') and third ('Run ComfyUI'), because the 'Resources' will be stored on your Google Drive (saving you valuable time!)

Step 4: Install Dependencies

On your first time through, you'll check all 4 boxes, because you'll be installing ComfyUI to your Google Drive. If you leave colab (remembering to disconnect and delete your 'instance') and come back later, you'll run this step with the first and fourth checkbox only, unless you need to update ComfyUI for some reason - which you shouldn't. Expand the 'Install Dependencies' section by clicking the '>', make sure all 4 checkboxes are checked, then click the arrow-in-a-circle next to the code section:

If your instance isn't currently running, it will connect, then the code in the box to the right will begin running on your instance. It will first connect to your Google Drive - you'll need to provide permissions ('Select All' and 'Continue') and possibly log in (use the same Google account you've been using). If you're interested in what it's doing, text output from the running code appears farther down the page. It could take up to 5 minutes to complete. When it's done running, you'll see a green checkmark next to the arrow-in-a-circle by the code block:

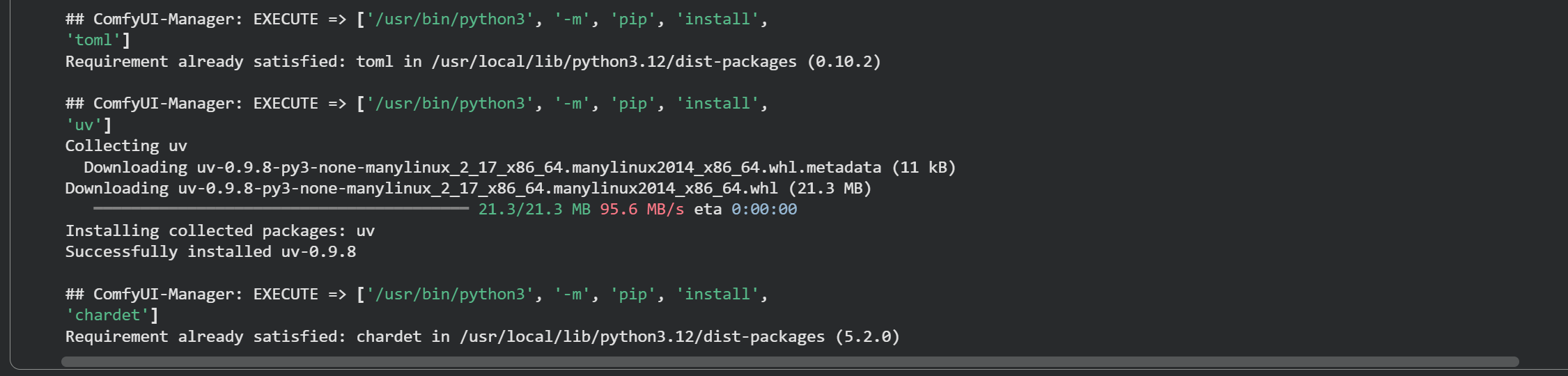

and you should see something like this at the bottom of the output:

!Important! - Go back up to the top of this section, and uncheck the 'UPDATE_COMFY_UI' and 'USE_COMFYUI_MANAGER' checkboxes, so you don't forget and leave them checked next time.
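To summarize the checkbox logic for this step, here's a rough sketch. It assumes 'UPDATE_COMFY_UI' and 'USE_COMFYUI_MANAGER' are the second and third checkboxes (that's my reading of the instructions above); 'checkbox_1' and 'checkbox_4' are placeholder names, not the real labels in the notebook:

```python
def install_flags(first_run: bool) -> dict[str, bool]:
    """Return which 'Install Dependencies' checkboxes to tick.

    'checkbox_1' and 'checkbox_4' are placeholders for the first and fourth
    checkboxes; their real labels are whatever the notebook shows.
    """
    return {
        "checkbox_1": True,                # always checked
        "UPDATE_COMFY_UI": first_run,      # only needed on the first install
        "USE_COMFYUI_MANAGER": first_run,  # only needed on the first install
        "checkbox_4": True,                # always checked
    }


print(install_flags(first_run=True))   # everything checked
print(install_flags(first_run=False))  # only the first and fourth checked
```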

Step 5: Download Resources

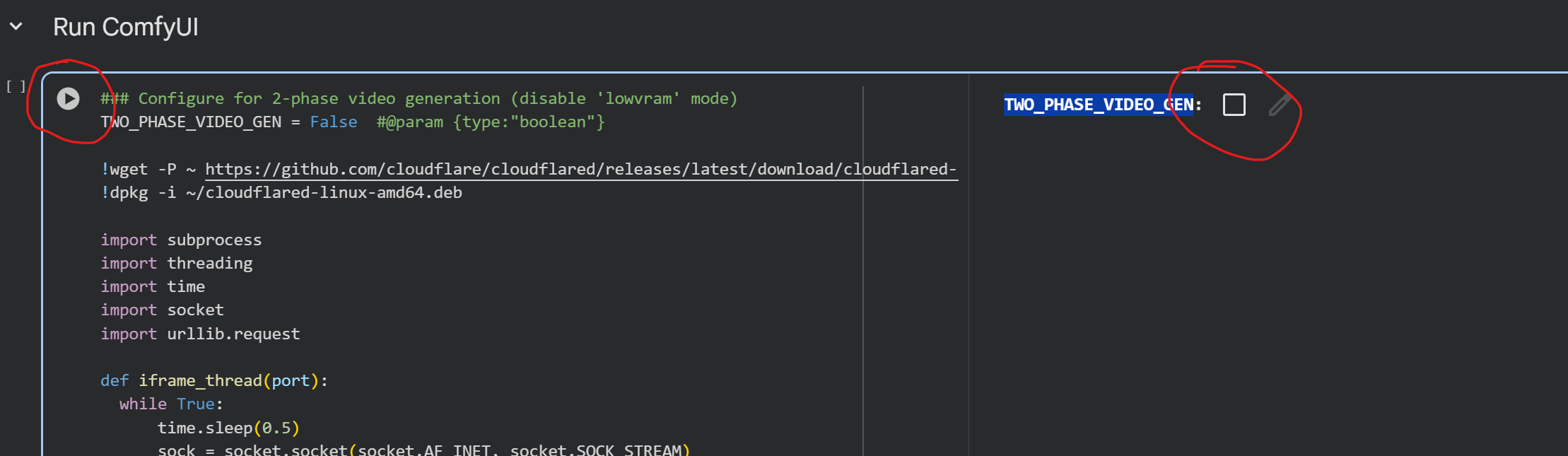

Expand the 'Download Resources' section by clicking the '>', then check the last 4 checkboxes, leaving 'TWO_PHASE_VIDEO_GEN' unchecked (I will explain this in the next article - it's an option that adds some complexity, and gets marginally better quality outputs). This will now download WAN 2.2, along with all the necessary extra stuff you need to run it, to your google drive. Once the checkboxes are correct, click the arrow-in-a-circle next to this new code block and let it run:

While you wait for the downloads to finish, here's an explanation of the 'TWO_PHASE_VIDEO_GEN' option: I've chosen 'WAN 2.2 TI2V 5B' to generate video for a number of reasons (read down at the bottom if you're interested), but the main one is size - I wanted one workflow that could do text-to-video and image-to-video (first-frame, last-frame, and first-last-frame), and it had to fit in 15GB of storage (to keep the Google Drive storage free of cost) and run in 12GB of system RAM and 16GB of VRAM (because that's what these free instances have).

You have to run a text encoder (which is another big model for WAN), then the model that actually makes the video, then the VAE which turns what the model spits out into something your computer can play back (which in WAN 2.2 5B's case is a big ol' chungus and a real memory hog). You can compress the model and the text encoder, but the smaller you make it, the worse the quality gets (the text encoder gets really flaky at higher compression, making it not listen to your prompts well at all). Running all 3 steps in one workflow requires a more compressed model (the Q4 GGUF quantization here) and some fancy memory management (block swapping) to deal with the VAE - that's how we're setting it up first because the quality will very probably be just fine for what you're working with.

But, if we run the first 2 parts of that workflow, then save the resulting 'latent' (what the model actually operates on in memory) off to your Google Drive, THEN come back in another workflow, load that latent back in and do just the third step with only the VAE, we can actually get away with running a model that's much less compressed (the Q8 GGUF quantization). I can see slight differences in video quality in preliminary testing, but it's not much. Still, it's good to have the option (and I spent a lot of time stuffing these models on to these instances so they just barely fit, and I didn't want that effort to go to waste).

If you are interested in switching to the 2-phase mode, the pros, cons, and instructions will be in the Part 2 article.
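If it helps to see the two modes side by side, here's a small sketch of the idea. This is not the notebook's actual code; the class, function, and stage names are ones I made up to mirror the description above:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationPlan:
    quantization: str                       # GGUF quantization of the video model
    workflows: tuple[tuple[str, ...], ...]  # stages, grouped by workflow run


def plan_generation(two_phase_video_gen: bool) -> GenerationPlan:
    """Describe what runs in each mode (illustrative stage names only)."""
    if two_phase_video_gen:
        # Phase 1 stops at the latent and saves it; phase 2 only runs the VAE,
        # which leaves room for the less compressed Q8 model.
        return GenerationPlan(
            "Q8",
            (
                ("text_encoder", "video_model", "save_latent"),
                ("load_latent", "vae_decode"),
            ),
        )
    # Everything in one workflow: needs the smaller Q4 model (plus block swapping).
    return GenerationPlan("Q4", (("text_encoder", "video_model", "vae_decode"),))


for mode in (False, True):
    print(mode, plan_generation(mode))
```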

Once stuff is done downloading (again, look for the green checkmark next to the arrow-in-a-circle), move on to the next step.

Step 6: Run ComfyUI

The last section is where we run ComfyUI. For now, leave the 'TWO_PHASE_VIDEO_GEN' checkbox unchecked and click the arrow-in-a-circle to run ComfyUI:

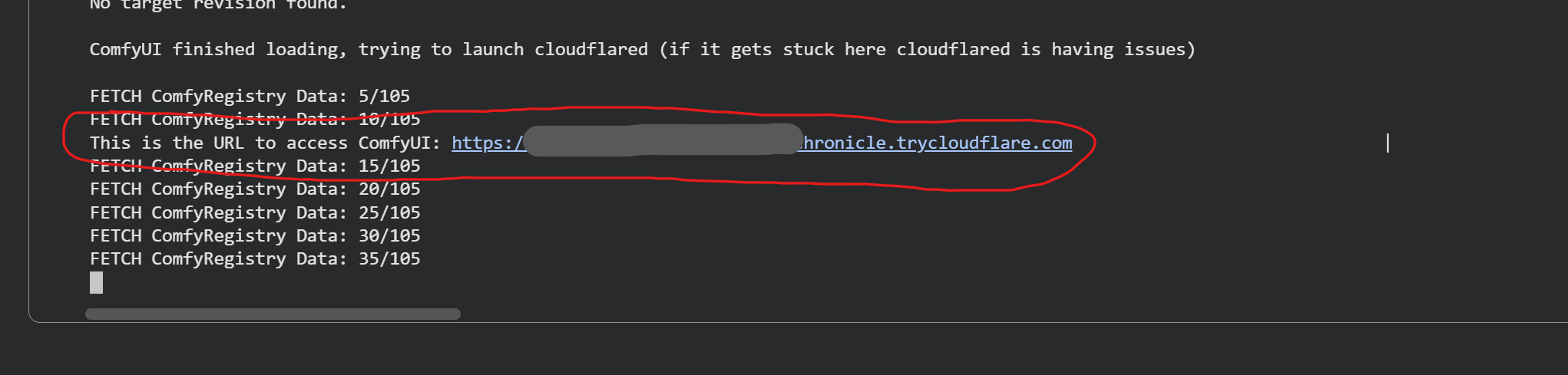

Now, you'll have to scroll down to the very bottom of the colab notebook and look at the output for a 'trycloudflare.com' URL - this is where you'll go to access ComfyUI. It may take some time (5 to 7 minutes), but once it appears, click on it. You'll have to wait some more (again, 5+ minutes sometimes) for ComfyUI to then load through the cloudflared tunnel - it will take a while but it will eventually load (this is the worst part, just be patient...):

If you get an immediate 'DNS Resolution' error right after clicking the link, it means cloudflared messed up:

You can try again by going to the top of the code box for this section, clicking the square-in-a-circle to stop ComfyUI, waiting for it to turn into an arrow-in-a-circle, then clicking it again to restart it.
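If you have trouble spotting the URL in all that output, you could copy the text and search it with a small script. Here's a minimal sketch; the sample log lines are invented for the example and won't match the notebook's real output word for word:

```python
import re
from typing import Optional

# Matches an https URL on a trycloudflare.com subdomain.
TUNNEL_URL = re.compile(r"https://[A-Za-z0-9-]+\.trycloudflare\.com")


def find_tunnel_url(log_text: str) -> Optional[str]:
    """Return the first trycloudflare.com URL in the text, or None if absent."""
    match = TUNNEL_URL.search(log_text)
    return match.group(0) if match else None


# Invented sample output, for illustration only.
sample_log = """
Starting ComfyUI...
Your tunnel is available at https://example-words-here.trycloudflare.com
"""

print(find_tunnel_url(sample_log))  # https://example-words-here.trycloudflare.com
```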

ComfyUI Starting...

waiting...

waiting...

YAY!

If you see this screen, ComfyUI is loaded! Now it's time to load up the workflow - you're almost there! Click the 'X' in the corner of the 'Templates' popup to close it. You should see a default workflow load up. Ignore that and load the one you downloaded!

Step 7: Load and run the workflow

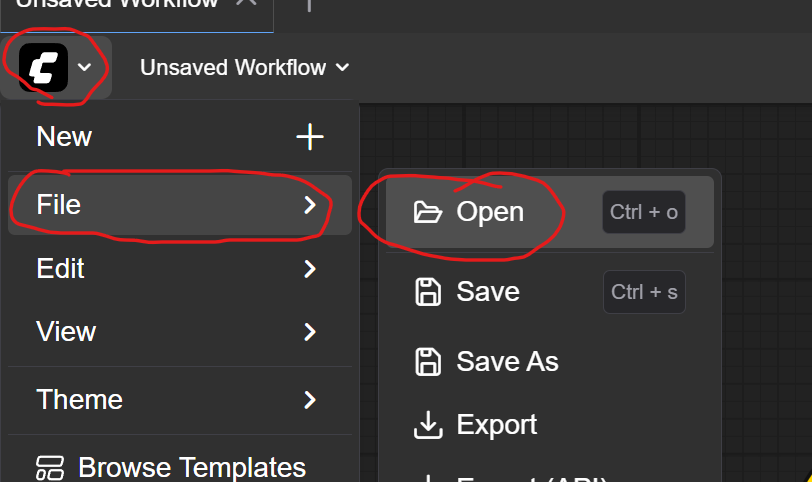

Click the 'C' icon in the upper left, then 'File >', then 'Open':

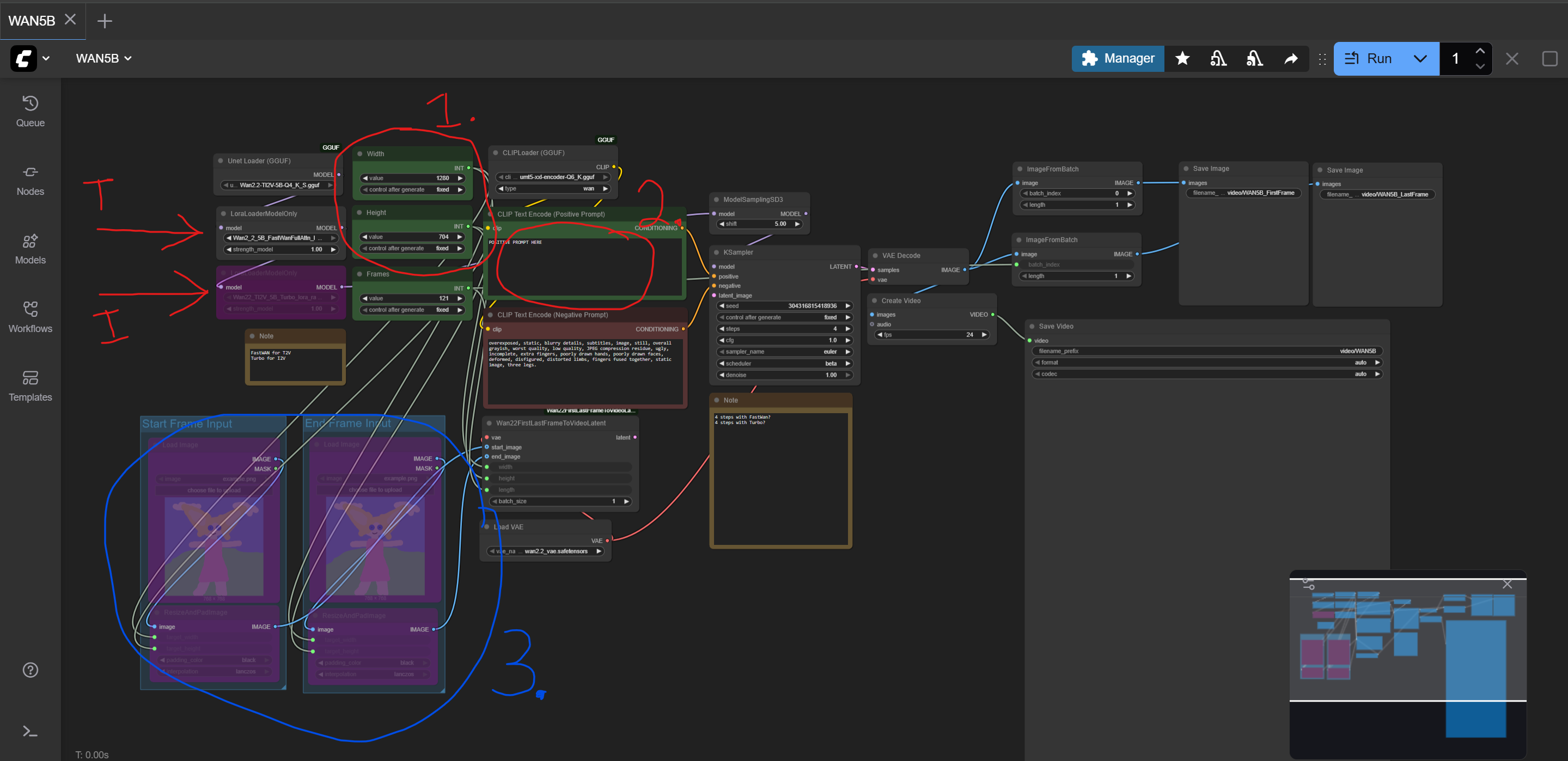

Find the 'WAN5B.json' file you downloaded and choose it. That should load the WAN 2.2 5B workflow. It looks complicated, but there are only a couple of things you'll need to mess with:

(EDIT: I've updated the workflow (big thank you to qek!) so the negative prompt box is gone - it wasn't used anyway, because CFG is set to 1.0 for the 4-step speed-up - and not having to encode it saves a bunch of time!)

Once the workflow is loaded, click the 'C' icon in the upper left again, then 'File >', then 'Save', and give the workflow a name - this saves the workflow to your Google Drive so it will always be available in this install of ComfyUI.

I've added some markup here to explain what parts of this workflow you'll be changing. It looks a little complicated, but you won't need to adjust much of it. I colored the nodes you should adjust GREEN. Nodes that are disabled (or bypassed) are PURPLE. A quick explanation of the parts:

'T' and 'I' - the arrows marked 'T' and 'I' point to 2 nodes that load LoRAs (smaller models that modify the main model we're using) to allow us to create videos in only 4 sampler steps instead of the usual 30. This cuts generation time down to about 10 minutes per video. You want to enable only the 'T' one when using text-to-video, and only the 'I' one when using image-to-video. We won't need to disable or enable anything now - we'll be doing that in Part 2.

'1.' - This is where you set the width and height of your video. You should only use 2 different options with this workflow - 704x1280 and 1280x704 (width x height). This is because that is WAN 5B's 'native' resolution and WAN 5B is VERY sensitive to resolution changes. Also, I've sized things in this workflow to barely fit in the memory we have when creating this size video. These 2 sizes will work, and will work best.

'2.' - This is where your text prompt goes - it's where you tell the model what to put in your video. In Part 2, when we include images as part of the prompt (for image-to-video), you may also want to include a short description of what is in your image before you describe what happens in your video.

'3.' - This is where you can load in images to either start your video from or end your video on, or both, or neither (it's very flexible). We will be messing with this in Part 2, but for now, leave these all disabled.
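The rules for the 'T'/'I' LoRAs and the two allowed sizes can be written down as a couple of quick checks. This is just a sketch to make the rules concrete, with function names I made up; nothing like this exists in the workflow itself:

```python
# The only two sizes this workflow is meant to run at, as (width, height).
ALLOWED_SIZES = {(1280, 704), (704, 1280)}


def check_size(width: int, height: int) -> None:
    """Raise ValueError unless the size is one of the two supported ones."""
    if (width, height) not in ALLOWED_SIZES:
        raise ValueError(
            f"{width}x{height} is not supported; use 1280x704 or 704x1280"
        )


def speedup_lora(mode: str) -> str:
    """Return which marked LoRA node ('T' or 'I') to enable for a given mode."""
    choices = {"text-to-video": "T", "image-to-video": "I"}
    if mode not in choices:
        raise ValueError(f"unknown mode: {mode!r}")
    return choices[mode]


check_size(1280, 704)                 # landscape, passes silently
print(speedup_lora("text-to-video"))  # T
```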

For your first test, you should do a simple text-to-video run. You'll be creating a 5-second 720p 24fps video (well, technically only 704x1280@24fps). Width should be set to 1280 and height to 704. Frames (or the number of frames to generate total) should stay at 121 (24 frames per second X 5 seconds = 120, plus we do one additional frame).
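The frame count math, as a tiny sketch (the function name is my own; the 24 fps and the extra frame come straight from the paragraph above):

```python
def frame_count(seconds: int, fps: int = 24) -> int:
    """Frames to generate: fps * seconds, plus one additional frame."""
    return fps * seconds + 1


print(frame_count(5))  # 121
```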

As an example you can put the following prompt in the 'Positive Prompt' box ('2.'), or use whatever you want if you're feeling adventurous:

dusk time, mixed colors, wide shot, firelight, establishing shot, high contrast lighting. The video follows a runner through different terrains. Starting in a desert, the camera slowly pans to the right as the runner transitions to climbing a mountain path. The shot captures the lone figure persevering through difficult terrain; his pace is steady, and he occasionally uses his hands to steady himself on rocks. The background gradually shifts from rolling sand dunes to steep, rocky peaks, illustrating the arduous nature of the journey. The runner remains centered in the frame throughout, and the camera movement is smooth, without any abrupt changes.

Then click the 'Run' button in the top right corner and wait (the number is how many runs to do in a row - leave that at '1'):

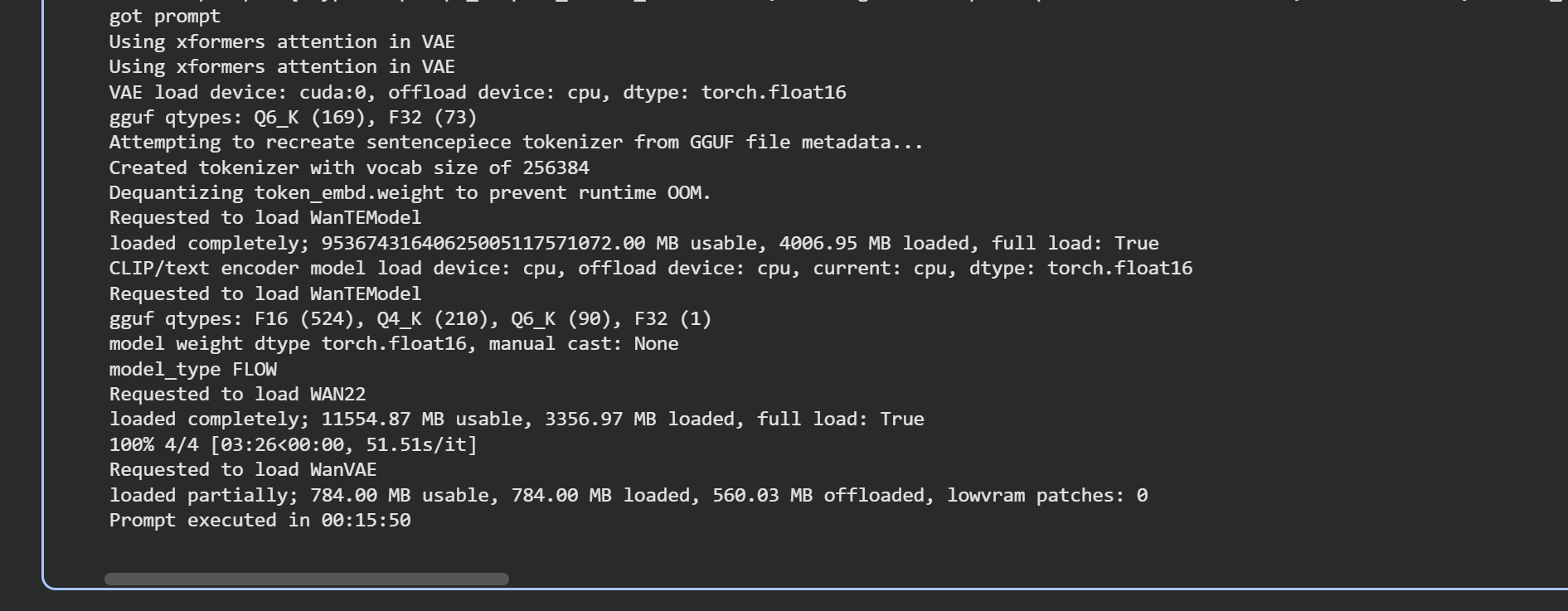

That's it! ComfyUI is now creating a video for you using the WAN 2.2 5B model and the prompt you entered. The prompt I provided is the first example prompt from the WAN 2.2 manual that was created by the makers of the WAN models. If you'd like to watch the output of ComfyUI (there's some logs about what it's doing and a timer when the model finally loads and starts doing passes), switch back over to the Google Colab tab and watch the text output at the very bottom of the screen:

Models take a long time to load the first time (because they're being copied off of Google Drive). Runs after this first one will be faster to load the models, because they'll be kept in your instance's memory.

Once you see that "Prompt executed in ..." message, you should have your video! It saves in a folder on your Google Drive, and, if you've got a good internet connection, you should see a preview load in the "Save Video" node to the lower right of the ComfyUI workflow. That preview doesn't work very well, so you should just download the video to view it.

Step 8: Download your output

Go back to the Google Colab browser tab and click on the folder icon along the left side of the screen:

That will open your Google Drive (as it appears mounted to the instance you're running). Drill down in the file structure to 'drive' -> 'MyDrive' -> 'ComfyUI' -> 'output' -> 'video':

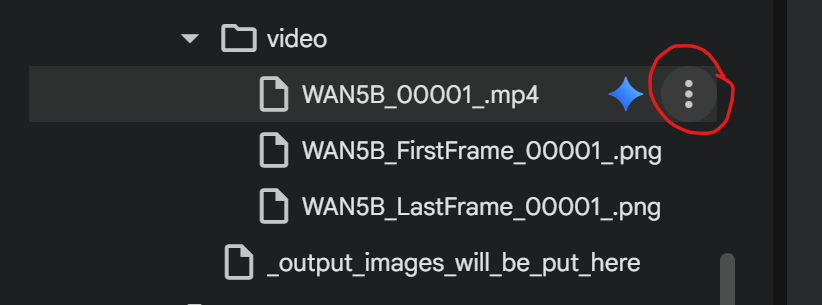

That folder will contain 3 files: your video, and 2 .PNG images that are the first and last frames of that video. Click on the 3 dots next to the files and download them to your computer for viewing and whatever:

Why the .PNGs? - The workflow is set up to save the first and last frames so that you can use them to extend the video (in either direction!) later if you want, by loading one of those images as either the start frame or end frame, then using image-to-video to create another video that either starts (or ends) at that point. This is basically how all long WAN videos are created (It gets more complicated than that, but that's the basis for all of it).
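If you'd rather check the output folder from a notebook cell than click through the file browser, something like this sketch would do it. The '/content' prefix on the path is the usual Colab mount location and is an assumption on my part, as is treating anything that isn't a .PNG as a video:

```python
from pathlib import Path

# Assumed location of the output folder when Drive is mounted in Colab.
COLAB_VIDEO_DIR = Path("/content/drive/MyDrive/ComfyUI/output/video")


def list_outputs(video_dir: Path) -> dict[str, list[str]]:
    """Split the files in the folder into videos and .png frame images."""
    files = sorted(p for p in video_dir.iterdir() if p.is_file())
    return {
        "videos": [p.name for p in files if p.suffix.lower() != ".png"],
        "frames": [p.name for p in files if p.suffix.lower() == ".png"],
    }


# Only runs where the folder actually exists (i.e. on your Colab instance).
if COLAB_VIDEO_DIR.exists():
    print(list_outputs(COLAB_VIDEO_DIR))
```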

Here's the video I got with my example prompt:

That's it! Don't forget to disconnect and delete your instance when you're done! Part 2 will delve into image-to-video and go over using a 2-phase workflow to get a little bit better quality. Thanks for reading, and leave any questions and comments! Below are some notes about the models I chose and some other musings if you're feeling you haven't read enough yet.

Notes about WAN 2.2 5B

Taking a step back and looking at what we are accomplishing here, WAN 5B is pretty impressive - we are creating 720p video using 7-year-old hardware (the Nvidia Tesla T4 GPU is on par with a 2070, but with 16GB of VRAM), and we're generating a 5-second clip in 15 minutes (and that will get down to 10 minutes once all the models are loaded in RAM). Plus - and this will get more apparent when you start doing I2V - you are still getting WAN here - all the great environmental and subject consistency is still there. I2V is way more impressive than T2V!
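To put those timings in perspective, here's a rough back-of-the-envelope sketch of how many clips fit in one free session. It uses the 15 and 10 minute figures above and ignores the time spent installing and waiting for ComfyUI to load, so treat the result as an upper bound (the function name is my own):

```python
FIRST_CLIP_MIN = 15  # first clip, including initial model loading
LATER_CLIP_MIN = 10  # each later clip, once the models are in RAM


def max_clips(session_minutes: int) -> int:
    """Upper bound on clips per session, ignoring setup and startup time."""
    if session_minutes < FIRST_CLIP_MIN:
        return 0
    return 1 + (session_minutes - FIRST_CLIP_MIN) // LATER_CLIP_MIN


print(max_clips(4 * 60))  # 23
```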

There are definitely tradeoffs. Even though the model itself is small, the text encoder (think CLIP) is still huge (I'm using a GGUF version of that, too, because even fp8 scaled it's 6+GB), and it does not put up with quantization well. The VAE is also quite big and memory hungry - but I think they've baked some of the magic into that, too - it's doing some heavy lifting. It doesn't have the depth of LoRA support and development that the bigger 14B model does. But there's a lot going for it, too. It does both T2V and I2V in one model - that's key here. For example, there's a build (several, come to think of it) of the bigger 14B model that includes both the high and low noise models (because the 14B model is really 2 14B models that you run one-after-the-other) and can do both T2V and I2V, but the Q3 GGUF of that build is almost 10GB in size. 5B's Q8 GGUF is only just over 5GB, and I'll bet it can handily beat a Q3 GGUF quantization of the 14B model in quality, even with its 5B 'eccentricities'.

I attribute some of those eccentricities and the assumptions that 5B's quality is poor to the fact that it really breaks down when you use resolutions it doesn't like. Set it to generate smaller resolutions, and motion gets really screwy, and you get weird body-horror nonsense happening. Leaving it at 720p resolution (portrait or landscape orientation) solves 95% of those issues. I'd be interested to hear from folks that have some more experience with the 5B model about other potential optimizations, but that's another of its downsides - there just hasn't been that much community engagement with it - we all just seem to prefer the bigger model.

In my experience with the 14B models, and now the 5B model, I'm finding that WAN 2.2's text-to-video performance is not all that special. It really shines when doing image-to-video, though. Both models are amazing at animating cartoon and anime images, and they're not too bad with realism either. I'm constantly impressed by what the bigger 14B model can do, and its 5B little brother has that same DNA!

Stay tuned for Part 2!