This will walk you through setting up ComfyUI on Google Colab with a simple workflow that will allow you to generate images using ANY SDXL-based model you want, with any LoRAs you would like to use. No coding knowledge or experience with ComfyUI required.

This covers any SDXL-based model - so it will work with any finetune of SDXL, Illustrious, Pony, NoobAI, and possibly others. Any of these will work! You may be able to adapt the included workflow for other types of models, too! Let's get started!

(Massive props go to OGMustard, whose colab guides and notebook I'm basing this on.)

Step 1: Get a Google account.

This should be self-explanatory. If you already have a Google account, you can use it, but you are only allowed 15GB total of storage for free, and your Gmail takes up that space, too, so plan accordingly (or sign up for a second Google account).

Step 2: Download workflows and notebook.

Download 'ComfyUI_SDXL.zip' and 'SDXL.json' to your computer (they are both attached to this article). Unzip 'ComfyUI_SDXL.zip' and get the 'ComfyUI_SDXL.ipynb' file out of it. You'll use these to set things up. We'll be saving both of these to your Google Drive, so you'll be able to access them from anywhere in the future.

Step 3: Import notebook and set up Colab.

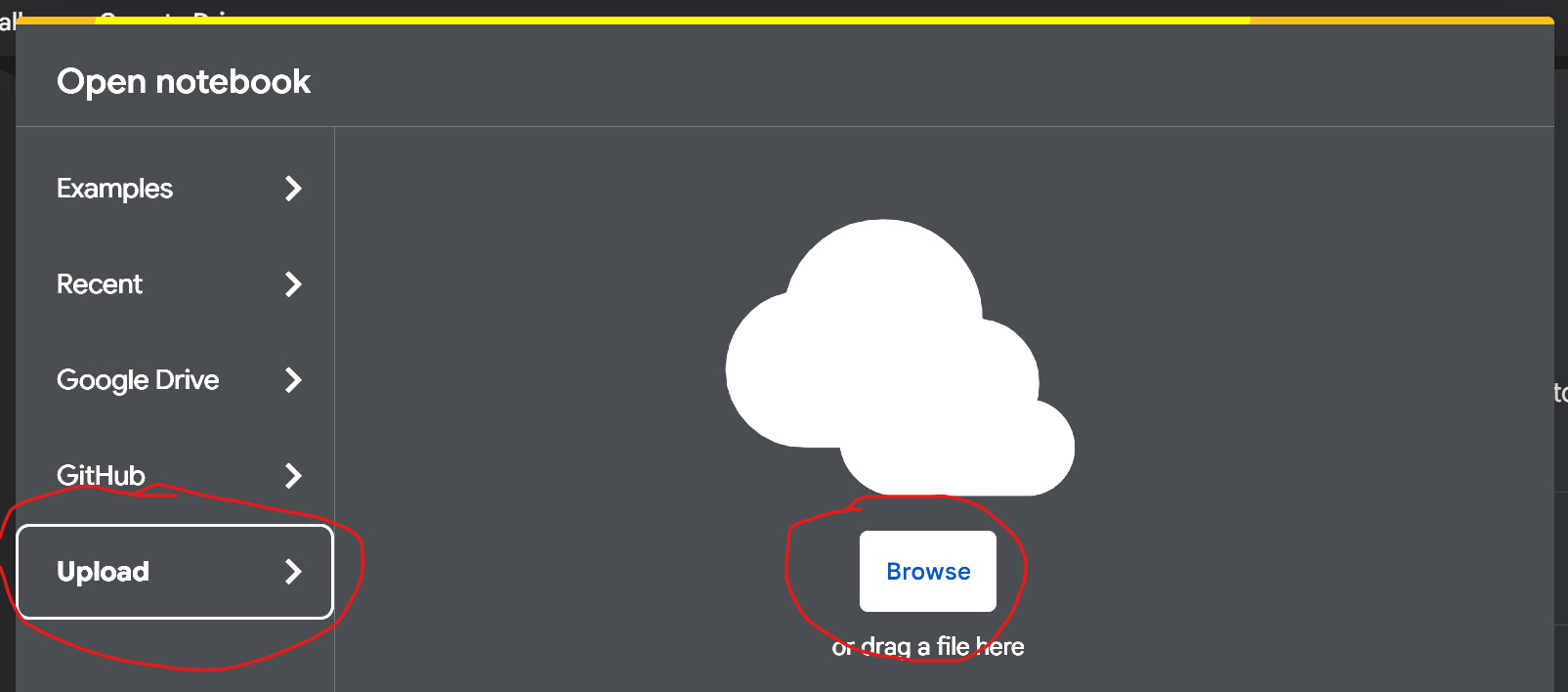

Go to https://colab.research.google.com and log in with the Google account you created or chose in Step 1. On the 'Open Notebook' screen, choose 'Upload'.

Click 'Browse' and choose the 'ComfyUI_SDXL.ipynb' file you unzipped in Step 2.

You should now have a copy of the SDXL colab notebook. When you leave and come back to colab, you can load the notebook again by choosing 'Google Drive' instead of 'Upload'. Close the "Release Notes" tab if it's open, and let's have a tour:

In the upper right, you'll see your instance. It should mention a 'T4' instance, which is the one with the GPU you'll need.

Once you start running things, this connects to an 'instance' or virtual machine on Google's cloud (it's like a computer in a datacenter that you are renting, but the rent is free, because this is pretty outdated hardware - the GPU you'll be using is 7 years old as of 2025).

When it's running, this area shows graphs of your resource usage. If you click on the graphs, you'll see how much RAM you are currently using on the computer you're borrowing, plus a note about how much free time you have left (you get about 4-5 hours per day).

MAKE SURE to disconnect and delete your runtime when you are done using it, or it will keep running and use up all your time credits (click the down-arrow next to the graphs and choose 'Disconnect and delete runtime').

On to the notebook. There are 2 sections that you will run in order: first 'Install Dependencies', then 'Run ComfyUI'. To run a section, expand it by clicking the '>' next to it, then click the circle with an arrow inside (the play button). While the code runs, the arrow changes to a square; click the square to stop it. The first time through, we want to install ComfyUI to your Google Drive, so make sure all 4 checkboxes are checked.

After the very first run, uncheck the middle 2 checkboxes ('UPDATE_COMFY_UI' and 'USE_COMFYUI_MANAGER') before running 'Install Dependencies'. The 'Install Dependencies' step takes around 10 minutes to complete.

After that step is complete, run the 'Run ComfyUI' step. At this point you have a choice: you can access ComfyUI through a 'cloudflared' tunnel, or directly through Google's network. Cloudflared is slower and sometimes less stable, but every ComfyUI web UI feature works through it. Without cloudflared, the only feature I've found that doesn't work is saving workflows inside ComfyUI, so I'll use the direct Google connection in these instructions. It just means you'll need to keep a copy of the workflow (that's the 'SDXL.json' file) somewhere on your computer, and 'Export' it from ComfyUI if you make changes you want to keep.

While that is running, we need to set up an 'API key' on civitai.com. You'll use this later to download your checkpoint and LoRAs and other resources. This is basically a password to your civitai.com account, so don't share it with anyone.

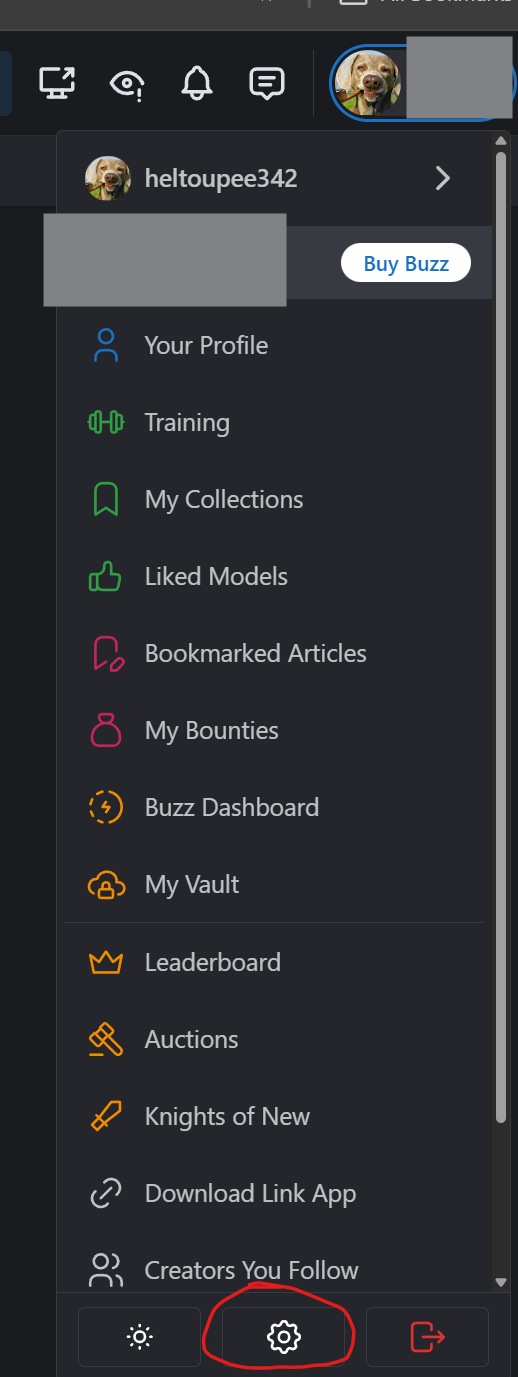

Go to your settings on civitai.com.

Almost all the way at the bottom is the 'API Keys' section. Click 'Add API Key'.

Then give the key a name and save it.

Then click the clipboard icon to copy the key. It won't ever be displayed again, so make sure you copy it, or you'll have to create a new one.

I save mine at the top of my colab notebook. You'll need it every time you come back to colab and start ComfyUI up again, if you want to download things from civitai.com.
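
This part is optional, and civicomfy does it all for you. If you'd rather not paste the key into the notebook itself, here is a minimal Python sketch of an alternative. It assumes you store the key under a secret named `CIVITAI_API_KEY` (a name I made up) in colab's 'Secrets' panel, which notebooks read with `google.colab.userdata.get`. It also shows what the key is for: civitai's public REST API accepts it as an `Authorization: Bearer` header. The search parameters (`query`, `types`, `limit`) are my reading of civitai's API docs, so double-check them there.

```python
import os
import urllib.parse
import urllib.request

CIVITAI_MODELS_URL = "https://civitai.com/api/v1/models"


def get_civitai_key(name: str = "CIVITAI_API_KEY") -> str:
    """Read the API key from colab Secrets, or from an environment variable
    when running outside colab. The secret name is a hypothetical choice."""
    try:
        from google.colab import userdata  # only importable inside colab
    except ImportError:
        return os.environ.get(name, "")
    return userdata.get(name)


def build_search_request(query: str, model_type: str, api_key: str) -> urllib.request.Request:
    """Build (but don't send) a civitai model search request."""
    params = urllib.parse.urlencode({"query": query, "types": model_type, "limit": 5})
    request = urllib.request.Request(f"{CIVITAI_MODELS_URL}?{params}")
    if api_key:
        # The key works like a password, so it goes in a request header.
        request.add_header("Authorization", f"Bearer {api_key}")
    return request
```

Passing the result to `urllib.request.urlopen` would actually send the search; I haven't verified the exact shape of the JSON that comes back, so inspect it before relying on any field.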

Back to the notebook. Scroll all the way to the bottom and look at the output from the second block ('Run ComfyUI'). You're looking for a link to open ComfyUI.

Click that link, and it should open ComfyUI in a new window.

YAY!

If you see this screen, ComfyUI is loaded!
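
For the curious, here's roughly how the non-cloudflared link from the 'Run ComfyUI' block can be produced. This is a minimal sketch, not the notebook's actual code. It assumes ComfyUI is listening on its default port, 8188, waits until that port accepts connections, and then asks colab's `google.colab.output.serve_kernel_port_as_window` (as I understand colab's API) to print a link that reaches the port through Google's network. Outside colab, it just prints a local address.

```python
import socket
import time

COMFYUI_PORT = 8188  # ComfyUI's default port; the notebook may use another


def wait_for_port(port: int, host: str = "127.0.0.1", timeout: float = 300.0) -> bool:
    """Return True once something accepts TCP connections on host:port,
    or False if nothing does before the timeout (in seconds)."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(0.5)  # server not up yet; try again shortly
    return False


def show_comfyui_link(port: int = COMFYUI_PORT) -> None:
    if not wait_for_port(port):
        print(f"Nothing is listening on port {port}; check the block's output for errors.")
        return
    try:
        from google.colab import output  # only importable inside colab
    except ImportError:
        print(f"Not running in colab; open http://127.0.0.1:{port} instead.")
        return
    # Prints a clickable link that reaches the port through Google's network.
    output.serve_kernel_port_as_window(port)
```

Waiting for the port first matters because ComfyUI takes a while to start, and a link printed too early would just show an error page.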

Step 4: Download Models and LoRAs and set up ComfyUI

At this point, you have a standard, fully functional install of ComfyUI with a couple of custom nodes and one extension installed. You can install more custom nodes with the Manager and do pretty much anything you could do with a local install; this one just happens to be hooked up to a 16GB Tesla T4 GPU (that's not quite as impressive as it sounds - it's on par with an RTX 2070 from about 7 years ago).
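
If you want to confirm from a notebook cell that you actually got the T4, here is a quick check using PyTorch, which ComfyUI already depends on. `torch.cuda.is_available` and `torch.cuda.get_device_properties` are standard PyTorch calls; the helper name is mine. It returns `None` when there's no GPU (or no torch), which usually means the runtime isn't set to a GPU type.

```python
def describe_gpu():
    """Return (GPU name, memory in GiB) for the first GPU, or None if there isn't one."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(0)
    # total_memory is in bytes; convert to GiB and round for readability.
    return props.name, round(props.total_memory / 1024**3, 1)


print(describe_gpu())
```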

This will be a short intro to ComfyUI using the workflow I've provided here. It will also show you how to use the 'civicomfy' extension to download checkpoints, LoRAs, embeddings, and other resources from civitai.com. I'm going to use the Illustrious 2.0 base model and a random LoRA as examples, but I want to stress that if you have a favorite model or a preferred set of LoRAs, go ahead and use those. This is your sandbox!

If you haven't already, click the 'X' to close the 'Templates' window. Then find the 'civicomfy' button and click it to open its interface.

Go to the 'Settings' tab and paste the API key we generated into the 'Civitai API Key' box. You'll also need to set the 'Default Model Type'; choose 'checkpoints'. Finally, there are some settings for blurring NSFW images in search results. This extension searches civitai.com, and the example images attached to models can be (very) NSFW if that's what the models are designed for. Leaving these settings alone is fine, or set them to taste.

Next, we'll search for a checkpoint to use. This is the main model you'll use to generate images. As an example, I'll search for 'Illustrious XL 2.0'. If you have a favorite model (say you like 'WAI-illustrious-SDXL', or maybe you prefer 'Pony Realism'), you can search for that instead. Fill out the search form, hit search, then scroll down to find the model you're looking for and click the blue button for the version you want to download.

This will add the model to your downloads and take you to the download tab.

Make sure the 'Model Type (Save Location)' is filled out correctly (full models go in 'checkpoints', LoRAs go in 'loras', embeddings go in 'embeddings'), then scroll to the bottom and click 'Start Download'. This will pull the model down to your Google Drive over Google's internet connection, which is nice and fast!
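
Under the hood, the save location just decides which subfolder of ComfyUI's 'models' folder the file lands in, and ComfyUI only looks for each kind of file in its matching folder. Here's a small sketch of that mapping. It assumes the notebook keeps ComfyUI at '/content/drive/MyDrive/ComfyUI', which is where colab mounts Google Drive and matches the 'drive' -> 'MyDrive' -> 'ComfyUI' -> 'models' path you'll see in Step 5. The `save_path` helper is hypothetical, not part of civicomfy.

```python
from pathlib import Path

# Assumed install location on the mounted Google Drive.
MODELS_ROOT = Path("/content/drive/MyDrive/ComfyUI/models")

# Model type -> ComfyUI subfolder, as described above.
SAVE_LOCATION = {
    "checkpoint": "checkpoints",  # full models
    "lora": "loras",
    "embedding": "embeddings",
}


def save_path(model_type: str, filename: str, root: Path = MODELS_ROOT) -> Path:
    """Where a downloaded file of the given type should end up."""
    try:
        folder = SAVE_LOCATION[model_type.lower()]
    except KeyError:
        raise ValueError(f"unknown model type: {model_type!r}") from None
    return root / folder / filename
```

If a file shows up in the wrong folder, ComfyUI simply won't list it in the matching node, which is why this setting is worth double-checking.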

Next, let's download a LoRA, which is a smaller model that adjusts the main checkpoint in specific ways. I'll search for 'definitive disney' (again, just as an example; you can use your favorite(s) if you have them). Just make sure you download the version built for the model type you're using: LoRAs built for Illustrious-based models don't work very well with Pony-based models, for example. Make sure to switch all the dropdowns accordingly.

You'll download the 'Illustrious XL' version if you're following my example.

Finally, we'll download some embeddings. These adjust your prompts when you activate them by adding 'embedding:<EMBEDDING_FILENAME>' to a prompt (I'll show you this in a bit). As an example, you can download 'lazypos' and 'lazyneg', embeddings that stand in for the default quality tags you'd otherwise have to include ('masterpiece, best_quality', etc.).

Download both the lazypos and lazyneg embeddings I indicate here. You'll have to click one, download it, then come back to the 'Search' tab and click the other. There are also versions for Pony-based models here that handle the 'score_9_up, score_8_up' tags, if that interests you.

Once all those downloads are completed, close the civicomfy window by clicking the 'X' in the corner.

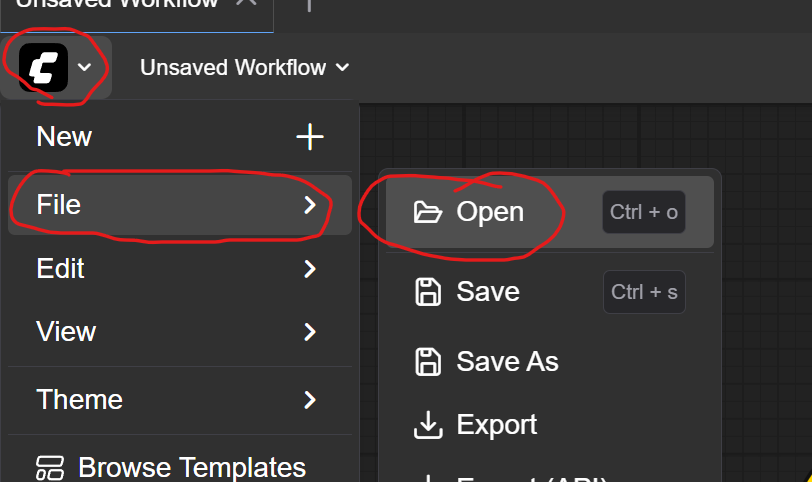

Next, you'll load up the workflow. Click the 'C' icon in the upper left, then 'File >', then 'Open'.

Find the 'SDXL.json' file you downloaded and choose it. That should load the SDXL workflow. Take a moment to notice the 'Export' option: if you change the workflow and want to save it, click 'Export' to download your changes. The workflow looks complicated, but there are only a couple of things you'll need to change:

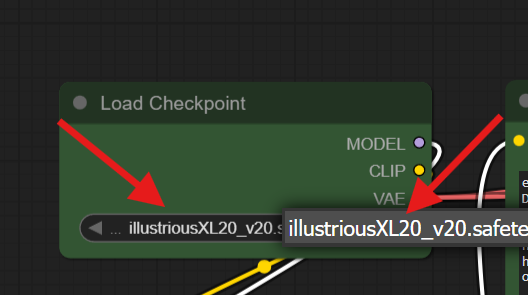

I've colored green the nodes you need to change (every little box in the workflow is a node). Starting from the left and moving right, first find the 'Load Checkpoint' node. Click the checkpoint name and choose the one you downloaded:

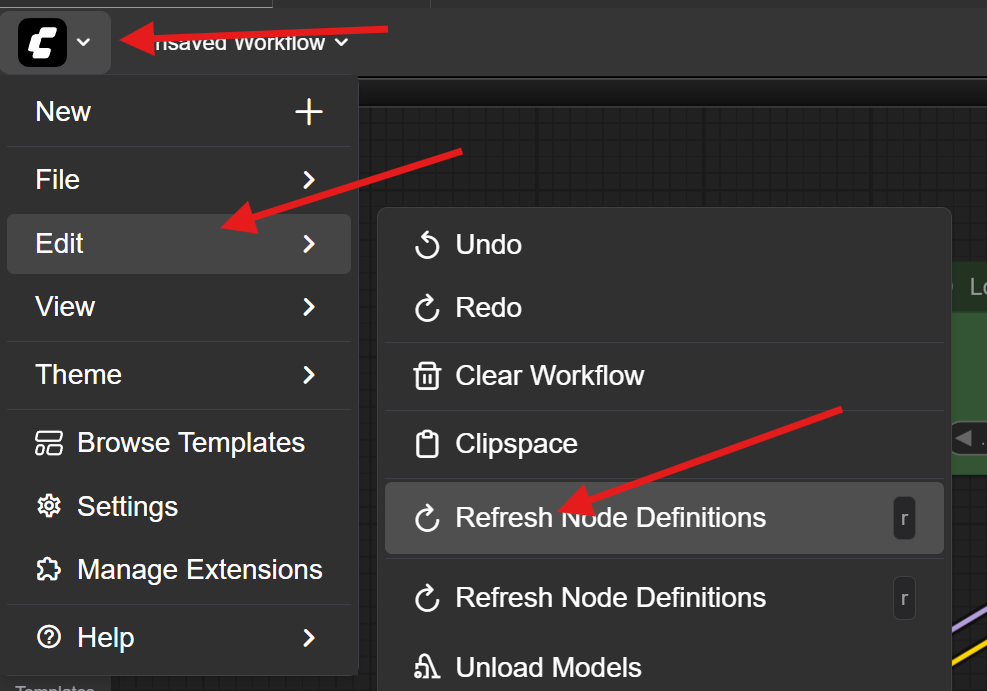

If it doesn't appear, you may have to refresh your nodes: click the 'C' icon in the corner, then choose 'Edit' -> 'Refresh Node Definitions'.

With the checkpoint chosen, next we'll load LoRAs. I'm using rgthree's 'Power Lora Loader' node here, which has a bunch of nice features. Click the 'Add Lora' button and choose the LoRA you downloaded.

If you have more than one LoRA, you can load them all here by clicking 'Add Lora' again. The toggle switch to the left of each LoRA turns it on or off, so you can choose which ones are applied. The number to the right of each LoRA is its strength: each LoRA's civitai.com page will have suggestions on what values to use, but generally, the higher this number, the stronger the LoRA's effect. You can also right-click on any LoRA to bring up a menu that lets you remove it, reorder it, or 'Show Info', which is VERY useful.

Clicking 'Show Info' brings up an info box. Click the 'Load from Civitai' button to fill this box with useful info straight from civitai.

Some highlights: 'View on Civitai' will take you to the model's civitai page, where you can read more about how to use it. The 'Trained Words' list combines two things: the words the creator specifically listed in the instructions on civitai (marked with the blue 'C'), and, for LoRAs based on SDXL and its derivatives, all the words that were used in the LoRA's training dataset. We're going to click the 3 words with the blue 'C' icon next to them, then click the 'copy' link that comes up to copy them to our clipboard, ready to be pasted into our positive prompt. Handy!

You can also scroll down and see all the images attached as examples (these come right from the model's civitai page, and are NOT censored or blurred in any way when NSFW - so, yeah, be careful). Hovering over an image shows all the data civitai detected about the image when the creator uploaded it. Some interesting data is available, including the checkpoint used, as well as (ostensibly) the seed and other generation settings.

For example, one of the example images for this LoRA was ostensibly generated with 'waiNSFWIllustrious_v70'.
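
About that strength number in the Power Lora Loader: a LoRA stores a small low-rank "patch" (two thin matrices, often called down and up) rather than a whole model, and the loader adds that patch to the checkpoint's weights, scaled by the strength. The sketch below shows that arithmetic on a toy 2x2 weight using the common formulation W' = W + strength * (alpha / rank) * (up @ down). Real loaders do this per layer on large tensors, and the exact scaling can vary by LoRA format, so treat this as an illustration only. Strength 0 leaves the checkpoint untouched; larger values push the weights further.

```python
def matmul(a, b):
    """Plain-Python matrix product of two lists of lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(weight, down, up, alpha, strength):
    """Return W + strength * (alpha / rank) * (up @ down).

    weight: out x in, down: rank x in, up: out x rank.
    """
    rank = len(down)
    delta = matmul(up, down)  # the full-size update, out x in
    scale = strength * alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(weight, delta)]


W = [[1.0, 0.0], [0.0, 1.0]]
down = [[1.0, 1.0]]  # rank 1
up = [[1.0], [0.0]]
print(apply_lora(W, down, up, alpha=1.0, strength=0.5))  # [[1.5, 0.5], [0.0, 1.0]]
```

Doubling the strength to 1.0 doubles the change to [[2.0, 1.0], [0.0, 1.0]], which is why very high strengths tend to overpower the checkpoint.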

Next, the positive and negative prompts. These boxes are where you describe what you'd like your model to draw for you (or, in the case of the negative prompt, what you'd like it not to draw).

Notice I've included the activation phrases for the embeddings we downloaded earlier. Don't worry if you misspell these: you will get a warning in ComfyUI's output (at the bottom of the colab page, probably still open in your other browser tab).
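
The embedding syntax is just text in the prompt, so it's easy to sketch. Below, `with_embeddings` prepends 'embedding:<name>' tokens to a prompt, and `missing_embeddings` mimics the kind of check behind that warning by looking for a matching file in your 'embeddings' folder. Both helpers are hypothetical, and the list of file extensions is my assumption about which ones ComfyUI tries. The real 'lazypos'/'lazyneg' filenames may include version suffixes, so match whatever civicomfy actually saved.

```python
from pathlib import Path

# Extensions assumed to be tried when resolving embedding:<name>.
EMBEDDING_EXTS = (".safetensors", ".pt", ".bin")


def with_embeddings(prompt: str, names: list) -> str:
    """Prepend 'embedding:<name>' tokens to a comma-separated prompt."""
    tokens = [f"embedding:{name}" for name in names]
    if prompt:
        tokens.append(prompt)
    return ", ".join(tokens)


def missing_embeddings(names: list, folder) -> list:
    """Names that don't match any file in the embeddings folder."""
    folder = Path(folder)
    missing = []
    for name in names:
        candidates = [folder / name] + [folder / (name + ext) for ext in EMBEDDING_EXTS]
        if not any(path.is_file() for path in candidates):
            missing.append(name)
    return missing
```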

If you'd like to follow along and get pretty much the same image as my example, here's the prompt I'm using:

DisneyStudioXL, cartoon, cinematic,

1girl, solo, portrait, sunlight, edge lighting, red silk dress, single side slit, exposed thigh, garter, black pantyhose, red high heel shoes, black gloves, beautiful face, detailed eyes, black hair, braids, hair decoration, low angle shot, full body shot, wind, outdoors

Next, we visit the generation control section:

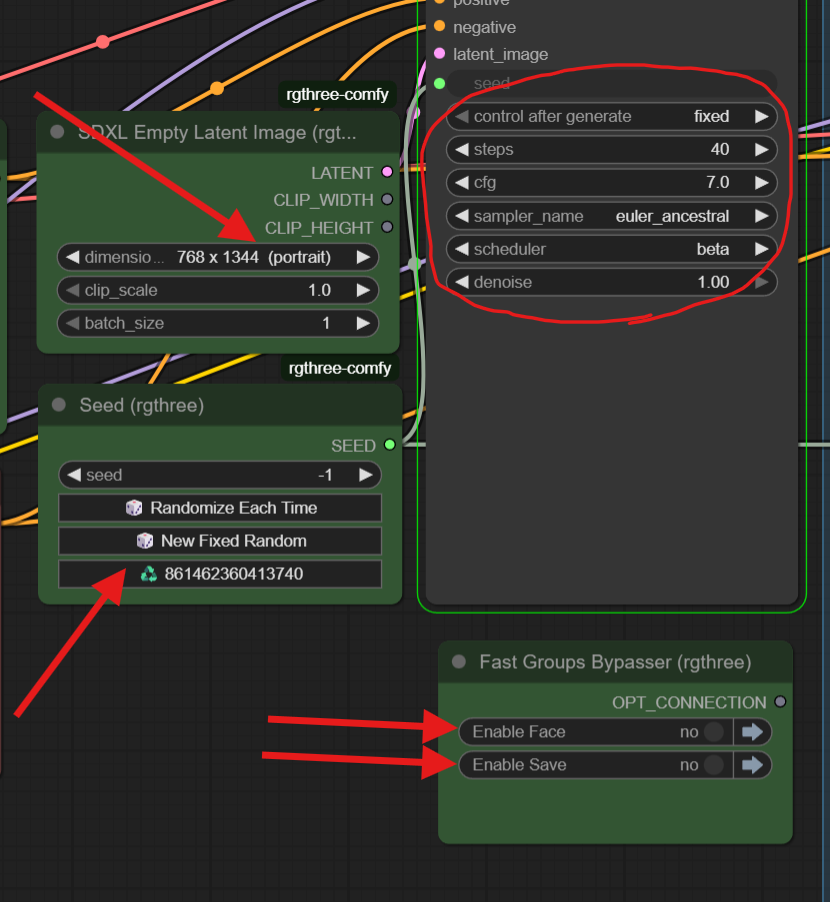

The 'SDXL Empty Latent Image' node controls the resolution (width and height) of the image you'll generate. It includes some good presets for SDXL-based models.
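
Why presets? SDXL-based models are trained around one megapixel (1024x1024 and other shapes with roughly the same pixel count), and the image is generated as a "latent" that's 8 times smaller in each direction with 4 channels, which the VAE decodes into pixels at the end. The sketch below lists commonly used SDXL resolutions (the node's own preset list may differ) and computes the empty latent's shape for a batch. The helper names are mine.

```python
# Commonly used SDXL resolutions (width, height), each close to 1024 * 1024 pixels.
SDXL_PRESETS = [
    (1024, 1024), (1152, 896), (896, 1152), (1216, 832), (832, 1216),
    (1344, 768), (768, 1344), (1536, 640), (640, 1536),
]


def latent_shape(width: int, height: int, batch: int = 1) -> tuple:
    """Shape of an empty SDXL latent: batch x 4 channels x (height/8) x (width/8)."""
    if width % 8 or height % 8:
        raise ValueError("width and height must be multiples of 8")
    return (batch, 4, height // 8, width // 8)


def closest_preset(aspect: float) -> tuple:
    """Preset whose width/height ratio is closest to the requested aspect ratio."""
    return min(SDXL_PRESETS, key=lambda wh: abs(wh[0] / wh[1] - aspect))
```

For example, a 9:16 portrait (`closest_preset(9 / 16)`) picks 768x1344, whose latent is 96 wide by 168 tall.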

The 'Seed (rgthree)' node controls the seed for the generation, which defines the randomness the model starts from. I use it because it lets me sync the same seed between nodes (like the sampler that generates the original image and the face detailer we'll use later). If the 'seed' field is '-1', a new random seed is generated each time. The node's three buttons work like this: the top button sets it back to choosing a new random seed each time, the middle button picks a new random number and keeps it fixed, and the bottom button fixes the seed to the last one generated. You can also click the 'seed' field and type in a specific seed.

If you're following along and want to try to get the same image I did, the seed I used is '923896740991536'.
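
The seed behavior described above can be sketched in a few lines. This is a toy model, not rgthree's code: '-1' means "pick a fresh random seed", any other value is used as-is, and the same seed always reproduces the same random numbers. That's why feeding one seed to both the sampler and the FaceDetailer keeps them in sync. Python's `random.Random` stands in for the real noise generator here, and the upper bound is an assumption about ComfyUI's 64-bit seed range.

```python
import random

MAX_SEED = 2**64 - 1  # assumed upper bound of ComfyUI's seed fields


def resolve_seed(seed: int, rng=None) -> int:
    """-1 means 'pick a new random seed'; anything else is kept as-is."""
    if seed == -1:
        if rng is None:
            rng = random.Random()
        return rng.randint(0, MAX_SEED)
    return seed


def toy_noise(seed: int, n: int = 4) -> list:
    """Stand-in for the starting noise: fully determined by the seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


seed = resolve_seed(-1)          # a new random seed for this run
sampler_noise = toy_noise(seed)  # both nodes get the same seed...
detailer_noise = toy_noise(seed)
assert sampler_noise == detailer_noise  # ...so they see the same randomness
```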

'Fast Groups Bypasser' is a set of switches that controls the 2 extra blocks I've added on to the end of the generation. 'Enable Face' will run your image through 'FaceDetailer', a set of nodes that detects faces in your image and refines them using your chosen models and LoRAs. The civitai.com on-site generator has a similar feature, and many SDXL-based models benefit from this, especially with wide-angle shots or multiple subjects, so I've made sure to include it in the workflow.

Finally, the red circle marks your sampler settings. You can find specific recommendations for some or all of these values on your chosen checkpoint's description page on civitai.com. The values I've included are ones I've found to produce good results across a wide variety of SDXL-based checkpoints.

Here's an example of FaceDetailer in action. Before you run your image generation, turn on the 'Enable Face' switch in the 'Fast Groups Bypasser' node.

Then generate your image. The image will generate, then the FaceDetailer block will detect and refine faces in it, finally putting both the original and updated images in the preview window (the big node named 'Image Comparer'). If you hover over the image, you'll be able to compare the 2 images (left side is final, right is original).

The final switch in the 'Fast Groups Bypasser' enables an automatic image save that places the generated image in your output directory on your Google Drive (I'll show you how to find that later). You can also save your image directly from the 'Image Comparer' node by right-clicking on the image and choosing 'Open Image'.

Your image will open in a new tab, and from there you can save it using your web browser's usual methods (I'm using Chrome here).

Step 5: Switching Models and Google Drive Management

If you've gotten this far, you've been able to generate an image and save it to your local computer. Awesome! Now you're probably itching to try out some other checkpoints, right? The problem is that Google Drive's free tier only allows 15GB of storage, and a single checkpoint is about 7GB, so we'll need to delete the old one before downloading and using a new one. Here's how. First, go back to the colab tab and find the folder icon along the left side of the window. This opens the file browser for your instance's storage.

Drill down in the file structure by clicking the arrows to expand 'drive' -> 'MyDrive' -> 'ComfyUI' -> 'models' -> 'checkpoints'. Here you'll find the files you downloaded using civicomfy. If you hover over one, a button with 3 dots ('...') will appear; click it, and you'll have the option to delete the file. Delete all the files named the same as the old model you don't want anymore. Once that's done, we have to empty the Trash: Google Drive doesn't delete files right away; it moves them to a 'Trash' folder that you then have to empty. Either go to 'https://drive.google.com' or, on the colab tab, click 'File' -> 'Locate in Drive'.

Once in Google Drive, find the 'Trash' folder along the left side and click on it.

Then find 'Empty Trash' along the top right and click it to permanently delete the files that were moved into the Trash, freeing up that space on your Google Drive for other models.

Sometimes the files you just deleted don't appear in the Trash right away, because there can be a delay between deleting big files and the change showing up on drive.google.com. If that happens, stop the 'Run ComfyUI' step in the colab window; the changes should then show up on drive.google.com, and you can empty the Trash and restart the 'Run ComfyUI' step.

Once you've got your trash emptied, you can download a different model by searching in the civicomfy window and downloading it just like before.

LoRAs you've downloaded are in the 'loras' folder inside that 'models' folder if you need to delete some of those. Embeddings go in the 'embeddings' folder inside the 'models' folder.
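
If you'd rather do this cleanup from a notebook cell, here's a minimal sketch. `folder_sizes` totals what each folder under 'models' is using, and `delete_model` removes every file whose name is the model's base name, optionally followed by a dot and more text (for example the '.safetensors' file plus any preview image or info file saved next to it). I'm assuming civicomfy names its extra files that way, so run it with `dry_run=True` first to see what it would delete. The path is the same assumed location as before. As far as I know, deleting through the mounted Drive still sends files to the Trash, so you'll still need to empty it as described above.

```python
from pathlib import Path

MODELS_ROOT = Path("/content/drive/MyDrive/ComfyUI/models")  # assumed location


def folder_sizes(models_root=MODELS_ROOT) -> dict:
    """Total bytes used by each subfolder (checkpoints, loras, ...)."""
    sizes = {}
    for sub in sorted(Path(models_root).iterdir()):
        if sub.is_dir():
            sizes[sub.name] = sum(f.stat().st_size for f in sub.rglob("*") if f.is_file())
    return sizes


def files_for_model(folder, model_name: str) -> list:
    """Files named exactly model_name, or model_name followed by a dot.
    'illustriousXL.safetensors' matches 'illustriousXL', but
    'illustriousXL_v2.safetensors' does not."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and (p.name == model_name or p.name.startswith(model_name + "."))
    )


def delete_model(folder, model_name: str, dry_run: bool = True) -> list:
    """Delete (or, with dry_run, just list) the files belonging to one model."""
    targets = files_for_model(folder, model_name)
    for path in targets:
        if dry_run:
            print("would delete", path)
        else:
            path.unlink()
    return targets
```

Matching on the base name plus a dot, rather than any name that merely starts with it, keeps a cleanup of one version from taking out a similarly named newer version.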

Finally, if you chose to enable automatic saving of images in the workflow, the images will be put in the 'output' folder inside the 'ComfyUI' folder.

You can download them from the file structure in the colab window, or find them on https://drive.google.com and download them from there. drive.google.com lets you select and download multiple files at once, so if you generated a bunch of images, it might be more convenient to get them from there and delete them once you've saved them.
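
Another option for a big batch is to bundle the 'output' folder into one zip from a notebook cell and download that. Here's a sketch, assuming the same Drive location as before: `shutil.make_archive` builds the zip, and `google.colab.files.download` asks your browser to download a file from the colab machine. The archive path is a made-up example; write it somewhere outside the output folder (here, colab's local '/content'), or the zip would end up including itself.

```python
import shutil
from pathlib import Path

OUTPUT_DIR = Path("/content/drive/MyDrive/ComfyUI/output")  # assumed location


def zip_outputs(output_dir=OUTPUT_DIR, archive_base="/content/comfyui_images") -> str:
    """Pack everything in output_dir into archive_base + '.zip'; returns the zip path."""
    return shutil.make_archive(str(archive_base), "zip", root_dir=str(output_dir))


def download(path: str) -> None:
    try:
        from google.colab import files  # only importable inside colab
    except ImportError:
        print("Not running in colab; the archive is at", path)
        return
    files.download(path)
```

In a colab cell, `download(zip_outputs())` would do both steps.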

That's really it - you should have everything you need to replace on-site generation with a free colab account!

One last little trick for you: if an image was generated in ComfyUI and the creator kept the generation metadata in the image, you can drag and drop the image itself into ComfyUI to load the exact workflow used to create it. You can try that with this copy of the image that I created using the settings I walked you through.
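
That drag-and-drop trick works because ComfyUI's save nodes write the workflow into the PNG file itself as text chunks (I believe under the keys 'prompt' and 'workflow'). The sketch below reads them back using only Python's standard library, which is handy for checking whether an image you found still carries its workflow before you drag it in. It only handles uncompressed 'tEXt' chunks and doesn't verify checksums. Images re-saved by other tools or sites often have this metadata stripped.

```python
import json
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_text_chunks(data: bytes) -> dict:
    """Collect the keyword -> text pairs stored in a PNG's tEXt chunks."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    texts = {}
    while pos + 8 <= len(data):
        # Each chunk: 4-byte length, 4-byte type, body, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        pos += 12 + length
        if chunk_type == b"tEXt":
            keyword, _, text = body.partition(b"\x00")
            texts[keyword.decode("latin-1")] = text.decode("latin-1")
        elif chunk_type == b"IEND":
            break
    return texts


def comfy_workflow(path):
    """Return the embedded ComfyUI workflow as a dict, or None if there isn't one."""
    with open(path, "rb") as f:
        texts = png_text_chunks(f.read())
    return json.loads(texts["workflow"]) if "workflow" in texts else None
```

If `comfy_workflow("some_image.png")` returns `None`, dragging that image into ComfyUI won't load a workflow either.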

Enjoy, and let me know in the comments how these instructions worked for you, or anything else!

Edit: As of 11-19-2025, Google Colab updated their runtime to include torch 2.9.0. Google seems to be a bit slow to adopt new torch versions, so the best thing we can do is let our notebook update torch when a new version becomes available (ComfyUI's dependencies specify the latest version of torch). This means the 'Install Dependencies' step will take about 5 extra minutes each time while Google still ships an older torch version, but everything should still work. I've updated the attached files (ComfyUI_SDXL.zip) to do exactly that.
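
The update logic boils down to a version comparison. Here's a rough sketch, not the notebook's actual code: read the installed torch version with `importlib.metadata`, drop the local build tag colab adds (something like '+cu126'), and compare the numeric parts with the version you want. If the installed one is older, the install step has to download a new torch, which is where the extra minutes go. Real installers use the `packaging` library's version parsing, which handles pre-releases properly; this toy parser just keeps the leading digits of each part.

```python
import re
from importlib import metadata


def parse_version(version: str) -> tuple:
    """'2.9.0+cu126' -> (2, 9, 0). Ignores the local tag after '+'."""
    core = version.split("+", 1)[0]
    numbers = []
    for piece in core.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


def installed_version(package: str = "torch"):
    """Installed version string, or None if the package isn't installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def needs_upgrade(installed, wanted: str) -> bool:
    """True if nothing is installed or the installed version is older."""
    if installed is None:
        return True
    a, b = parse_version(installed), parse_version(wanted)
    width = max(len(a), len(b))
    # Pad with zeros so '2.9' and '2.9.0' compare as equal.
    return a + (0,) * (width - len(a)) < b + (0,) * (width - len(b))
```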