⚠️ This guide is a work in progress — information may be added or changed over time.

⚠️ This guide is a proof of concept.

In the first part of the article ([⚡WIP DRAFT⚡] [PISSA] [SVD] Fast full finetune simulation at home on any GPU | Civitai), we examined a method for simulating full SDXL training by using decomposition and fine-tuning the extracted weights in the form of a LoRA with conv and linear layers. The method works with any prediction type and shows excellent fine-tuning results.

This article covers a different SVD/PISSA use case: fast conversion of an EPS SDXL checkpoint into a FLOW MATCH checkpoint.

Converting a full model runs into two problems:

1. Time cost — converting EPS → FLOW takes a long time for a full checkpoint (around 400,000–500,000 steps for a stable and coherent result).

2. Resource requirements — not everyone can afford training a full SDXL checkpoint.
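For orientation, the two objectives can be written as follows. This is the standard textbook form of noise prediction and of rectified-flow style flow matching. It is not taken from the training fork, whose exact conventions (sign, time direction) may differ.

$$
\text{EPS:}\quad x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\varepsilon,
\qquad \mathcal{L}_{\text{EPS}} = \lVert \varepsilon - \varepsilon_\theta(x_t, t) \rVert^2
$$

$$
\text{FLOW:}\quad x_t = (1-t)\,x_0 + t\,\varepsilon,
\qquad \mathcal{L}_{\text{FLOW}} = \lVert (\varepsilon - x_0) - v_\theta(x_t, t) \rVert^2
$$

Under this formulation the network has to relearn both what it predicts (a velocity instead of the noise) and the noising path it sees, which is presumably part of why a naive conversion needs so many steps.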

Main assumptions:

A strong optimizer gradient is required for fast conversion to “push” the model toward FLOW adaptation.

It is difficult to choose an appropriate LR for “static-speed” optimizers when the dataset is heterogeneous.

Training settings must suppress overfitting on the dataset.

The base model’s generalization must be preserved at a normal learning speed.

Based on these points, the optimizer must meet the following requirements:

It must adjust the LR automatically and behave stably on a heterogeneous dataset.

It must compute quickly (to further reduce total conversion time).

Only a small number of optimizers are suitable, among them:

Prodigy

Prodigy Plus (schedule-free)

DAdapt family (AdaGrad, Adam, SGD, Adan, Lion)

Adafactor

The original Prodigy is too resource-intensive, which makes it unsuitable.

Prodigy Plus (schedule-free) shows good results in test runs and includes multiple convergence-accelerating code improvements, but on a heterogeneous, complex, and noisy dataset it does not perform the task correctly. It does work for homogeneous datasets. Possibly the parameter space is overly complex, but out of ~30 test runs, only a few achieved a stable transition into the FLOW regime.

DAdapt family:

Adam - essentially behaves like an automatic Adam; stable, and fast if you choose the right d0: for example, 1e-5 is weak, 5e-5 is strong, and 1e-4 is very strong. Some kind of warmup may be needed.

Adan - good for large datasets and noisy data, but slow. An extremely strong gradient from the start destroys training (NaN).

AdaGrad - old and inefficient.

SGD - concerns about convergence and sensitivity to small datasets.

Lion - produces strong gradients and is fast.

Adafactor - not tested.

After partial test runs of these optimizers, DAdaptLion was selected.
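To illustrate why a Lion-type update is described above as strong and fast, here is a minimal sketch of a plain Lion step in pure Python. It is not the DAdaptLion implementation from pytorch_optimizer: the D-Adaptation estimate of d is left out, and the step size is simply assumed to start at d0 × learning_rate. The function name `lion_step` and the toy numbers are illustrative only.

```python
def lion_step(params, grads, momentum, lr, betas=(0.9, 0.99), weight_decay=0.0):
    """One plain Lion update on lists of floats (illustrative, not DAdaptLion)."""
    beta1, beta2 = betas
    new_params, new_momentum = [], []
    for p, g, m in zip(params, grads, momentum):
        # Interpolate momentum and gradient, then keep only the sign.
        c = beta1 * m + (1.0 - beta1) * g
        direction = (c > 0) - (c < 0)  # -1, 0 or +1
        # Every coordinate moves by exactly lr, whatever the gradient magnitude.
        new_params.append(p - lr * (direction + weight_decay * p))
        # The momentum buffer is updated with the second beta.
        new_momentum.append(beta2 * m + (1.0 - beta2) * g)
    return new_params, new_momentum


# Toy example: one tiny gradient and one huge gradient.
params = [1.0, -2.0]
grads = [0.001, -50.0]
momentum = [0.0, 0.0]

d0 = 1e-5            # assumed initial step-size estimate
learning_rate = 1.0  # multiplier on top of the estimate

new_params, new_momentum = lion_step(params, grads, momentum, lr=d0 * learning_rate)
step_sizes = [abs(a - b) for a, b in zip(new_params, params)]
print(step_sizes)  # both coordinates move by roughly d0
```

Because only the sign of the update is used, a weak gradient pushes a weight just as far as a strong one. That fits the observation that Lion converges quickly, and it is also why the initial d0 matters so much.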

A heterogeneous, extremely complex dataset was used, consisting of:

350 images

a clean class-token-only dataset without captions

a moderately noisy dataset for an enhanced concept with human-language style captions

a complex, messy dataset with SDXL-style captions

Test model for conversion: LUSTIFY! [SDXL NSFW checkpoint] - ENDGAME | Stable Diffusion XL Checkpoint | Civitai

The text encoder was not trained; an additional test run is needed.

To further increase task complexity, a new latent space was used: Kohaku's EQ VAE (https://huggingface.co/KBlueLeaf/EQ-SDXL-VAE). The idea is that a different latent space should, in practice, increase training time and require additional compute. Accordingly, if the test run succeeds under these conditions, training on the original VAE of the original EPS model will be even faster. EQ itself is also more precise.

Training settings:

accelerate launch --num_cpu_threads_per_process 8 sdxl_train_network.py ^
--pretrained_model_name_or_path="K:/Comfyui_033/ComfyUI/output/checkpoints/LUSTINULLdiff.safetensors" ^
--train_data_dir="MIX" ^
--output_dir="output_dir" ^
--output_name="MIX" ^
--network_args "algo=locon" "conv_dim=64" "conv_alpha=64" "preset=full" "train_norm=False" ^
--resolution="1024" ^
--save_model_as="safetensors" ^
--network_module="lycoris.kohya" ^
--shuffle_caption ^
--max_train_epochs=1000 ^
--save_every_n_steps=350 ^
--save_state_on_train_end ^
--save_precision=bf16 ^
--train_batch_size=1 ^
--gradient_accumulation_steps=1 ^
--max_data_loader_n_workers=1 ^
--mixed_precision="bf16" ^
--caption_extension=".txt" ^
--gradient_checkpointing ^
--network_dim=64 ^
--network_alpha=64 ^
--optimizer_type="pytorch_optimizer.optimizer.dadapt.DAdaptLion" ^
--optimizer_args "betas=(0.9, 0.99)" "weight_decay=0" "d0=1e-5" ^
--learning_rate=1 ^
--network_train_unet_only ^
--loss_type="fft" ^
--flow_matching ^
--flow_matching_objective="vector_field" ^
--flow_matching_shift=2.5 ^
--enable_bucket ^
--bucket_reso_steps=64 ^
--min_bucket_reso=768 ^
--max_bucket_reso=1280 ^
--xformers ^
--vae="K:/ComfyUI_p022/ComfyUI/models/vae/kohaku" ^
--network_weights="K:/Comfyui_033/ComfyUI/models/loras/train/ext_lust_fix.safetensors" ^
--full_bf16 ^
--mem_eff_attn ^
--lr_scheduler="constant" ^
--seed=1 ^
--no_metadata ^
--logging_dir="logs" ^
--log_with="tensorboard" ^
--persistent_data_loader_workers
pause

Training used an sd-scripts fork with FLOW capabilities for SDXL LoRAs: fork of sd-scripts
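The `--flow_matching_shift=2.5` value in the command is presumably a timestep shift of the kind used in SD3-style flow matching training. How the fork applies it is not shown in this article, so the formula below is an assumption rather than the fork's code, and the function name `shift_timestep` is hypothetical. The sketch only shows what such a shift does to uniformly spaced timesteps.

```python
def shift_timestep(t, shift):
    """Map t in [0, 1] to a shifted timestep (assumed SD3-style formula).

    shift = 1.0 leaves t unchanged; shift > 1.0 moves timesteps
    toward 1.0, i.e. toward the noisier end of the path.
    """
    return shift * t / (1.0 + (shift - 1.0) * t)


shift = 2.5  # value taken from --flow_matching_shift in the command
uniform = [i / 4 for i in range(5)]  # 0.0, 0.25, 0.5, 0.75, 1.0
shifted = [shift_timestep(t, shift) for t in uniform]

for t, s in zip(uniform, shifted):
    print(f"t = {t:.2f} -> shifted t = {s:.4f}")
```

If the fork follows this convention, a shift of 2.5 means that training spends more of its steps at high noise levels, where the global structure of the image is decided.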

The training ran for four epochs.
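For a sense of scale, the step count of this run can be estimated from the settings. This is a rough sketch that assumes the 350 images are the total across all three datasets and that each image is seen once per epoch (no dataset repeats); neither is stated explicitly, so treat the numbers as approximate.

```python
import math

images = 350               # dataset size (assumed total, one repeat each)
batch_size = 1             # --train_batch_size
grad_accum = 1             # --gradient_accumulation_steps
epochs_run = 4             # the run was stopped after four epochs
save_every_n_steps = 350   # --save_every_n_steps

# Optimizer steps per epoch and in total.
steps_per_epoch = math.ceil(images / (batch_size * grad_accum))
total_steps = steps_per_epoch * epochs_run

# Number of intermediate checkpoints written during the run.
checkpoints = total_steps // save_every_n_steps

# Compare with the lower bound quoted for a full-checkpoint conversion.
full_conversion_steps = 400_000
share = total_steps / full_conversion_steps

print(steps_per_epoch, total_steps, checkpoints)
print(f"{share:.2%} of a 400,000-step full conversion")
```

Under these assumptions one checkpoint is saved per epoch, and the whole run uses well under one percent of the steps quoted for a full-checkpoint conversion.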

Results:

1. The method basically works.

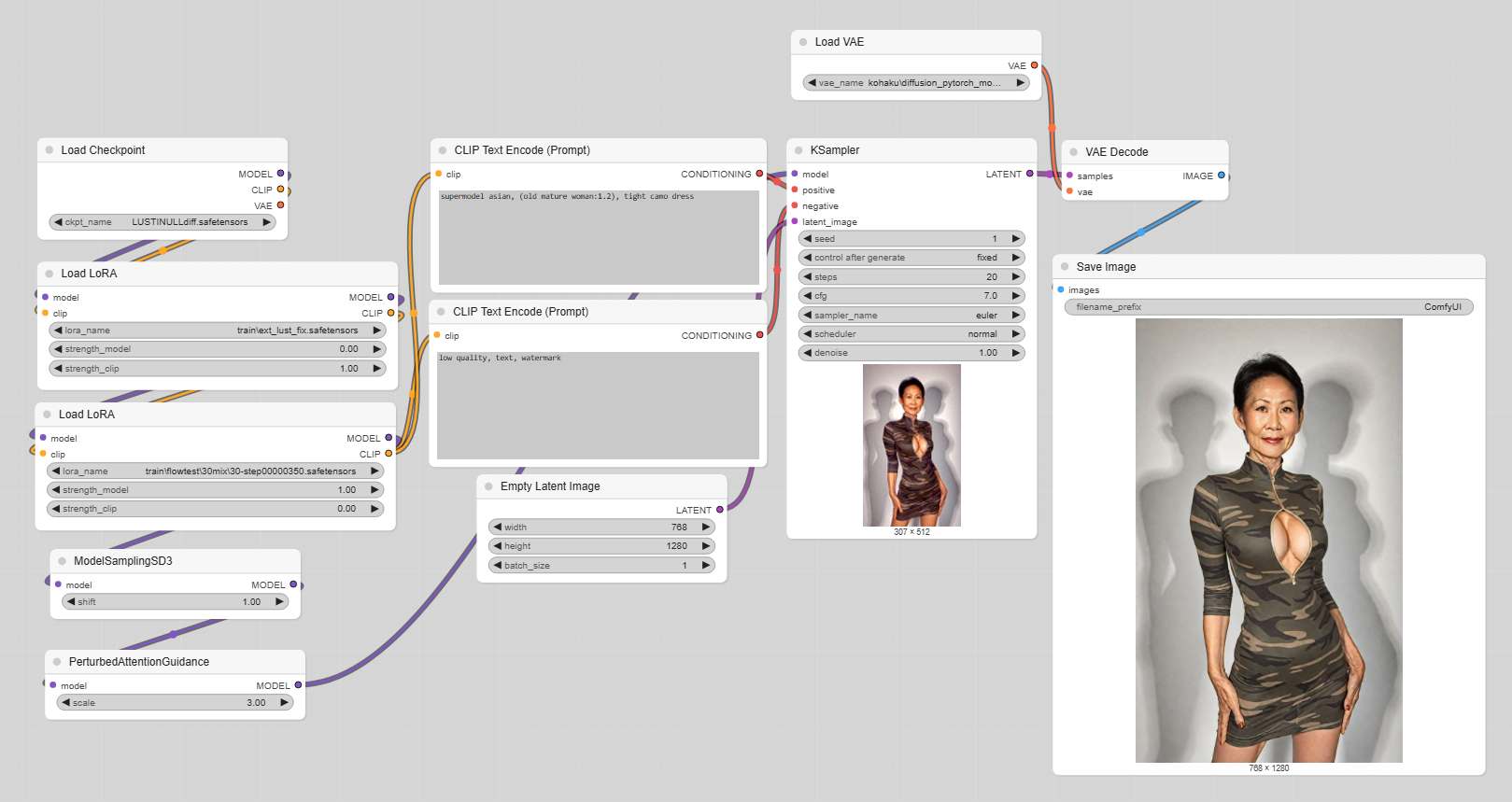

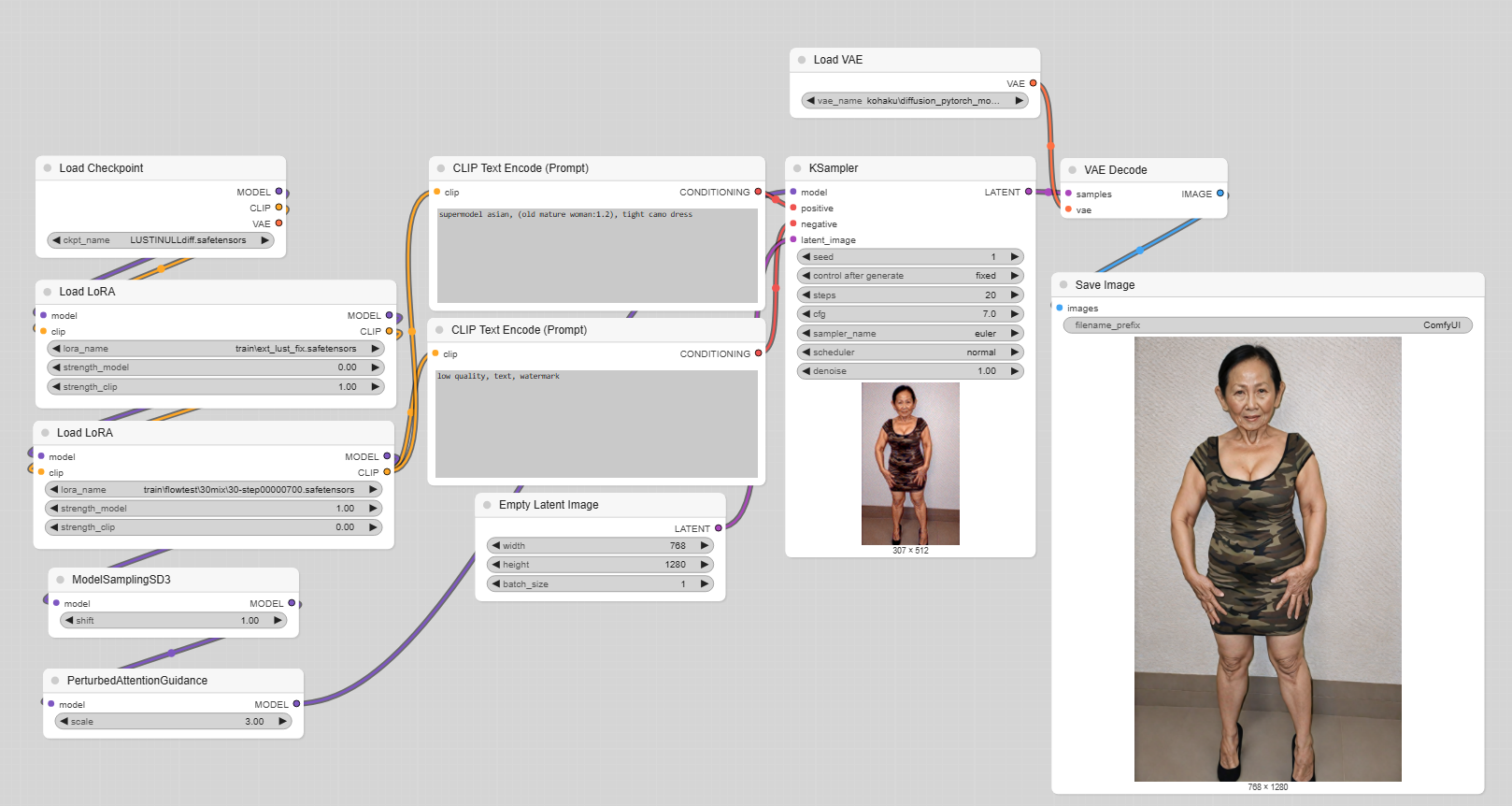

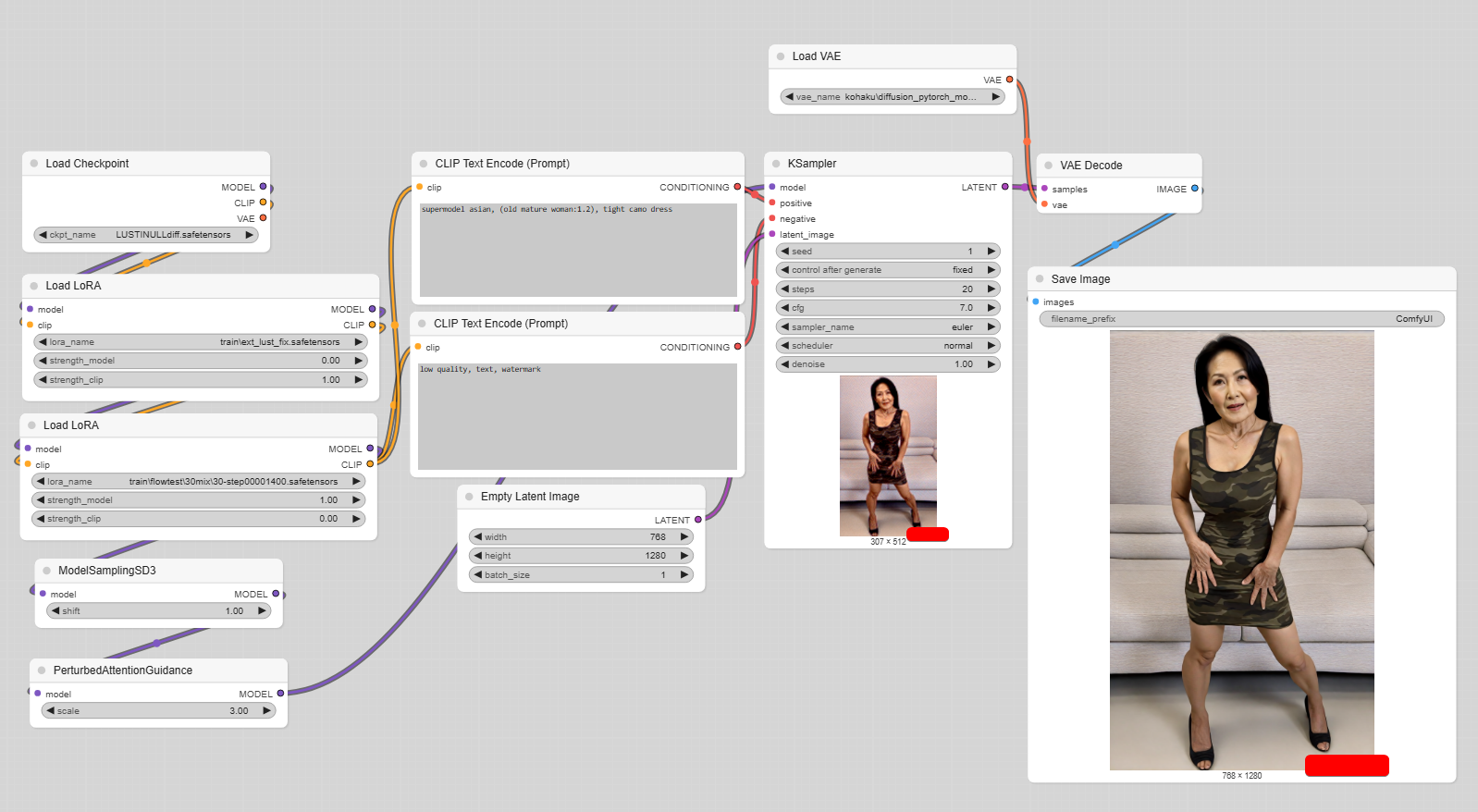

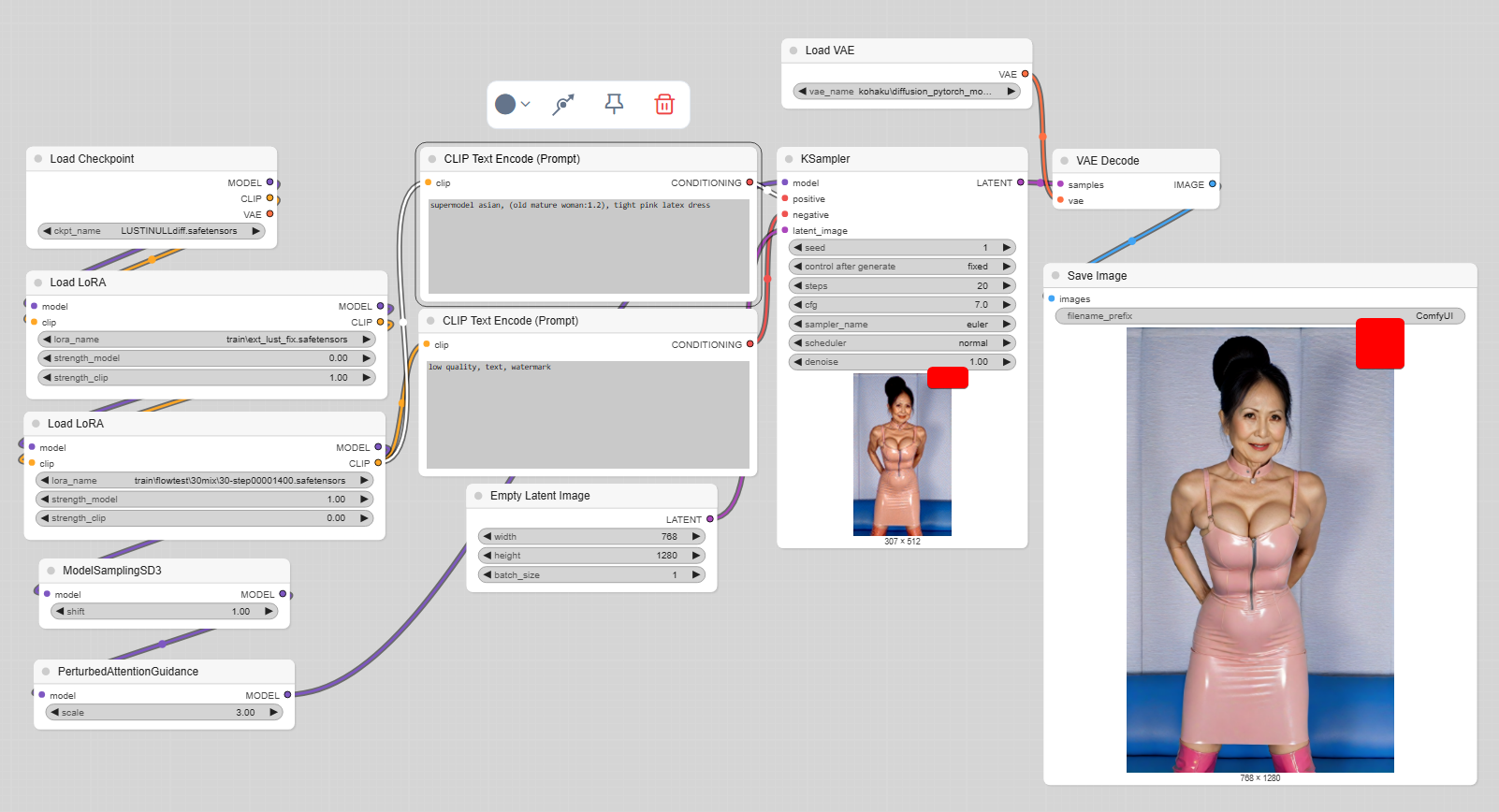

As a test prompt, we use:

"supermodel asian, (old mature woman:1.2), tight camo dress",

because overfitting can strongly push the model to forget basic concepts (e.g., mature woman), and the model can become tied to the dataset.

The first image shows the native model generation:

The next images show the FLOW results after each epoch:

In the last epoch, we can see watermark leakage from the dataset (one of the three datasets has strong watermarking) — visible under the red square shape.

This is not a big problem overall; it just means we need to tune some dropouts, reduce training speed, or add some augmentations.

2. Coherence is preserved. As an additional test, we changed the token:

3. The base knowledge is also preserved. As a test, we used a somewhat complex base SDXL prompt:

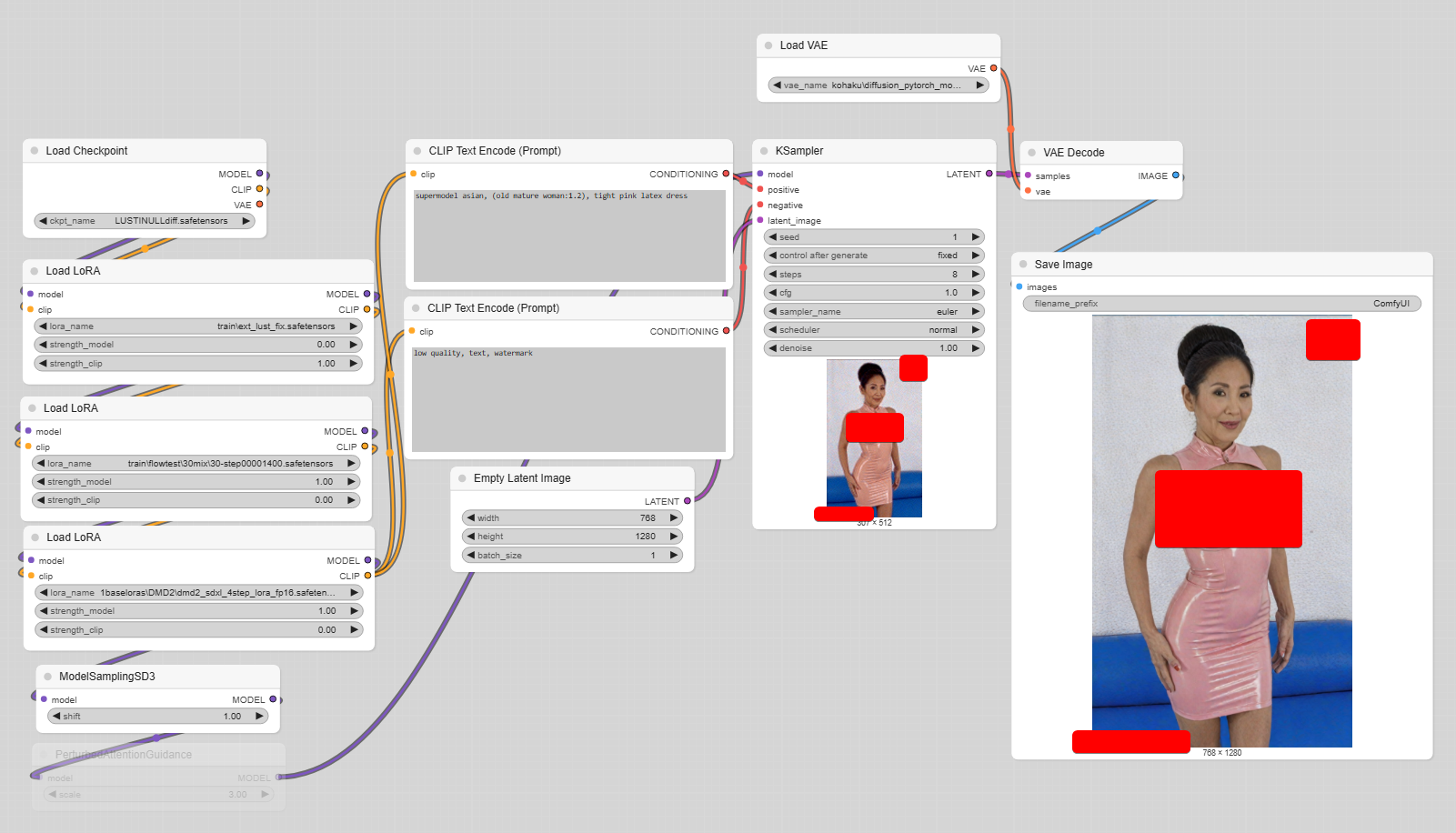

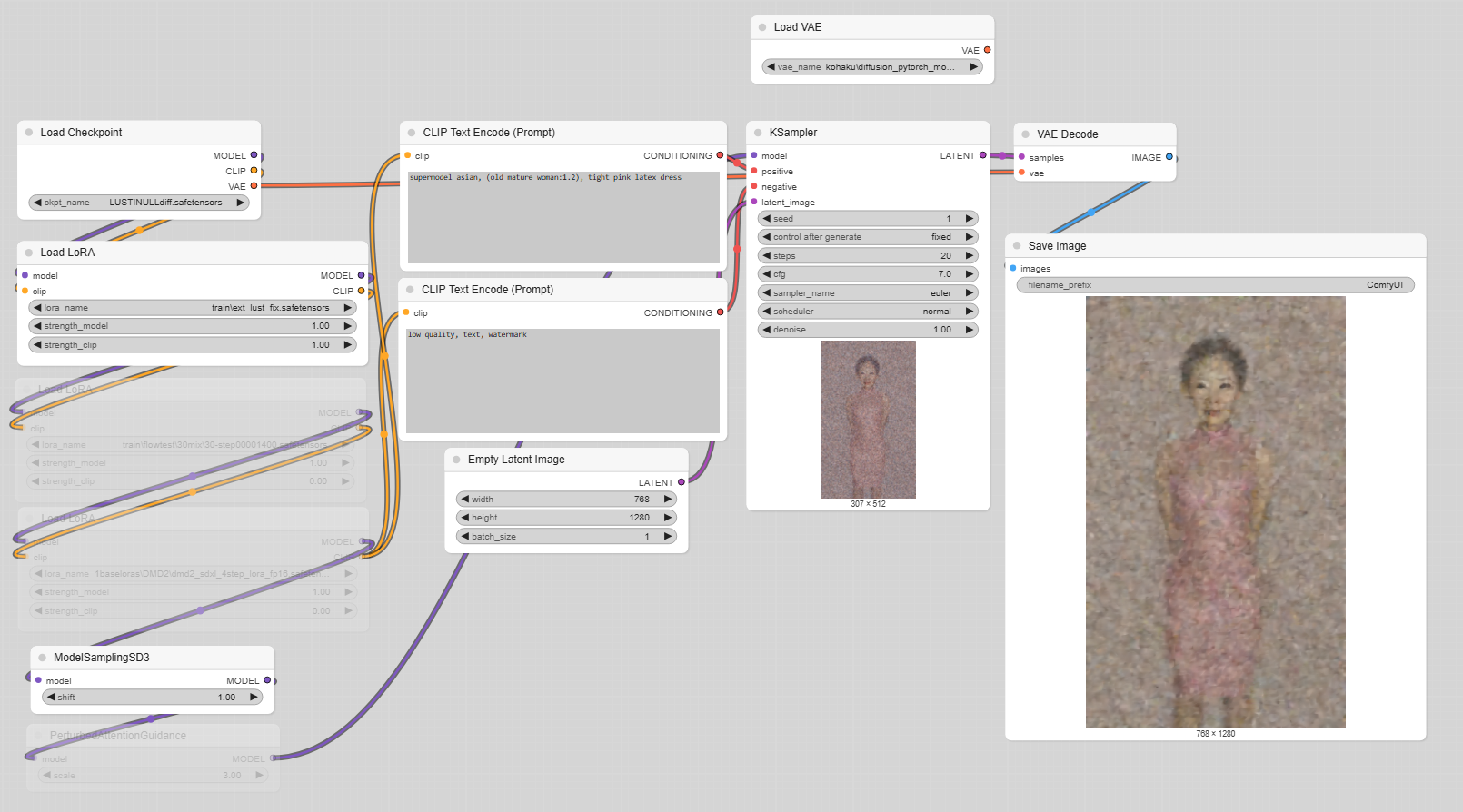

4. As a small bonus, the ability to run DMD2 on top of FLOW has been preserved:

LCM:

EULER (ooops, NSFW):

And to conclude, here is the proof that the resulting model behaves and is perceived as a FLOW model:

1. Original model under EulerDiscrete:

2. FLOW model without EulerDiscrete:

That's all for now.

Feel free to ask any questions in PM or in the comments.

The next task is to do the same with Pony V6. Converting a realistic model to FLOW is a fairly simple task even with a small dataset, but it's unclear how Pony will behave on a small dataset when used as the driver for the conversion.

![[⚡WIP DRAFT⚡] [PISSA] [SVD] SDXL EPS to FLOW MATCH convert (part 2) [PROOF OF CONCEPT]](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/1062995f-d035-4ffb-bfc4-ce12381e9a5d/width=1320/514028988629141.jpeg)