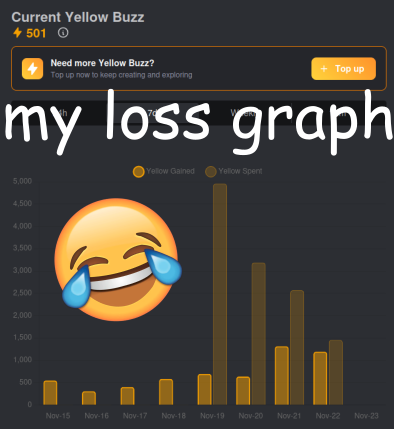

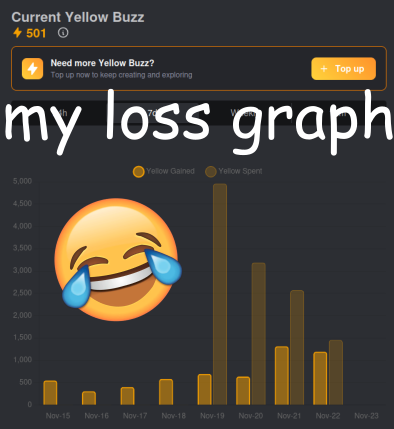

i spent 10000 buzz training the same lora over and over again so i could stop worrying about settings. i was tired of googling and getting told by some phd on reddit that X is better than Y because they used it to train llm checkpoints, when all i want is to train anime loras.

here are the best settings for training loras, in order of importance. each heading below means one of two things: "i trained the same lora with both settings (sometimes more than twice) and found no improvement big enough to be worth its downsides, so u shouldn't use it" OR "this setting performed obviously better than the other, so u should always use it." source: trust me bro.

upscale ur dataset

more pixels = more detail to train on. if ur dataset is mostly cropped or small images, u gotta upscale it bro.

realistic ESRGAN is better than anime ESRGAN at preserving details in photos, but anime ESRGAN makes images flatter, which keeps the style from drifting toward "realistic" (like noses appearing on my anime characters). both of them suck for comics and black-and-white images, so go find some specialized upscalers on https://openmodeldb.info/ if u need that.
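a minimal sketch for picking which upscaler scale to run on each image. it assumes u want the short side to reach ur training resolution (1024 here is a hypothetical default; use 512 for SD 1.5-style training) and that ur models come in 2x and 4x versions, which is common for ESRGAN-family upscalers but not guaranteed for every model on openmodeldb. it only decides the factor; actually running the upscaler is up to whatever tool u use.

```python
# Hypothetical target: the short side u want after upscaling (ur training resolution).
TARGET_SIDE = 1024

# Assumption: the upscalers u have are 2x and 4x models, smallest first.
MODEL_SCALES = (2, 4)


def upscale_factor(width: int, height: int, target: int = TARGET_SIDE) -> int:
    """Return the smallest available model scale that gets the short side to `target`.

    Returns 1 if the image is already big enough. If even the largest model
    falls short, returns the largest one anyway.
    """
    short_side = min(width, height)
    if short_side >= target:
        return 1
    needed = target / short_side
    for scale in MODEL_SCALES:
        if scale >= needed:
            return scale
    return MODEL_SCALES[-1]


for size in [(1200, 1600), (600, 800), (300, 400), (200, 200)]:
    print(size, "->", upscale_factor(*size), "x")
```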

cosine without restarts is better than cosine with restarts

cosine with restarts is bad. use cosine without restarts.
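to see what the restart actually does to the learning rate, here's a sketch of both schedules. the restart version is modeled on the hard-restarts formula in Hugging Face transformers (which kohya's sd-scripts uses for `cosine_with_restarts`), with warmup left out; the step counts and cycle count below are made up for illustration.

```python
import math


def cosine_lr(step: int, total_steps: int, base_lr: float) -> float:
    """Cosine decay from base_lr down to 0 over the whole run, no restarts."""
    progress = step / total_steps
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


def cosine_restarts_lr(step: int, total_steps: int, base_lr: float, num_cycles: int) -> float:
    """Cosine decay that jumps back to base_lr at the start of every cycle."""
    progress = step / total_steps
    if progress >= 1.0:
        return 0.0
    cycle_progress = (num_cycles * progress) % 1.0
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * cycle_progress))


# With 3 cycles over 1000 steps, the LR hits ~0 around step 333 and then
# snaps back to the max at step 334, while plain cosine keeps decaying smoothly.
for step in (0, 250, 333, 334, 500, 1000):
    print(step, f"{cosine_lr(step, 1000, 1e-4):.2e}", f"{cosine_restarts_lr(step, 1000, 1e-4, 3):.2e}")
```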

noise offset is bad for details

set noise offset to zero to improve details.
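for context, noise offset adds one extra random shift per latent channel on top of the normal training noise, scaled by the noise offset value; kohya's sd-scripts does roughly `noise + noise_offset * randn(batch, channels, 1, 1)`, and the civitai trainer is assumed to do the same. here's a pure-python sketch on one flattened latent (the toy sizes are made up). with offset 0 u just get plain gaussian noise back.

```python
import random


def sample_noise(channels: int, pixels: int, noise_offset: float, rng: random.Random) -> list[list[float]]:
    """Gaussian noise for one latent, flattened to channels x pixels.

    noise_offset > 0 adds one random shift per channel, identical for
    every pixel in that channel (like offset * randn(b, c, 1, 1)).
    """
    noise = [[rng.gauss(0.0, 1.0) for _ in range(pixels)] for _ in range(channels)]
    for c in range(channels):
        shift = noise_offset * rng.gauss(0.0, 1.0)
        noise[c] = [v + shift for v in noise[c]]
    return noise


# Same seed, so the only difference between the two is the per-channel shift.
plain = sample_noise(4, 8, 0.0, random.Random(0))
shifted = sample_noise(4, 8, 0.1, random.Random(0))
for c in range(4):
    print(f"channel {c}: shift = {shifted[c][0] - plain[c][0]:+.4f}")
```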

adafactor is better than adamw 8bit

adamw 8bit is way worse than adafactor. it's ridiculous. if u hear someone saying adamw is better, they're probably not talking about the 8bit version that civitai has.

don't train the text encoder

set the text encoder learning rate (TE LR) to zero to disable it in training, so only the UNet gets trained.
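on civitai this is just the TE LR field. if u run kohya's sd-scripts locally instead, `train_network.py` also has a dedicated `--network_train_unet_only` flag for this. the helper below is hypothetical (not part of sd-scripts), it just builds those flags, and the 1e-4 UNet LR is a placeholder, not a recommendation from this post.

```python
def unet_only_args(unet_lr: float) -> list[str]:
    """Hypothetical helper: sd-scripts train_network.py flags for a UNet-only LoRA."""
    return [
        "--network_train_unet_only",  # don't train the text encoder's LoRA weights
        f"--unet_lr={unet_lr}",       # learning rate for the UNet LoRA weights
    ]


print(" ".join(["python", "train_network.py", *unet_only_args(1e-4)]))
```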

disable min snr gamma for better details

min snr gamma should be zero (disabled) if ur training something with details, otherwise 5 is fine. values lower than 5, like 1, make the effect stronger, which will get u worse results. values higher than 5, like 20, make the effect so weak it's almost disabled.
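why lower = stronger: the usual min snr weighting (for epsilon prediction) multiplies each timestep's loss by min(SNR(t), gamma) / SNR(t), so only timesteps whose SNR is above gamma get down-weighted. this sketch assumes SD's default "scaled_linear" noise schedule (betas from 0.00085 to 0.012 over 1000 steps) and counts how many timesteps each gamma touches. treating gamma 0 as "weight 1 everywhere" mirrors setting it to zero in the trainer.

```python
import math


def sd_snr_table(num_steps: int = 1000, beta_start: float = 0.00085, beta_end: float = 0.012) -> list[float]:
    """SNR(t) = alpha_bar / (1 - alpha_bar) for a 'scaled_linear' beta schedule."""
    lo, hi = math.sqrt(beta_start), math.sqrt(beta_end)
    snrs, alpha_bar = [], 1.0
    for t in range(num_steps):
        beta = (lo + (hi - lo) * t / (num_steps - 1)) ** 2
        alpha_bar *= 1.0 - beta
        snrs.append(alpha_bar / (1.0 - alpha_bar))
    return snrs


def min_snr_weight(snr: float, gamma: float) -> float:
    """Loss weight min(SNR, gamma) / SNR; gamma <= 0 means disabled (weight 1)."""
    if gamma <= 0:
        return 1.0
    return min(snr, gamma) / snr


snrs = sd_snr_table()
for gamma in (1, 5, 20):
    affected = sum(1 for s in snrs if s > gamma)
    print(f"gamma={gamma}: {affected} of {len(snrs)} timesteps get down-weighted")
```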

32/32 dim/alpha is better than 32/16 dim/alpha

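always use the same alpha as the dim to disable the effect that alpha has on the learning rate. the lora's output gets multiplied by alpha/dim, so 32/32 means a scale of 1 and 32/16 means everything the lora learns gets cut in half.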
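here's the alpha/dim scale in a toy lora forward pass: the lora adds (alpha / dim) * B(Ax) to the layer's output, like kohya's LoRA modules do. the matrices and the dim of 2 are made up to keep it small; the point is only that alpha = dim gives a scale of 1 and alpha = dim/2 halves the lora's contribution.

```python
def lora_delta(x: list[float], A: list[list[float]], B: list[list[float]], dim: int, alpha: float) -> list[float]:
    """LoRA's added output for input x: (alpha / dim) * B @ (A @ x).

    A is dim x in_features (down-projection), B is out_features x dim (up-projection).
    """
    scale = alpha / dim
    h = [sum(a * xi for a, xi in zip(row, x)) for row in A]              # down-project to rank `dim`
    return [scale * sum(b * hi for b, hi in zip(row, h)) for row in B]   # up-project and scale


x = [1.0, 2.0, 3.0]
A = [[0.1, 0.0, 0.2], [0.0, 0.3, 0.1]]  # dim=2, in_features=3
B = [[1.0, 0.5], [0.2, 0.4]]            # out_features=2, dim=2

full = lora_delta(x, A, B, dim=2, alpha=2)  # alpha == dim  -> scale 1.0 (like 32/32)
half = lora_delta(x, A, B, dim=2, alpha=1)  # alpha == dim/2 -> scale 0.5 (like 32/16)
print(full, half)
```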

cosine is better than constant for details

u can train with constant if u want, thanks to the way adafactor scales the learning rate, but the cosine schedule makes details better.
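civitai doesn't say which adafactor options it uses, so this sketch assumes the Hugging Face transformers Adafactor (the one kohya's sd-scripts wraps) and copies how it computes the step size. with `scale_parameter` on, the step gets multiplied by each weight's RMS, and with `relative_step` on, ur learning rate is ignored and the step decays like 1/sqrt(step) on its own. that's a guess at what "the way adafactor scales learning rate" refers to, not something confirmed for civitai's trainer.

```python
import math


def adafactor_step_size(lr: float, step: int, param_rms: float, relative_step: bool = False,
                        scale_parameter: bool = True, warmup_init: bool = False,
                        eps2: float = 1e-3) -> float:
    """Step size following transformers' Adafactor._get_lr (step counts from 1)."""
    rel_step_sz = lr
    if relative_step:
        # lr is ignored: step size comes from the step count instead
        min_step = 1e-6 * step if warmup_init else 1e-2
        rel_step_sz = min(min_step, 1.0 / math.sqrt(step))
    # scale by the weight's RMS (floored at eps2) so big and small weights move proportionally
    param_scale = max(eps2, param_rms) if scale_parameter else 1.0
    return param_scale * rel_step_sz


# Same constant lr, different weights: the absolute step tracks each weight's size.
print(adafactor_step_size(1e-4, 100, param_rms=0.02))
print(adafactor_step_size(1e-4, 100, param_rms=0.5))
# relative_step: the lr argument doesn't matter, the step count does.
print(adafactor_step_size(1e-4, 40000, param_rms=1.0, relative_step=True))
```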

batch size 4 is better than batch size 1

if u see a phd on reddit telling u that batch size 1 is better than batch size 4, don't listen to him. a bigger batch size is always better. can't believe i had to waste 500 buzz just to forget what i read on reddit.
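one thing to keep in mind when comparing batch sizes: with the same images, repeats and epochs, batch size 4 does a quarter of the optimizer steps, and each step averages over 4 images. the numbers below (40 images, 5 repeats, 10 epochs) are made up, and the formula is the usual images * repeats / batch size per epoch, rounded up, ignoring any bucketing quirks in the trainer.

```python
import math


def steps_per_epoch(num_images: int, repeats: int, batch_size: int) -> int:
    """Optimizer steps per epoch: every image seen `repeats` times, `batch_size` per step."""
    return math.ceil(num_images * repeats / batch_size)


def total_steps(num_images: int, repeats: int, batch_size: int, epochs: int) -> int:
    """Optimizer steps for the whole run."""
    return steps_per_epoch(num_images, repeats, batch_size) * epochs


for batch_size in (1, 4):
    print(f"batch size {batch_size}: {total_steps(40, 5, batch_size, 10)} steps")
```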