🍥Workflow Link

≈➤ Use the Table of Contents on the right of this page to jump to different sections (desktop view only)

⌨️Usage

Select t2v or i2v/FFLF Mode.

t2v: Adjust resolution for video

i2v: Drag and drop an image into the 1st image loader, then choose whether to manually scale the image down or disable scaling to use the original image dimensions.

FFLF: Enable i2v mode and enable FFLF, then drag and drop images into the 1st & 2nd image loaders. Choose whether to manually scale the images down or disable scaling to use the original image dimensions (the 1st image's dimensions will be used).

Edit/Add 🍏Prompts, add ✨Loras if needed.

Adjust ⌚Duration or just leave it at 5 seconds.

Set 🚶➡️Total Steps & 👞Split Steps. (4 Total/ 2 Split for Fast, 6 Total/ 3 Split for Good Quality, 8 Total/ 4 Split for Higher Quality)

Click on "New Fixed Random" in 🎲Seed Node.

Generate Video (▷RUN).

Repeat steps 4 & 5 until you get the desired video shown in 🎥Preview.

While using the same 🎲Seed and without changing anything outside 🛠️Post Processing, change/edit the 🛠️Post Processing options and Generate Video (▷RUN) again as many times as you like. The KSamplers will be skipped.

Generated videos will be in your output folder - 🗂️ComfyUI/Output

🌀To start on a new video project, disable everything in 🛠️Post Processing and set 📺Final Video Mode to 🎥Preview.

💡If you have lower VRAM

Click on the "ComfyUI icon" for the menu

Go to "Settings"

On the left bar, go to "Server-Config"

Scroll to "Memory"

Look for "VRAM management mode" option

Select "lowvram"

Restart ComfyUI

🧩Installing Custom Nodes

Load workflow into ComfyUI.

Click on "🧩Manager" in ComfyUI.

Click on "Install Missing Custom Nodes".

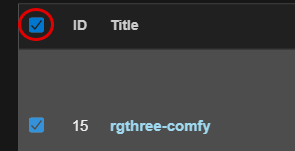

Check the Box on the top right to select all missing custom nodes.

Click on "Install" at the bottom. Wait for installation to be done and restart ComfyUI.

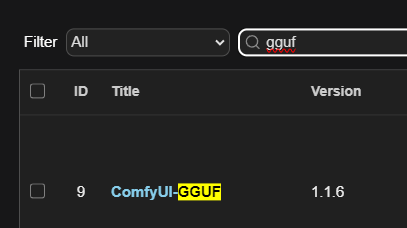

⚠️ComfyUI-GGUF might not show up in "Install Missing Custom Nodes":

Click on "Custom Nodes Manager".

Type "gguf" in the search bar and it will show up. Install it, and restart ComfyUI.

🧩Custom Nodes Used:

ComfyUI-GGUF (Manually search for this in the Custom Nodes Manager and install it if it does not appear in “Install Missing Custom Nodes”)

rgthree-comfy

ComfyUI-Easy-Use

ComfyUI-KJNodes

ComfyUI-VideoHelperSuite

ComfyUI-essentials

ComfyUI-Frame-Interpolation

ComfyUI-mxToolkit

WhiteRabbit

ComfyUI-MMAudio (only for workflows that use it)

🎼Installing ComfyUI-MMAudio (for WF with 🎼MMAudio)

1. Git Clone

Go to your ComfyUI custom nodes directory.

🗂️ ComfyUI\custom_nodes

Windows:

Git clone the ComfyUI-MMAudio into the directory.

Right-click in the directory and select "Open Terminal"

Enter:

git clone https://github.com/kijai/ComfyUI-MMAudio.git

2. Installing

For Desktop:

Open ComfyUI,

Click on "🧩Manager" in ComfyUI.

Click on "Install Missing Custom Nodes".

Select "Try Fix" on ComfyUI-MMAudio.

Restart ComfyUI.

For Portable:

Go to your "python_embeded" folder.

Right-click in the directory and select "Open Terminal"

Enter:

python.exe -m pip install -r ComfyUI\custom_nodes\ComfyUI-MMAudio\requirements.txt

🎼ComfyUI-MMAudio Models + Download Link below

(Optional) You can install the ComfyUI-Crystools custom node in the 🧩Manager (it adds a real-time graph in ComfyUI to monitor the % usage of CPU, GPU, RAM, and VRAM)

1. 📲Models / Distil Loras Loaders + Links

⚠️⚠️⚠️GGUF Model Selection: (Based on your VRAM)

🔵 24 GB VRAM or more: Q8_0 - (Higher Quality, Big Size)

🟢 16 GB VRAM: Q5_K_M - (Mid Quality, Mid Size) - commonly used by many

🟠 12 GB VRAM or less: Q3_K_S - (Lower Quality, Small Size)
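The tiers above can be read as a simple lookup. The sketch below is only an illustration: the function name `pick_gguf_quant` is hypothetical, and the handling of in-between cards (for example 20 GB falling back to the 16 GB tier) is an assumption, since the guide only lists three reference points.

```python
def pick_gguf_quant(vram_gb: float) -> str:
    """Map available VRAM (in GB) to one of the three quant levels listed above.

    Assumption: a card that sits between two tiers uses the lower tier.
    """
    if vram_gb >= 24:
        return "Q8_0"    # higher quality, big size
    if vram_gb >= 16:
        return "Q5_K_M"  # mid quality, mid size
    return "Q3_K_S"      # lower quality, small size


for vram in (24, 16, 12, 8):
    print(f"{vram} GB VRAM -> {pick_gguf_quant(vram)}")
```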

(If you intend to use only i2v, set the t2v model in 8. & 9. to the same i2v model to prevent errors. You don't have to download both i2v and t2v models. The same applies to the ⚡️Distill Loras.)

1. 📈I2V High Noise GGUF Model

2. 📉I2V Low Noise GGUF Model

🗂️: ComfyUI/models/unet

For Non-GGUF workflow:

1. 📈I2V High Noise Model

2. 📉I2V Low Noise Model

🗂️: ComfyUI/models/diffusion_models

-

3. ⚡️I2V High Noise Lightx2v Distill Lora

4. ⚡️I2V Low Noise Lightx2v Distill Lora

🗂️: ComfyUI/models/loras

-

5. 🧷Clip - umt5_xxl_fp8_e4m3fn_scaled

🗂️: ComfyUI/models/text_encoders

-

6. 🌸Vae - wan2.1_vae (yes, wan2.2 uses wan2.1 vae)

🗂️: ComfyUI/models/vae

-

(A 2x upscale model is recommended. You can still use a 4x upscale model, but upscaling might take longer.)

7. 📐2x Upscaler Models

🗂️: ComfyUI/models/upscale_models

-

(If you intend to use only t2v, set the i2v model in 1. & 2. to the same t2v model to prevent errors. You don't have to download both i2v and t2v models. The same applies to the ⚡️Distill Loras.)

8. 📈T2V High Noise GGUF Model

9. 📉T2V Low Noise GGUF Model

🗂️: ComfyUI/models/unet

For Non-GGUF workflow:

8. 📈T2V High Noise Model

9. 📉T2V Low Noise Model

🗂️: ComfyUI/models/diffusion_models

-

10. ⚡️T2V High Noise Lightx2v Distill Lora

11. ⚡️T2V Low Noise Lightx2v Distill Lora

🗂️: ComfyUI/models/loras

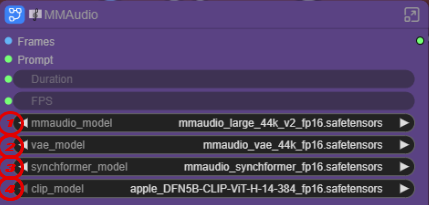

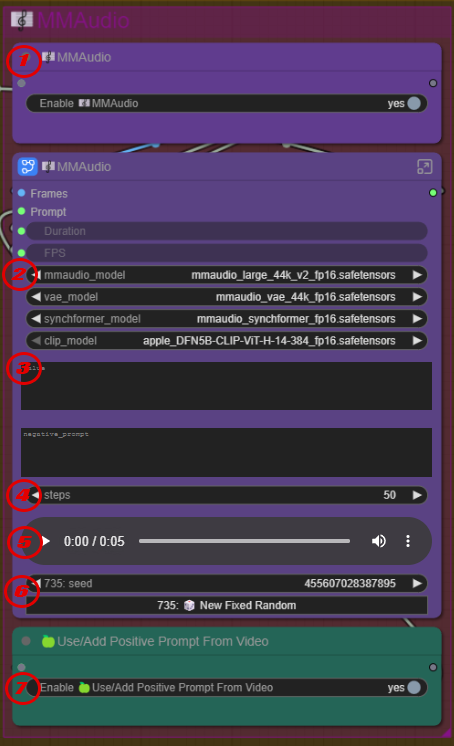

🎼MMAudio Models + Download Link (for WF with 🎼MMAudio)

Press "F5" in the workflow and you will get transported to the 🎼MMAudio Node.

For 🛠️Post Processing Workflow, go to the 🎼MMAudio Node on the right of the workflow.

⚠️Create a folder named "mmaudio" inside the "models" folder and put all the files in there.

🗂️: ComfyUI/models/mmaudio

1. mmaudio_model (You can get either or both. I use both and switch depending on what audio I want.)

SFW Model (Not so good at NSFW Stuff)

NSFW Model (Not so good at SFW Stuff)

2. vae_model

3. synchformer_model

4. clip_model

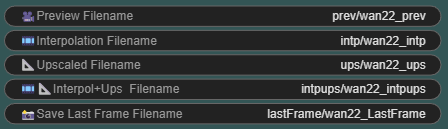

2. 🗂️ Filenames

Here you can set the video output filenames. Adding a "/" will create a folder.

With the default example here, 🎞️Interpolation videos will be saved into "🗂️:ComfyUI/output/intp/" and the filename will be "wan22_intp_00001". ComfyUI automatically appends a counter to the end of the filename.

All generated videos will be saved into 🗂️:ComfyUI/output.
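To illustrate how a filename prefix containing "/" turns into a subfolder plus a numbered file, here is a minimal sketch. The helper `output_path`, the `.mp4` extension, and the way the counter is passed in are assumptions made for the example; the actual naming is handled by the save node in the workflow.

```python
from pathlib import PurePosixPath


def output_path(prefix: str, counter: int,
                output_dir: str = "ComfyUI/output",
                ext: str = ".mp4") -> PurePosixPath:
    """Build the expected save location for a filename prefix.

    Everything before the last "/" in the prefix becomes a subfolder;
    the last part becomes the filename, followed by a 5-digit counter.
    The extension is an assumption and depends on the chosen video format.
    """
    full = PurePosixPath(output_dir) / prefix
    return full.parent / f"{full.name}_{counter:05d}{ext}"


print(output_path("intp/wan22_intp", 1))
```

A prefix without "/" simply lands directly in the output folder.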

3. 🚨Sage Attention

-🚨Sage Attention - Enable Sage Attention to boost generation speed.

Click here for a guide to install Triton and Sage Attention by @UmeAiRT.

I have forgotten how I got them installed as it was too long ago, so I may not be able to help.

4. 📀t2v 💿i2v Mode

4a. 📀t2v - 💿i2v Switch

Switch between (i2v/First Frame Last Frame) or (t2v)

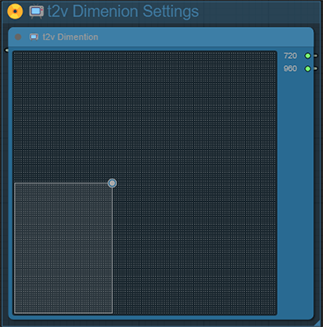

4b. 📀📺t2v Dimension Settings

Adjust the dot to set width and height for t2v video resolution.

⚠️Higher resolution images will require more time and memory to generate video.

4c. 💿🖼️🌆i2v/FFLF Settings

1 - 💿📏🌆i2v Settings

- 📏Manual Scale Image - Enable/disable manual scaling of the image to a target megapixel value. Disable to use the original image dimensions.

- 🏙️🌆First Frame Last Frame - Enable/disable the 2nd image input at 4 for First Frame Last Frame video.

2 - 📏Manual Scale Image to MegaPixels (When Enabled in 1)

- It's better to downscale your input image than to upscale it for better quality.

Example: 1440x1920 images are too large for me to generate video, so I scale them down to 0.66 megapixel.

- You can use the Calculate Megapixel Workflow to calculate your image dimensions in megapixels.

(e.g. 1440x1920 at 0.66 megapixel ≈ 720x960).

If your image is already at the target megapixel value, its dimensions will barely change.

Example: input image 720x960, input megapixel 0.66, dimension used is still 720x960

- Since input images come in various dimensions, scaling them all to a fixed megapixel value keeps generation time more consistent, without the need to keep adjusting or figuring out which dimensions to use.

⚠️This is only for i2v.
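The megapixel arithmetic above can be checked with a few lines of Python. This is a sketch, not the node's actual code: it assumes one megapixel means 1024 x 1024 pixels (which matches the 720x960 ≈ 0.66 figure used in this guide) and that both sides are scaled by the same factor and rounded. The function names are made up for the example.

```python
import math

# Assumption: 1 megapixel = 1024 * 1024 pixels, which reproduces 720x960 ~ 0.66.
PIXELS_PER_MEGAPIXEL = 1024 * 1024


def megapixels(width: int, height: int) -> float:
    """Return the image size in megapixels."""
    return width * height / PIXELS_PER_MEGAPIXEL


def scale_to_megapixels(width: int, height: int, target_mp: float) -> tuple[int, int]:
    """Scale both sides by the same factor so the area matches target_mp."""
    scale = math.sqrt(target_mp * PIXELS_PER_MEGAPIXEL / (width * height))
    return round(width * scale), round(height * scale)


print(round(megapixels(720, 960), 2))         # size of a 720x960 image
print(scale_to_megapixels(1440, 1920, 0.66))  # large image scaled down
print(scale_to_megapixels(720, 960, 0.66))    # already at target, barely changes
```

Under these assumptions the scaled height comes out as 961 rather than 960, which is the kind of off-by-one value that the divisible-by-16 resize mentioned in this section cleans up.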

3 - 🖼️First Image

- Drag and drop your image here for i2v. Your image dimension will be displayed at the bottom of the node.

4 - 🌆Last Image (when enabled in 1)

- Drag and drop your image here for First Frame Last Frame Video. Your image dimension will be at the bottom of the node.

⚠️All image dimensions will be automatically resized to be divisible by 16 to prevent errors in video generation (e.g. 961 to 960).

⚠️Higher resolution images will require more time and memory to generate video.
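The divisible-by-16 rule can be pictured as snapping each side to a multiple of 16. The sketch below assumes the value is rounded down, which agrees with the 961 to 960 example; whether the workflow rounds down or to the nearest multiple is not stated, and `snap_to_multiple` is a hypothetical helper.

```python
def snap_to_multiple(value: int, multiple: int = 16) -> int:
    """Round a dimension down to the nearest multiple (never below one multiple).

    Assumption: rounding goes down, as in the 961 -> 960 example.
    """
    return max(multiple, (value // multiple) * multiple)


for side in (961, 960, 720, 1000):
    print(side, "->", snap_to_multiple(side))
```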

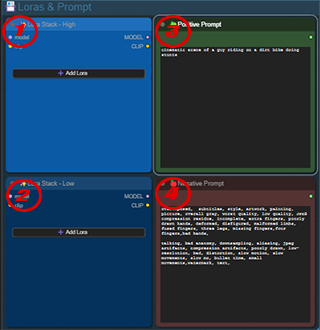

5. 📇Loras & Prompt

Wan2.2 uses 2 models to generate videos: High Noise and Low Noise. Naturally, Loras exist for both High and Low Noise, with some exceptions.

1 - ✨Lora Stack - High

- Add High Noise Loras

Some Loras may only have a High Noise version.

2 - ✨Lora Stack - Low

- Add Low Noise Loras

3 - 🍏Positive Prompt

- Positive Prompt - Describe the scene, actions, motion etc. of the video.

4 - 🍎Negative Prompt

- Negative Prompt - Describe things you don't want in the video.

Since we are using the ⚡️Distill Lora for low-step generation, we have to set 🏵️CFG to 1. As a result, the 🍎negative prompt is almost ignored and has little to no effect. 🔮WanNAG is used in this workflow to restore and strengthen the effect of the 🍎Negative Prompt.

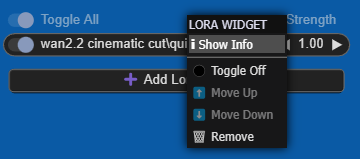

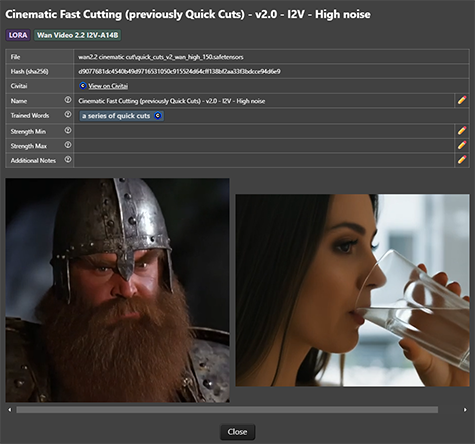

💡Tips On Lora Stack Loader

After adding a Lora in the Stack, right click on it and an option will show like below:

Click on "ℹ️Show Info" and an interface will show up:

If the Lora is downloaded from CivitAI, you can click on the "Fetch info from CivitAI" to get more info from the Lora such as trigger words, link to the lora page on CivitAI and also example videos/images uploaded to that Lora page with prompt examples, if any.

⚠️‼️(NSFW videos and images from the Lora page are not blocked and will appear here when the info is fetched)

Example:

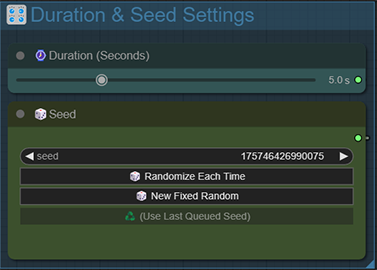

6. 🎛️Duration & Seed Settings

⌚Duration (Seconds)

Set the duration of the video. (Calculation: 16 x Duration + 1 frame; 5 sec = 81 frames)

Anything longer than 5 seconds will cause the Wan video to bounce back in i2v in most cases. You can use FFLF with a desired end frame to try to counter this for videos longer than 5 seconds.

⚠️Longer durations will require more time and memory to generate the video.
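The frame calculation above is simple enough to check by hand, but a small sketch makes it easy to try other durations. The constant name `BASE_FPS` and the function name are made up for the example; the formula itself is the one given in this section.

```python
BASE_FPS = 16  # Wan's native frame rate, as used in the formula above


def frame_count(duration_seconds: int) -> int:
    """Number of frames generated: 16 frames per second plus 1 starting frame."""
    return BASE_FPS * duration_seconds + 1


for seconds in (3, 5, 8):
    print(f"{seconds} sec -> {frame_count(seconds)} frames")
```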

🎲Seed

🎲Randomize Each Time

Randomizes the seed number every time you Generate a Video (▷RUN).

Useful when generating a batch of 🎥Preview videos, then selecting the desired video and doing 🛠️Post Processing later.

Or for generating batch videos with fixed 🛠️Post Processing options.

🎲New Fixed Random

Keeps the seed number fixed every time you Generate a Video (▷RUN).

Useful for repeating 🛠️Post Processing edits (skips past pre-processing such as the KSamplers, if no inputs are changed during video generation).

♻️(Use Last Queued Seed)

When 🎲Randomize Each Time is used, a seed number will appear here after video generation. Click on it to reuse the seed number of the generated video.

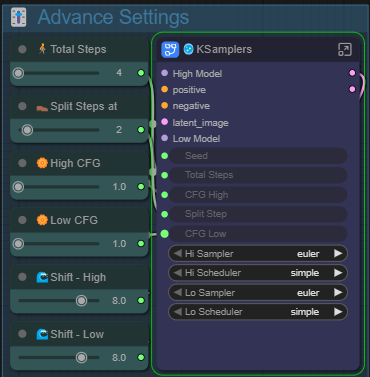

7. 🎚️ Advanced Settings

🚶➡️Total Steps + 👞Split Steps at

(Generally 👞Split Steps = 🚶➡️Total Steps / 2)

Fast: 4 🚶➡️Total Steps - 2 👞Split Steps

Good Quality: 6 🚶➡️Total Steps - 3 👞Split Steps

Higher Quality: 8 🚶➡️Total Steps - 4 👞Split Steps

(More steps = more time to generate video)

🏵️High CFG + 🏵️Low CFG (High and Low Noise Model CFG)

Needs to be set to 1 as we are using the ⚡️Lightx2v Distill Lora to generate video in fewer steps instead of 20 total steps.

🌊Shift - High +🌊Shift - Low (High and Low Noise Model Shift)

Value from 5 - 8. Shift of 8 seems fine in my testing.

Shift setting changes the denoising schedule, impacting motion and time flow in the video.

A lower shift results in smoother, more predictable video motion, while a higher shift is more dynamic but can be chaotic.

🧫KSamplers - Samplers and Scheduler

Samplers: Euler + Scheduler: Simple (Default)

Good enough quality and speed generation for both High Noise and Low Noise models.

8. 🔮 NAG Setting

When 🏵️CFG is set to 1, the 🍎negative prompt is almost ignored.

To bump up or strengthen 🍎negative prompt, 🔮Wan NAG (Normalized Attention Guidance) is integrated in the model process.

🌀nag_tau

Value input: 2.0-3.0

higher = stronger effect

lower = weaker effect

9. 🛠️Post Processing (🛠️Post Processing Workflow)

Post Processing makes video generation take longer. When you are drafting a video or testing prompt and Lora combinations, you might not want to post process the video until you have generated the desired one.

When the 🎲Seed number is fixed and nothing outside 🛠️Post Processing is changed, you can keep changing or editing the 🛠️Post Processing options as many times as you like and skip past the pre-processing.

Example: Let's say you generated a video by clicking (▷RUN) and you forgot to set/change the 🎞️Interpolation Multiplier. Once the video has finished generating, you can set the 🎞️Interpolation Multiplier option and click (▷RUN) again. After the video is generated, you can still change other 🛠️Post Processing options like 🎞️📐Interpolation Multiplier+Upscale, 💎Sharpen or 🦄Logo Overlay and hit (▷RUN) again.

All of this will skip the sampling steps because you are using the same 🎲Seed number and have NOT changed any inputs outside the 🛠️Post Processing section.

If you changed some inputs in the pre-processing (e.g. steps, prompt, Lora) during or after the generation, you can always set them back. Sampling is skipped as long as everything is the same as when you generated the video.

If you have forgotten what you changed, go to the "Queue" tab, find the "Running"/latest generation, right click and choose "Load Workflow". It will load all inputs for that generation. You can continue with this loaded workflow for 🛠️Post Processing.

The 🛠️Post Processing Workflow for video uses the same options. It is recommended to use an unprocessed video or 🎥Preview videos created using this workflow with no post processing.

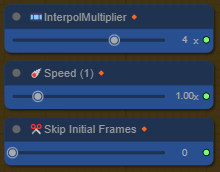

9a. 🎞️Interpolation Multiplier + ☄️Speed +✂️Skip Initial Frames

🎞️Interpol Multiplier

Frame interpolation makes the video look smoother by filling in frames between the original video frames.

3-4x Multiplier = very smooth.

2x Multiplier = slightly smooth, cinematic feel.

Interpolation Multiplier = how many frame intervals each gap between two original frames is split into (e.g. 4x adds 3 new frames per gap).

A 5 sec video (81 frames) with a 4x Multiplier results in 321 total frames.

A 5 sec video (81 frames) with a 3x Multiplier results in 241 total frames.
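Both frame totals above follow from one formula: the 80 gaps between the 81 original frames are each multiplied, and the final frame is added back. A minimal sketch (the function name is made up for the example):

```python
def interpolated_frame_count(original_frames: int, multiplier: int) -> int:
    """Total frames after interpolation: (gaps between frames) * multiplier + 1."""
    return (original_frames - 1) * multiplier + 1


for multiplier in (2, 3, 4):
    total = interpolated_frame_count(81, multiplier)
    print(f"81 frames at {multiplier}x -> {total} frames")
```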

☄️Speed

Regulate the speed of the video

Changes the FPS of the video automatically

1 = normal speed

More than 1 = accelerated motion but shorter video length

1.5x = 50% faster

2x = 2 times faster

Less than 1 = slowed-down motion but longer video length

0.6 = 40% slower.

✂️Skip Initial Frames

Trim off frame(s) from the start.

Some videos might transition immediately from the first frame. You can use this to trim off the first frame.

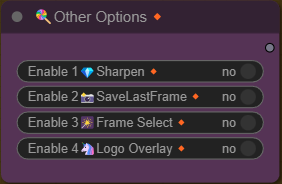

9b. 🍭Other Options

💎Sharpen

Sharpens video frames using 💎Adaptive Contrast Sharpening.

Enhances the perceived sharpness and detail of the image.

📸SaveLastFrame

Saves the last frame of the video (without post processing).

Feed the saved last frame back into the workflow to "continue" the video or change the video outcome (e.g. by switching out the prompt and/or Loras). Then merge the videos later.

Example:

Car racing down the street - Save last frame

Input last frame - Car flies into the sky.

Merged = Car racing down the street then flies into the sky.

- Output to: 🗂️ComfyUI/Output/(Your SaveLastFrame filename input)

🎇Frame Select

When enabled, displays a preview of all the frames generated from the video (without post processing) at the far right of the workflow.

You can click and select a frame in this preview to save it as an image (right click on the frame, "Save Image").

You can then use this saved image as the starting image for i2v or the last image for a First Frame Last Frame video.

Example: If a frame in the middle of the video is what you want to end with instead of the actual ending frame, you can save that middle frame, enable First Frame Last Frame, and input it as the last frame. Your video will then end with that frame.

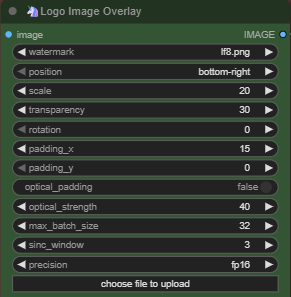

🦄Logo Overlay (Watermark)

Enable to watermark your videos.

Drag and drop your logo/watermark image into this.

Supports images with transparent backgrounds

Select the position you want the logo/watermark to be in

Scale the size of your logo/watermark

Transparency - Adjust the opacity of your logo/watermark

Rotation - If you want your logo/watermark to be tilted

Padding X - How much spacing for the logo/watermark to be offset from the horizontal side

Padding Y - How much spacing for the logo/watermark to be offset from the vertical side

Leave the rest of the settings as they are.

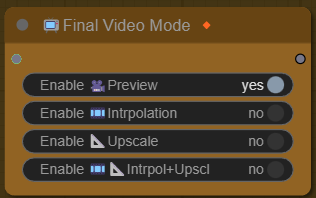

9c. 📺Final Video Mode

All modes output to a single 🎥Video Combine node.

FPS is automatically calculated in all modes.

🎥Preview ("🎥Video Only" in 🛠️Post Processing Workflow)

Select this to set as a default when drafting video.

🎞️Intrpolation (Video Frame Interpolation)

Select this for Frame Interpolation only

📐Upscale (with Model)

Select this for Upscale only

🎞️📐Intrpol+Upscl

Select this for Frame Interpolation and Upscale video.

All outputs will be saved in 🗂️ComfyUI/Output/(Your filename input). The names of the videos and subfolders depend on what you set in the 🗂️ Filenames settings.

9d. 📹Manual FPS

Enable to manually set the FPS (Frames per Second).

Overrides ☄️Speed and Auto FPS calculations & inputs.

The standard fps for Wan video is 16fps without interpolation.

Manual input of FPS will also change the length of the video.

Interpolated Frame FPS

(Auto Calculations = 16 x Interpolation Multiplier = fps)

Interpolation Multiplier 2x = 32 fps (Normal Speed Manual input - 30-32 fps)

Interpolation Multiplier 3x = 48 fps (Normal Speed Manual input - 44-48 fps)

Interpolation Multiplier 4x = 64 fps (Normal Speed Manual input - 60-64 fps)

Any fps input lower than the auto calculation will slow the video down, and any input higher than it will speed the video up.

Example: Interpolation Multiplier 4x = 64 fps, Manual input 50fps = Slowed video/Increase video length, Manual input 70fps = Accelerated video/decrease video length.
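The relationship between FPS and video length in the example above can be sketched as follows. It assumes playback length is simply total frames divided by FPS, and it reuses the 321-frame total of a 5 second video interpolated at 4x; the function names are illustrative only.

```python
BASE_FPS = 16  # standard Wan frame rate without interpolation


def auto_fps(interpolation_multiplier: int) -> int:
    """Auto-calculated FPS: 16 x Interpolation Multiplier."""
    return BASE_FPS * interpolation_multiplier


def playback_seconds(total_frames: int, fps: float) -> float:
    """Assumption: video length is total frames divided by playback FPS."""
    return total_frames / fps


frames = 321  # 81 frames interpolated at 4x
for fps in (50, auto_fps(4), 70):
    print(f"{fps} fps -> {playback_seconds(frames, fps):.2f} sec")
```

A manual value below the auto-calculated 64 fps stretches the same frames over a longer time, and a value above it compresses them.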

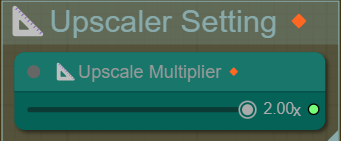

9e. 📐Upscaler Setting

Used when 📐Upscale or 🎞️📐Intrpol+Upscl mode is selected in 📺Final Video Mode.

Input/Adjust the final video output dimension by upscale factor.

Example: 720x960 - 1.5x =1080x1440 | 2x = 1440x1920
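The upscale example works out as a straight multiplication of both sides. A minimal sketch, with a hypothetical helper name and rounding added as an assumption for factors that do not give whole numbers:

```python
def upscaled_size(width: int, height: int, factor: float) -> tuple[int, int]:
    """Multiply both sides by the upscale factor (rounded to whole pixels)."""
    return round(width * factor), round(height * factor)


for factor in (1.5, 2):
    print(f"720x960 at {factor}x -> {upscaled_size(720, 960, factor)}")
```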

9f. 🎼MMAudio (for WF with 🎼MMAudio)

⚠️This is not perfect. You might need to try a lot of runs sometimes to get the audio you want.

It is best to do 🎼MMAudio in 🎥Preview 1st, but there are 2 scenarios to consider.

If you need to adjust ☄️Speed, adjust ☄️Speed 1st then do 🎼MMAudio. You can do the rest like 🎞️Intrpolation later.

If you need to adjust 📹Manual FPS, adjust and set your 🎞️Interpol Multiplier + 📹Manual FPS. Select your📺Final Video Mode with Interpolation and (▷RUN) the workflow. Once satisfied with the video fps, then do 🎼MMAudio in that 📺Final Video Mode selected.

Both of these scenarios affect FPS and audio duration for 🎼MMAudio.

Usage:

The 🎼MMAudio node functions like 🛠️Post Processing. As long as the FPS is not changed, you can keep re-running 🎼MMAudio until you get the desired audio.

Enable 🎼MMAudio node, Click on "🎲New Fixed Random" in this 🎼MMAudio Group and hit (▷RUN). If it is not the audio you want, click on "🎲New Fixed Random" to get a new seed and hit (▷RUN) again. You can hover your mouse on the 🎥🎞️📐Video Combine node to hear the audio with the video, or play the preview audio in this node.

1. Enable/Disable 🎼MMAudio.

(If you are doing a fresh video, disable this 1st)

2. mmaudio_model

Switch between models if you need to.

3. Positive Prompt (Top) & Negative Prompt (Bottom)

Write your prompts here. You can keep them simple: a few words or tokens are good enough. Add a sound to the positive prompt if the generated audio is missing it, or add it to the negative prompt if it is a sound you don't want.

Example:

If your video only has a person playing guitar but there is no audio for drums, add it to the prompt: "drums" or "drums, snare".

If you don't want the person to sing, you can put "vocals" in the negative prompt.

4. Steps

50 steps gives good enough quality and is very fast too: about 10 sec of audio generation for me for a 5 sec / 81 frames / 720*960 video in 🎥Preview mode.

5. Audio Preview

You can preview the audio here or hover your mouse over the 🎥🎞️📐Video Combine node.

6. 🎲New Fixed Random

Set a new seed for audio generation. If the audio is not what you desire, click on 🎲New Fixed Random to get a new seed and (▷RUN).

7. 🍏Use/Add Positive Prompt From Video

Enable it to pass in your entire video 🍏Positive Prompt into the audio positive prompt.

You can leave the audio positive prompt above empty and enable this to use your video 🍏Positive Prompt.

Or

Enable this to combine the audio positive prompt above and your video 🍏Positive Prompt.

Or

Disable to only use audio positive prompt above.

💡You can use the 🛠️Post Processing workflow to just add audio to your videos. If your video is already interpolated, just select 🎥Video Only and just do 🎼MMAudio.

All outputs will be saved in 🗂️ComfyUI/Output/(Your filename depends on which 📺Final Video Mode is selected).

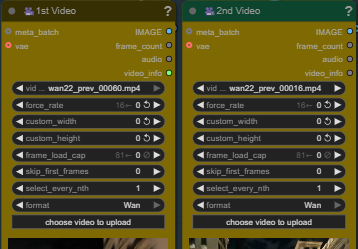

10. 🎬Video Merger/Joiner Workflow

Drag and drop 1st video on the left and the 2nd video on the right and hit (▷RUN).

1st Video's FPS will be used.

Outputs will be saved in 🗂️ComfyUI/Output/(Your Merge filename input in this workflow).

You can choose how the video resolutions are matched by clicking on merge_strategy.

match A - match 1st video

match B - match 2nd video

match smaller - match whichever video is smaller

match larger - match whichever video is larger

Enable this to merge 3 videos at once. Drag and drop 3rd video in the video upload below this node.

11. 🧮 Calculate Megapixel Workflow

Drag and drop image into the 🖼️Input Image.

Hit (▷RUN) and it will display your input image's megapixel.

Adjust/edit the "megapixel" in 🧮ImageScaleToTotalPixels and hit (▷RUN), this will display the Width and Height of your input image scaled to the input megapixel.

Manually input Width and Height and hit (▷RUN) and it will display megapixel according to the input dimension.

🖨️My Hardware and Stats:

🖥️ My Hardware Spec:

♠️ RTX 3090 Ti 24GB - 64GB RAM

📽️ My Video Generation Stats:

🚨 Sage Attention Enabled

🖼️ 720*960 (0.66 megapixels - Scaled down from 1440*1920)

🚶➡️ 8 Total Steps + 👞 4 Split Steps

💠 Per iterations/steps ≈ 47-49 secs (5 sec video)

⏱️ 5 seconds video / 81 Frames ≈ 7.5-8 mins (455 - 480 secs)

🛠️ 💎Sharpen + 🦄Logo Overlay + 4x 🎞️Interpolation + 1.5x 📐Upscale ≈ 2mins (120 secs)

📜References:

For 🎼MMAudio :

🔗(NSFW) Dead-Simple MMAudio + RIFE Interpolation Setup for WAN 2.2 I2V

😀SeoulSeeker(CivitAI)

![🍥Wan 2.2 (GGUF) [i2v / FFLF] + [t2v] Workflow Download Links & Guide](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/b201a4e5-7865-4364-bfd0-fbfb226e34ef/width=1320/article2.jpeg)