I saw some people asking how I generate the GGUF files, so this article is meant to give you an idea of what is needed and what I've developed to help me on this journey.

First of all, I need to mention that I'm on an AMD card, so, with the help of PatientX (creator of ComfyUI-ZLUDA), I was able to use the scripts that he provided to make it work with the ZLUDA project.

All my scripts are available at - https://github.com/Santodan/GGUF-Converter-GUI - this is a repo where I keep some of the scripts that I use, plus things to help me remember for the future.

The first thing that I noticed was that it was a rather tedious job to go through a single model to create the various quantized models.

The process would be:

1. Run the dequantize script - https://github.com/Kickbub/Dequant-FP8-ComfyUI/blob/main/dequantize_fp8v2.py - with the command

python convert_gemini.py --in_file InputFile --out_file OutputFile --out_type f16

This would strip the fp8_scaled weights and also do the multiplication for FP16.

2. Run convert.py on the output file from the previous step.

3. Run llama-quantize on the output file from the previous step, for the wanted quantization

llama-quantize.exe InputFile OutputFile Q6_K

I would have to run this for every single quantization.

4. Last, run fix_5d_tensors.py, which is needed especially for WAN, with the command

python fix_5d_tensors.py --src InputFile --dst OutputFile --fix fix_5d_tensors_wan.safetensors

Again, this has to be run for every single quantization.
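To show why this gets repetitive, here is a minimal sketch of steps 3 and 4 as a loop over quantization types. It only builds the command lines shown above. The list of quantization types, the output file naming and the function name build_commands are my own assumptions for illustration, not what run_conversion.py actually does.

```python
from pathlib import Path

# Example quantization types only; pick whichever ones you actually need.
QUANT_TYPES = ["Q4_K_M", "Q5_K_M", "Q6_K", "Q8_0"]


def build_commands(converted_gguf, out_dir, quant_types=QUANT_TYPES,
                   quantize_bin="llama-quantize.exe",
                   fix_file="fix_5d_tensors_wan.safetensors"):
    """Return the quantize + fix commands for every quantization type."""
    src = Path(converted_gguf)
    out_dir = Path(out_dir)
    commands = []
    for qtype in quant_types:
        # Hypothetical naming scheme for the intermediate and final files.
        quantized = out_dir / f"{src.stem}-{qtype}.gguf"
        fixed = out_dir / f"{src.stem}-{qtype}-fixed.gguf"
        # Step 3: llama-quantize InputFile OutputFile TYPE
        commands.append([quantize_bin, str(src), str(quantized), qtype])
        # Step 4: fix the 5D tensors of the quantized file
        commands.append(["python", "fix_5d_tensors.py",
                         "--src", str(quantized),
                         "--dst", str(fixed),
                         "--fix", fix_file])
    return commands


if __name__ == "__main__":
    for cmd in build_commands("model-F16.gguf", "out"):
        print(" ".join(cmd))
        # To really run it: subprocess.run(cmd, check=True)
```

With four quantization types this already prints eight commands for a single model, which is the part I wanted to stop typing by hand.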

Then I saw that I also needed a script to upload the files to Hugging Face, so I created upload_to_hf.py, which I made to run in an interactive mode with the user.

What I would recommend, before running it, is to set the environment variable HUGGING_FACE_HUB_TOKEN in your OS with your HF token; this way you will not be asked for it.
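The idea behind the token variable can be sketched like this: read HUGGING_FACE_HUB_TOKEN if it is set, otherwise ask for it. The function names resolve_hf_token and upload_file are hypothetical and this is not the code from upload_to_hf.py; the upload part uses HfApi.upload_file from huggingface_hub and assumes a model repo.

```python
import getpass
import os


def resolve_hf_token(env=os.environ, prompt=getpass.getpass):
    """Use HUGGING_FACE_HUB_TOKEN when it is set, otherwise ask the user."""
    token = env.get("HUGGING_FACE_HUB_TOKEN", "").strip()
    if token:
        return token
    return prompt("Hugging Face token: ").strip()


def upload_file(local_path, repo_id, path_in_repo, token):
    """Upload one file to a model repo (assumes the repo already exists)."""
    # Imported here so the token helper also works without the package.
    from huggingface_hub import HfApi

    api = HfApi(token=token)
    api.upload_file(
        path_or_fileobj=local_path,
        path_in_repo=path_in_repo,
        repo_id=repo_id,
        repo_type="model",
    )
```

With the variable set, resolve_hf_token() returns right away and nothing is asked on the terminal.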

So I started trying to automate this procedure.

The first step was to create a CLI script that would run everything for all the quantize files - run_conversion.py

The script will run everything and will require you to install at least one additional package, prompt_toolkit, since I'm using it to get autocomplete when selecting the directories / files.

You will also need to run it inside a Python environment with the following packages:

safetensors huggingface_hub tqdm sentencepiece numpy gguf

As you can see, all these packages are normally already in the ComfyUI environment, so you can select that environment to run the script.
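If you are not sure whether the environment you picked has everything, a quick check like the following can help. It is only a sketch that uses the standard library to see which of the packages listed above (plus prompt_toolkit) cannot be found; the function name missing_packages is my own.

```python
import importlib.util

# Packages listed above, plus prompt_toolkit for the CLI autocomplete.
REQUIRED = ["safetensors", "huggingface_hub", "tqdm", "sentencepiece",
            "numpy", "gguf", "prompt_toolkit"]


def missing_packages(names=REQUIRED):
    """Return the package names that cannot be imported in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages: pip install " + " ".join(missing))
    else:
        print("All required packages were found.")
```

Run it with the same Python executable that you plan to use for the conversion scripts.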

When creating WAN GGUF files on my hardware, it was taking around 12 hours to create, upload and then test them, and I wouldn't be able to use my PC during that time.

This is especially because WAN files need around 80GB of RAM to be converted to GGUF, and I have 32GB + a 50GB page file.

So I also tried to make it work inside runpod.

The script was updated to use the Linux llama-quantize binary and to work properly inside Linux, so the only thing I need to do when running in runpod is to download all these files, and I don't need to build llama-quantize anymore.
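The Windows / Linux difference mostly comes down to which binary gets called. A minimal sketch of that choice, assuming both binaries sit in the same folder as the scripts (the function name quantize_binary is hypothetical and the real script may do it differently):

```python
import platform
from pathlib import Path


def quantize_binary(base_dir=".", system=None):
    """Pick llama-quantize.exe on Windows and llama-quantize elsewhere."""
    system = system or platform.system()
    name = "llama-quantize.exe" if system == "Windows" else "llama-quantize"
    return Path(base_dir) / name


if __name__ == "__main__":
    print(quantize_binary("/workspace"))
```

On Linux, remember that the binary also needs the executable bit (chmod +x), otherwise it will fail to start.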

But then, I saw that it was getting a little bit out of hand when handling more than one model to convert.

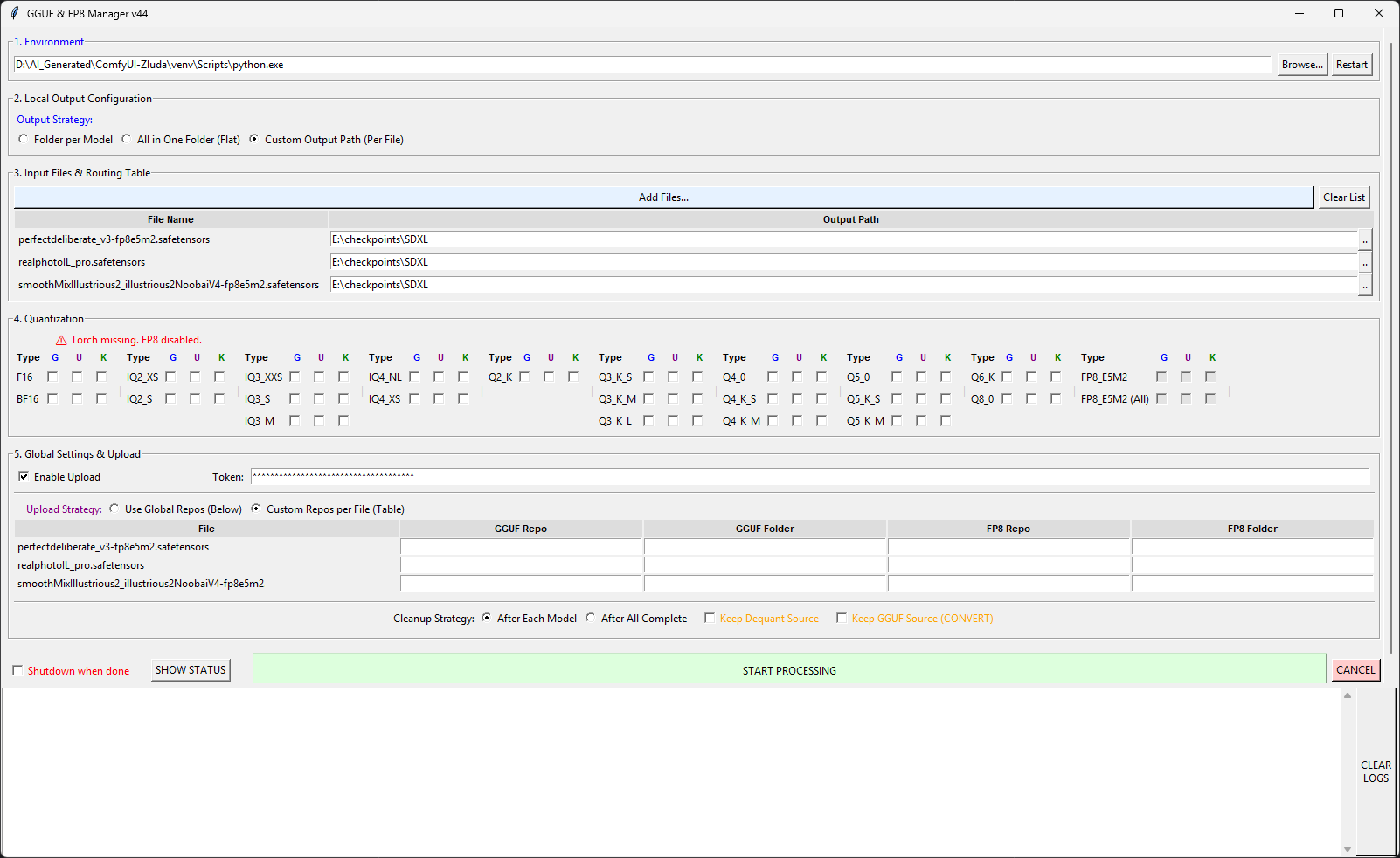

So I decided to create a UI that would make the job easier.

And this UI really makes things easier...

It has a lot of options, you can select the correct Python environment, and it is also easier to select files / folders when browsing through the directories, at least for me.

In this UI you can also set, for each model, the corresponding local folder, the repo and the folder inside the repo.

I've separated the FP8 from the GGUF because I have an FP8 collection and a GGUF collection on HF, so I can upload the different types of files to different repos.

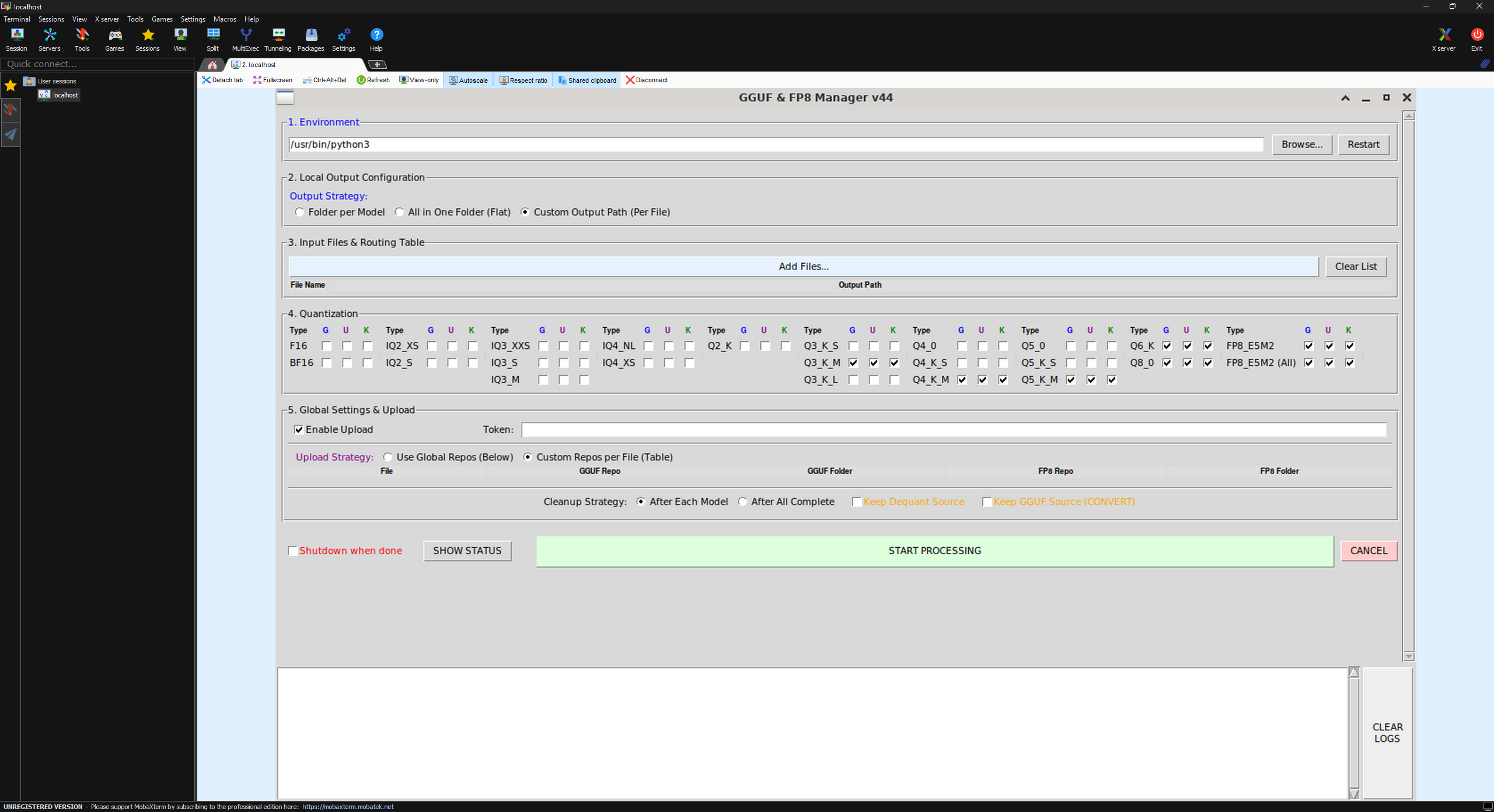

But this was only working on Windows, since runpod runs headless VMs, so no UI inside runpod... or that's what I thought until I got it to work.

The steps aren't exactly user friendly, at least for some people, but they are very easy.

You will need an ngrok authtoken - https://ngrok.com/ - and then you need to install some additional things in the runpod pod (these don't need to be in the ComfyUI environment, so it should not mess it up).

Install Desktop & Network Tools

apt-get update && apt-get install -y python3-tk xauth xfce4 xfce4-goodies tightvncserver xterm dbus-x11 curl git build-essential cmake libcurl4-openssl-dev python3-pip autocutsel

Install Ngrok

curl -s https://ngrok-agent.s3.amazonaws.com/ngrok.asc | tee /etc/apt/trusted.gpg.d/ngrok.asc >/dev/null

echo "deb https://ngrok-agent.s3.amazonaws.com buster main" | tee /etc/apt/sources.list.d/ngrok.list

apt-get update && apt-get install -y ngrok

Download Files

cd /workspace

wget -N https://github.com/Santodan/comfyui-scripts/raw/refs/heads/main/zluda/GGUF/llama-quantize

chmod +x /workspace/llama-quantize

wget -N https://raw.githubusercontent.com/Santodan/comfyui-scripts/refs/heads/main/zluda/GGUF/dequantize_fp8v2.py

wget -N https://raw.githubusercontent.com/Santodan/comfyui-scripts/refs/heads/main/zluda/GGUF/gui_run_conversion.py

wget -N https://raw.githubusercontent.com/Santodan/comfyui-scripts/refs/heads/main/zluda/GGUF/run_conversion.py

wget -N https://raw.githubusercontent.com/Santodan/comfyui-scripts/refs/heads/main/zluda/GGUF/upload_to_hf_v4.py

wget -N https://raw.githubusercontent.com/city96/ComfyUI-GGUF/refs/heads/main/tools/convert.py

wget -N https://raw.githubusercontent.com/city96/ComfyUI-GGUF/refs/heads/main/tools/fix_5d_tensors.py

Setup VNC Startup

mkdir -p /root/.vnc

echo '#!/bin/sh' > /root/.vnc/xstartup

echo 'unset SESSION_MANAGER' >> /root/.vnc/xstartup

echo 'unset DBUS_SESSION_BUS_ADDRESS' >> /root/.vnc/xstartup

echo 'autocutsel -fork &' >> /root/.vnc/xstartup

echo 'xfwm4 &' >> /root/.vnc/xstartup

echo 'xterm -geometry 80x24+10+10 -ls -title "Rescue Terminal" &' >> /root/.vnc/xstartup

chmod +x /root/.vnc/xstartup

Install Python Deps

pip install safetensors huggingface_hub tqdm sentencepiece numpy gguf prompt_toolkit

Config Ngrok

ngrok config add-authtoken YOUR_TOKEN

Then start the VNC server and the ngrok tunnel:

export USER=root && vncserver :1 -geometry 1280x800 -depth 24 && ngrok tcp 5901

Something similar to the following will appear:

ngrok (Ctrl+C to quit)

🧱 Block threats before they reach your services with new WAF actions → https://ngrok.com/r/waf

Session Status online

Account Santodan (Plan: Free)

Version 3.33.1

Region United States (us)

Latency 23ms

Web Interface http://127.0.0.1:4040

Forwarding tcp://6.tcp.ngrok.io:19154 -> localhost:5901

Connections ttl opn rt1 rt5 p50 p90

0 1 0.00 0.00 0.00 0.00

Now you are ready to start the GUI script. In another terminal, run the command:

export DISPLAY=:1 && nice -n 10 python3 gui_run_conversion.py

However, you will not be able to see the UI directly, since runpod is headless.

What I usually use is MobaXterm, so I go there and start a VNC session with the details from the ngrok output:

hostname - 6.tcp.ngrok.io

port - 19154
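If you prefer not to copy these two values by hand, they can be pulled out of the Forwarding line of the ngrok output. This is just a sketch with the standard library; the function name parse_forwarding is my own and it assumes the line contains a tcp:// address like in the output above.

```python
from urllib.parse import urlsplit


def parse_forwarding(line):
    """Extract (hostname, port) from an ngrok 'Forwarding tcp://...' line."""
    for token in line.split():
        if token.startswith("tcp://"):
            parts = urlsplit(token)
            return parts.hostname, parts.port
    raise ValueError("no tcp:// address found in line")


if __name__ == "__main__":
    line = "Forwarding tcp://6.tcp.ngrok.io:19154 -> localhost:5901"
    host, port = parse_forwarding(line)
    print(f"hostname - {host}")
    print(f"port - {port}")
```

The hostname and port change every time the tunnel is restarted on the free plan, so they have to be read again for each session.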

Now you can connect and see the GUI:

From there, just fill in everything that you want and run it.

If you close the script with the X in the top right corner, it will generate a file called last_run_settings.json with the chosen settings, so that when you reopen it, they will be selected automatically.
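The save / restore idea can be sketched in a few lines. Only the file name last_run_settings.json comes from the GUI; the keys in the example and the function names save_settings / load_settings are made up for illustration, and the real file may be structured differently.

```python
import json
from pathlib import Path

SETTINGS_FILE = Path("last_run_settings.json")


def save_settings(settings, path=SETTINGS_FILE):
    """Write the chosen settings to disk as JSON."""
    Path(path).write_text(json.dumps(settings, indent=2), encoding="utf-8")


def load_settings(path=SETTINGS_FILE):
    """Read the previous settings, or return {} if there are none yet."""
    try:
        return json.loads(Path(path).read_text(encoding="utf-8"))
    except (FileNotFoundError, json.JSONDecodeError):
        return {}


if __name__ == "__main__":
    # Hypothetical keys, only to show the round trip.
    save_settings({"quant_types": ["Q6_K"], "upload": True})
    print(load_settings())
```

Returning an empty dict when the file is missing or broken means the first start simply shows the default values.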

In the Quantization section you have three options for each type (G for generate, U for upload and K for keep). I think it is pretty obvious what they do, but note that if you choose only U, the script will check if the files exist and will try to only upload them, without doing any conversion.
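To make the combinations a bit more concrete, here is a small sketch of how the three flags could translate into steps. The upload-only behaviour follows the description above; the assumption that an unchecked K deletes the local file after a successful upload is mine, and the function name plan_steps is hypothetical.

```python
def plan_steps(generate, upload, keep, file_exists):
    """Return the list of steps for one quantization type."""
    steps = []
    if generate:
        steps.append("convert")
    elif upload and not file_exists:
        # Upload-only mode needs an existing file; nothing to do otherwise.
        return ["skip: file not found"]
    if upload:
        steps.append("upload")
        if not keep:
            # Assumption: without K the local file is removed after upload.
            steps.append("delete local file")
    return steps


if __name__ == "__main__":
    print(plan_steps(generate=False, upload=True, keep=True, file_exists=True))
    print(plan_steps(generate=True, upload=True, keep=False, file_exists=False))
```

The first call represents the "only U" case, where no conversion is done and the existing file is just uploaded.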

The Keep Dequant Source option refers to the file generated by dequantize_fp8v2.py, and Keep GGUF Source (CONVERT) refers to the one generated by convert.py.

PS: I think there is a bug that sometimes runs the script twice for the same file. I'm still investigating why, but I have a clue.

For NVIDIA users, I don't have a llama-quantize.exe build for Windows, but I think it is straightforward to follow the steps from https://github.com/city96/ComfyUI-GGUF/tree/main/tools

You will also need the convert.py and the fix_5d_tensors.py from it.

Also, I'm not a programmer, although I have some knowledge of it, so the scripts were created more by Gemini Pro than by me.