Artificial dataset extension from minimal image sources

Hey everyone!

I’m excited to introduce FrameForge, a streamlined, one-button pipeline to build high-quality character image datasets even when you only have one or a few reference images.

The goal:

Use a set of four Grok Imagine videos to artificially expand a tiny dataset into a full, diverse, training-ready collection.

Example LoRA made with this method and toolkit: https://civitai.com/models/2179897

🧩 What FrameForge Does (Fully Automated)

✔ Cleans and renames your sources

✔ Extracts frames from 6-second Grok Imagine clips

✔ Picks up to 40 diverse frames automatically

✔ Crops, flips, and standardizes

✔ Optional: autotags using EVA02

✔ Outputs a ready-to-train dataset folder

Drop → run → done.
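To give a feel for what "picks diverse frames" can mean in practice, here is a minimal sketch of one common approach: greedy farthest-point sampling over per-frame feature vectors. This post does not say how FrameForge actually selects frames, so treat the function name `pick_diverse` and the idea of using small feature vectors (for example, downscaled color histograms) as assumptions for illustration only.

```python
from math import dist


def pick_diverse(features, k):
    """Greedy farthest-point sampling.

    features: list of equal-length numeric tuples, one per frame
              (hypothetical per-frame descriptors, e.g. tiny histograms).
    k:        maximum number of frames to keep.
    Returns the sorted indices of the selected frames.
    """
    if not features or k <= 0:
        return []
    chosen = [0]  # start from the first frame
    # Distance from every frame to its nearest already-chosen frame.
    nearest = [dist(f, features[0]) for f in features]
    while len(chosen) < min(k, len(features)):
        idx = max(range(len(features)), key=nearest.__getitem__)
        if nearest[idx] == 0:
            break  # only exact duplicates of chosen frames remain
        chosen.append(idx)
        nearest = [min(d, dist(f, features[idx]))
                   for d, f in zip(nearest, features)]
    return sorted(chosen)
```

Each round keeps the frame that is least similar to everything already selected, so near-duplicate neighbors from the same second of video tend to be skipped.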

🎥 Why Grok Imagine?

Grok’s 6-second clips are consistent enough to provide:

multi-angle shots

dynamic movement

natural lighting variation

expression changes

FrameForge turns these small fragments into a usable dataset with minimal noise.

⚠️ Important Note for Grok Users

No NSFW input.

xAI tolerates “spicy,” but has strict boundaries.

Stay within safe, clothed, allowed content.

🔥 Recommended Workflow (Best Results)

To get the cleanest coverage and a pseudo-3D look, generate 4 Grok Imagine videos of your character, each 6 seconds long, with simple, consistent prompts:

✔ Video 1: Normal

Static, neutral pose with slight motion.

✔ Video 2: Turn Around and Look Back

Shows silhouette, back shape, hair, posture.

✔ Video 3: Face Zoom

Close-up rotation / subtle facial movement.

✔ Video 4: Walk Sideways

Full-body strafe motion for profile diversity.

This combination produces:

stable multi-angle variation

consistent identity

enough pose diversity for most LoRA use cases

a noticeably cleaner 3D-style dataset

more reliable training convergence

🛠 Example Usage

Generate your four 6-second Grok clips.

Place them with one cover JPG here:

input_videos/YourCharacter/

Run FrameForge:

python workflow.py --autotag

Your ready dataset appears in:

final_ready/YourCharacter/
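If you are curious what the frame-extraction step looks like underneath, the sketch below builds a plain FFmpeg command for a single clip. It is not taken from FrameForge's source. The helper name `build_extract_command`, the intermediate `frames/` folder, and the PNG naming pattern are all assumptions. Only the FFmpeg options themselves (`-i`, `-vf fps=...`, a numbered output pattern) are standard.

```python
from pathlib import Path


def build_extract_command(video, out_dir, fps=None):
    """Return an FFmpeg argument list that dumps frames of `video` as PNGs.

    fps=None keeps every frame; a number resamples to that many frames
    per second via FFmpeg's `fps` video filter.
    """
    video = Path(video)
    cmd = ["ffmpeg", "-hide_banner", "-loglevel", "error", "-i", str(video)]
    if fps is not None:
        cmd += ["-vf", f"fps={fps}"]
    # Numbered output pattern, e.g. clip1_00001.png, clip1_00002.png, ...
    cmd.append(str(Path(out_dir) / f"{video.stem}_%05d.png"))
    return cmd
```

To actually run it, create the output folder first and pass the list to `subprocess.run(cmd, check=True)`.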

📥 Download / Source Code

GitHub release (moved to a different GitHub organization):

👉 https://github.com/MythosMachina/FrameForge

Python 3.10+, FFmpeg required.

Autotagging optional.

🔧 Final Thoughts

FrameForge was created to solve a simple but painful issue:

How do you train from one reference image?

Combine a small Grok video set with full automation — and suddenly you’ve got a high-quality, standardized dataset ready for training.

Feedback, ideas, or improvements welcome!

🛠 Planned Work (released only when done and stable)

• GPU-Accelerated Autotagging (optional) – in development

The current autotag system runs on CPU by default for maximum compatibility.

A GPU-powered mode is now in progress, activated simply by adding:

--gpu

Example usage:

--autotag --gpu

This enables NVIDIA-based GPU acceleration for much faster tagging, while gracefully falling back to CPU if no GPU is available.

CPU remains the default — GPU is the “turbo mode.”
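The fallback behavior can be pictured with a small sketch. It assumes the tagger runs on ONNX Runtime, which this post does not confirm. The function `choose_providers` is hypothetical, while the provider names are the real ONNX Runtime identifiers.

```python
def choose_providers(available, want_gpu):
    """Pick execution providers in priority order.

    available: provider names reported by the runtime, e.g. the result of
               onnxruntime.get_available_providers() (assumed runtime).
    want_gpu:  True when the user passed --gpu.
    CPU is always listed last so it serves as the fallback.
    """
    providers = []
    if want_gpu and "CUDAExecutionProvider" in available:
        providers.append("CUDAExecutionProvider")
    providers.append("CPUExecutionProvider")
    return providers
```

With ONNX Runtime, the returned list would be passed as `providers=` when creating an `InferenceSession`. On a machine without CUDA the list simply collapses to CPU only.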

Update:

GPU tagging is fully operational and stable.

The core tagger runs clean; fine-tuning for the optional --autochar tag-reduction (hair/eye filtering) is still ongoing.

• Auto-Trainer (optional) – in development

An optional training module is being prototyped, enabled with:

--train

This system will automatically:

analyze the generated dataset

detect density, diversity, and style

derive ideal hyperparameters algorithmically

run a hands-off LoRA training process

This is not a Kohya_SS knockoff. It is built purely from the ground up.

The initial implementation will target PonySDXL, ideal for character-oriented LoRA workflows, with more model presets planned.

Update:

The planner and trainer are producing first successful results.

The system now auto-generates training jobs based on dataset size and image diversity, selecting parameters such as:

epochs

effective repeats

rank

learning rate

resolution

gradient accumulation

all derived algorithmically per dataset.
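How FrameForge derives these values is not spelled out here, so the following is only the generic arithmetic that links dataset size, repeats, epochs, and gradient accumulation to the number of optimizer steps. The function `plan_repeats` and its `target_steps` knob are hypothetical, not FrameForge's actual planner.

```python
from math import ceil


def plan_repeats(num_images, epochs, target_steps, batch_size=1, grad_accum=1):
    """Return (repeats, total_optimizer_steps) that roughly hit target_steps.

    One optimizer step consumes batch_size * grad_accum samples, and one
    epoch sees num_images * repeats samples.
    """
    if min(num_images, epochs, target_steps, batch_size, grad_accum) < 1:
        raise ValueError("all arguments must be positive integers")
    samples_per_step = batch_size * grad_accum
    # Samples each epoch must contain to reach the target overall.
    samples_per_epoch = ceil(target_steps * samples_per_step / epochs)
    repeats = max(1, ceil(samples_per_epoch / num_images))
    steps_per_epoch = ceil(num_images * repeats / samples_per_step)
    return repeats, steps_per_epoch * epochs
```

For example, a 40-image dataset trained for 10 epochs toward 2000 steps needs 5 repeats, while an 800-image dataset with batch size 2 and gradient accumulation 2 reaches the same step count with a single repeat.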

Training runs on GPU and exports clean LoRA-only safetensors, with automatic checkpoint handling already in place.

Preview sampling and automatic best-epoch selection are planned next steps.

🔥 Major Update: Full WebUI, Automated Pipeline & Training Integration

The newest FrameForge release represents a major milestone:

a complete, fully automated end-to-end workflow wrapped in a modern WebUI.

With the updated system, FrameForge now processes:

➡️ 1 reference image + 4 Grok Imagine videos → ~800 curated images → fully tagged → auto-cleaned → training-ready dataset

And optionally:

➡️ Automatic LoRA training, using adaptive hyperparameters derived from your dataset.

Everything works straight through the WebUI — no manual steps required.

🌐 New WebUI (Modern, Simple, Queue-Driven)

The new browser interface gives FrameForge full hands-off usability:

Upload any number of input ZIPs (each ZIP = 1 dataset job)

Jobs are placed into an internal queue, processed strictly one by one

Each finished job is stored in a history panel for easy download (datasets + optional trained LoRAs)

All core features are toggleable per job

This makes FrameForge scalable, repeatable, and ideal for bulk dataset creation.
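The "strictly one by one" behavior is a classic single-worker queue. Here is a minimal sketch of that pattern using only the Python standard library. The class `JobQueue`, its `handler` callback, and the history tuple layout are invented for illustration and say nothing about how the WebUI is really implemented.

```python
import queue
import threading


class JobQueue:
    """Run submitted jobs sequentially on one background worker."""

    def __init__(self, handler):
        self._handler = handler      # callable(zip_name, **options)
        self._jobs = queue.Queue()   # FIFO: first uploaded, first processed
        self.history = []            # (zip_name, status, result or error)
        threading.Thread(target=self._run, daemon=True).start()

    def submit(self, zip_name, **options):
        """Enqueue one ZIP with its per-job feature toggles."""
        self._jobs.put((zip_name, options))

    def _run(self):
        while True:
            zip_name, options = self._jobs.get()
            try:
                result = self._handler(zip_name, **options)
                self.history.append((zip_name, "done", result))
            except Exception as exc:
                # A failed job is recorded and the queue keeps going.
                self.history.append((zip_name, "failed", str(exc)))
            finally:
                self._jobs.task_done()

    def wait(self):
        """Block until every submitted job has finished."""
        self._jobs.join()
```

Because there is only one worker thread, two jobs never compete for the GPU, and a failing ZIP does not block the ones queued behind it.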

⚙️ Fully Automated Workflow

The updated pipeline executes the complete process from input to training output:

Input

1 reference image

4 Grok Imagine 6-second videos

Processing

Frame extraction → ~800 frames (avg.)

Cleaning, cropping, flipping, standardization

Tagging & Character Refinement

Optional Autotag: Automatically tags all images after the Crop-n-Flip stage

Optional AutoChar: Removes tags based on user-defined presets to keep character-specific labels clean

Optional FaceCap: YOLO-based detection extracts clean face close-ups automatically
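The AutoChar idea, removing preset tags from each caption, can be sketched in a few lines. This assumes captions are comma-separated tag strings, as booru-style taggers usually produce. The function `strip_preset_tags` and the preset format (a plain list of tags) are hypothetical.

```python
def strip_preset_tags(caption, preset):
    """Remove every tag listed in `preset` from a comma-separated caption.

    Matching is case-insensitive and ignores surrounding whitespace.
    The order of the remaining tags is preserved.
    """
    blocked = {tag.strip().lower() for tag in preset}
    kept = []
    for tag in caption.split(","):
        tag = tag.strip()
        if tag and tag.lower() not in blocked:
            kept.append(tag)
    return ", ".join(kept)
```

For instance, with a preset of "blue eyes" and "long hair", the caption "1girl, blue eyes, long hair, smile" becomes "1girl, smile". The fixed character traits are then absorbed by the LoRA's trigger instead of being tied to separate tags.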

Output

A training-ready dataset compatible with Civitai Trainer, Pony, SDXL, and custom pipelines

🧠 Training Integration (Beta, but Fully Testable)

FrameForge now includes an optional Train module:

Automatically analyzes dataset size, diversity, face/pose distribution, and overall image quality

Selects optimal hyperparameters for:

epochs

learning rate

rank

resolution

repeats & scheduler settings

Launches a complete LoRA training session

Produces ready-to-use LoRA safetensors

Training is currently in Beta, but already stable enough for real-world testing.

I’m actively looking for feedback during the calibration and fine-tuning phase of the trainer.

If you'd like to help with testing or parameter validation, feel free to contact me via DM or on GitHub.

📦 Queue System (WebUI)

FrameForge’s WebUI introduces a robust job queue:

Add unlimited input ZIPs

Each job is processed sequentially

Results are stored cleanly in job history

Perfect for batch dataset production or hands-off overnight processing

No more waiting for individual runs — just load your tasks and let FrameForge cook.

🖼️ Screenshots

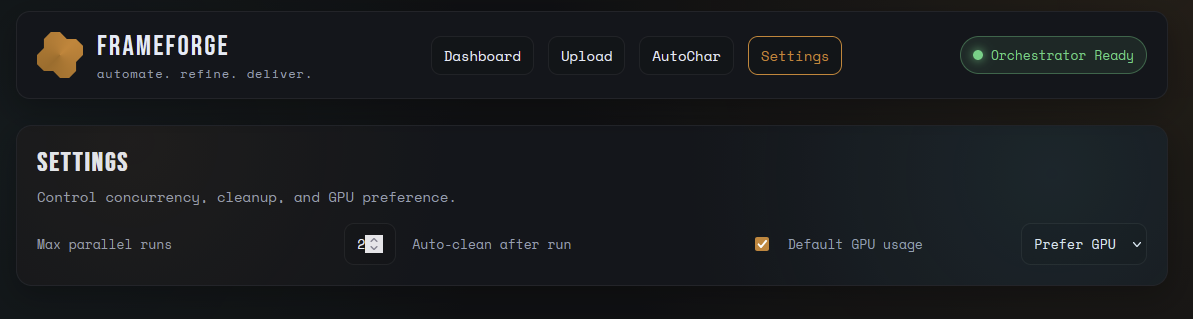

Dashboard / Active Queue / History

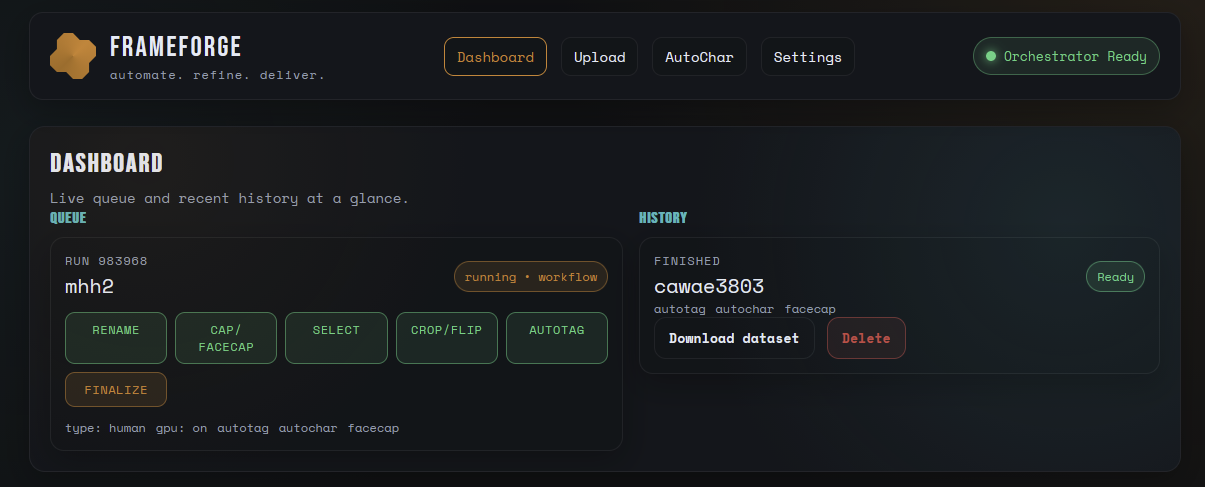

Upload, Mode and AutoChar Selection

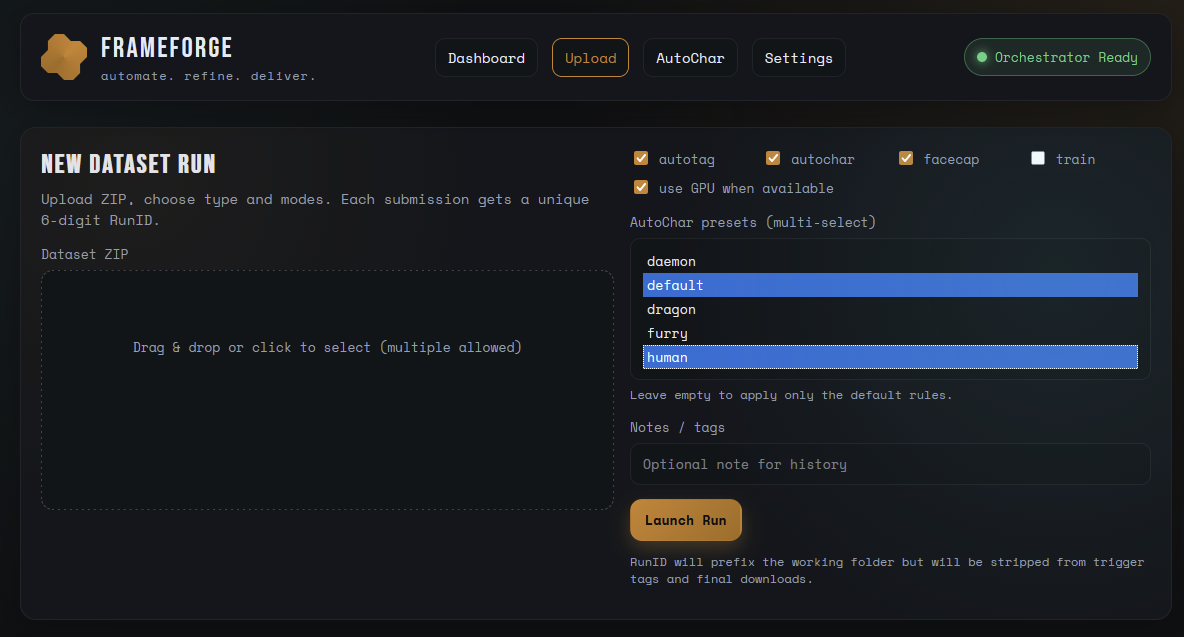

AutoChar Online Editor for AutoTag Removal

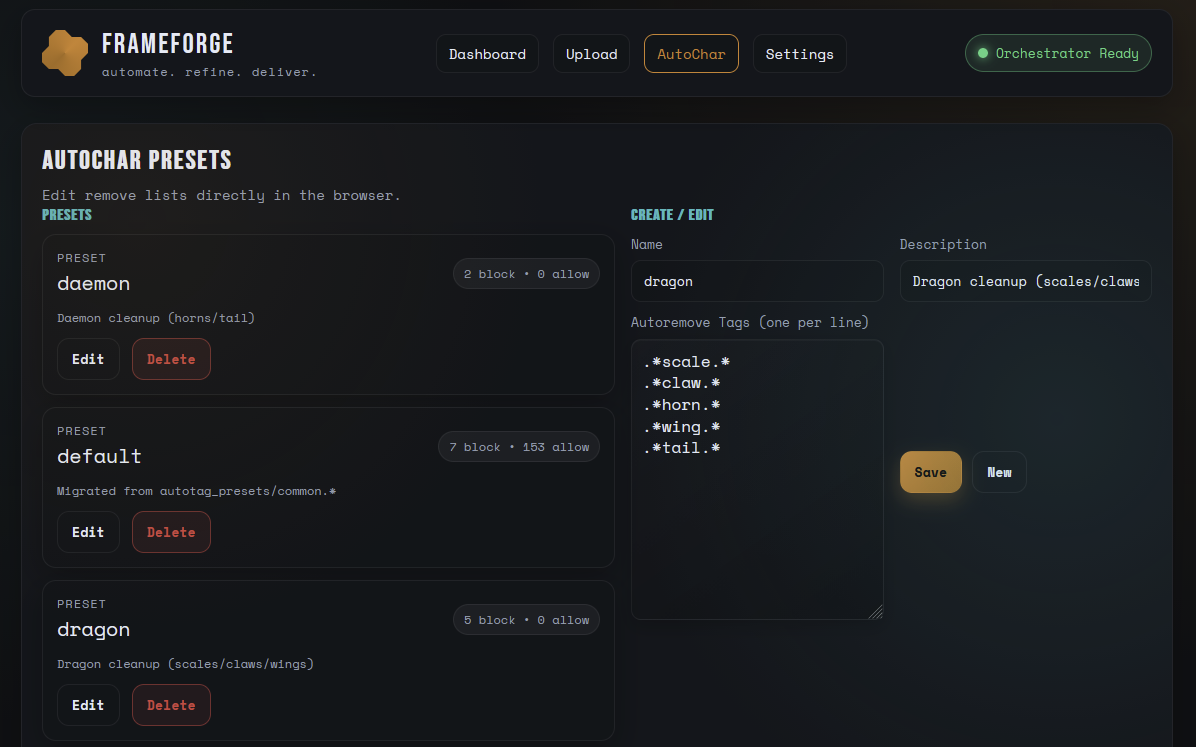

Settings Panel (currently only a mockup)