WHAT'S ACTUALLY HAPPENING:

Diffusion is front-loaded for composition: early steps decide big layout/pose/camera; late steps decide texture, style, micro-structure.

So if you lock the noise + early composition (“disruption”), then swap in a LoRA (or swap prompt conditioning), and finish the last chunk of steps…

…you get two images that feel like they share a “skeleton” but diverge stylistically or semantically.

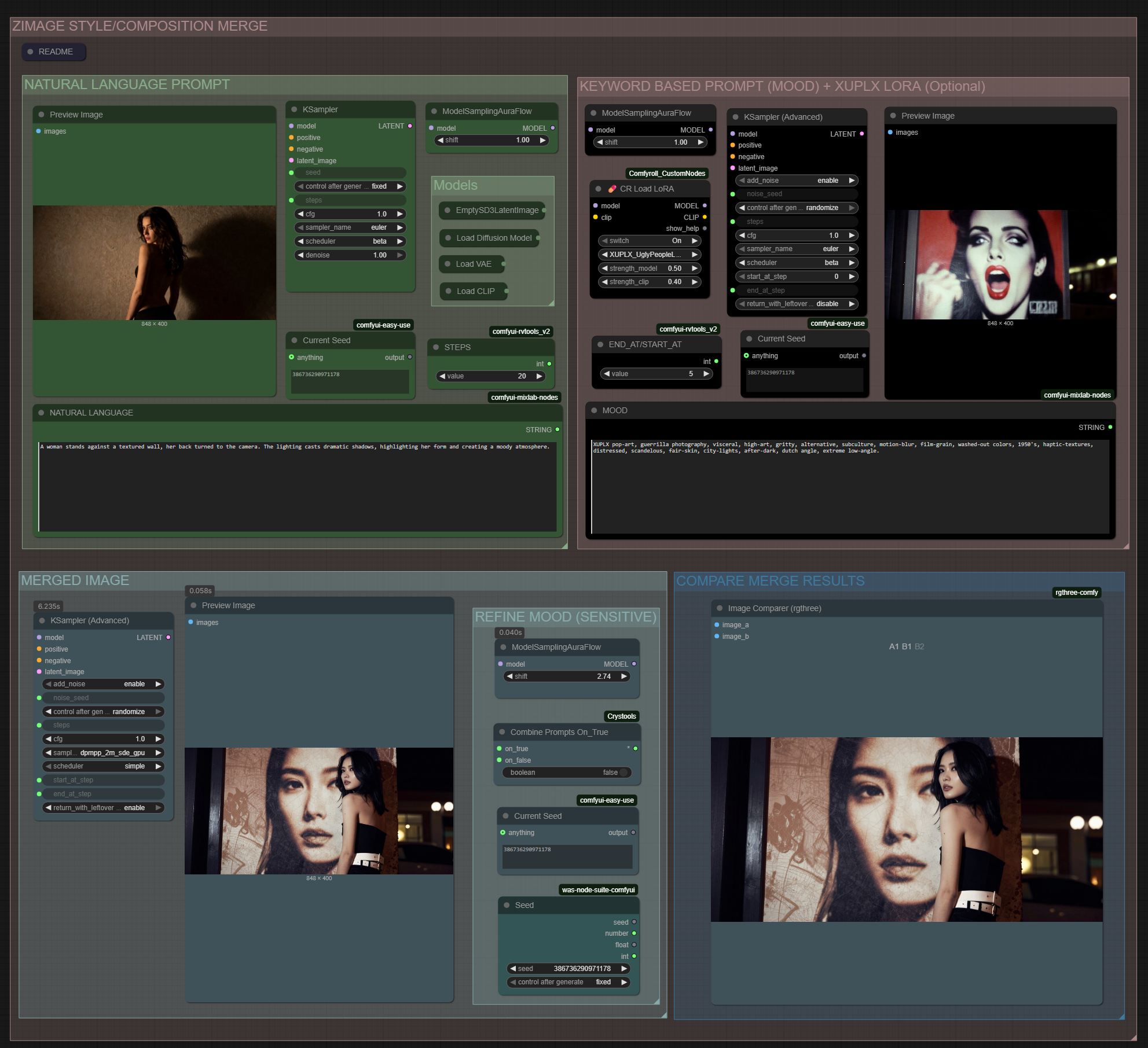

HOW IT WORKS:

Generate MOOD with keywords (optional LoRA + trigger) for the early steps.

Generate NATURAL with a natural-language prompt for the late steps.

The workflow outputs a MERGED image that blends both worlds.
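As a rough sketch of the early/late split described above, the snippet below divides one step schedule into a MOOD pass and a NATURAL pass. The field names are loosely modeled on the start/end step inputs of ComfyUI's KSamplerAdvanced node; whether the included workflow uses that node, and the 20-step / 0.6 split shown here, are assumptions for illustration only. `SamplerPass` and `split_passes` are hypothetical helpers, not part of ComfyUI.

```python
from dataclasses import dataclass


@dataclass
class SamplerPass:
    """Hypothetical container for one sampling pass (not a ComfyUI class)."""
    label: str
    add_noise: bool                    # only the first pass injects fresh noise
    start_at_step: int
    end_at_step: int
    return_with_leftover_noise: bool   # first pass hands over a still-noisy latent


def split_passes(total_steps: int, switch_fraction: float) -> tuple[SamplerPass, SamplerPass]:
    """Split one schedule into an early (MOOD) and a late (NATURAL) pass."""
    if total_steps < 2:
        raise ValueError("need at least 2 steps to split the schedule")
    if not 0.0 < switch_fraction < 1.0:
        raise ValueError("switch_fraction must be strictly between 0 and 1")

    # Clamp so each pass gets at least one step.
    switch_step = min(max(round(total_steps * switch_fraction), 1), total_steps - 1)

    early = SamplerPass("MOOD", True, 0, switch_step, True)
    late = SamplerPass("NATURAL", False, switch_step, total_steps, False)
    return early, late


# Assumed example values: 20 steps, switching prompts/LoRA at 60% of the schedule.
early, late = split_passes(total_steps=20, switch_fraction=0.6)
print(early)
print(late)
```

Both passes must share the same seed and total step count so the late pass continues the early pass's latent instead of starting a new image.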

Customize your blended image by changing the shift value on the ModelSamplingAuraFlow node in the “REFINED MOOD” section: low values lean MOOD, higher values lean NATURAL. The sweet spot is “somewhere in the middle,” so start there and adjust to taste.
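To get a feel for why the shift value moves the blend, here is a small sketch that assumes the node applies the common flow-matching time shift, sigma = shift * t / (1 + (shift - 1) * t). That formula is an assumption about the node's internals, not something stated in this post, and `shifted_sigma` is a hypothetical helper name.

```python
def shifted_sigma(t: float, shift: float) -> float:
    """Assumed flow-matching time shift; t is the unshifted noise level in [0, 1]."""
    return shift * t / (1.0 + (shift - 1.0) * t)


# Compare how much noise remains at the halfway point (t = 0.5) of the schedule.
for shift in (1.0, 2.0, 3.0):
    print(f"shift={shift}: sigma at midpoint = {shifted_sigma(0.5, shift):.3f}")
```

Under this assumption, a higher shift leaves more noise in the latent at the same point in the schedule, so whatever conditioning runs afterwards has more left to decide. That fits the behavior described above, where higher values lean NATURAL.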

Download XUPLX_UglyPeopleLoRA here: https://civitai.com/models/2220894/xuplxuglypeoplelora

WORKFLOW TIP:

Make the MOOD + NATURAL prompts conceptually opposed for maximum “alchemy.”

*The workflow I used to create the banner image (and the workflow showcased in the image above) is included for download.

DON'T BUY ME A COFFEE!!

Download "ExportGPT - Media & Chat Export Toolkit" from the Chrome Web Store. Use it to quickly export assets from ChatGPT and Sora to speed up workflows. I update it regularly, it has a 5-star rating, and it's free forever!

Visit exportgpt.org to see it in action.