Hello, fellow latent explorers!

Introduction

It looks like Z-Image Turbo will be with us for quite some time.

I wrote a guide on generating with it in Forge NEO, and in the process some things changed.

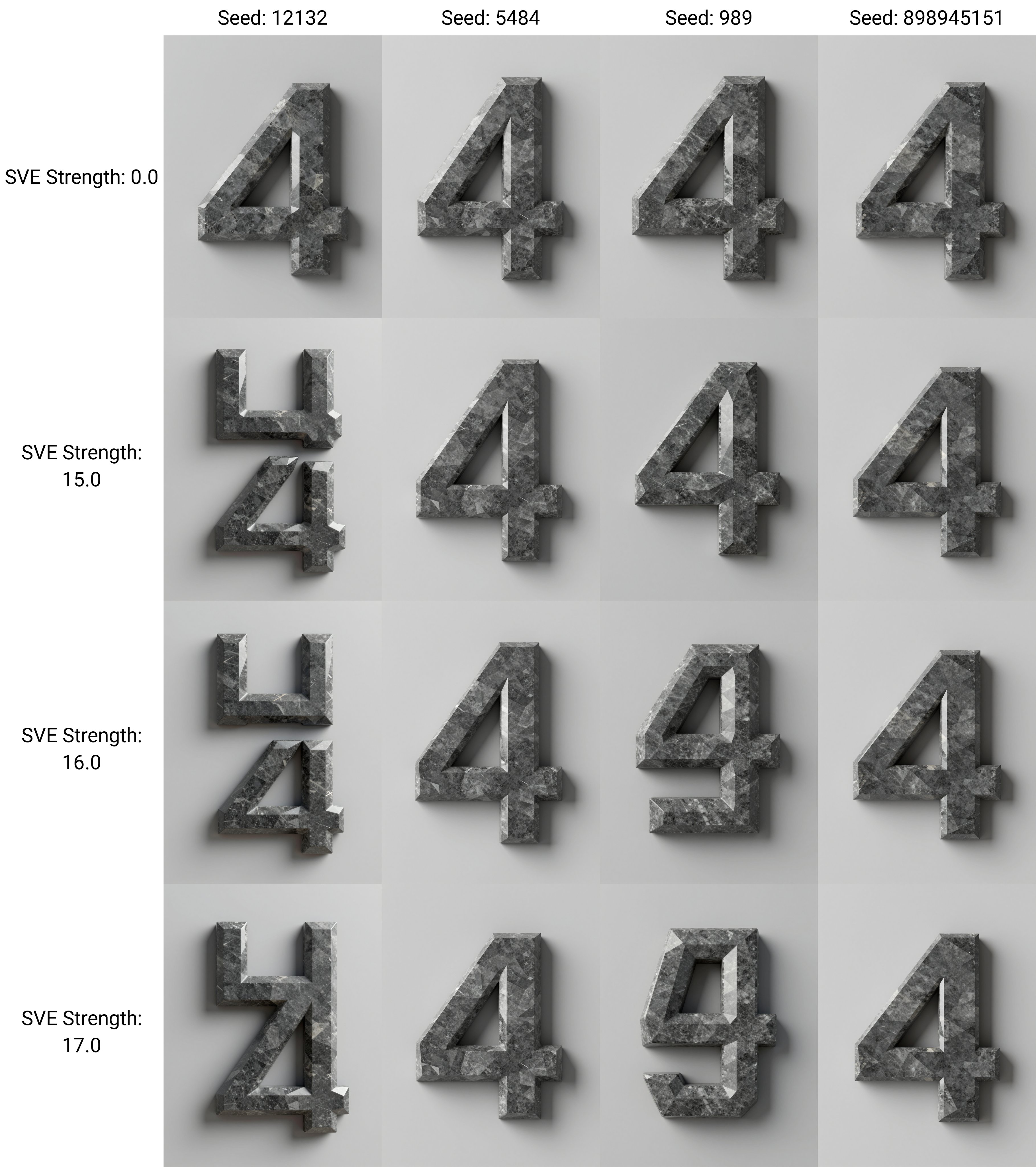

There is a fundamental "problem" with MM-DiT architectures: the combination of a really precise text encoder and flow matching results in really low seed-to-seed variance. It was apparent to some degree in Flux1 and obvious in newer models like HiDream, Qwen Image, etc. It seems that better prompt adherence comes with this:

A highly detailed, three-dimensional representation of the number 4, crafted from dark gray, polished stone or marble with a subtle sheen. The surface is intricately faceted into numerous sharp, geometric planes, creating a low-poly, crystalline appearance. The facets catch the light, resulting in a dynamic interplay of bright highlights and deep shadows that emphasize its complex, angular form. The number is mounted on a plain, smooth, light gray wall, appearing as a modern architectural element or sculpture.

Those images look really similar. With some prompts there is a bit more variation, but usually you get the same face, same background, same pose, same shape, etc. The only way to drastically change the image is to alter the prompt every time.

Is that a problem? Yes. It is not fun. It is better than rolling 200 times for a "perfect seed" as with SD1.5, but it takes away some of the fun: rolling for something "interesting".

This is where the community showed its power. People hooked up LLMs to rewrite prompts on every generation and introduced wildcards.

The idea of injecting random noise into the text conditioning was floating in the air. I myself brought it up on Reddit fairly early.

Then the trick of using an empty prompt for the first couple of steps was born. This makes sampling start from an unrelated image and then shifts it towards the prompt. It was introduced to Forge by its creator, and the results were mixed: it slaughtered prompt adherence and shifted the image too much.
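To make the mechanism concrete, here is a minimal sketch of the empty-prompt trick, assuming the sampler can pick a different conditioning for each step. `conditioning_schedule`, `total_steps` and `empty_steps` are hypothetical names for illustration, not Forge's actual code.

```python
from typing import List


def conditioning_schedule(total_steps: int, empty_steps: int) -> List[str]:
    """Label which conditioning each sampling step would use (hypothetical helper).

    The first `empty_steps` steps use the empty prompt, so the composition starts
    from something unrelated; the remaining steps pull it towards the real prompt.
    """
    return ["empty" if step < empty_steps else "prompt" for step in range(total_steps)]


print(conditioning_schedule(total_steps=8, empty_steps=2))
# ['empty', 'empty', 'prompt', 'prompt', 'prompt', 'prompt', 'prompt', 'prompt']
```

The more steps run on the empty prompt, the more the composition is decided before the prompt ever kicks in, which fits the loss of prompt adherence described above.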

While I was writing my guide, SeedVarianceEnhancer was introduced to ComfyUI and quickly adopted by NEO. It injects random noise into the conditioning during the first steps to "break the image, but not that much".

I tried to find a sweet spot for the parameters, but the results were mixed.

It either did not introduce enough variation for my liking, or it still slaughtered prompt adherence: most characters were now random Asians, and text was broken. And even when everything held together, I still got the same face for every seed, which was meh.

The main parameters of the original implementation are the percentage of conditioning and the strength.

The default values of 0.6 and 32 mean that random noise is added to 60% of the text conditioning with a multiplier of 32.
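A minimal plain-Python sketch of what those two defaults mean, assuming the 60% picks a random subset of individual conditioning values and the noise is uniform in [-1, 1] (as described further down). `inject_noise` is a hypothetical name, not the extension's function; the real code works on torch tensors and only during the first steps.

```python
import random
from typing import List


def inject_noise(cond: List[float], percent: float, strength: float, seed: int) -> List[float]:
    """Return a copy of `cond` with scaled uniform noise added to a random subset of values.

    Hypothetical sketch: `percent` of the positions (0.6 = 60%) are picked at
    random, and each gets noise drawn from [-1, 1] multiplied by `strength`.
    """
    rng = random.Random(seed)  # seeded, so the same seed gives the same variation
    noisy = list(cond)
    count = round(len(cond) * percent)
    for i in rng.sample(range(len(cond)), count):
        noisy[i] += rng.uniform(-1.0, 1.0) * strength
    return noisy


# Defaults of the original: 60% of the conditioning, strength 32.
embedding = [0.01 * i for i in range(10)]  # stand-in for a text-conditioning vector
print(inject_noise(embedding, percent=0.6, strength=32.0, seed=42))
```

Note how a multiplier of 32 dwarfs the original values: anything that lands in the selected 60% is effectively overwritten, while the other 40% is untouched.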

I quickly figured out that with certain prompts the main character was falling outside those 60%. Also, the drop introduced by ending the variation hit the image hard, but a lower strength introduced almost no variation.

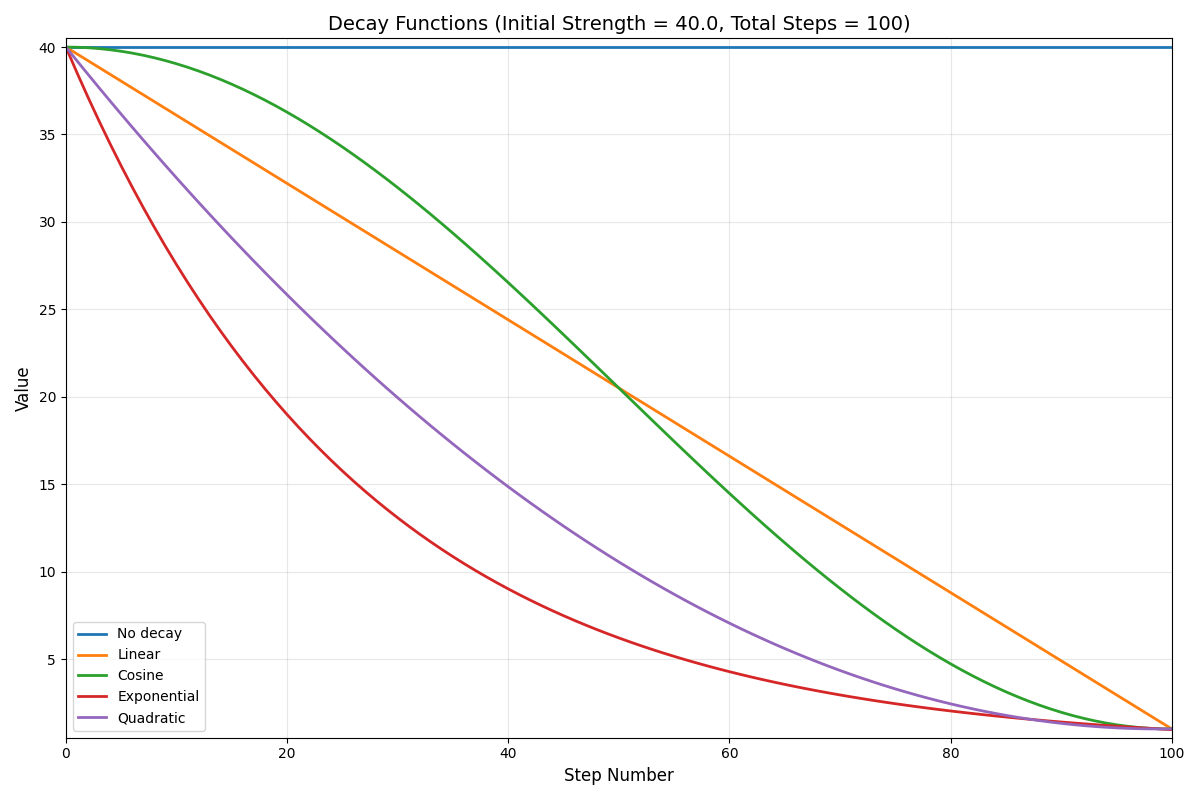

This gave me a hint. If the image still converges well with a low amount of injected noise, why not apply a decay function to it? This way the noise "naturally" decays to a lower value, and I can keep injecting it for longer to prevent the model from drawing the same face and other features the prompt settled on. So, with nonexistent coding experience, a vague knowledge of Python and zero knowledge of Gradio, I decided to make it myself.

First I tried to remember the math from university that I never really used in my work or day-to-day life after graduating.

At first I implemented linear, cosine, exponential and quadratic functions that end at 1. Results were... mixed. While they looked good at first glance, exponential decayed too early and cosine was too slow. But it became apparent that the "drop" is needed to make it work; otherwise I got Asians everywhere no matter what I asked for.
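Here is a sketch of what such decay curves might look like, assuming each one maps sampling progress t (0 at the first step, 1 at the last) onto a strength multiplier going from 1 down to 0. These exact formulas, the `DECAYS` table and the steepness `K` are my illustration, not the extension's code.

```python
import math
from typing import Callable, Dict

K = 5.0  # steepness of the exponential curve; an arbitrary illustrative value


def exponential(t: float) -> float:
    # exp(-K*t) rescaled so it starts at exactly 1 and ends at exactly 0
    return (math.exp(-K * t) - math.exp(-K)) / (1.0 - math.exp(-K))


# Each (hypothetical) curve maps progress t in [0, 1] to a noise-strength multiplier.
DECAYS: Dict[str, Callable[[float], float]] = {
    "none": lambda t: 1.0,                                     # constant strength
    "linear": lambda t: 1.0 - t,
    "cosine": lambda t: 0.5 * (1.0 + math.cos(math.pi * t)),  # flat at the start
    "quadratic": lambda t: 1.0 - t * t,                        # slow early drop that speeds up
    "exponential": exponential,                                # most of the drop happens early
}

for name, curve in DECAYS.items():
    print(name, [round(curve(step / 4), 2) for step in range(5)])
```

Printing the five sample points per curve makes the behavior described above easy to see: exponential has lost most of its strength by the first quarter, while cosine and quadratic barely move at the start.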

The next step was adding the ability to select different functions for different parts of sampling, for example starting with no decay for a few steps, plus thresholds to move those transition points.
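One way to chain curves between thresholds, as a sketch under my own assumptions: each segment is a hypothetical `(end_fraction, curve, end_level)` tuple, and within a segment the multiplier moves from the previous segment's level to `end_level` following the chosen curve. This is not necessarily how the fork stores or evaluates its thresholds.

```python
import math
from typing import List, Tuple

# Hypothetical curves over local progress u in [0, 1], each going from 1 to 0.
CURVES = {
    "none": lambda u: 1.0,
    "linear": lambda u: 1.0 - u,
    "quadratic": lambda u: 1.0 - u * u,
    "exponential": lambda u: (math.exp(-5.0 * u) - math.exp(-5.0)) / (1.0 - math.exp(-5.0)),
}

# (threshold as a fraction of all steps, curve name, level reached at that threshold)
Segment = Tuple[float, str, float]


def multiplier(step: int, total_steps: int, segments: List[Segment]) -> float:
    """Strength multiplier for `step`, chaining curves between thresholds."""
    t = step / max(total_steps - 1, 1)  # overall progress in [0, 1]
    start, level = 0.0, 1.0
    for end, curve, end_level in segments:
        if t <= end:
            u = (t - start) / (end - start) if end > start else 1.0
            return end_level + (level - end_level) * CURVES[curve](u)
        start, level = end, end_level
    return level  # past the last threshold: hold the final level


# Full strength for the first 30% of steps, then a linear decay to zero.
segments = [(0.3, "none", 1.0), (1.0, "linear", 0.0)]
print([round(multiplier(s, 11, segments), 2) for s in range(11)])
```

Keeping each segment's start pinned to the previous segment's end level avoids the hard "drop" at segment boundaries, unless you deliberately set a lower level.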

Then I had to figure out how to add it to the X/Y/Z plot for proper comparisons, and how to make pasting parameters work.

Did you know that if you name fields decay1 and decay2, Gradio cannot figure out where to put them after pasting because the names are too similar? I didn't, and wasted 3 days figuring it out.

Now it was promising, but something felt off.

After further testing I noticed some outliers: some seeds tend to "explode" at relatively low strength.

The injected noise is basically a tensor with values from -1 to 1, and it seems that sometimes values close to ±1 get the full multiplier and push exactly the part of the conditioning that matters too far.

That's why I introduced "clamping": a torch.clamp call that limits the range of the noise values.
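A plain-Python sketch of the idea, assuming the clamp is applied to the raw [-1, 1] noise before the strength multiplier (the order is my assumption, but it is consistent with a clamp of 1 changing nothing). In PyTorch the same step would be `torch.clamp(noise, -clamp, clamp) * strength`; `clamped_noise` itself is a hypothetical helper.

```python
import random
from typing import List


def clamped_noise(n: int, clamp: float, strength: float, seed: int) -> List[float]:
    """Uniform [-1, 1] noise, limited to [-clamp, clamp], then scaled by `strength`.

    Hypothetical helper mirroring torch.clamp(noise, -clamp, clamp) * strength.
    With clamp = 1.0 the noise passes through unchanged.
    """
    rng = random.Random(seed)
    return [max(-clamp, min(clamp, rng.uniform(-1.0, 1.0))) * strength for _ in range(n)]


# With clamp 0.5 and strength 32, no single value can move by more than 16.
print(clamped_noise(5, clamp=0.5, strength=32.0, seed=7))
```

Clamping caps the worst-case push on any single value while leaving the bulk of the noise distribution alone, which targets exactly the "exploding" outliers.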

The thing

You can try the results yourself; the fork is here. There's no need to install the whole thing: just download the files in \extensions-builtin\seed-variance-enhancer

I also opened a PR for it; I hope it gets merged.

It introduces a whole new set of parameters for you to tinker with.

Setting clamping to 1 and the functions to no decay reverts the whole thing to the original behavior.

That's a lot of new options, but the best combination I have found so far is:

Drop slowly but steadily for a couple of steps with quadratic, then drop fast with exponential, then finish with a simple linear to top it off (or rather bottom it out, in this case). All of this over all steps and 100% of the conditioning.
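Here is a rough sketch of that shape, assuming the three curves chain into each other and the strength value is multiplied by the result on every step. The breakpoints (0.25, 0.6) and levels (0.8, 0.2) are made-up illustrative numbers, not the values behind the "Make it good" button; `recipe_multiplier` and `strength_schedule` are hypothetical names.

```python
import math
from typing import List


def recipe_multiplier(t: float) -> float:
    """Hypothetical quadratic -> exponential -> linear decay over progress t in [0, 1]."""
    if t <= 0.25:  # quadratic: slow but steady drop from 1.0 to 0.8
        u = t / 0.25
        return 0.8 + 0.2 * (1.0 - u * u)
    if t <= 0.6:  # exponential: fast drop from 0.8 to 0.2
        u = (t - 0.25) / 0.35
        k = 5.0  # illustrative steepness
        return 0.2 + 0.6 * (math.exp(-k * u) - math.exp(-k)) / (1.0 - math.exp(-k))
    u = (t - 0.6) / 0.4  # linear: bottom it out from 0.2 to 0.0
    return 0.2 * (1.0 - u)


def strength_schedule(strength: float, total_steps: int) -> List[float]:
    """Per-step noise strength; noise is applied on every step, to all of the conditioning."""
    return [strength * recipe_multiplier(s / max(total_steps - 1, 1)) for s in range(total_steps)]


# 26 is the suggested starting strength for ZIT further down in this post.
print([round(x, 1) for x in strength_schedule(26.0, 9)])
```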

I also added a "Make it good" button with those settings (it does not update visually, but internally the number of applied steps is set to all of them). The parameters are set to the values I deemed worthy for my generation config and greyed out. Are they perfect? No idea. That's why I left all the controls accessible to you.

Except for strength.

I was not able to find a sweet spot for it. Prompts are just not equal. Sometimes text does not degrade even at a strength of 40. Sometimes space becomes a beach at just 18.

The only general pattern I was able to pick up is that shorter prompts require less strength to break. Hard limits? None. Some really long prompts also tend to break more easily. Realistic prompts seem to hold up better, probably due to the model's distilled nature.

Seeds also influence the whole thing a lot. Some seeds just don't introduce much variance. That's the random nature of this whole thing...

I'd say a good starting value is 26 for ZIT; most longer prompts did not break at it. Use 16 for prompts that are around 2 sentences long.

But it is fun to YOLO it to 40-50 and just watch complete randomness.

Some comparisons

So that you can get the point. Prompts and configs are included.

https://civitai.com/posts/25311060

You can open an image, right-click it and open it in a new tab to see it in full. All images are compressed JPEGs because they are just too big.

https://civitai.com/images/114701939

25 seems enough; after that, odd stuff starts happening.

At 40, the last seed shifted the whole thing to realistic, but overall prompt following is really good. This was one of the prompts I used for comparison, and around 30% of seeds tend to fall into realism a lot faster.

https://civitai.com/images/114701946

Some typography borrowed from Ideogram.

Interestingly, it holds really well even at 40, and 25 is just barely enough. My guess is that there were quite a few images with this exact text in the training data.

https://civitai.com/images/114701977

At 32 there is an extra comma after LAD. Yet the helmet has no variation even at 40.

https://civitai.com/images/114701984

This prompt holds too well; that's exactly what I was talking about earlier. Almost no variation at 15, and a random Asian on the 3rd seed at 32.

https://civitai.com/images/114701990

Some funky typography. Interestingly, the third seed got fixed at strength 40. A perfect example of seed variation actually improving prompt adherence (even in other seeds).

https://civitai.com/images/114702013

A prompt suggested on Reddit. At strength 0 (basically the extension turned off), only one of the seeds has 2 girls in the background. None has UFO CATCHER written correctly. Interestingly, strength 40 seems to fix the text, but breaks too much other stuff. This generally means the prompt should be reworded.

Dismembered cows even at 25.

https://civitai.com/images/114702018

One of the short prompts I tested. 3 of the seeds are kind of samefacey at strength 0. At 32 this effect goes away. It's one of the short realistic prompts that hold up well.