Images generated from SDXL and DALL-E 3 are logged in this Google Doc. I might add more in the future.

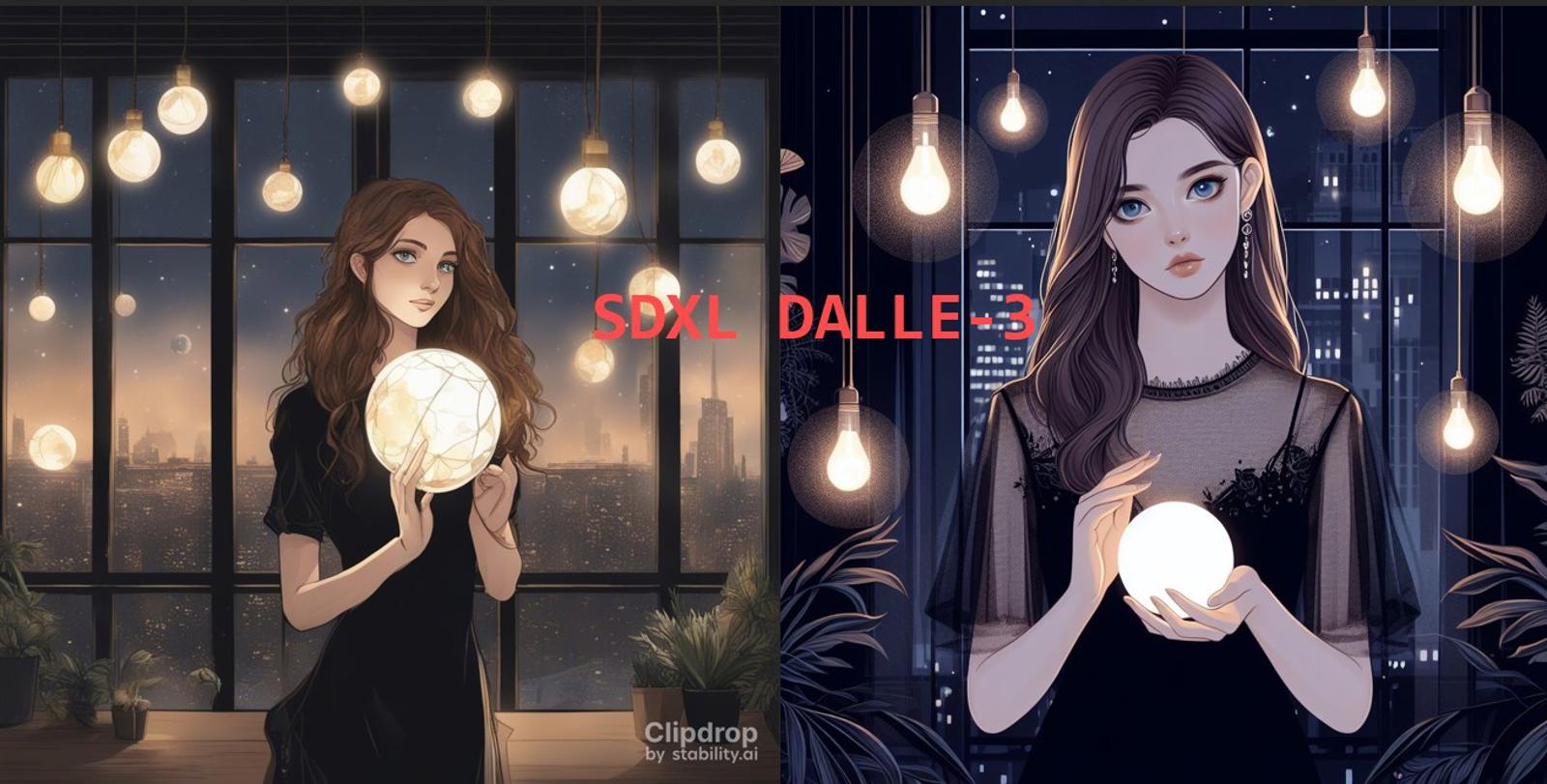

Training anime models with Booru tags is common practice, but the inherent ambiguity of the tag-based captioning can be troublesome at times and necessitates a richer language. I asked GPT4-V (Bing) to generate some image captions from some handpicked images and used the same captions to generate images with SDXL (Clipdrop) and DALL-E 3 (Bing Image Creator). Some thoughts/observations:

Though not quite at the same level as DALL-E 3, you can go quite far with natural captions with SDXL in some cases. I'm surprised at how accurate the gens get with the hanging lights image.

SDXL struggles with more complex prompts. It's still better than random though.

DALL-E 3's safety checker is way too aggressive, frequently blocking innocuous prompts.

GPT4-V's captioning seems to follow a certain template.

Like most language models, GPT4-V hallucinates.

Bing blurring faces is annoying.

This shows that training anime models with natural language is a reasonable option (as some seems to have already done). Hope we get more base models following this direction.