Background / Why this tutorial exists

This workflow comes with very little usable documentation.

https://civitai.com/models/448101?modelVersionId=591027

Even ChatGPT could not really help, and for TheGeekyGhost the workflow logic is obvious, so from his perspective there was not much to explain.

For me, however, it took two full days to fully understand how it actually works.

Since other users also asked for a tutorial, I decided to write one myself, with images and clear steps.

Required additional resources (step by step)

Pose reference pack (linked in the original WF description)

You need to download the pose images linked in the workflow description.

https://civitai.com/models/56307?modelVersionId=63973

Inside that download you will find two main folders:

512 by 512 crops

full sized base images

Each of these contains 4 subfolders:

2 running animations

2 walking animations

Inside those, you will find direction-based subfolders, for example:

L = Left

R = Right

B = Back

etc.

👉 Take some time to explore the folder structure; it is important to understand how it is organized.

⚠️ This is critical

The IP Adapter reads the motion skeletons from here.
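To get a quick overview of the unzipped pose pack, a small script can print which direction subfolders each animation folder contains. This is only a sketch: the function name `list_direction_folders` is made up for this example, and it assumes the three-level layout described above (size folder, animation folder, direction folder).

```python
from pathlib import Path


def list_direction_folders(pack_root):
    """Map 'size folder/animation folder' to its sorted direction subfolders.

    Assumes the layout described in the tutorial:
    pack_root / <size folder> / <animation folder> / <direction folder>
    """
    root = Path(pack_root)
    layout = {}
    for size_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for anim_dir in sorted(p for p in size_dir.iterdir() if p.is_dir()):
            directions = sorted(d.name for d in anim_dir.iterdir() if d.is_dir())
            layout[f"{size_dir.name}/{anim_dir.name}"] = directions
    return layout


# Example call (adjust the path to wherever you unzipped the pack):
# for name, dirs in list_direction_folders("characterWalkingAndRunning_betterCrops").items():
#     print(name, dirs)
```

If a direction you expect is missing from the printed list, check the unzipped folders before touching the workflow.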

IP Adapter – required models

You need several IP Adapter related models.

Official repo and instructions:

https://github.com/cubiq/ComfyUI_IPAdapter_plus?tab=readme-ov-file#installation

Minimum required models (space-saving setup)

If you want to save disk space, this is the minimum setup.

Either:

Note: with this setup, the IP Adapter strength will be strong. You need to choose 'PLUS (high strength)'.

You need to select it in the 'Second group' as well!

/ComfyUI/models/clip_vision

CLIP-ViT-H-14-laion2B-s32B-b79K.safetensors, download and rename

/ComfyUI/models/ipadapter, create it if not present

ip-adapter_sd15.safetensors, Basic model, average strength

ip-adapter_sdxl_vit-h.safetensors, SDXL model

ip-adapter-plus_sdxl_vit-h.safetensors, SDXL plus model

ip-adapter-plus-face_sdxl_vit-h.safetensors, SDXL face model

OR

Note: with this setup, the IP Adapter strength will be medium, not maximum. You need to choose 'VIT-G (medium strength)'.

You need to select it in the 'Second group' as well!

/ComfyUI/models/clip_vision

CLIP-ViT-bigG-14-laion2B-39B-b160k.safetensors, download and rename

/ComfyUI/models/ipadapter, create it if not present

ip-adapter_sd15.safetensors, Basic model, average strength

ip-adapter_sd15_vit-G.safetensors, Base model, requires bigG clip vision encoder

ip-adapter_sdxl.safetensors, vit-G SDXL model, requires bigG clip vision encoder
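The two setups above can be written down as a small lookup table and checked against your models folder. This is a rough sketch, not part of the workflow: `REQUIRED` and `missing_files` are names invented for this example, and the file names are simply the ones listed above.

```python
from pathlib import Path

# File names copied from the two setups above, keyed by the preset name
# that has to be selected in the workflow.
REQUIRED = {
    "PLUS (high strength)": {
        "clip_vision": ["CLIP-ViT-H-14-laion2B-s32B-b79K.safetensors"],
        "ipadapter": [
            "ip-adapter_sd15.safetensors",
            "ip-adapter_sdxl_vit-h.safetensors",
            "ip-adapter-plus_sdxl_vit-h.safetensors",
            "ip-adapter-plus-face_sdxl_vit-h.safetensors",
        ],
    },
    "VIT-G (medium strength)": {
        "clip_vision": ["CLIP-ViT-bigG-14-laion2B-39B-b160k.safetensors"],
        "ipadapter": [
            "ip-adapter_sd15.safetensors",
            "ip-adapter_sd15_vit-G.safetensors",
            "ip-adapter_sdxl.safetensors",
        ],
    },
}


def missing_files(models_dir, preset):
    """Return the 'subfolder/file' entries of a preset that are not on disk."""
    models = Path(models_dir)
    return [
        f"{sub}/{name}"
        for sub, names in REQUIRED[preset].items()
        for name in names
        if not (models / sub / name).is_file()
    ]


# Example call (the ComfyUI path is an assumption, use your own):
# print(missing_files("ComfyUI/models", "VIT-G (medium strength)"))
```

An empty list means every file for that preset is in place with the expected name.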

AnimateLCM (AnimateDiff / AnimateLCM)

I only tested this workflow with the following model:

https://huggingface.co/wangfuyun/AnimateLCM/tree/main

File:

AnimateLCM_sd15_t2v.ckpt

Place it here:

ComfyUI/models/animatediff_models/

⚠️ I did not test other AnimateDiff / AnimateLCM models. Others might work, but this one is confirmed.

ControlNet models: OpenPose

You can download it from:

https://huggingface.co/Lucetepolis/FuzzyHazel/tree/main

File:

controlnet11Models_openpose.safetensors

Place it here:

ComfyUI/models/controlnet/

⚠️ I did not test other models. Others might work, but this one is confirmed.

Required background removal model

You also need to download the RMBG model:

Create this folder if it does not exist:

ComfyUI/models/rembg

Download the model file from:

https://huggingface.co/briaai/RMBG-1.4/tree/main

Rename the file exactly as:

briarmbg.pth

⚠️ Important:

Do not use .safetensors or any other file name. The workflow will throw an error if the file is named incorrectly.

This model is required for the automatic background removal steps in the workflow.
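If you prefer to script the folder creation and renaming, something like the following would do it. Treat it as a sketch: `install_rmbg` is a made-up helper name, and because this tutorial does not state the name of the downloaded file, you pass in whatever path your download ended up at.

```python
import shutil
from pathlib import Path


def install_rmbg(downloaded_file, comfy_root):
    """Copy the downloaded RMBG weights to models/rembg/briarmbg.pth.

    downloaded_file: path of the file you downloaded (name is up to you).
    comfy_root: your ComfyUI installation folder.
    """
    src = Path(downloaded_file)
    # The tutorial warns against the .safetensors variant, so refuse it here.
    if src.suffix == ".safetensors":
        raise ValueError("Use the .pth file, not the .safetensors one")
    target_dir = Path(comfy_root) / "models" / "rembg"
    target_dir.mkdir(parents=True, exist_ok=True)  # create the folder if missing
    target = target_dir / "briarmbg.pth"
    shutil.copy2(src, target)
    return target


# Example call (both paths are placeholders):
# install_rmbg("Downloads/your_downloaded_file.pth", "ComfyUI")
```

The helper copies instead of moving, so the original download stays where it was.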

Automatic model downloads on first run

On the first run, the workflow will automatically download additional required models.

This is expected behavior.

For example:

ComfyUI/models/rembg/isnet-anime.onnx

100%|████████████████████████████████████████| 176M/176M [00:00<00:00, 830GB/s]

If you see downloads like this in the console, nothing is wrong. Just let the process finish.

After these models are downloaded once, subsequent runs will be faster.

Model note (important)

The workflow’s default model, “GeekyGhost LCM V2”, is no longer available.

Good news:

It works with the V1 version

And in theory any LCM SD 1.5 model can be used

I tested several of them.

⚠️ Important:

Non-LCM models produced poor or unusable results, so I strongly recommend sticking to LCM SD 1.5 models.

Final step

After downloading and placing all required files,

👉 restart ComfyUI.
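Before restarting, it can save a failed run to confirm that the AnimateLCM, ControlNet and background removal files are where the workflow expects them. A minimal sketch, assuming the file names and folders given earlier in this tutorial; `REQUIRED_FILES` and `check_models` are names chosen for this example only.

```python
from pathlib import Path

# Paths relative to ComfyUI/models, taken from the steps above.
REQUIRED_FILES = [
    "animatediff_models/AnimateLCM_sd15_t2v.ckpt",
    "controlnet/controlnet11Models_openpose.safetensors",
    "rembg/briarmbg.pth",
]


def check_models(models_dir):
    """Return {relative path: True/False} depending on whether each file exists."""
    models = Path(models_dir)
    return {rel: (models / rel).is_file() for rel in REQUIRED_FILES}


# Example call (the ComfyUI path is an assumption, use your own):
# for rel, found in check_models("ComfyUI/models").items():
#     print("OK     " if found else "MISSING", rel)
```

Your LCM checkpoint is not in the list because its file name depends on which model you picked.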

After restart: configuring the workflow

After restarting ComfyUI, we should now be able to configure everything properly.

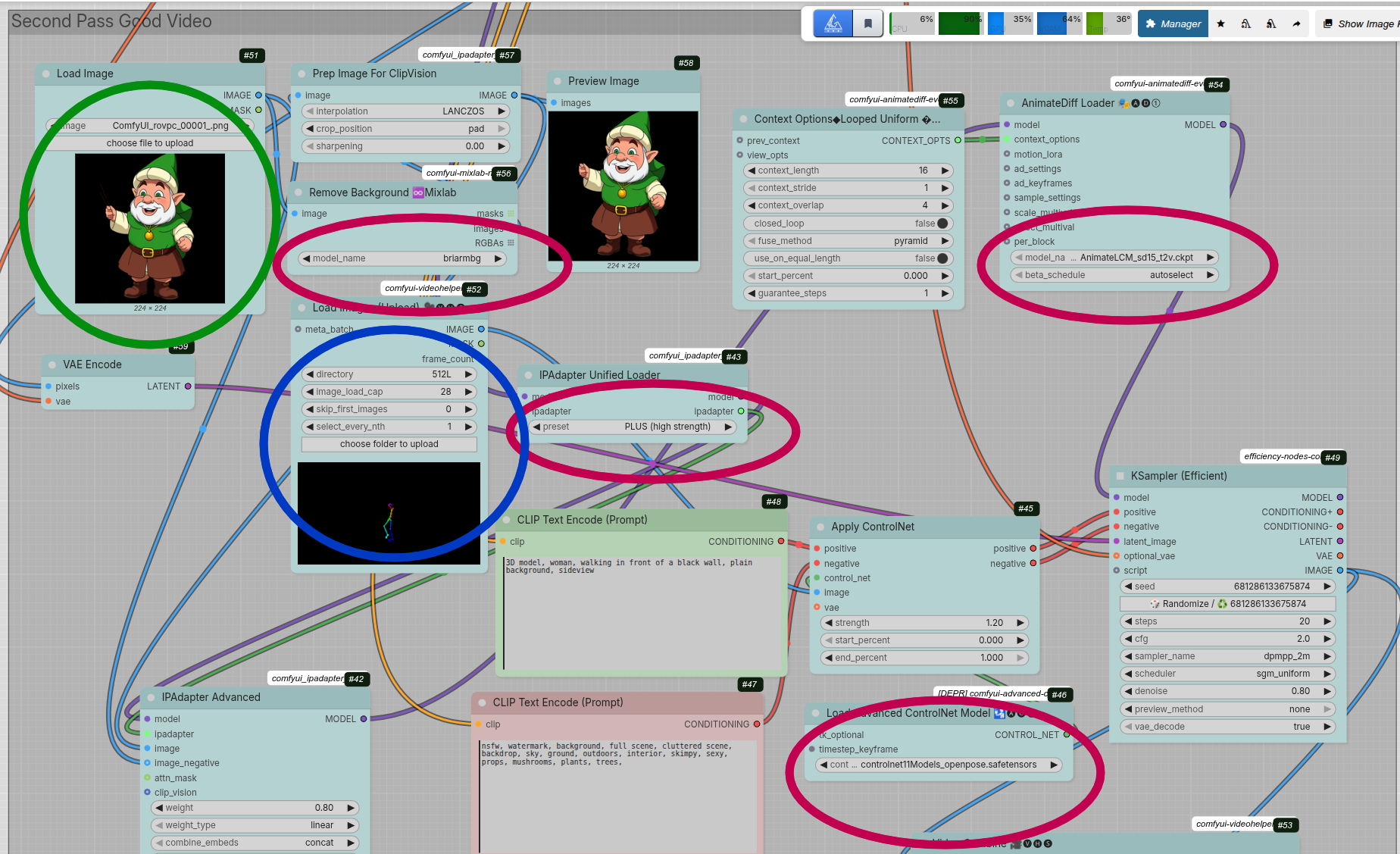

In the screenshots:

🔴 Red circles show where models must be selected (check)

🟧 This must be checked in both groups:

the peach-colored “First pass”

the gray “Second pass”

In the center of the picture: Load Checkpoint. LCM models work best.

below it: IPAdapter preset (VIT-G or PLUS)

a little up and to the left: Remove Background model, briarmbg!

top-right: AnimateDiff model, AnimateLCM_sd15_t2v; you can also try other LCM models

center-right: Controlnet model

Source image

🟢 Green circles mark the starting image

This is the image from which the workflow generates the sprite

You must provide this image in both groups as well.

💡 Tip:

After a few seconds, you can see the result of the background removal in the rmbg Preview.

If the background removal is very poor:

Fix it manually

Use the cleaned image as the new source image

This greatly improves the final result.

Generating directions (Left, Right, Back, etc.)

Each movement direction must be generated separately.

Because of this, I strongly recommend:

When you get a result you like, set the Seed to fixed

Write the seed value down

Use the same seed to generate the other directions

This helps keep the character consistent.

Folder selection per direction

This is how it works:

In the First pass group

In the blue-circled node:

Load Images / VHS

Select (unzipped folders):

characterWalkingAndRunning_betterCrops/

full sized base images/

woman walking bones/

L

using “choose folder to upload”

👉 This example is for L = Left.

In the Second pass group

Select:

characterWalkingAndRunning_betterCrops/

512 by 512 crops/

woman walking bones/

L

Again, L for Left.

When generating another direction:

Change both folders accordingly

(for example from L to R)
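The rule above (full sized images for the First pass, 512 crops for the Second pass, same animation and direction in both) can be sketched as a tiny helper. It only builds the folder paths and a per-direction list with one shared seed so that nothing gets mixed up; it does not drive ComfyUI. The function names and the seed value in the example are made up.

```python
from pathlib import PurePosixPath

PACK = "characterWalkingAndRunning_betterCrops"
FIRST_PASS_SET = "full sized base images"   # used in the First pass group
SECOND_PASS_SET = "512 by 512 crops"        # used in the Second pass group


def pose_folders(animation, direction, pack_root=PACK):
    """Return the folder to select in each pass for one animation and direction."""
    root = PurePosixPath(pack_root)
    return {
        "first_pass": str(root / FIRST_PASS_SET / animation / direction),
        "second_pass": str(root / SECOND_PASS_SET / animation / direction),
    }


def generation_plan(animation, directions, seed):
    """One entry per direction, all sharing the same fixed seed."""
    return [
        {"direction": d, "seed": seed, **pose_folders(animation, d)}
        for d in directions
    ]


# Example: the seed is a placeholder for the value you wrote down.
for step in generation_plan("woman walking bones", ["L", "R"], seed=123456):
    print(step["direction"], step["seed"], step["first_pass"], step["second_pass"])
```

Paths are printed with forward slashes for readability; on Windows you select the same folders through the upload dialog.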

Running the workflow

If everything is set correctly:

Press Run

The workflow will generate:

a video (GIF)

and then a sprite sheet

📝 Note:

In the First pass group, the VideoCombine node is completely unnecessary

You can safely bypass or delete it

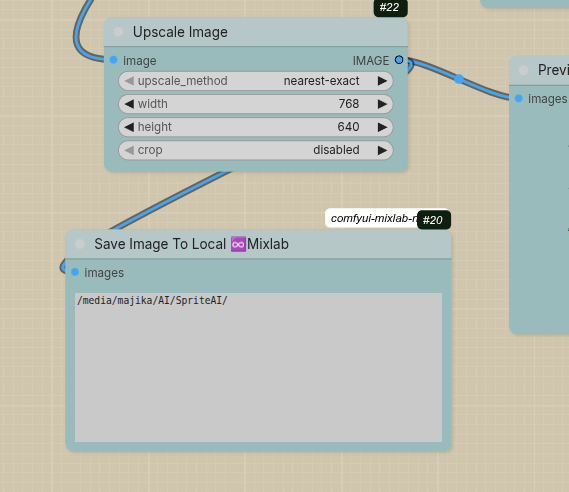

Saving the sprite sheet

In the bottom-left corner of the workflow, you will find: "Save image to local"

Here you must specify the folder where the sprite sheet should be saved.

Examples:

Windows:

C:\your\folder\

Linux:

/your/folder

⚠️ If you do not set this:

The images will end up in the temp folder

Everything else is saved to the default output directory
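I did not check whether the save node creates a missing folder by itself, so it may be safer to create the target folder up front and confirm it is writable. A small sketch; `prepare_save_folder` is a hypothetical helper, not something the workflow provides.

```python
import tempfile
from pathlib import Path


def prepare_save_folder(folder):
    """Create the folder if needed, verify it is writable, return its full path."""
    path = Path(folder).expanduser()
    path.mkdir(parents=True, exist_ok=True)
    # Creating a throwaway file raises an error if the folder is not writable.
    with tempfile.TemporaryFile(dir=path):
        pass
    return path.resolve()


# Example call (placeholder path):
# print(prepare_save_folder("/your/folder"))
```

Paste the printed path into the "Save image to local" node.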

Final notes

I hope I didn’t miss anything.

If you get stuck, feel free to reach out.

I’m currently searching for better OpenPose collections —

if you know any good ones, I’d be happy to hear about them!

Good luck, and happy sprite making 🚀