NSFW DISCLAIMER- Discussions of 18+ content below. If you're not 18 I want you to run extremely far away from this article, now. Thank you.

MORAL DISCLAIMER: I shouldn't have to say this but please don't use this to make deepfakes of people without their consent, or worse things. Please be responsible and be a good person.

Hello everyone,

I've recently begun digging into the world of local generation, and I've spent the last few weeks banging my head against the wall with Claude, Copilot and Grok, trying to figure out how to set up a basic workflow in ComfyUI so I can make my own NSFW videos locally and not rely on an outside service. I finally got it after plenty of hours of still videos and body horror. Below, I go into detail on how I got a workflow going. The video model actually handles NSFW pretty well.

So I made this article to hopefully help you guys skip the weeks of braindead generations that left me wondering why I exist, and get you going quickly.

TL;DR- You need 8 GB+ of VRAM, ~30 GB of free disk space for models, and the patience to wait roughly 15-40 minutes per clip, depending on whether you generate 3 or 5 seconds. You can go longer, but the wait grows with the clip length.

Glossary/Terms to Know (skip this if you are already familiar with AI workflows):

Required Computer Specs- Don't even try this unless your graphics card has a minimum of 8 GB of VRAM. You can usually find out how much you have by looking up the specifications for your machine or graphics card model; on Windows, Task Manager -> Performance -> GPU also lists it as dedicated GPU memory. Anything under 8 GB won't work for this. Wan 2.2 is very heavy and uses a lot of memory.

VRAM- VRAM is the memory built into your graphics card, separate from your regular system memory (RAM). It determines how big a model and how many video frames your card can hold and process at once. This is where the majority of the work happens during the workflow, so most of the settings below are about keeping VRAM use down.

Pinokio- A free launcher that installs and runs AI apps on your own machine, so you don't have to rent server space or use another service. Here it's just the way to download ComfyUI, which is where the real meat happens.

ComfyUI- A graphical user interface designed for text-to-image, text-to-video and image-to-video diffusion workflows. It starts as a blank canvas, and each step in the process is a box called a "Node." This is where you'll be loading your images and models and generating your videos.

Node- The building block for tasks in ComfyUI. Each node connects to at least 1 other node, and together the nodes are a visual representation of the workflow as it moves between steps.
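For NVIDIA cards, the 8 GB check from the glossary above can also be scripted. This is a minimal Python sketch, not part of the workflow itself. It assumes the nvidia-smi tool that ships with the NVIDIA driver is on your PATH. The function names and the small tolerance are my own choices, since a card can report a little less than its advertised size.

```python
import subprocess

MIN_VRAM_MIB = 8 * 1024   # the 8 GB floor this guide recommends
TOLERANCE_MIB = 128       # assumption: allow cards that report slightly under 8192 MiB


def parse_vram_mib(nvidia_smi_output: str) -> list[int]:
    """Convert nvidia-smi's one-number-per-line output into MiB values, one per GPU."""
    return [int(value) for value in nvidia_smi_output.split()]


def query_vram_mib() -> list[int]:
    """Ask nvidia-smi for the total memory of each GPU (NVIDIA cards only)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_vram_mib(result.stdout)


def meets_minimum(vram_mib: int) -> bool:
    """True if a card has roughly 8 GB of VRAM or more."""
    return vram_mib + TOLERANCE_MIB >= MIN_VRAM_MIB
```

On a machine with an NVIDIA card, query_vram_mib() returns one number per GPU, and meets_minimum tells you whether that card clears the bar. AMD cards need a different tool.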

SEX

Sorry if that part was boring, I included the word sex in bold above to get your attention back. But the stuff above is crucial to understand, because otherwise you could import the workflow and have absolutely no idea what to do with it.

Here's the meat of what you need to do to get started. Once you've confirmed that you have at least 8 GB of VRAM, download Pinokio, which is free. Once Pinokio is installed, there should be a button that says "Discover." Click that, type in ComfyUI, and download it. ComfyUI has a lot of dependencies and will take a while to download. This will likely be the longest part of the process, other than downloading the models below.

Once ComfyUI is downloaded and opened, you'll be presented with a blank canvas. This is where the fun begins. For the workflow I used, you'll need to know where ComfyUI keeps its models, which models you need, and which folder each one goes in.

With a Pinokio install, your ComfyUI models folder is usually here:

C:/pinokio/api/comfy.git/app/models

Instead of comfy.git, yours might say ComfyUI, but either way the path should be pinokio -> api -> comfy (.git or UI, whatever it says) -> app -> models. This is where you'll store your models.

DOWNLOADS YOU NEED:

Pinokio download- Pinokio

Model you need- https://huggingface.co/Phr00t/WAN2.2-14B-Rapid-AllInOne/tree/main/v10

Within this folder, download:

wan2.2-i2v-rapid-aio-v10-nsfw.safetensors

That is your checkpoint, the main model file whose weights do the actual work of turning the image into video.

Clip vision- clip_vision_h.safetensors, found under split_files/clip_vision in the Comfy-Org/Wan_2.1_ComfyUI_repackaged repo on Hugging Face

Once you've downloaded these, save them in the following locations.

Checkpoint- C:/pinokio/api/comfy.git/app/models/checkpoints

Put the wan2.2-i2v-rapid-aio-v10-nsfw.safetensors file in that folder.

Clip vision- C:/pinokio/api/comfy.git/app/models/clip_vision

Put the clip_vision_h.safetensors file in that folder.

Video Upscaler (optional):

RealESRGAN_x2plus.pth, from the nateraw/real-esrgan repo on Hugging Face

Video upscaler- C:/pinokio/api/comfy.git/app/models/upscale_models

Save the RealESRGAN file in the upscale_models folder if you want to upscale your video from 512x512 (or whatever resolution you choose) after the frames have been generated.

That's it! Then, you just need the image you want to turn into video, and to adjust some settings.
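Before moving on, it can help to confirm the files landed in the right folders. Here is a small Python sketch of that check. The file names and subfolders come from the list above; the function name is just something I made up for illustration, and the default path assumes the Pinokio location mentioned earlier, so change it if yours differs.

```python
from pathlib import Path

# File locations relative to the models folder, as listed above.
REQUIRED_FILES = [
    "checkpoints/wan2.2-i2v-rapid-aio-v10-nsfw.safetensors",
    "clip_vision/clip_vision_h.safetensors",
]
OPTIONAL_FILES = [
    "upscale_models/RealESRGAN_x2plus.pth",
]


def missing_files(models_dir: str, include_optional: bool = False) -> list[str]:
    """Return the expected files that are not present under models_dir."""
    root = Path(models_dir)
    expected = REQUIRED_FILES + (OPTIONAL_FILES if include_optional else [])
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    # Default Pinokio location from this guide; change it if yours differs.
    models_dir = "C:/pinokio/api/comfy.git/app/models"
    for rel in missing_files(models_dir, include_optional=True):
        print("missing:", rel)
```

If nothing is printed, everything is where the workflow expects it.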

The JSON of the workflow is attached.

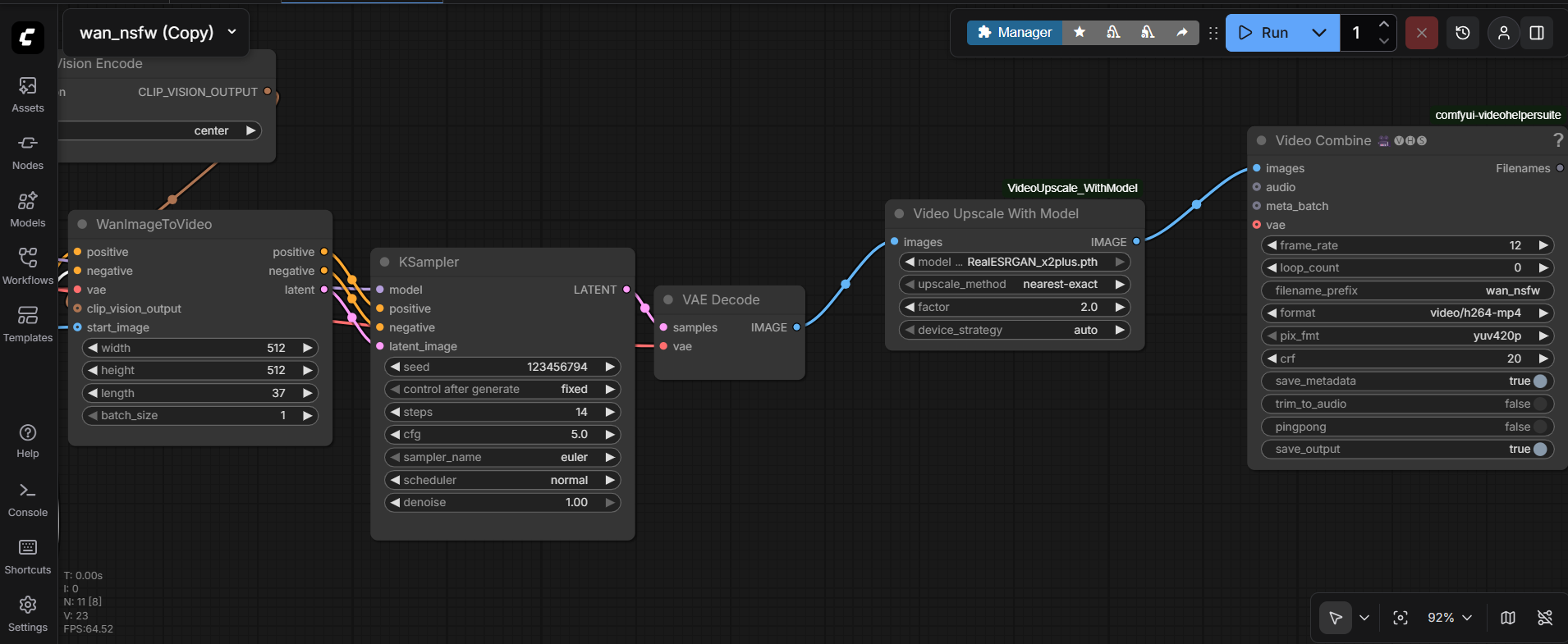

Once you have ComfyUI and all the requisite models installed, download the attached JSON and simply drag and drop it into ComfyUI; it should populate the workflow. Then make sure each node has the right model selected. Nodes will show up as red if the model is wrong or missing. Also make sure you have an image loaded in the "Load Image" node.

EDIT (I forgot this initially lol my bad): You will probably need to install ComfyUI-VideoHelperSuite. Go to Manager in ComfyUI (top-middle button) -> Custom Node Manager, type in the name above, install it, and restart ComfyUI. Then it should work.
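If something is still red and you can't tell which node pack is missing, one option is to list the node types the workflow file uses and compare them with what you have installed. This is a rough Python sketch, assuming the workflow JSON has a top-level "nodes" list whose entries each carry a "type" key (that matches the files I've seen saved from the canvas, but treat it as an assumption). The function names and the tiny example are made up for illustration.

```python
import json
from collections import Counter


def node_types(workflow: dict) -> Counter:
    """Count node types in a workflow saved from the ComfyUI canvas.

    Assumption: the file has a top-level "nodes" list whose items carry a "type" key.
    """
    return Counter(node.get("type", "unknown") for node in workflow.get("nodes", []))


def load_workflow(path: str) -> dict:
    """Read a workflow JSON file from disk."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


# Tiny hand-written stand-in for a real workflow file.
example = {"nodes": [{"id": 1, "type": "LoadImage"}, {"id": 2, "type": "KSampler"}]}
print(sorted(node_types(example)))
```

For the real file, pass the result of load_workflow("your_workflow.json") to node_types instead of the example.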

The settings I run:

Positive prompt:

I have these baseline words in my positive prompt to ensure things come out smoothly-

smooth and fluid motion, static camera, high quality, detailed lighting, consistent character identity, clean background, stable animation.

Add your specific motion request to the top of the prompt, above "smooth and fluid motion".

Negative prompt basics (can definitely add to this):

blurry, distorted face, deformed eyes, extra limbs, extra fingers, mutated hands, bad anatomy, bad proportions, unnatural body, warped features, low detail, low resolution, grainy, noisy, oversaturated, artifacts, glitch, motion blur, duplicate body parts, stretched limbs, disfigured, unrealistic skin, incorrect lighting, inconsistent character, identity drift, off-model face, wrong facial structure, asymmetrical face, bad hands, bad fingers, missing fingers, extra arms, extra legs, malformed limbs, twisted pose, unnatural pose, watermark, text, logo, signature

See above an example of the image prompt. (You can chat with The Dragon on my GGPT btw).

Next, you need to ensure your generation settings are light enough on memory to run on 8 GB of VRAM. I've found that setting the resolution to 512x512 runs well enough on my machine and produces decent enough results. You aren't gonna get industry-quality results, but for what it is, it's amazing. See below a picture of my settings.

Basics about the workflow and what it's doing:

WanImageToVideo node:

I'd recommend starting at a width of 512 and a height of 512. That's low enough to run on 8 GB of VRAM but high enough that it actually looks good, for the most part. The length in the workflow is set to 37, which is the number of frames to generate. How long that plays for is determined by frame_rate in the final Video Combine node, which is set to 12. So you're generating 37 frames that play back at 12 per second, which is 37 / 12, or just over 3 seconds.
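The frame math above is easy to get backwards, so here it is as a short Python sketch. The function names are my own. The second function assumes the length input only moves in steps of 4 starting from 1 (1, 5, 9, ...), which is consistent with the 37 used here, but double-check it against what the node lets you enter.

```python
def clip_seconds(length: int, frame_rate: float) -> float:
    """Playback duration of a clip with `length` frames at `frame_rate` fps."""
    return length / frame_rate


def frames_for(seconds: float, frame_rate: float) -> int:
    """Nearest frame count of the form 4n + 1 for the requested duration.

    Assumption: the length input moves in steps of 4 starting from 1,
    which is consistent with the 37 used in this workflow.
    """
    n = max(round((seconds * frame_rate - 1) / 4), 0)
    return 4 * n + 1


print(round(clip_seconds(37, 12), 2))  # 3.08
print(frames_for(3, 12))               # 37
print(frames_for(5, 12))               # 61
```

So for a 5 second clip at 12 frames per second, a length of 61 is the closest fit under that assumption.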

KSampler node:

Here is where you set your seed, steps, sampler and cfg. I would recommend leaving the sampler_name and cfg untouched. I use 14 steps because anything over 14 doesn't really produce better results and just wastes compute. More steps mean longer generation times, and 14 is enough to squeeze out the best quality possible. The seed is what you'll change if you want different results from the same prompt with the same image. If one seed has a woman winking and smiling, maybe the other seed will have her smiling and winking, in a different order. Just play with it and see what you get.

Video Upscale with Model-

You can delete this node if it gives you an error, or you can go to the ComfyUI Manager, which is in the top center of the screen, click "Custom Node Manager", and install ComfyUI-VideoUpscale_WithModel. You'll need to restart ComfyUI entirely once it's installed. I usually just shut down Pinokio and restart it. Once it's installed, ensure your upscaler is in the right folder listed above and make sure it's selected in the node. One concern I have with this node is that the upscaled video sometimes looks artificial and over-processed. The upscaler doubles the resolution, so 512x512 becomes 1024x1024. If you're okay with 512x512, I'd recommend just keeping it there to avoid over-cooking it.

Video Combine Node-

Here is where you'll adjust the frame_rate if you want, but generally 12 frames per second is advised. This is basically your output node: it controls the format of your final video and where it is saved. When your video is generated, it will appear in the "Assets" tab on the left side of the screen. By default the node shows a preview of the last video you generated; you can right-click on the node and click "Hide Preview" to save VRAM as well.

Troubleshooting:

If it's not working/gives some sort of OOM or CUDA memory error-

Locate the run_nvidia_gpu.bat file if you have an NVIDIA GPU. I'm not sure about AMD, but I would imagine its BAT file is named similarly. The file should be somewhere in your ComfyUI folder; just use the search functionality to locate it.

Once you find the file, the code within should look something like this:

.\python_embeded\python.exe -s ComfyUI\main.py --windows-standalone-build

If you're getting memory errors, add these two flags to the end of that line, after --windows-standalone-build: --lowvram and --disable-smart-memory. Then save the file and start ComfyUI again.
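If you'd rather not hand-edit the file, the same change can be sketched in Python as a plain text edit. The function name is made up, and the sample text below is only an illustration of what the launch line looks like; your file may have extra lines. It only touches the line that mentions main.py and skips flags that are already there.

```python
LOW_MEMORY_FLAGS = ["--lowvram", "--disable-smart-memory"]


def add_low_memory_flags(bat_text: str) -> str:
    """Append the low-memory flags to the line that launches main.py.

    Flags already present on that line are left alone, so running this twice is safe.
    """
    lines = []
    for line in bat_text.splitlines():
        if "main.py" in line:
            missing = [flag for flag in LOW_MEMORY_FLAGS if flag not in line.split()]
            if missing:
                line = line.rstrip() + " " + " ".join(missing)
        lines.append(line)
    return "\n".join(lines)


# Sample contents for illustration; your file may have extra lines.
sample = r".\python_embeded\python.exe -s ComfyUI\main.py --windows-standalone-build"
print(add_low_memory_flags(sample))
```

The printed line is the original launch command with --lowvram --disable-smart-memory added at the end. To apply it for real, read the BAT file's text, pass it through the function, and write the result back (keep a backup copy first).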

Some things you need to know-

This is not going to be a fast process by any means if you're only working with 8 GB of VRAM. Expect 15-20 minutes of generation time for a 3 second clip, and close to 30-40 minutes for a 5 second clip. But the motion is genuinely awesome and it really understands NSFW well. A few hours of generation can produce some cool clips that are entirely yours, cut off from outside influences.

I know that was an insane amount of information, but I wish someone had made something like this when I had started, so hopefully this helps. My DMs are always open if anyone has questions, or post questions here and I can try to help. Good luck generating!

Also, follow me if you liked this guide. @Yiggity69 here, on Reddit, Twitter and on Girlfriend GPT.

Thanks for reading!