TL;DR (Quick Summary)

This guide explains how to create high-quality LoRAs for fictional, fully synthetic people using Z-Image and Ostris AI Toolkit, without relying on real human photos.

For examples, see the LoRAs I've published.

The core idea is simple:

Generate two ultra-clean reference images (face + body) in Z-Image, or with any other method you prefer, as long as the images are anatomically perfect and of good quality

Use ChatGPT to generate a consistent image set based on those references

Carefully edit, crop, and caption the dataset

Train the LoRA on Ostris (Runpod) with a stable, repeatable setup, or with any other method you prefer (locally or not), as long as the machine has the necessary capabilities.

If your references are good and consistent, the resulting LoRA will be flexible, realistic, and reliable.

Scope and Intent

This article focuses on LoRAs for people who do not exist, trained entirely from AI-generated images.

The workflow is optimized for:

Z-Image Turbo

Ostris AI Toolkit

Training on Runpod (cloud GPU) - runpod.io

If you already have your own training pipeline, you can skip the training section and focus on dataset creation and captioning, which are the most critical parts. If you already know how to create and caption a dataset, the first part, which covers generating the images themselves, may still be worth reading.

What You Need

ComfyUI (or another frontend) running Z-Image

A workflow that includes face detailing and can generate realistic images

ChatGPT (paid plan) for controlled image generation

An image editor of your choice

Ostris AI Toolkit (Recommended: run it on Runpod or another cloud service with sufficient VRAM and RAM to avoid compromises in the training)

Step 1 — Generate the Two Core Reference Images

This is the most important step in the entire process.

If your reference images are low quality, inconsistent, or poorly lit, everything downstream will suffer.

Reference Image 1: Face (Close-Up), at least 1024x1024 pixels

Frontal close-up

Neutral expression (no smile, no visible emotion)

Clean, neutral lighting

No noise, no pixelation

To avoid Z-Image producing one of its “default faces”, I strongly recommend:

Using ControlNet (any method you prefer) to guide facial structure

Writing a highly detailed face prompt, including:

sex

age

ethnicity

face shape

nose, eyes, lips

hair type, length, and color

SOFT ARTIFICIAL LIGHTING

NEUTRAL BACKGROUND
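A minimal sketch of how such a prompt could be assembled, in Python. The `FaceSpec` class, the `build_face_prompt` function, and every attribute value below are illustrative assumptions, not part of Z-Image or ComfyUI. The point is only that each attribute in the list above gets its own explicit phrase.

```python
from dataclasses import dataclass


@dataclass
class FaceSpec:
    """Attributes the guide recommends spelling out in the face prompt."""
    sex: str
    age: str
    ethnicity: str
    face_shape: str
    nose: str
    eyes: str
    lips: str
    hair: str


def build_face_prompt(spec: FaceSpec) -> str:
    """Join the attributes into one comma-separated prompt string."""
    parts = [
        "frontal close-up portrait",
        "neutral expression",
        f"{spec.age} {spec.ethnicity} {spec.sex}",
        f"{spec.face_shape} face",
        spec.nose,
        spec.eyes,
        spec.lips,
        spec.hair,
        "soft artificial lighting",
        "neutral background",
    ]
    # Skip empty entries so a missing attribute does not leave ", ," behind.
    return ", ".join(p.strip() for p in parts if p.strip())


# Illustrative values only; replace them with your own character design.
example = FaceSpec(
    sex="woman",
    age="30-year-old",
    ethnicity="Mediterranean",
    face_shape="oval",
    nose="straight narrow nose",
    eyes="almond-shaped hazel eyes",
    lips="medium full lips",
    hair="wavy shoulder-length dark brown hair",
)
print(build_face_prompt(example))
```

Keeping the attributes in a structure like this makes it easy to reuse the exact same description later, for example when writing the body reference prompt.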

Alternative method (very effective):

Use an existing photo only for lighting and framing, via IMG2IMG with very high denoise (90%+, change if needed).

Z-Image will preserve lighting and composition, but completely change the person to follow your prompt.

Reference Image 2: Body (No Face), at least 1024x1536 pixels

Full-body image

The hair can be tied back, or the head can be cropped out entirely; ChatGPT will understand that this is only the body reference

Ideally generated in a bikini / swimwear (or tight athletic wear) so that ChatGPT can get an idea of the body. DO NOT use nude images: ChatGPT will complain.

Front view, arms relaxed at the sides

Neutral lighting, excellent quality

Again, you can use IMG2IMG with high denoise (85%+, change if needed) to transfer general proportions without copying the original body.

Step 2 — Generate the Remaining Images with ChatGPT

Once you have your face and body references, move to ChatGPT.

Explain clearly what you want to do. A prompt like this works well:

“I want to generate a dataset of ten images to later train a LoRA for Z-Image using Ostris. I already have a synthetic face and a body reference (100% AI-generated, it's not a real person). I will ask you to generate new images of this same person with different framing and poses. I’ll describe each shot, and you generate the image. OK?”

ChatGPT will confirm and ask for the references (or at least it did with me several times).

Upload both reference images and request the first image immediately.

Generating the Dataset

Ask for images one by one, specifying:

framing

pose

clothing

background

lighting

There is no universal rule for poses or quantity.

I personally use 8–14 images, usually around 10, with excellent results.

What matters most is consistency and variety.

Example prompts I used:

“First, I want a new close-up, smiling, wearing a simple green t-shirt, seated on a sofa at home. Neutral indoor light.”

“Now a half-body shot, bikini, front view, at the beach. Neutral background, sea and sky only.”

“Now a full-body shot in tight gym clothing, standing in a gym. No mirrors, minimal background distractions.”

“Now a side-profile close-up, shoulders up, wearing a strapless dress. Neutral background.”

Sometimes I explicitly explain why I want certain outfits (e.g., body definition).

This helps avoid content moderation issues. Also, if an image comes out different from what you asked for, explain the problem to ChatGPT and ask it to generate a new one.

If ChatGPT starts drifting from the original images (different face or body), simply re-upload the same references in the same chat and say it’s losing consistency, asking it to consider these new images. It reliably snaps back.

Step 3 — Prepare the Dataset

ChatGPT images often have:

slightly low contrast

imperfect white balance

Open your image editor and do quick corrections only:

auto white balance

basic contrast / brightness

minor RGB tweaks

This takes under 30 seconds per image. DO NOT overdo it or it can cause problems in the training.

Cropping and Resolution

Prepare images in:

1024×1024

512×1024

You can freely crop tighter than the original framing. Sometimes I turn an “upper-body” into a close-up during cropping, for example.

ChatGPT's image outputs are high enough in quality that, even if cropped to 900 pixels, for example, they can be mildly resized back to 1024 without visible degradation. Obviously, do not overdo that either.
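As a rough sketch of the crop-then-resize step, the Python below computes a centered crop box for a target aspect ratio and refuses to upscale too aggressively. The helper names and the `max_upscale=1.15` limit are my own assumptions (the 900 to 1024 example above corresponds to a factor of about 1.14), and a centered crop is a simplification: in practice you will often choose the crop by hand in your image editor. The last function needs the third-party Pillow library.

```python
def center_crop_box(width, height, target_w, target_h):
    """Return (left, top, right, bottom) of the largest centered crop
    that has the same aspect ratio as target_w x target_h."""
    target_ratio = target_w / target_h
    if width / height > target_ratio:
        # Source is too wide: keep the full height, trim the sides.
        crop_h = height
        crop_w = round(height * target_ratio)
    else:
        # Source is too tall (or already matches): keep the full width.
        crop_w = width
        crop_h = round(width / target_ratio)
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return (left, top, left + crop_w, top + crop_h)


def upscale_factor(box, target_w):
    """How much the cropped region must be enlarged to reach target_w."""
    left, _, right, _ = box
    return target_w / (right - left)


def crop_and_resize(src_path, dst_path, target_w, target_h, max_upscale=1.15):
    """Crop to the target aspect ratio and resize, using Pillow."""
    from PIL import Image  # third-party: pip install Pillow

    with Image.open(src_path) as img:
        box = center_crop_box(img.width, img.height, target_w, target_h)
        factor = upscale_factor(box, target_w)
        if factor > max_upscale:
            # Assumed safety limit, not a rule from the toolkit.
            raise ValueError(f"{src_path}: upscale factor {factor:.2f} is too high")
        resized = img.crop(box).resize((target_w, target_h), Image.Resampling.LANCZOS)
        resized.save(dst_path)
```

For example, `center_crop_box(1024, 1536, 1024, 1024)` returns `(0, 256, 1024, 1280)`, the middle square of a portrait image.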

At the end, aim for:

8–14 images

at least 4–5 close-ups, three to four upper-body/half-body

multiple angles, facial expressions (smiling, serious), hair tied up and loose.

at least:

one side view

two full-body shots (front and back, both in swimwear or tight clothing)

Step 4 — File Naming and Captions (Tags)

Name your files like:

KEYWORD1.png

KEYWORD2.png

KEYWORD3.png

Where KEYWORD is your LoRA trigger word.

For each image, create a .txt file with the same name.
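If you prefer to script the renaming, a small helper along these lines could do it. This is only a sketch: `rename_dataset` is a hypothetical function, it assumes all images are `.png` files in a single folder, and it should be run before you write captions, since it does not carry over any existing `.txt` files from the old names.

```python
from pathlib import Path


def rename_dataset(folder: str, keyword: str) -> list[Path]:
    """Rename images to KEYWORD1.png, KEYWORD2.png, ... and create an
    empty .txt caption file next to each one."""
    root = Path(folder)
    images = sorted(p for p in root.iterdir() if p.suffix.lower() == ".png")

    # Pass 1: move everything to temporary names so that a file already
    # called KEYWORD2.png cannot be overwritten by another image.
    temp_paths = []
    for index, image in enumerate(images, start=1):
        temp = root / f"__tmp_{index}.png"
        image.rename(temp)
        temp_paths.append(temp)

    # Pass 2: assign the final names and create the caption files.
    final_paths = []
    for index, temp in enumerate(temp_paths, start=1):
        final = root / f"{keyword}{index}.png"
        temp.rename(final)
        final.with_suffix(".txt").touch()  # leaves an existing caption untouched
        final_paths.append(final)
    return final_paths


if __name__ == "__main__":
    # Hypothetical folder name; point this at your own dataset folder.
    for path in rename_dataset("dataset", "JOHNNOBODY"):
        print(path.name)
```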

Captioning Scheme (What I Use)

There is a lot of controversy around tagging. MY way of tagging is the following:

For almost all images I use:

<KEYWORD>, <framing>, <indoor/outdoor>, soft <artificial/natural> light, (neutral background)

Special cases:

use side view for profile images

use back view for the back view

Framing Options

close-up

upper-body

half-body

full-body

side view

back view

Examples

JOHNNOBODY, close-up portrait, indoor, soft artificial light, neutral background

JOHNNOBODY, upper-body, outdoor, soft natural light

JOHNNOBODY, full-body, indoor, soft artificial light, neutral background

JOHNNOBODY, back view, indoor, soft artificial light

JOHNNOBODY, side view, indoor, soft artificial light
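The scheme above is regular enough to generate with a few lines of Python. This is a sketch of my captioning pattern only; `build_caption` and its parameter names are made up for illustration and are not something the Ostris AI Toolkit requires.

```python
FRAMINGS = {"close-up", "upper-body", "half-body", "full-body", "side view", "back view"}
LOCATIONS = {"indoor", "outdoor"}
LIGHTS = {"artificial", "natural"}


def build_caption(keyword, framing, location, light, neutral_background=False):
    """Build '<KEYWORD>, <framing>, <indoor/outdoor>, soft <light> light'
    with an optional ', neutral background' at the end."""
    if framing not in FRAMINGS:
        raise ValueError(f"unknown framing: {framing!r}")
    if location not in LOCATIONS:
        raise ValueError(f"unknown location: {location!r}")
    if light not in LIGHTS:
        raise ValueError(f"unknown light: {light!r}")
    parts = [keyword, framing, location, f"soft {light} light"]
    if neutral_background:
        parts.append("neutral background")
    return ", ".join(parts)


print(build_caption("JOHNNOBODY", "upper-body", "outdoor", "natural"))
# JOHNNOBODY, upper-body, outdoor, soft natural light
print(build_caption("JOHNNOBODY", "full-body", "indoor", "artificial", neutral_background=True))
# JOHNNOBODY, full-body, indoor, soft artificial light, neutral background
```

Restricting the framing to a fixed set catches typos such as "closeup" before they end up in the training data.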

If you already have your own captioning system, use it. This one is shared because beginners often get stuck here (I did at first).

Now, CHECK YOUR DATASET! Are all the images in the correct resolutions? Do you have the necessary variety? Do all the faces (and bodies) look consistent? Are the tags correct? This is the last stop before...
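Part of this checklist can be automated. The sketch below uses only the Python standard library: it reads each PNG's width and height from its header, then checks the image count, the resolutions, the caption files, and that at least one side view is present. The function names are hypothetical, and I am assuming the two resolutions above are written as width×height. Face and body consistency still has to be judged by eye.

```python
import struct
from pathlib import Path

# Assumption: the guide's resolutions are (width, height) pairs.
ALLOWED_SIZES = {(1024, 1024), (512, 1024)}
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(path):
    """Read (width, height) from the IHDR chunk at the start of a PNG file."""
    with open(path, "rb") as f:
        header = f.read(24)
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a valid PNG")
    return struct.unpack(">II", header[16:24])


def check_dataset(folder, keyword):
    """Return a list of human-readable problems (empty list means OK)."""
    problems = []
    images = sorted(Path(folder).glob("*.png"))
    if not 8 <= len(images) <= 14:
        problems.append(f"expected 8-14 images, found {len(images)}")

    captions = []
    for image in images:
        size = png_size(image)
        if size not in ALLOWED_SIZES:
            problems.append(f"{image.name}: unexpected resolution {size[0]}x{size[1]}")
        caption_file = image.with_suffix(".txt")
        if not caption_file.exists():
            problems.append(f"{image.name}: missing caption file")
            continue
        caption = caption_file.read_text(encoding="utf-8").strip()
        if not caption.startswith(keyword + ","):
            problems.append(f"{caption_file.name}: caption does not start with {keyword}")
        captions.append(caption)

    if not any("side view" in c for c in captions):
        problems.append("no caption mentions a side view")
    return problems


if __name__ == "__main__":
    # Hypothetical folder name; point this at your own dataset folder.
    for problem in check_dataset("dataset", "JOHNNOBODY"):
        print("PROBLEM:", problem)
```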

Step 5 — Training on Ostris (Runpod)

If you already know how to train, you can skip this section.

I train on Runpod to avoid local VRAM and RAM limitations.

Cost Note

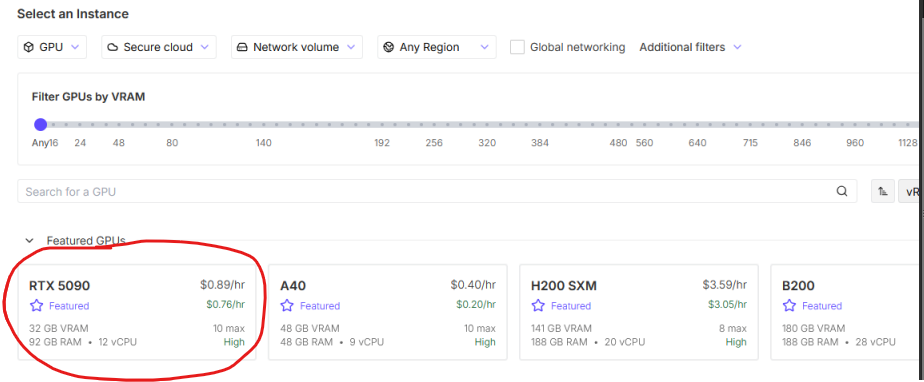

Using an RTX 5090 (prices as of the beginning of 2026):

~3000 steps

≈ $1 per LoRA

Important safety tip:

Load credits

Remove your credit card (you can add it again when you need more credits)

This caps potential losses if you forget a pod running or if your account somehow gets compromised (even with auto top-up turned off, I sleep better this way).

Creating the Pod

The default options (GPU → Secure Cloud → Network Volume → Any Region) are OK

Select RTX 5090

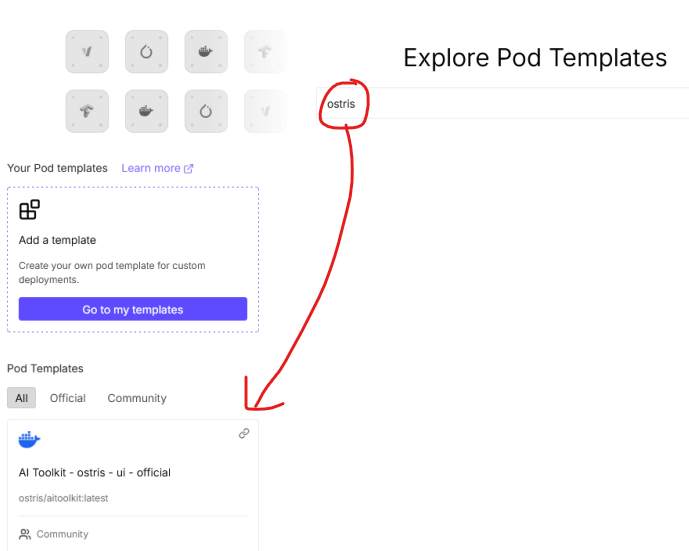

Scroll down and click on CHANGE TEMPLATE

Select the template: AI Toolkit – Ostris – UI – Official

Click on the EDIT button to the side of the "CHANGE TEMPLATE" button to change the default password (insert a simple but non-obvious password and click SET OVERRIDES)

Click the big blue button "DEPLOY ON DEMAND" at the bottom

A new browser window will be opened. Wait until the “HTTP SERVICE” blue link appears, click it and log in.

Dataset Upload

Go to DATASETS (in the menu at the right side)

Create a new dataset

Upload both the images and the .txt files

If the UI hangs for more than a minute after you upload the images, reload the page; it's safe.

Now you should see your dataset images with their tags just below them. Check everything, because now the training will begin.

Training Job Settings

Leave everything default except:

Training Name

This will be the name of your LoRA file. Example: KEYWORD_zimg_v1

Trigger Word

Exactly your keyword. Nothing else. In our example, JOHNNOBODY

Model Architecture

Select Z-Image Turbo w/ Trainer Adapter. Once selected, a new drop box will appear - you don't need to change anything.

LOW VRAM - DISABLE THIS OPTION (you’re on a 5090, there's plenty of VRAM)

Max Step Saves to Keep - Set to 8 (this is the number of LoRA versions the training will keep at the end. With 3000 steps, it will keep from 1000 to 3000, eight versions)

Samples (Very Important to follow the training)

At the bottom of the options you have ten fields to insert prompts for the sample images. Keep only 3 samples, with prompts:

KEYWORD, close-up

KEYWORD, side view

KEYWORD, full body

This is enough to monitor training and avoid losing time generating lots of samples.

At the top of the screen, click CREATE JOB and, then, click on the "PLAY" icon that will appear to start the training.

Monitoring and Downloading

The training log is on the left side; on the right side is the list of saved LoRAs, each with its own "download" button (this list, obviously, starts empty).

Samples appear every 250 steps. You can see them in the "SAMPLES" tab. The first row is generated BEFORE the training begins (these are the control images).

LoRA files are saved every 250 steps (so a new LoRA version is created every 250 steps), but you don't need all of them.

I usually:

download from 1500 steps onward

often settle on the 3000-step version, but keep all of them saved to be sure; test them and see which works best.

Sometimes fewer steps are better — especially if the dataset lacks variation.
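To make the arithmetic concrete, here is a tiny Python sketch listing the checkpoints worth testing, given a save every 250 steps, 3000 total steps, and my habit of starting at 1500. The function name is made up for illustration, and whether every one of these files is still available at the end depends on your "Max Step Saves to Keep" setting.

```python
def checkpoints_to_test(total_steps=3000, save_every=250, first_step=1500):
    """List the step numbers of the saved LoRA versions from first_step onward."""
    return [s for s in range(save_every, total_steps + 1, save_every) if s >= first_step]


print(checkpoints_to_test())
# [1500, 1750, 2000, 2250, 2500, 2750, 3000]
```

That is seven versions to compare with the same prompts and seeds.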

Cleanup (Don’t Forget This)

When finished, make sure you have downloaded ALL the LoRA versions you want. Then go back to the main RUNPOD tab and, in the PODS tab:

Click on the three dots to the right side of the price:

Stop the pod

Terminate the pod

This will make your hourly cost go back to zero. DON'T FORGET IT, otherwise your credits will be spent for nothing! And don't fall asleep in the middle of a training just to wake up the next morning with your credits gone (yes, it happened to me).

Finally, to be sure, select Billing at the menu on the right side and check if Current Spend Rate = 0.000

Only then close the browser, so your credits will be there the next time you need to use them.

Final Notes

If your LoRA:

ignores prompts

locks into one expression

fails to change hair or pose

The problem is almost always the dataset, not the training.

Good references + consistency = good LoRA.

If you have feedback, improvements, or alternative workflows, feel free to share.

I hope this helps.