Introduction:

I got into ComfyUI about 6 months ago. I'm an engineer and a carpenter, so I get a lot of enjoyment out of building really cool stuff. Hopefully you can appreciate my efforts.

In this guide, I'll cover the following:

A brief overview of each section and its capabilities.

Setup & Installation of custom nodes, including errors or issues.

Initial setup and run, including a breakdown.

Controlnet and I2I

Hi Rez fix, Upscalers, Detailers, & Post Production

Troubleshooting and common issues.

Advanced Options.

1. Overview

I've used my ZIT all-in-one workflow as the example here. The other workflows are all similar; I'll break down the differences in the detailed sections.

Prompt assist and controlnet module:

Main user input area:

Controlnet:

Draft Area (applies only to certain workflows)

Hi Rez Fix/ Ultimate Upscaler:

Detailer Group (Note: I'll address the NSFW Anatomy correction version of this in another article)

Seed VR2 Upscaler:

Post Production Suite:

Save Group:

2. Setup & Installation:

Notes:

Start with this section when you first load the workflow. The notes are in both English and Chinese. They are broken out as follows:

Basic instructions

Directions for each section

Useful nodes and downloads

Folder location

This area should answer the majority of your questions on how to use the workflow. You will also find detailed instructions in each module.

Custom Nodes:

This is a very node heavy workflow. The initial "You are missing the following nodes" message can be intimidating. This is because I basically have 8 workflows in one, so instead of several small "you are missing" messages, you get one very large one. Take the time to download and install them. You'll need most of them for other people's workflows as well.

Custom Node Manager:

Almost all of the missing nodes can be found here. Just click on the "Install missing custom nodes" button in the ComfyUI manager.

Missing Nodes:

I have tried to go through and remove all the specialty nodes that are not in the current manager.

Go to the "Notes" section. Here you will find a list of the repositories for all of the nodes:

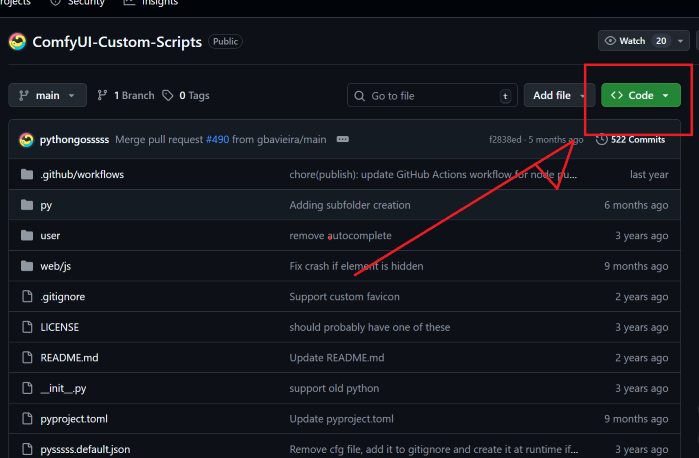

Click this button to download it with Git.

Once downloaded, go to the "ComfyUI_windows_portable\ComfyUI\custom_nodes\(Name of custom node folder)" folder and open it.

Open up the Command Prompt (Windows 11: right click and "Open in Terminal") and type 'python.exe -m pip install -r requirements.txt'

The requirements should all download and install:

Note: The desktop version (which you should abandon in favor of portable because of the ComfyUI upgrades) will sometimes give you a "NumPy" error. This means you are running a newer version of Python than ComfyUI supports. You will get this error globally.

Option 1: Create a venv (virtual environment) based on the version of Python ComfyUI is running.

Option 2 (overwhelmingly the best option): Switch to Windows Portable.

Some node folders do not require this. Look for the "requirements.txt" file.
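If you have a lot of node folders to check, the "look for requirements.txt" step can be sketched in Python. This is only a rough helper, not part of the workflow: the function names are made up for illustration, and the folder path in the example is a placeholder you would replace with your own custom_nodes location. It only prints the pip commands; it does not run them.

```python
import sys
from pathlib import Path


def nodes_with_requirements(custom_nodes_dir):
    """Return custom node folders that contain a requirements.txt file."""
    root = Path(custom_nodes_dir)
    return sorted(
        folder for folder in root.iterdir()
        if folder.is_dir() and (folder / "requirements.txt").is_file()
    )


def pip_command(node_folder, python_exe=sys.executable):
    """Build the same pip command you would type in the terminal."""
    requirements = Path(node_folder) / "requirements.txt"
    return [str(python_exe), "-m", "pip", "install", "-r", str(requirements)]


if __name__ == "__main__":
    # Placeholder location (assumption); point this at your own custom_nodes folder.
    custom_nodes = Path(r"ComfyUI_windows_portable\ComfyUI\custom_nodes")
    if custom_nodes.is_dir():
        for folder in nodes_with_requirements(custom_nodes):
            print(" ".join(pip_command(folder)))
```

Folders that do not show up in the output have no requirements.txt and need no extra install step.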

Detailers:

YoloV8 models: What's the difference? Why does it say some are dangerous?

The "n" in YoloV8N stands for "nano". It's a smaller, lighter version of the "s" (small) model, so it runs faster. You'll find the "n" model particularly helpful in the face detailer. I recommend trying the "s" models first.

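The ".pt" extension marks a PyTorch checkpoint saved with Python's pickle format (often labelled "PickleTensor"). A pickle file can be modified to run malicious code when it is loaded. Hugging Face scans and labels these. Do not use any from another site!!!!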
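To see why pickle-based files get flagged, here is a minimal sketch that lists what a pickle asks Python to import, without actually loading it. It uses only the standard library and is not a replacement for the Hugging Face scan. It assumes a zip-format .pt file with a .pkl member inside, and it only understands the older GLOBAL opcode (pickle protocols 0 to 3), so treat an empty result as "unknown", not "safe". The function names are my own for illustration.

```python
import pickletools
import zipfile


def pickle_imports(pickle_bytes):
    """List the 'module.name' references a pickle asks Python to import.

    Only the GLOBAL opcode is inspected (pickle protocols 0-3). Newer
    protocols use STACK_GLOBAL, which this sketch does not resolve.
    """
    found = set()
    for opcode, arg, _position in pickletools.genops(pickle_bytes):
        if opcode.name == "GLOBAL":
            module, name = arg.split(" ", 1)
            found.add(f"{module}.{name}")
    return sorted(found)


def checkpoint_imports(pt_file):
    """Read the pickle stored inside a zip-format .pt file without loading it."""
    imports = set()
    with zipfile.ZipFile(pt_file) as archive:
        for member in archive.namelist():
            if member.endswith(".pkl"):
                imports.update(pickle_imports(archive.read(member)))
    return sorted(imports)
```

A model file normally references things like torch helpers and collections. A reference to something like os.system would be a red flag.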

3. Initial Setup and Run:

🛑🚫✋Do not manually bypass anything! There are switches for everything.

In the center of every workflow (in whatever color I was feeling that day) you'll find the Master Bypasser. I'll go through each grouping. Note that they may be slightly different, depending on the workflow and modules:

Initial usage: I start out with the bypasser looking like this:

If you are using Prompt assist, then the Image (or I2I) and Prompt assist switches need to be on. Otherwise, leave them off.

💾 Nothing saves unless it is turned on!

I almost always leave the "Save Draft" switch on. This way if I mess up, I can just reload the workflow from that image.

Models: Each platform has its own unique section, but generally they are all the same. This is where you make your initial selections of what you want to bake with.

Prompt (Illustrious shown for reference. Sorry Flux and ZIT. No negative prompt, no style selector 😢)

Enter your prompts here.

⚠️ If you are using Prompt assist, leave this blank or enter only your changes.

Style selector: You can use what you want. Hover over the image to see what it adds and subtracts.

Qwen Prompt enhance. Note: if this node does not work for you, you need to update ComfyUI (11-2-2026)

The 2b instruct model works best.

The quant (in yellow): Determines the speed of the model and will also shorten your prompt. Even with high VRAM, just leave it at 4bit. You gain nothing by going higher.

The prompt instructions (in red): Determines how the LLM operates. I have options in the notes if you want to change it. It operates differently for each model.

Note: See the troubleshooting guide if you are having problems installing this.

Lora Loader:

This loader does NOT need trigger words (i.e. JEDDTTL2). It does it automatically.

You can have as many as you want loaded. Just turn them on and off. It saves a lot of time.

Weight is distributed evenly across the model and clip

Note: In some special cases (such as lightning Loras and Control Loras), you want them to apply to the model only, not the clip. If that's the case (such as my WAN models), you'll see a separate Lora Loader for those.

ZIT: ZImage hates a lot of added crap. When experimenting, use one Lora at a time, then add the other. Don't set them to 1.0, and don't go below 0.4.

⚠️ Loras are noise. The more weight you put on a model, the dirtier it gets. If you are trying to get detail, use a workflow that has detailers or Sigma schedulers in it.

I have several options for this.

Draft Mode (only in certain workflows)

⚠️ THIS DOES NOT WORK UNLESS YOU HAVE THE SEED FIXED!!!!!!!! It will just stop here and you cannot get past it!

At the top of the draft window (or in the Sampler area) is this set of controls. The play sound will "beep" when the image is baked to this point and the run will stop here until you hit the Stop/Pause button.

The draft image gets saved.

Make any corrections before going forward. This includes adding (or turning off) any detailers/ hi rez fix/ Seed VR2, etc.

This should cover everything. You will find additional information in the "Notes" section as well as in each area.

4. Controlnet:

Basic Controls:

In the main bypass relay area, the top area of switches controls this (they differ by model and version):

Load Image:

This image controls the Florence prompt assist as well as the Controlnet.

💡The (down)load Florence model should have a dropdown menu if it is blank. Select the "Large Prompt Gen" option. It should automatically download it for you.

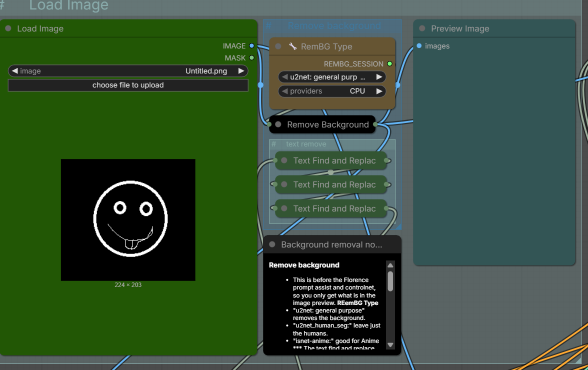

Remove background:

The base model seems to do well, but there are breakdowns in the notes in that area, especially regarding Anime and humans.

Text remove: This removes the phrases "background", "black background", and "dark background".

⚠️ If you turn off Florence and leave this on, you will get an error.

Florence Prompt:

This area generates a prompt (or tags, depending on the model and how I have it set) from the image.

Controlnet:

You have the option to create a mask or load your own. Instructions are in the notes in that area.

5. Hi Rez Fix, Detailers, Seed VR2, Post Processing:

Hi Rez Fix:

There are several different versions of this, as each model works slightly differently:

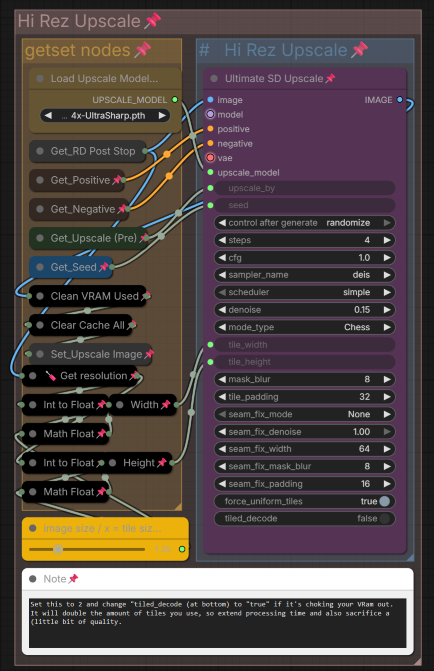

Upscale Model:

Which upscaler you use matters.

I prefer Anime sharp for Anime

Remacri or ESRGAN for realistic people

Nickelback for a good low-VRAM all-rounder

4x Ultrasharp for cyber/ architectural/ non human.

NMKD if I want to increase the size of the image.

Image size slider:

This determines your tiling sizes (how big or small the pieces are when it breaks the image up to work on it).

1 is none (Heavy VRAM)

1.5 is still okay (8gb)

2.0 for 6gb or smaller machines (still kinda okay)

Upscaling factor (Hi Rez Fix):

Under every Aspect Ratio node in the main area should be a slider that says either "prescale" or "Hi rez fix". This determines how much upscaling happens in the Hi Rez Fix module. I've found that moving it up too high causes issues down the line. 1-1.5 seems to be best.

Let SEED VR2 do most of the heavy upscaling work.
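To make the split between the two upscalers concrete, here is a small arithmetic sketch. The numbers are examples only: the 2.0 SeedVR2 factor is my assumption for illustration, and the real nodes may round sizes differently (for example to a multiple of 8).

```python
def final_resolution(width, height, hi_rez_factor=1.5, seedvr2_factor=2.0):
    """Return (size after Hi Rez Fix, size after SeedVR2) for a base image size."""
    after_hi_rez = (round(width * hi_rez_factor), round(height * hi_rez_factor))
    after_seedvr2 = (
        round(after_hi_rez[0] * seedvr2_factor),
        round(after_hi_rez[1] * seedvr2_factor),
    )
    return after_hi_rez, after_seedvr2


if __name__ == "__main__":
    hi_rez, final = final_resolution(1024, 1024, hi_rez_factor=1.5, seedvr2_factor=2.0)
    print("After Hi Rez Fix:", hi_rez)
    print("After SeedVR2:", final)
```

With a 1024x1024 base, a 1.5 Hi Rez Fix gives 1536x1536, and a 2x SeedVR2 pass on top of that gives 3072x3072. Keeping the first factor small means the sampler only has to work on the smaller image.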

Detailers: (SFW Version shown)

ZIT & Flux2 workflows:

As the base model does not work with the detailers, you will have some version of the models here:

Checkpoints: Make sure to adjust the checkpoint to match the style you are using

For example, if you are doing anime, it will look weird if she has a realistic face, and vice versa.

Detailer (hand, eyes, face):

The Ultralytics detector determines what is being captured.

Denoise slider: This is the amount of noise added to each image. The more noise, the more it changes the actual image.

I typically set it at 40-50%

Prompt (eyes only): This is really good for adding detail to eyes (e.g. piercing green eyes, looking at viewer, half closed). Leave blank in normal circumstances.

Expression Detailer:

There are two options:

Use the image and it will match it (turn the switch on).

Adjust the settings.

⚠️ Will give you an error if there is no image (when not bypassed).

⛔✋🛑 This is for human faces only! It will cause an OOM error (right after the eye detailer and before it even starts) if you push something else through it. Make sure to bypass it.

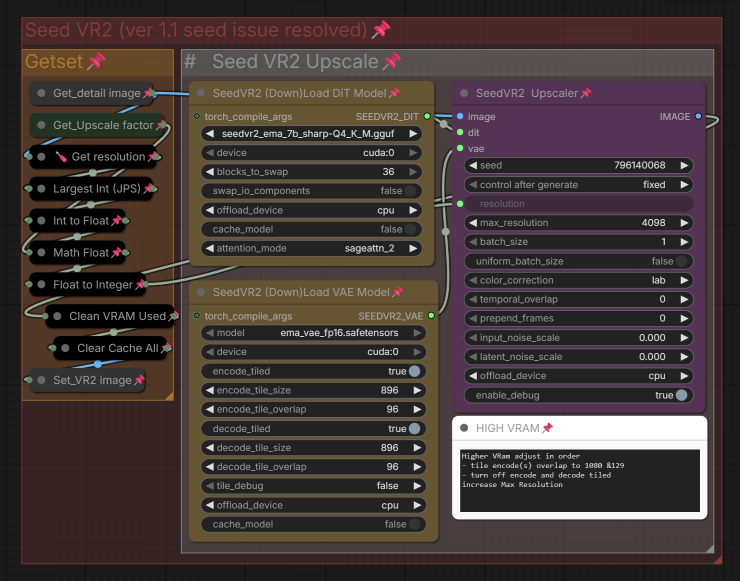

SEED VR2 (Upscaler):

The first time this runs, it will download the models, so it takes a few minutes:

The Upscale factor is controlled in the Aspect Ratio node in the main area:

VAE settings: Encode and decode tiles determine the size of the tile it works with. The smaller the number, the lower the VRAM it requires, but the more overlap and integrity it loses.

See instructions in "Notes"

High VRAM users: Turn off Tile encode and Decode.

Post Processing (my favorite):

This takes an image from okay to Epic. I'll touch on the high points, but read the notes in the lower right corner for more detail.

Smart Effects (Denoise):

This node is either in the upper left-hand corner or the last node (later versions will have it in the upper left-hand corner). It removes excess noise from an image.

Color Match to Image:

This will match to whatever image you have loaded.

⚠️ Will give you an error if there is no image (when not bypassed)

Read the notes in that section for more information.

Save Nodes:

Nothing saves unless it is turned on!

You can change the folder locations by just renaming them (the tan nodes in the pic above).

The Metadata node automatically saves the information needed to post on Civitai.

6. Troubleshooting errors & Common issues:

🛑⛔🚫⚠️ I have found that almost all issues are related to the following:

You need to update.

Open your manager and click "Update all".

You are on the desktop version.

Copy your log and paste it into Grok or Claude (Grok does a better job) and follow the instructions.

How to deal with "Import failed" errors

Your Manager window shows you something like this:

Go to the subfolder in your custom_nodes folder that matches the name listed in blue under the "Import failed" tag.

Look for this file

Right click and click "Open in Terminal" from the drop-down menu:

Type "python.exe -m pip install -r requirements.txt", then hit Enter.

It will launch and fulfill the requirements. Pay attention to what the colored text says at the bottom. If you restart ComfyUI and the module does not launch, then feed your log to Grok or Claude to decipher.
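If your log is long, a short script can pull out the folder names that failed to import. This is a rough sketch that assumes the startup log marks failures with the text "(IMPORT FAILED):" followed by the node's folder path; the sample log below is made up for illustration, so check it against your own log before relying on it.

```python
from pathlib import PureWindowsPath

# Assumption: failed custom nodes are marked like this in the startup log.
MARKER = "(IMPORT FAILED):"


def failed_imports(log_text):
    """Return the folder names of custom nodes whose import failed."""
    names = []
    for line in log_text.splitlines():
        if MARKER in line:
            folder_path = line.split(MARKER, 1)[1].strip()
            names.append(PureWindowsPath(folder_path).name)
    return names


# Hypothetical log excerpt, for illustration only.
SAMPLE_LOG = r"""
Import times for custom nodes:
   0.0 seconds: C:\ComfyUI\custom_nodes\node_a
   0.4 seconds (IMPORT FAILED): C:\ComfyUI\custom_nodes\node_b
"""

if __name__ == "__main__":
    for name in failed_imports(SAMPLE_LOG):
        print("Needs attention:", name)
```

Each name it prints is a folder in custom_nodes where the requirements install described above is worth trying first.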

Basic issues are listed below.

Eden Nodes:

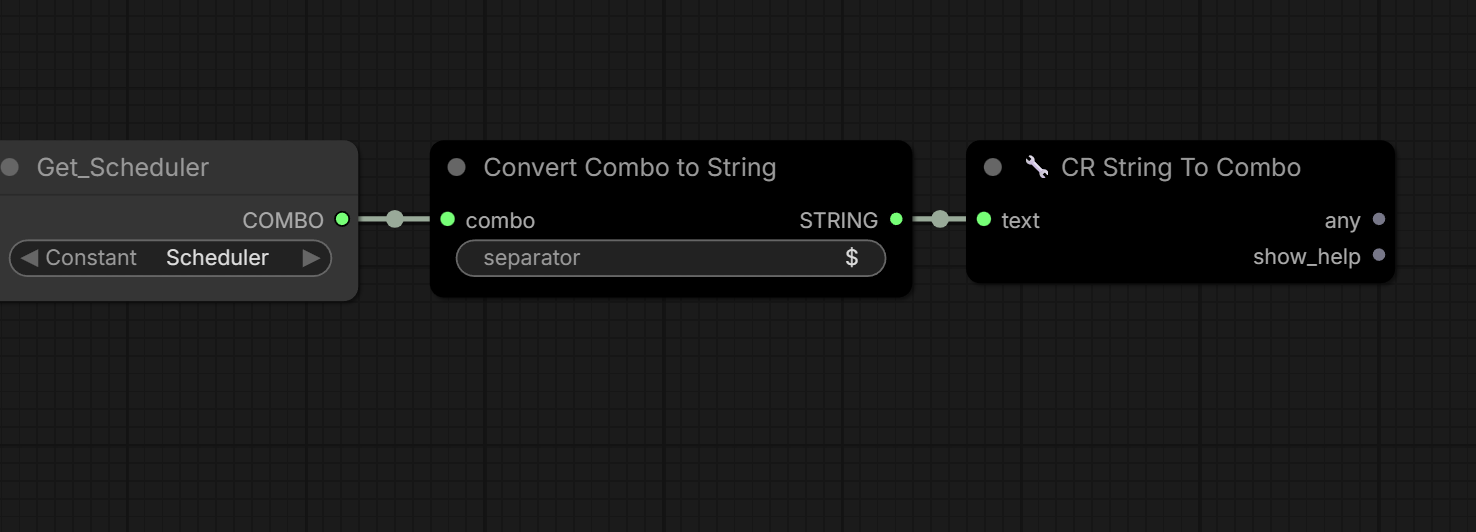

Update: You can replace this node with this combination:

This node is used so that you can use the same scheduler and sampler globally. They are in the Hi Rez Fix area as well as in the Detailers (and in the Sampler areas in Illustrious).

YOU CAN DELETE THEM, but you will have to manually enter the sampler and scheduler for each node.

Cannot execute because a node is missing the class_type property: Node ID '#248'

This is the error you will see. It is the sampler selector node. Once again, just delete it.

⚠️ Florence model precision and attention matter, even on large cards. It should push out a prompt in under 5 seconds even on low VRAM. If you are having errors, mess with those settings.

Black or Noise only images

If you are using Flux2 or Klein, turn off sage attention. This includes if you have it built into your startup (portable).

Your steps are too low

Your set clip last layer is at -1 when it should be at -2 (or the other way around)

Image empty (4 locations)

Load Image (or I2I)

Load Mask

Expression

Color Match (post processing)

"ModelPatchLoader node: cannot access local variable 'model' where it is not associated with a value"

Update ComfyUI to the nightly version, which includes the necessary fixes:

Open ComfyUI Manager (if installed).

In the left column under "Update", switch from "ComfyUI Stable Version" to "ComfyUI Nightly Version".

Click Update/Restart ComfyUI.

Fully restart ComfyUI after the update.

Alternative methods if using portable/manual install:

Run "git pull" in your ComfyUI directory to fetch the latest changes.

Or run the update_comfyui.bat (Windows) or equivalent update script.

Impact Pack Issues:

'DifferentialDiffusion' object has no attribute 'apply'

This happens before the detailers.

You need to update the Impact Pack.

If this does not work for you, place a differential diffusion node between the model and the model input.

AILab_QwenVL_PromptEnhancer: This node has been repaired. Just update the nodes and ComfyUI.

Cannot import name 'AutoModelForVision2Seq' from 'transformers'

This is the main cause: AutoModelForVision2Seq has been deprecated since transformers v5.0 (officially released around late January 2026).

Quick solution: Downgrade transformers to a compatible version (4.57.x is recommended by the repo and related issues for Qwen3-VL). In the terminal (inside your ComfyUI_windows_portable folder):

.\python_embeded\python.exe -m pip uninstall transformers

.\python_embeded\python.exe -m pip install transformers==4.57.1
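Before downgrading, you can check which transformers version is actually installed. The sketch below is a minimal check using Python's standard library; the helper name and the "major version 5 or higher" rule are my own shorthand for the cause described here. Run it with the same Python that ComfyUI uses (for portable, the python_embeded one), otherwise it checks the wrong environment.

```python
from importlib import metadata


def needs_downgrade(version_string, first_bad_major=5):
    """Return True if the version's major number is at or above first_bad_major."""
    major = int(version_string.split(".")[0])
    return major >= first_bad_major


if __name__ == "__main__":
    try:
        installed = metadata.version("transformers")
    except metadata.PackageNotFoundError:
        print("transformers is not installed in this Python environment")
    else:
        if needs_downgrade(installed):
            print(f"transformers {installed} found: downgrade to 4.57.x")
        else:
            print(f"transformers {installed} found: no downgrade needed")
```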

[GetNode] ✗ Variable 'Upscale Image' not found! Available: Height, Width, Upscale factor Tip: Make sure SetNode runs BEFORE GetNode in the graph.

You need to update your nodes. This error is specific to KJ nodes, but they automatically update, so most likely you have more than that which needs to be updated. Open the manager and click "Update all".

!!! Exception during processing !!! PytorchStreamReader failed reading zip archive: failed finding central directory

Traceback (most recent call last):

File "G:\comfy\comfy_v0.10.0-default_cu\ComfyUI\execution.py", line 518, in execute

output_data, output_ui, has_subgraph, has_pending_tasks = await get_output_data(prompt_id, unique_id, obj, input_data_all, execution_block_cb=execution_block_cb, pre_execute_cb=pre_execute_cb, v3_data=v3_data)

The SAM model is outdated for the detailers. This is a new issue as of Jan 31st, 2026.

Anything Everywhere node:

These broadcast several items throughout the workflow. You can tell they are working when what they are sending is highlighted like so:

If they are not highlighted, make sure you have updated.

Florence will give you an error if you do not have "Load Image" turned on.

💡The (down)load Florence model should have a dropdown menu if it is blank. Select the "Large Prompt Gen" option. It should automatically download it for you.

The "Save Mask" node will turn red and give you an error if Controlnet is off and you have "Save Mask" switched on.

OOM errors

Hi res fix:

Turn the upscale down or increase the tiling.

Detailers:

It happens occasionally, especially with the face one. Just hit run again.

Seed VR2

Read the notes in that area.

Decrease upscale resolution

Flux 2 workflow:

You will get Mat (matrix shape) errors if you do not have the correct text encoder and model combination:

Flux 2 Dev goes with Mistral

Flux 2 Klein 4b goes with Qwen_4b

Flux 2 Klein 9b goes with Qwen_8b
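The pairings above boil down to a simple lookup. The sketch below uses the short labels from this guide, not real file names, and the helper is hypothetical; it is just a way to sanity check a combination before you hit run.

```python
# Labels from the list above; these are not actual model file names.
ENCODER_FOR_MODEL = {
    "Flux 2 Dev": "Mistral",
    "Flux 2 Klein 4b": "Qwen_4b",
    "Flux 2 Klein 9b": "Qwen_8b",
}


def check_pairing(model, text_encoder):
    """Return 'OK' if the text encoder matches the model, else a short hint."""
    expected = ENCODER_FOR_MODEL.get(model)
    if expected is None:
        return f"No pairing listed for {model!r}"
    if expected == text_encoder:
        return "OK"
    return f"{model} expects {expected}, not {text_encoder}"


if __name__ == "__main__":
    print(check_pairing("Flux 2 Klein 9b", "Qwen_4b"))
```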

Loras not working:

At the time of this publication, Flux 2 Dev Loras do not work with the Klein models. You need Klein Loras.

ZIT all in one:

When using the checkpoint: AttributeError: 'Linear' object has no attribute 'weight'

This stems from an issue with ComfyUI. It is a mismatch between Clip encoders and it happens at the detailers. Just restart.

7. Advanced Options:

This workflow is modular.

You can take any part of it and move it to another workflow.

You can change the order in which groups are processed

You can add or delete sections entirely.

Getset Nodes:

Each section has a set of nodes that rely on one another. Common nodes include:

Seed

Scheduler

Prefix

Height

Width

etc.

Module swap:

In each section is a Get Image and a Set Image node. You can change the order or completely delete a section simply by adjusting these. For example:

The image above is the detailer. It gets its Image from the Hi Res (Upscale Image) then labels it as Detail Image.

If I were to change the GetNode to another module (say Seed VR2), it would process it after that. Bear in mind you would have to change the other module as well.

You can also completely remove modules in the same fashion.
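If it helps to think of it in code, the Get/Set idea works like a shared set of named variables: each module reads one name and writes another. The sketch below is a toy model of that, not how ComfyUI runs internally. "Upscale Image" and "Detail Image" come from the example above; "Base Image" and "SeedVR2 Image" are names I made up for illustration, and an "image" here is just a list of the modules it has passed through.

```python
def run_modules(modules, variables):
    """Run modules in order; each reads one named image and writes another."""
    for name, get_name, set_name in modules:
        if get_name not in variables:
            available = ", ".join(sorted(variables))
            raise KeyError(f"Variable '{get_name}' not found! Available: {available}")
        # "Processing" just records which module touched the image.
        variables[set_name] = variables[get_name] + [name]
    return variables


# Default order: Hi Rez Fix -> Detailer -> Seed VR2
DEFAULT_ORDER = [
    ("Hi Rez Fix", "Base Image", "Upscale Image"),
    ("Detailer", "Upscale Image", "Detail Image"),
    ("Seed VR2", "Detail Image", "SeedVR2 Image"),
]

# Swapped: the Detailer now gets its image from Seed VR2, and Seed VR2
# has to be pointed at the Hi Rez Fix output instead.
SWAPPED_ORDER = [
    ("Hi Rez Fix", "Base Image", "Upscale Image"),
    ("Seed VR2", "Upscale Image", "SeedVR2 Image"),
    ("Detailer", "SeedVR2 Image", "Detail Image"),
]

if __name__ == "__main__":
    result = run_modules(SWAPPED_ORDER, {"Base Image": []})
    print(result["Detail Image"])
```

Note that two modules had to change for the swap, which is the "change the other module as well" point above. If a module asks for a name nothing has set yet, you get the same kind of "Variable not found" message as the GetNode error in the troubleshooting section.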

Summary

I hope that this was helpful and covered everything. If you have any questions, please message me.

Also, if there are errors in this or you have an idea on how to improve it, I always welcome feedback.

Instagram: https://www.instagram.com/synth.studio.models/

This represents many hours of work. If you enjoy it, please 👍like, 💬 comment, and feel free to ⚡tip 😉

Thanks,

"True Nothing is. Permitted Everything is"- Yoda Ezio, Assassin's Wars

Jay (Lonecat)