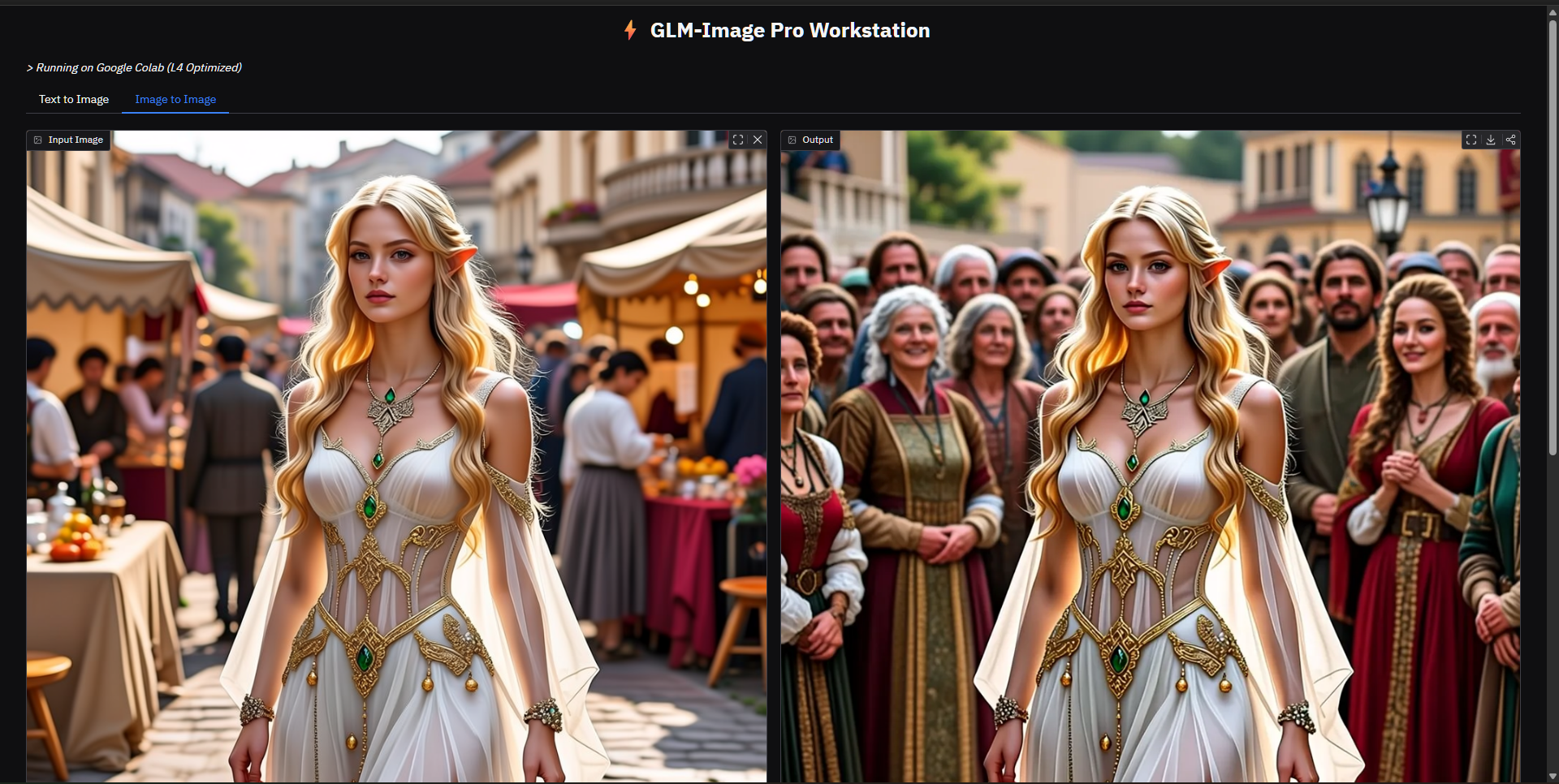

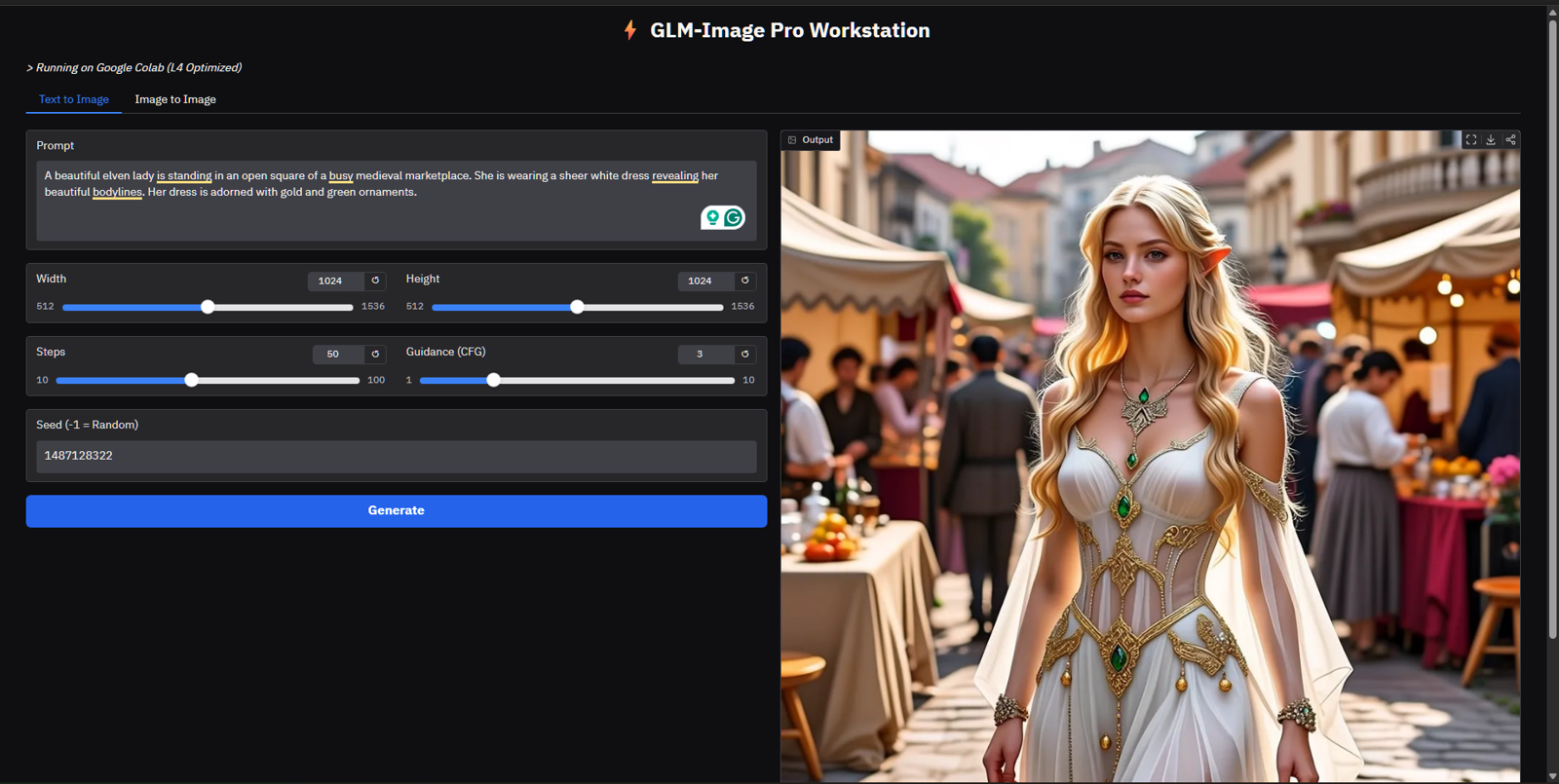

I made a small notebook that runs GLM-Image on Google Colab. The model has two components, a vision encoder and a decoder. By offloading them and swapping them in and out of GPU memory, the pipeline fits on a 24 GB GPU, with peak usage of 16.7 GB of VRAM and 40 GB of system RAM. The notebook runs on an L4 GPU, so you need either Colab Pro or purchased compute units to run it.
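The offloading idea can be sketched as a toy scheduler in plain Python. This is not the notebook's code. The component names and sizes below are hypothetical, "moving to the GPU" is only simulated, and activations are ignored. The point is to show why swapping keeps peak usage near the largest single component rather than the sum of both. In real PyTorch code the move would be `module.to("cuda")` / `module.to("cpu")`, usually followed by `torch.cuda.empty_cache()`, and `torch.cuda.max_memory_allocated()` reports the actual peak.

```python
from contextlib import contextmanager


class SwapScheduler:
    """Toy model of CPU<->GPU offloading: only the active component occupies 'VRAM'."""

    def __init__(self, sizes_gb):
        # Component name -> weight size in GB (hypothetical numbers supplied by the caller).
        self.sizes_gb = dict(sizes_gb)
        self.resident = set()  # components currently "on the GPU"
        self.peak_gb = 0.0

    def _used_gb(self):
        return sum(self.sizes_gb[name] for name in self.resident)

    @contextmanager
    def on_gpu(self, name):
        # Offload everything else first, then "move" this component onto the GPU.
        self.resident.clear()
        self.resident.add(name)
        self.peak_gb = max(self.peak_gb, self._used_gb())
        try:
            yield
        finally:
            # Swap it back to CPU RAM so the next component has room.
            self.resident.discard(name)


# Hypothetical sizes, for illustration only.
sched = SwapScheduler({"vision_encoder": 10.0, "decoder": 12.0})
with sched.on_gpu("vision_encoder"):
    pass  # run the first stage here
with sched.on_gpu("decoder"):
    pass  # run the second stage here
print(sched.peak_gb)  # prints 12.0 (keeping both resident would need 22.0)
```

The trade-off is time: every swap copies weights between system RAM and VRAM, which is why this approach needs plenty of system RAM and runs slower than keeping everything on the GPU.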

The notebook is uploaded as a zip file here. Download and extract the zip file, then upload the notebook to Colab. It consists of three cells and uses a Cloudflare tunnel to provide the link you open in your browser.
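A minimal sketch of how a notebook cell might start a Cloudflare quick tunnel and pick out its public URL, assuming the `cloudflared` binary is installed and the UI listens on a local port (7860 here is a hypothetical choice). The helper names are illustrative, not taken from the notebook, and the exact log format can vary between `cloudflared` versions, so the regex only looks for a `*.trycloudflare.com` address anywhere in the log.

```python
import re
import subprocess
import time

# Quick tunnels are served from *.trycloudflare.com; match that address in the log.
TRYCLOUDFLARE_URL = re.compile(r"https://[-a-z0-9]+\.trycloudflare\.com")


def tunnel_command(port):
    """Command line for a cloudflared quick tunnel to a local HTTP server."""
    return ["cloudflared", "tunnel", "--url", f"http://localhost:{port}"]


def find_tunnel_url(lines):
    """Return the first trycloudflare.com URL in an iterable of log lines, or None."""
    for line in lines:
        match = TRYCLOUDFLARE_URL.search(line)
        if match:
            return match.group(0)
    return None


def start_tunnel(port=7860, log_path="cloudflared.log", timeout=60.0):
    """Launch cloudflared in the background and poll its log file for the public URL.

    The default port is a hypothetical choice; use whatever port your UI listens on.
    """
    # Log to a file instead of a pipe so the child never blocks on a full pipe buffer.
    with open(log_path, "w") as log:
        proc = subprocess.Popen(tunnel_command(port), stdout=log, stderr=subprocess.STDOUT)
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        with open(log_path) as f:
            url = find_tunnel_url(f)
        if url:
            return proc, url
        time.sleep(1.0)
    return proc, None
```

In a cell you would call `proc, url = start_tunnel(7860)` and print `url`; the tunnel keeps running in the background until `proc.terminate()` is called or the runtime stops.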

When you run the final cell, wait for the model to finish downloading before clicking the Cloudflare link; until the download completes, there is nothing running behind the link.
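One way to avoid clicking the link too early is to poll the local port until the server answers. This sketch assumes the UI server only starts listening after the model download finishes; the host, port, and timings are hypothetical and not taken from the notebook.

```python
import socket
import time


def wait_for_port(host="127.0.0.1", port=7860, timeout=600.0, interval=2.0):
    """Poll until something accepts TCP connections on host:port.

    Returns True as soon as a connection succeeds, or False once `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Server not up yet (connection refused or timed out); try again shortly.
            time.sleep(interval)
    return False
```

For example, `if wait_for_port(port=7860): print("Server is up, open the Cloudflare link.")` would block the cell until the server is reachable or the timeout expires.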