Greetings once more, Civitai community. It has been a hot minute since I last posted, and boy do I have a treat for you!

Movie Studio 1.0

An all-inclusive kit to get you started generating your own videos. Groups include T2V, I2V, Dialog Generation, Merge Audio with Lip-sync, Environmental sounds, Music, and a tool to easily layer your audio into an already created video.

This was a fun little project that takes my T2V and I2V groups and moves them to the next level. New to AI generation and think a workflow takes hours of setup and research? Well, I have you covered. Every model is meticulously documented and linked for download: simply copy the link, paste it, and download to the location specified. The setup instructions are very detailed so you can quickly and easily get it up and running.

LatentSync has been a wonderful tool for lip-syncing audio and video (the face recognition can sometimes be touchy), but no real guide existed for setting it up from start to finish. Well, I fixed that.

Getting Started:

1. Update ComfyUI -> ./update/update_comfyUi

2. Download FFMPEG from https://www.gyan.dev/ffmpeg/builds/packages/ffmpeg-7.1.1-full_build-shared.7z or any other provider with this version.

3. Create a folder named FFMPEG and extract the files into it.

4. If you are using Windows (and I assume you are), we need to add paths for ComfyUI's embedded Python and FFMPEG, as both are required to make LatentSync work.

5. To set the path in Windows: press Win + R and type sysdm.cpl. Click the Advanced tab at the top, then Environment Variables. In the top window select Path and click Edit. Click New, then Browse, browse to your python_embeded folder, and click OK. Click New again, then Browse, and select your FFMPEG/bin folder. Then click OK. Do the same in the bottom window: select Path -> Edit, then add python_embeded and FFMPEG/bin.
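If you want to confirm the PATH entries took effect, a small check like the one below can help. This is only a sketch: it assumes the executables are named python and ffmpeg, and the helper name find_on_path is made up for illustration. Open a new command prompt first, since already-open windows keep the old PATH.

```python
import shutil


def find_on_path(names):
    """Map each executable name to its resolved location, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    # "python" and "ffmpeg" are the two tools this guide adds to PATH.
    for name, location in find_on_path(["python", "ffmpeg"]).items():
        print(f"{name}: {location or 'NOT FOUND on PATH'}")
```

If python resolves to something other than your python_embeded folder, another Python install is ahead of it on PATH, and the pip commands later in this guide may install into the wrong place.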

Ok now to the fun:

6. Open ComfyUI and load the workflow.

7. If you get a message to download any files, you can either let ComfyUI Manager do it or use my links.

8. Install all missing nodes. (This takes a while; there are a lot of them.)

9. If you don't already have it configured, the ACE-Step model loader will fail. You must set up folders for it and move the required files into them; see the notes right beside the group.

Once done, everything works except for LatentSync. Here is what you were waiting for:

10. LatentSync error: torchcodec required. This hung me up for several days, but I have the solution for you. torchcodec and insightface are both required to get LatentSync working. First we focus on torchcodec:

torchcodec: navigate to your python_embeded folder, click in the location bar, and type CMD to open a command prompt. Then run: python -m pip install torchcodec. If you refresh and load again, you get an error that insightface does not exist. Now, I have tried hell and high water to get this to install, but try as I might the damn thing refuses to build the wheel, so we will just download a prebuilt wheel and install it. Here is the trick:

First you have to figure out which version of embedded Python you have. Navigate to .\python_embeded and you will see a zip file called PythonXXX (XXX is your version).

Now download the right wheel:

Download the wheel to your main ComfyUI folder (the one with the run.bat files), then click in the navigation bar and open CMD.

Run the command: python_embeded\python.exe -m pip install insightface-<version>.whl. Mine is 3.13, so the command looks like this: python_embeded\python.exe -m pip install insightface-0.7.3-cp313-win_amd64.whl
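If you are unsure whether a wheel matches your embedded Python, a quick check along these lines may help. It is a sketch, not part of the workflow: the function names are made up for illustration, and it only looks for the cpXYZ tag in the filename, which is an assumption about how the wheel is named. Run it with python_embeded\python.exe so it reports that interpreter's version.

```python
import sys


def cpython_tag(version_info=sys.version_info):
    """Return the CPython wheel tag for a version, e.g. 'cp313' for Python 3.13."""
    return f"cp{version_info[0]}{version_info[1]}"


def wheel_matches_interpreter(wheel_filename, version_info=sys.version_info):
    """True if the wheel's filename carries this interpreter's CPython tag."""
    suffix = ".whl"
    stem = wheel_filename[:-len(suffix)] if wheel_filename.endswith(suffix) else wheel_filename
    # Wheel filenames separate their fields with dashes.
    return cpython_tag(version_info) in stem.split("-")


if __name__ == "__main__":
    print("This interpreter needs a wheel tagged:", cpython_tag())
```

For example, wheel_matches_interpreter("insightface-0.7.3-cp313-win_amd64.whl") should return True only when run under Python 3.13.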

And were you to run LatentSync now, you would get one more error: onnxruntime not installed. So go back to the main folder and run one of these:

python.exe -m pip install onnxruntime (for CPU)

python.exe -m pip install onnxruntime-gpu (for GPU)
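To see at a glance which of the three packages are still missing, something like the following can be run with the embedded Python. It is a minimal sketch; the helper name missing_modules is made up for illustration, and it only checks that the modules can be found, not that they load correctly. Both onnxruntime and onnxruntime-gpu are imported under the name onnxruntime.

```python
import importlib.util


def missing_modules(names):
    """Return the subset of top-level module names that cannot be found by the interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# The three packages this guide installs for LatentSync.
REQUIRED = ["torchcodec", "insightface", "onnxruntime"]

if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    print("Missing:", ", ".join(missing) if missing else "nothing, all found")
```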

Load it up and try running the group. It downloads the models automatically.

But not the VAE. See, I told you this was a pain.

So navigate to .\ComfyUI\custom_nodes\ComfyUI-LatentSyncWrapper and create a folder called checkpoints (unless it is already there), then create these folders inside it: Auxiliary and VAE.

VAE Download - https://huggingface.co/stabilityai/sd-vae-ft-mse/resolve/main/diffusion_pytorch_model.safetensors?download=true

Config Download - https://huggingface.co/stabilityai/sd-vae-ft-mse/resolve/main/config.json?download=true

Place these in the VAE folder.

Stable_syncnet - https://huggingface.co/ByteDance/LatentSync-1.6/resolve/main/stable_syncnet.pt?download=true

Place it in the main checkpoints folder.
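The folder setup above can also be scripted. The sketch below creates the Auxiliary and VAE folders and reports which of the required files are not in place yet. The function name prepare_checkpoints is made up for illustration, and the filenames are assumed to be the ones the download links above save as.

```python
from pathlib import Path

# Files this guide places by hand, relative to the checkpoints folder.
REQUIRED_FILES = [
    Path("VAE") / "diffusion_pytorch_model.safetensors",
    Path("VAE") / "config.json",
    Path("stable_syncnet.pt"),
]


def prepare_checkpoints(checkpoints_dir):
    """Create the expected subfolders and return the required files that are still missing."""
    root = Path(checkpoints_dir)
    for sub in ("Auxiliary", "VAE"):
        # exist_ok avoids an error when the folder is already there.
        (root / sub).mkdir(parents=True, exist_ok=True)
    return [rel.as_posix() for rel in REQUIRED_FILES if not (root / rel).is_file()]
```

From the main ComfyUI folder you could call prepare_checkpoints(r"ComfyUI\custom_nodes\ComfyUI-LatentSyncWrapper\checkpoints") and download whatever it lists.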

Aux (optional) - you can download these if you wish from https://huggingface.co/ByteDance/LatentSync-1.6/tree/main:

File 3 - https://huggingface.co/ByteDance/LatentSync-1.6/resolve/main/auxiliary/sfd_face.pth?download=true

Ok, everything is in place. Run the workflow.

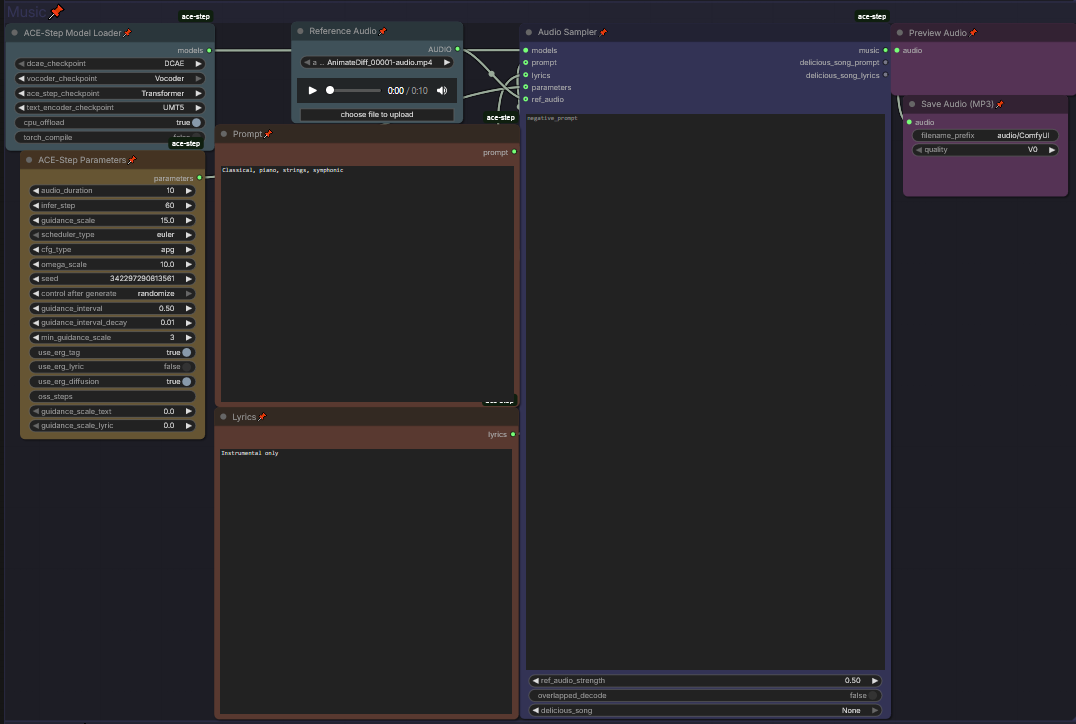

Long-winded, I know, but well worth the effort, trust me. Here is a preview of what the workflow looks like:

The T2V and I2V are simply copies of my other posted workflows.

Simple enough: each of these tools is modular, so the output from one is easily fed into another workflow or group. Because it is modular, you can slot in any tools you want; I built it specifically to work that way. Enable the tool you want and leave the others disabled. (Note: if you leave them enabled they will try to run, which is a bad idea on low VRAM.) When you have finished running a group tool, use free model or free model and node cache in ComfyUI Manager.

Well, that's about it. Have fun, remember to leave feedback if you want, and if you make any changes to this workflow all I ask is that you share it! :)

As always a special thanks to my ever patient rubber duck/thinking partner.

Until next time.