This is a guide on how to use TensorRT on compatible RTX graphics cards to increase inference speed.

Caveats:

You will have to optimize each checkpoint in order to see the speed benefits.

Optimized checkpoints are unique to your system architecture and cannot be shared or distributed.

Optimizing a checkpoint takes a lot of disk space: the optimized files are about twice the size of the original base model, on top of the original. So a 2GB base model needs another 4GB for the optimized files, for a total of 6GB.

This technology is bleeding edge, and I'm not responsible for any issues you create as a result of following the advice in this guide.
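The disk-space caveat above is simple arithmetic, and it can help to run the numbers before optimizing several checkpoints. This is a minimal sketch: the function name and the `overhead_factor` parameter are made up for illustration, and the factor of 2 is only the rule of thumb stated in this guide, not a measured value.

```python
def optimized_footprint_gb(base_model_gb: float, overhead_factor: float = 2.0) -> dict:
    """Estimate disk usage for one optimized checkpoint.

    overhead_factor=2.0 is the guide's rule of thumb (assumption):
    the optimized files take about twice the base model's size.
    """
    extra_gb = base_model_gb * overhead_factor
    return {
        "base_gb": base_model_gb,
        "extra_gb": extra_gb,
        "total_gb": base_model_gb + extra_gb,
    }


# The 2GB example from the caveat: 4GB extra, 6GB in total.
print(optimized_footprint_gb(2.0))
```

Multiply the total by the number of checkpoints you plan to optimize to get a rough idea of the free space you need.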

Prerequisites:

NVIDIA RTX GPU with 8GB of VRAM

cuDNN 8.9.4.25 for CUDA 11.x (or a higher cuDNN version for 11.x) installed in

\venv\Lib\site-packages\torch\lib

Download cuDNN here: https://developer.nvidia.com/cudnn

Download the zip from that website, then put the contents of the /bin/ and /lib/x64/ folders (all of the individual files) into:

\stable-diffusion-webui\venv\Lib\site-packages\torch\lib
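If you would rather script the copy than drag files around, the step above could be sketched like this. It is only an illustration: the function name is hypothetical, and you have to pass in the folder where you extracted the cuDNN zip and your own torch lib folder yourself. Existing files with the same name are overwritten, so back up the torch lib folder first if you want to be able to undo it.

```python
import shutil
from pathlib import Path


def copy_cudnn_files(cudnn_root: Path, torch_lib: Path) -> list:
    """Copy every file from <cudnn_root>/bin and <cudnn_root>/lib/x64 into torch_lib.

    Returns the names of the files that were copied.
    """
    if not torch_lib.is_dir():
        raise FileNotFoundError(f"torch lib folder not found: {torch_lib}")

    copied = []
    for sub in (Path("bin"), Path("lib") / "x64"):
        src_dir = cudnn_root / sub
        if not src_dir.is_dir():
            continue  # skip a folder that is missing from this cuDNN package
        for src in sorted(src_dir.iterdir()):
            if src.is_file():
                shutil.copy2(src, torch_lib / src.name)  # overwrites same-named files
                copied.append(src.name)
    return copied


# Example call (both paths are placeholders, adjust them to your system):
# copy_cudnn_files(
#     Path(r"C:\Downloads\cudnn-extracted"),
#     Path(r"C:\stable-diffusion-webui\venv\Lib\site-packages\torch\lib"),
# )
```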

You can check your current version with

pip list
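As a complement to the package list, you can also ask PyTorch which cuDNN build it actually loads. The sketch below assumes it is run with the webui venv active; `torch.backends.cudnn.version()` returns a single integer, and the helper names here are made up for illustration. The decoding assumes the usual cuDNN numbering (for example 8904 for 8.9.4 in the 8.x series, and a wider encoding such as 90100 for 9.1.0 in the 9.x series).

```python
def decode_cudnn_version(raw: int) -> tuple:
    """Turn cuDNN's integer version into (major, minor, patch).

    Assumed encodings: major*1000 + minor*100 + patch up to cuDNN 8.x,
    major*10000 + minor*100 + patch from cuDNN 9.x on.
    """
    if raw >= 90000:
        major, rest = divmod(raw, 10000)
    else:
        major, rest = divmod(raw, 1000)
    minor, patch = divmod(rest, 100)
    return major, minor, patch


def loaded_cudnn_version():
    """Return the cuDNN version PyTorch loads, or None if it cannot be determined."""
    try:
        import torch
    except (ImportError, OSError):
        return None  # torch is not installed (or failed to load) in this environment
    raw = torch.backends.cudnn.version()
    return None if raw is None else decode_cudnn_version(raw)


print(loaded_cudnn_version())
```

If this prints a version lower than the one you copied into the torch lib folder, the new files are probably not the ones being picked up.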

Instructions:

Activate your venv:

./venv/scripts/activate

Launch webui:

./webui-user.bat

Go to the Extensions tab -> Install from URL and install this URL:

https://github.com/NVIDIA/Stable-Diffusion-WebUI-TensorRT

Go to Settings → User Interface → Quick Settings List, add sd_unet. Apply these settings, then reload the UI.

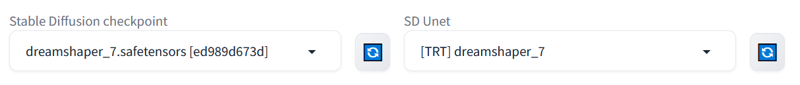

Reload webui. You will have a new tab for TensorRT and a SD Unet dropdown next to the checkpoint dropdown.
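The Settings page is the route this guide uses, but for reference, the quick settings entry ends up in the webui's config file. The sketch below shows roughly what that change amounts to. It rests on assumptions: that your webui version stores the list under a `quicksettings_list` key in `config.json`, and that the default list is `["sd_model_checkpoint"]` when the key is absent. The function name is made up for illustration. Check your own config file before relying on any of this, and close the webui before editing it.

```python
def add_quicksetting(config: dict, name: str = "sd_unet") -> dict:
    """Return a copy of the config with `name` in the quick settings list.

    Assumption: the list lives under "quicksettings_list" and defaults to
    ["sd_model_checkpoint"] when the key is missing.
    """
    updated = dict(config)
    entries = list(updated.get("quicksettings_list", ["sd_model_checkpoint"]))
    if name not in entries:
        entries.append(name)
    updated["quicksettings_list"] = entries
    return updated


# Example with an in-memory config; the input dict is left untouched.
print(add_quicksetting({"quicksettings_list": ["sd_model_checkpoint"]}))

# To apply it to a real file (the path is a placeholder):
# import json
# from pathlib import Path
# path = Path("stable-diffusion-webui/config.json")
# path.write_text(json.dumps(add_quicksetting(json.loads(path.read_text())), indent=4))
```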

Select the checkpoint you want to optimize in the checkpoint dropdown.

Select a preset. This determines which resolution the engine is optimized for. Choosing "static" will be faster, but only works for that one resolution. For SD1.5 checkpoints, I recommend the 512x512 - 768x768 preset so you can generate a range of resolutions.
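The difference between a static and a ranged preset can be pictured as a simple bounds check on the image size. This is only a toy model to illustrate the idea: the class and its fields are invented for this example, and a real engine profile may also restrict other things (such as batch size), which are not modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResolutionProfile:
    """Toy model of a preset: every image side must fall within [min_side, max_side]."""

    min_side: int
    max_side: int

    def covers(self, width: int, height: int) -> bool:
        return all(self.min_side <= side <= self.max_side for side in (width, height))


static_512 = ResolutionProfile(512, 512)     # "static": one resolution only
dynamic_sd15 = ResolutionProfile(512, 768)   # the 512x512 - 768x768 range

for size in [(512, 512), (640, 768), (1024, 1024)]:
    print(size, "static:", static_512.covers(*size), "dynamic:", dynamic_sd15.covers(*size))
```

In this picture, a size that falls outside the preset you exported needs its own engine, which is why the ranged preset is the more convenient default.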

Click "Export Engine" - watch the terminal and wait while the model is "folded" and optimized for your GPU.

After the process finishes, the optimized UNet and ONNX files are output to

stable-diffusion-webui/models/Unet-trt and stable-diffusion-webui/models/Unet-onnx respectively.

Now you can select the optimized engine from the SD Unet dropdown.

Taste the speed!!!
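If the SD Unet dropdown stays empty, a quick way to check whether the export actually wrote anything is to list the two output folders named above. A minimal sketch, assuming you pass in your own webui folder; the function name is made up for illustration, and no assumption is made about file names or extensions.

```python
from pathlib import Path


def list_exported_files(webui_root: Path) -> dict:
    """List the files in models/Unet-trt and models/Unet-onnx under webui_root.

    A folder that does not exist yet is reported as an empty list.
    """
    result = {}
    for folder in ("Unet-trt", "Unet-onnx"):
        directory = webui_root / "models" / folder
        if directory.is_dir():
            result[folder] = sorted(p.name for p in directory.iterdir() if p.is_file())
        else:
            result[folder] = []
    return result


# Example call (the path is a placeholder, adjust it to your install):
# print(list_exported_files(Path("stable-diffusion-webui")))
```

Since these folders are also where the extra disk usage goes, they are the place to clean up when you no longer need an optimized checkpoint.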