Introduction

Hotshot-XL is a motion module used with SDXL that can make amazing animations. It is not AnimateDiff but a different structure entirely. However, Kosinkadink, who makes the AnimateDiff ComfyUI nodes, got it working, and I worked with one of the creators to figure out the right settings to get good outputs.

If this is your first time getting into AI animation, I suggest you start with my AnimateDiff Guide (https://civitai.com/articles/2379). This guide is designed as a supplement to that one.

**WORKFLOWS ARE ATTACHED TO THIS POST - TOP RIGHT CORNER TO DOWNLOAD UNDER ATTACHMENTS**

**Workflows Updated as of Nov 3, 2023 - Let me know if there are any issues**

System Requirements

A Windows computer with an NVIDIA graphics card with at least 10GB of VRAM. That's right! The same as AnimateDiff! For me the speed is more or less the same as well, although I have been told that some older graphics cards do show a speed difference.
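If you are not sure how much VRAM your card has, a small script can check it against the 10GB figure above. This is a minimal sketch, assuming the NVIDIA driver's `nvidia-smi` tool is on your PATH; the function names and the `MIN_VRAM_GB` constant are my own for illustration and are not part of ComfyUI.

```python
import subprocess

MIN_VRAM_GB = 10  # minimum suggested in this guide (assumed threshold for the check)


def parse_vram_mib(nvidia_smi_output: str) -> list[int]:
    """Parse nvidia-smi output that has one integer (MiB) per line, one line per GPU."""
    return [int(line.strip()) for line in nvidia_smi_output.splitlines() if line.strip()]


def meets_requirement(vram_mib: int, min_gb: float = MIN_VRAM_GB) -> bool:
    """Return True if the card reports at least min_gb * 1024 MiB of total memory."""
    return vram_mib >= min_gb * 1024


def query_vram_mib() -> list[int]:
    """Ask nvidia-smi for the total memory of every GPU, in MiB."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_vram_mib(result.stdout)
```

On a machine with an NVIDIA card, `[meets_requirement(m) for m in query_vram_mib()]` gives one True/False per GPU.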

Installing the Dependencies

If you have installed all the nodes in my previous guide you do not need to install any new nodes for the basic workflows. You can skip to the next paragraph. If you want all the nodes from the appendix workflow you will need to additionally install (I suggest you use the ComfyUI Manager to install these rather than Git):

IPAdapter Plus (improved character coherence - currently has a bug - will explain below) - https://github.com/cubiq/ComfyUI_IPAdapter_plus

ComfyUI Frame Interpolation (frame interpolation nodes) - https://github.com/Fannovel16/ComfyUI-Frame-Interpolation

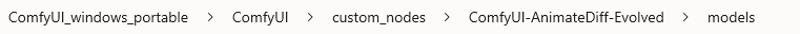

The motion module is available here: https://huggingface.co/hotshotco/Hotshot-XL/tree/main. There are two versions, but the smaller pruned "hsxl_temporal_layers.f16.safetensors" seems to work just as well as the full model. You need to put it here:

If for some reason the above official motion models are not working, try this one: https://huggingface.co/Kosinkadink/HotShot-XL-MotionModels/tree/main

I had thought some models worked better than others, but with the correct settings it seems like they all work fine.

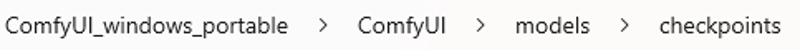

The default model, "sd_xl_base_1.0.safetensors", is available here: https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0/tree/main

FenrisXL has been the most flexible checkpoint in my testing: https://civitai.com/models/122793/fenrisxl

For Anime I used: https://civitai.com/models/118406/counterfeitxl

I have been told most checkpoints actually work fine with Hotshot-XL when the settings are correct. However, I suspect some might be better than others - just like with AnimateDiff.

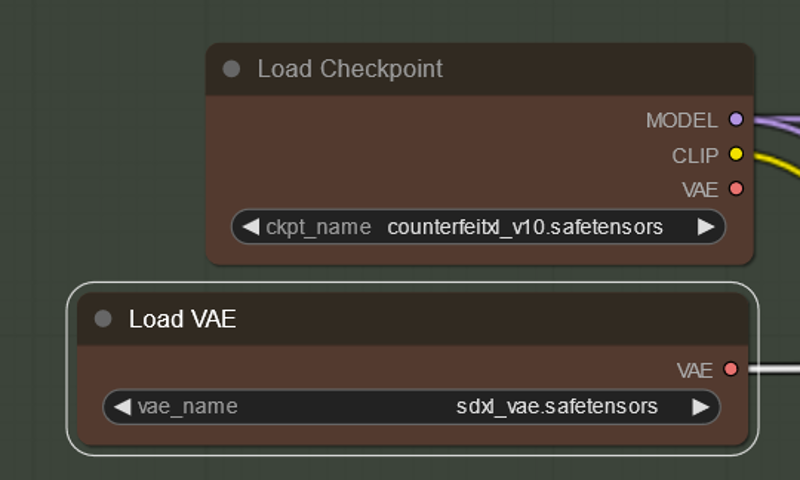

Put them in.

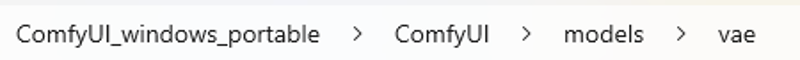

You will need an SDXL VAE. I used this one: https://huggingface.co/stabilityai/sdxl-vae/tree/main

Download "sdxl_vae.safetensors" and put it:

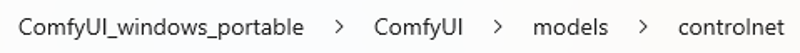

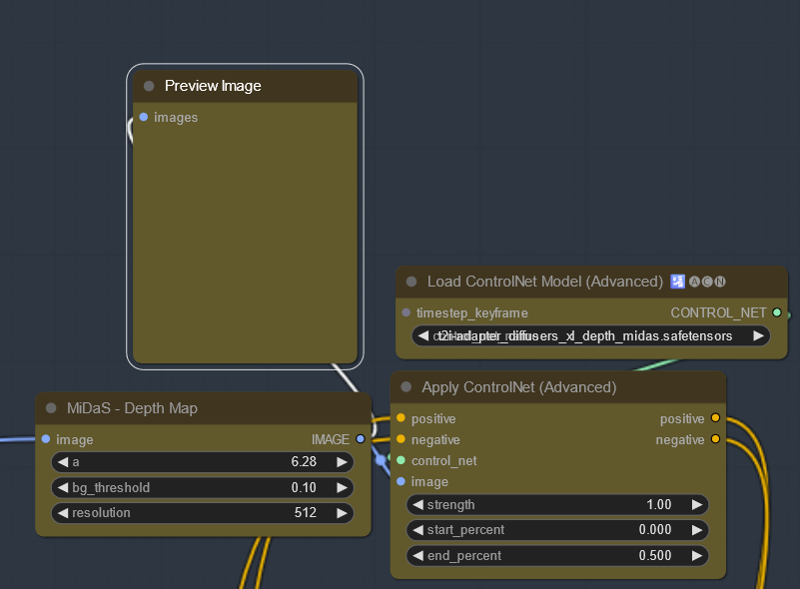

For Vid2Vid I use a Depth ControlNet - it seems to be the most robust one to use. You can find a repository of models here: https://huggingface.co/lllyasviel/sd_control_collection/tree/main. There are a lot of them, and not all are as good as others. For my tutorial workflows I used "t2i-adapter_diffusers_xl_depth_midas". Put it here:

That's it!

Hotshot-XL vs. AnimateDiff

Now that we have AnimateDiff and Hotshot-XL, when would you use one or the other? In this section I hope to compare and contrast them. You can skip this section if you are just starting, but I hope it helps those with more experience.

The biggest differences are partially due to structure - AnimateDiff has a context length of 16 frames and Hotshot only 8 (but thank goodness, otherwise think of the VRAM usage).

Overall, Hotshot seems to take more work to get a good output. With Vid2Vid especially, once you figure out the settings you can do whatever you want with it. Even just changing the aspect ratio can mean having to adjust things. Hopefully this becomes easier as things get fine-tuned. SDXL is much better with coherence compared to 1.5, so you can have body positions and other things you could not have otherwise.

For Vid2Vid the quality with Hotshot can be amazing! Txt2Vid suffers from the shorter context length. Motion can also be a bit more random than with AnimateDiff. You also suffer from SDXL not currently having the same number/quality of ControlNets.

To summarize: AnimateDiff is tried and dependable and can take whatever you throw at it. Hotshot-XL can give amazing results but takes more tweaking.

Node Explanations and Settings Guide

I suggest you do not skip this section, as you will likely need to tweak some settings to get the output you want. I will discuss them node by node. This is supplemental to my previous guide, explaining the new nodes used and also the nodes whose settings matter for Hotshot. Remember to take my settings with a grain of salt - they are what I have found to be a good starting point. It is quite possible that there are better base settings - so explore!

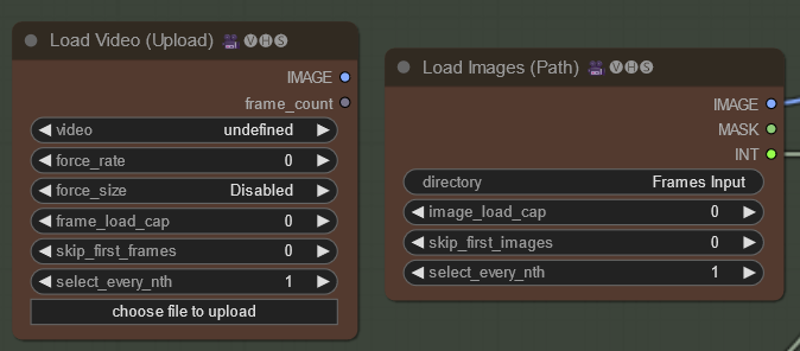

These are newer versions of the load images nodes. All you have to do is copy the location of your frames into the directory field of the Load Images (Path) node. I have also included a Load Video node that can extract frames from videos so you do not need to split them first. If you use it, you have to delete the Load Images node and hook this one up instead. See my previous guide for further discussion of the load images node. Of note for any of the (upload) nodes - what it actually does is copy the file into:

It will stay there even after ComfyUI is closed, so you can use it again by selecting it in the video tab until you delete it.
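Before pointing Load Images (Path) at a folder, it can help to confirm how many frames are actually in it and in what order they sort. The snippet below is a minimal sketch using only the Python standard library; `list_frames` and the extension list are my own assumptions for illustration, not how the node itself reads files.

```python
from pathlib import Path

# Assumed set of image extensions for this sketch; adjust to match your frames.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def list_frames(directory: str) -> list[Path]:
    """Return the image files in `directory`, sorted by file name."""
    folder = Path(directory)
    return sorted(
        path
        for path in folder.iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )
```

`len(list_frames("path/to/frames"))` gives the frame count of the animation. The sort is plain alphabetical, so zero-padded names (frame_001.png, frame_002.png) keep their order while unpadded ones (frame_10.png before frame_2.png) do not.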

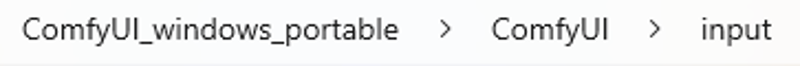

Resolution matters! Changing resolutions can change how the video looks and may require different tuning. Previously I felt that following the resolutions on the GitHub page gave a lot worse quality than something higher. Recent tests seem to show that a lower resolution is fine with the rest of the settings adjusted. However, if you are using a lower resolution than I do here and your quality is not as good, try a higher one if you can. Make sure the height and width are at the right ratio for your video!
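To keep the height and width at the right ratio for a source video, you can scale the source size down and snap it to a multiple of 8 (Stable Diffusion latents are 1/8 of the pixel resolution). This is a rough sketch; the `fit_resolution` helper and the default `long_side` of 768 are assumptions for illustration, not recommended values from the developers.

```python
def fit_resolution(
    src_width: int,
    src_height: int,
    long_side: int = 768,  # assumed target for the longer edge, pick your own
    multiple: int = 8,     # latent-friendly step size
) -> tuple[int, int]:
    """Scale the source size so its longer edge is about `long_side`,
    keeping the aspect ratio and snapping both edges to `multiple`."""
    scale = long_side / max(src_width, src_height)

    def snap(value: float) -> int:
        # Round to the nearest multiple, never going below one multiple.
        return max(multiple, int(round(value / multiple)) * multiple)

    return snap(src_width * scale), snap(src_height * scale)
```

For example, a 1920x1080 source with `long_side=768` gives (768, 432), and the same video in portrait gives (432, 768).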

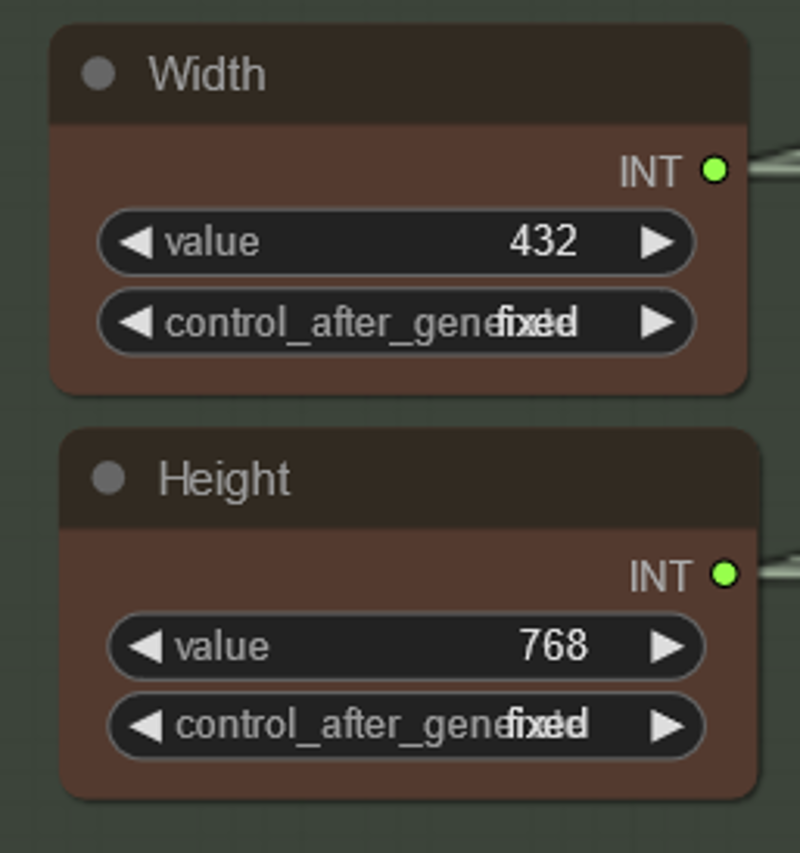

Make sure you have an SDXL checkpoint and VAE! I think a lot of people's first tries with Hotshot did not work partially because of a bad choice of models. If your result ends up looking messy/blurry, it may be the model (it is possible I do not have the settings right for other models, but that is for you to explore). The other thing is that Hotshot seems to push for realism - many anime models will end up looking realistic. Counterfeit seems to have this problem less, but I do occasionally encounter it.

As a note, most LORAs seem to work fine but some were more blurry - I expect this is the same issue.

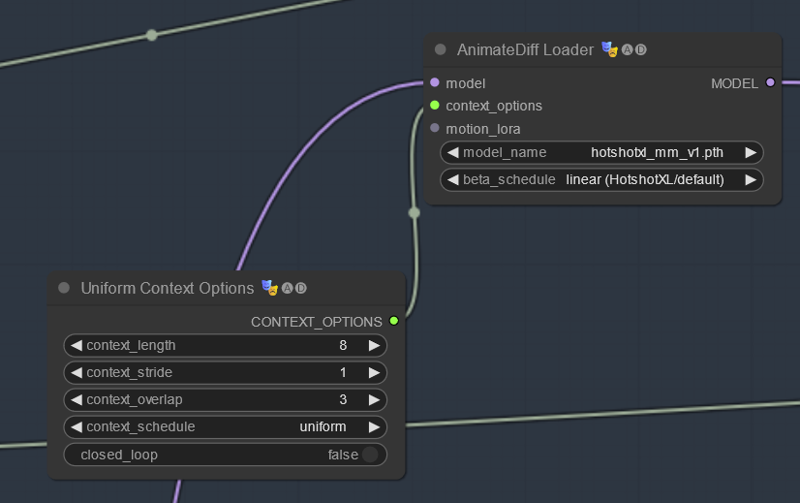

The important thing to notice here is the different beta scheduler, which is essential. Hotshot also seems a bit more 'steerable' with respect to context_overlap. You can try less, but at 0 you will notice jumps between each run. I did some testing and felt 3 was a good spot.

Context_stride is actually helpful here, especially with Txt2Vid - it can cause its own kind of artifacts, but I would explore increasing it to help with consistency if that is your wish. I often use it at 3.
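To see why an overlap of 0 produces jumps, it helps to look at which frames each 8-frame run covers. The function below is a simplified sketch of overlapping sliding windows and is not the actual scheduler used by the AnimateDiff nodes; `sliding_windows` is a name made up for illustration and context_stride is not modelled at all.

```python
def sliding_windows(
    total_frames: int,
    context_length: int = 8,   # Hotshot-XL context length
    context_overlap: int = 3,  # frames shared between neighbouring windows
) -> list[list[int]]:
    """Split frame indices into windows of `context_length` frames,
    where neighbouring windows share about `context_overlap` frames."""
    if not 0 <= context_overlap < context_length:
        raise ValueError("context_overlap must be >= 0 and < context_length")
    if total_frames <= context_length:
        return [list(range(total_frames))]

    step = context_length - context_overlap
    windows = []
    start = 0
    while start + context_length < total_frames:
        windows.append(list(range(start, start + context_length)))
        start += step
    # Align the last window with the end so every frame is covered.
    windows.append(list(range(total_frames - context_length, total_frames)))
    return windows
```

With 16 frames and an overlap of 3 the windows are frames 0-7, 5-12 and 8-15, so neighbouring runs always share some frames. With an overlap of 0 they are 0-7 and 8-15, which share nothing, and that is where a visible jump between runs can appear.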

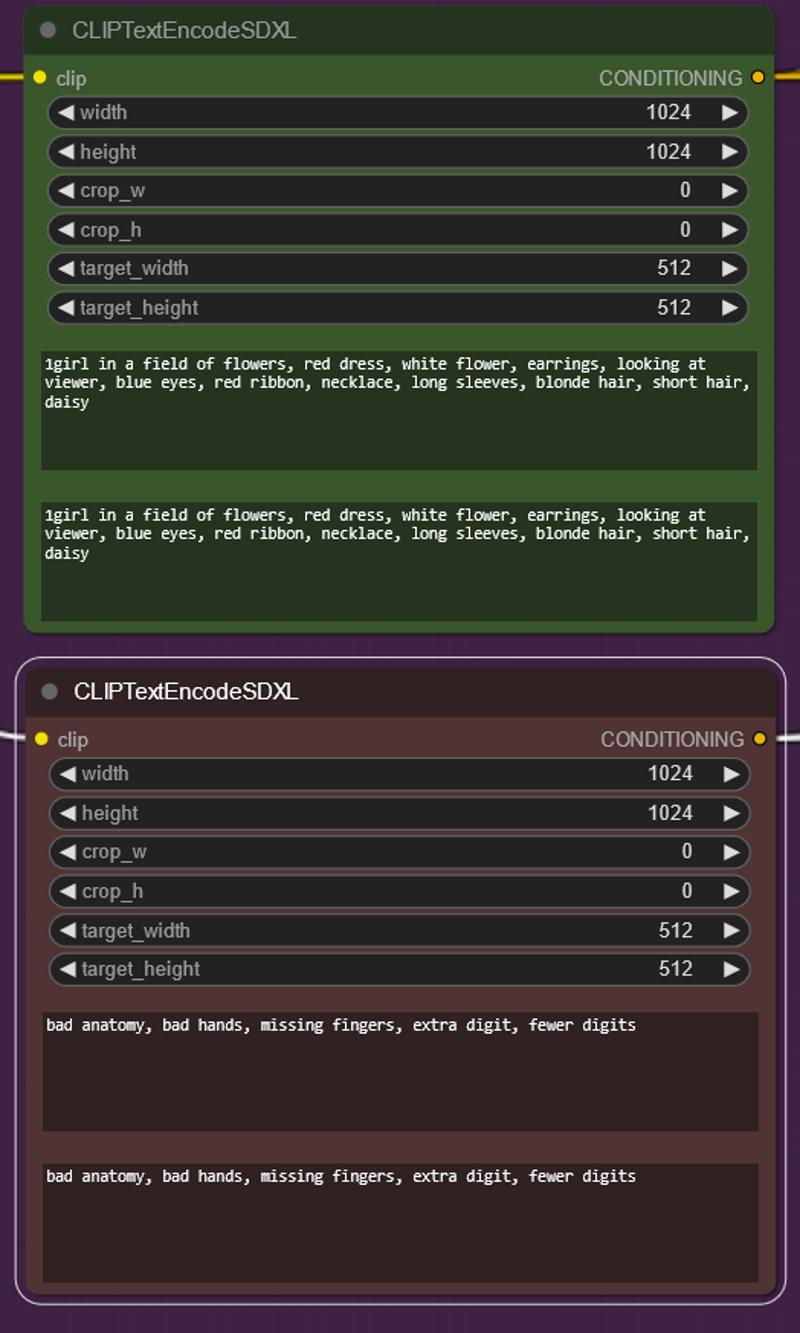

I have done a lot of work on the width/height/crop_w/crop_h/target_width/target_height settings. This seems like a good, flexible setup. At this point I am not sure what exactly helps, but I think they are important. If you set width/height too high, I think it tries to add too much random detail and your output suffers. Target_width and target_height should be at 512 per the developer; I changed them a bit in a test without much change. For a while I had crop_w and crop_h at the resolution of the latent, which did seem to help with stability - it did, however, mess with IPAdapter, so I set them back to 0 and did not notice a whole lot of change. Honestly - if anybody anywhere has a good explanation of what these settings actually do or are supposed to do, let me know.

Prompting is also very important - Hotshot is a lot more sensitive to prompts, and more general ones especially can cause more inconsistencies. I am not a pro at SDXL prompting, and this took some getting used to coming from 1.5. You will likely spend a bit of time troubleshooting your prompt.

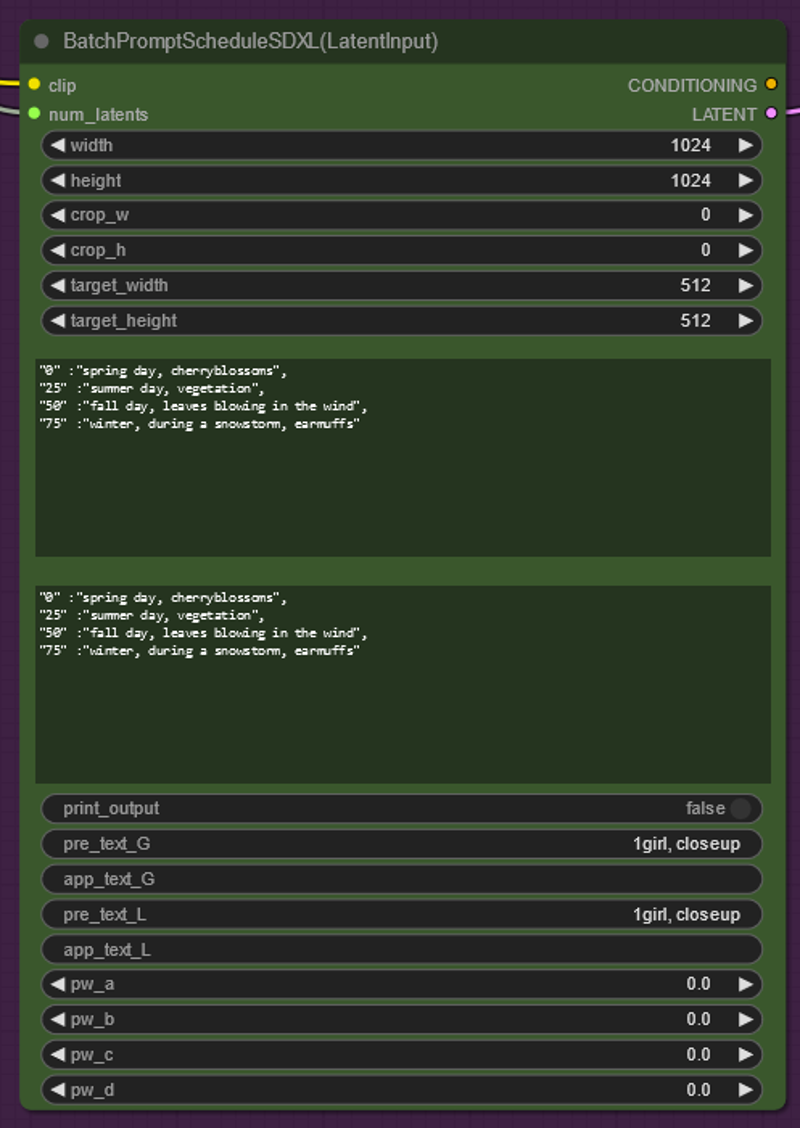

Prompt Travel works out of the box! Nothing much to say here except do note that there are double the number of pre_text and app_text boxes, so do not forget to use them both/duplicate if needed.

FYI the actual max number of frames this can handle is 9999. If you are using the non-latent input version of this node (it was in my older workflows and remains in the Everything Bagel workflow), make sure Max Frames = the number of frames in the animation. If you do not, prompt travel will not work.
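If you write longer prompt travel schedules, a tiny helper can catch keyframes that fall outside the animation before you paste the text into the node. This is a sketch under assumptions: it assumes the node takes keyframes as quoted frame numbers followed by quoted prompts, one per line and separated by commas, and `build_schedule` is a hypothetical helper, not part of the prompt travel nodes.

```python
def build_schedule(keyframes: dict[int, str], max_frames: int) -> str:
    """Format {frame: prompt} pairs as schedule text, checking that
    every keyframe lies inside the animation (0 .. max_frames - 1)."""
    for frame in keyframes:
        if not 0 <= frame < max_frames:
            raise ValueError(f"keyframe {frame} is outside 0..{max_frames - 1}")
    # Assumed text format: "frame": "prompt", one entry per line.
    lines = [f'"{frame}": "{prompt}"' for frame, prompt in sorted(keyframes.items())]
    return ",\n".join(lines)


schedule = build_schedule(
    {0: "a girl walking in a field, spring", 8: "a girl walking in a field, winter"},
    max_frames=16,
)
print(schedule)
```

Prompts that contain double quotes are not escaped here, so keep them out of the prompt text or extend the helper.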

SDXL ControlNet models are still different from and less robust than the ones for 1.5 - I hope we get more. I have not tried other models besides depth (diffusers depth models work fine too) after getting bad results with some other types. It's worth exploring and getting back to the community if you find settings that work. I tried a bunch of start/end percents with strength and generally found that starting strong and ending early was the best approach. For realism that is closer to the video, you can end later to have it follow the video more closely. I noticed this does need to be adjusted to the video being used - some need more or less strength/end. I did not try other preprocessor settings.

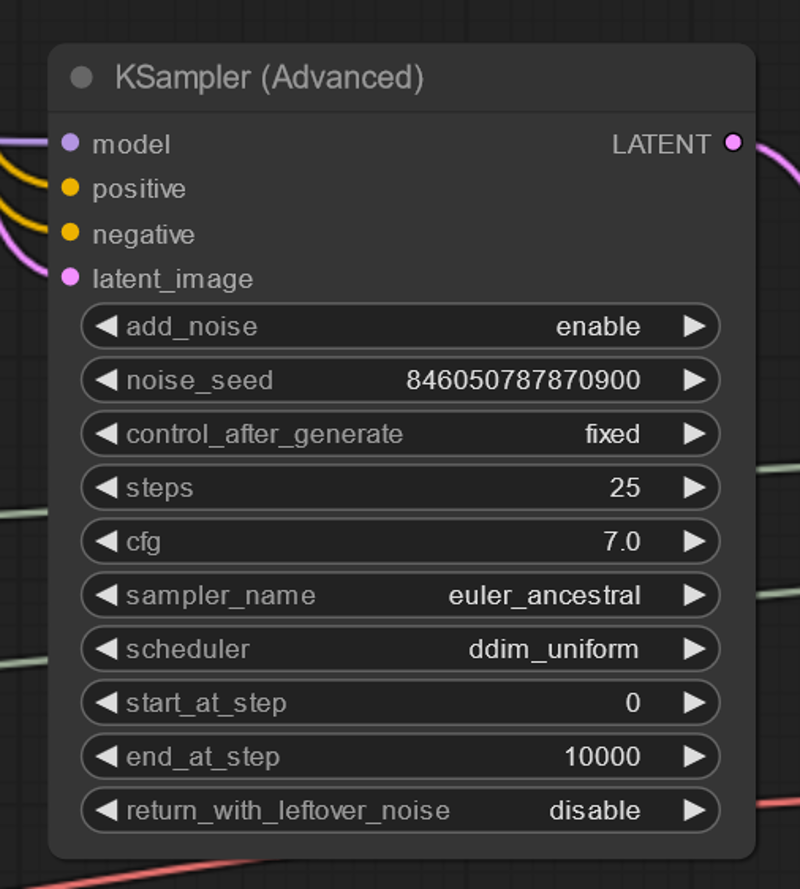

I have used the advanced K sampler for this workflow - there is no reason you cannot use the simple one.

Although I have set noise_seed to fixed for testing reasons, you probably want to randomize it. Per the developers, there are noticeably better and worse seeds (probably most relevant for txt2img).

Steps have remained the same as in my previous workflow but can be increased (the developers used 30).

For the sampler I have stuck with Euler_A and ddim_uniform, as this is based on the developers' settings.

Where is denoise strength? Start_at_step is basically the equivalent here. However, it is not linear (i.e. 0.5 denoise is not step 12), so start by increasing this only a little bit (i.e. starting at step 4 or 5 is probably a good place to start).

CFG is your friend - one of my big insights is that you may need to vary this dramatically depending on the aspect ratio/model etc. If things are vague and blurry, increase this. I have had to go as high as 20 and as low as 5 to get a reasonable output.

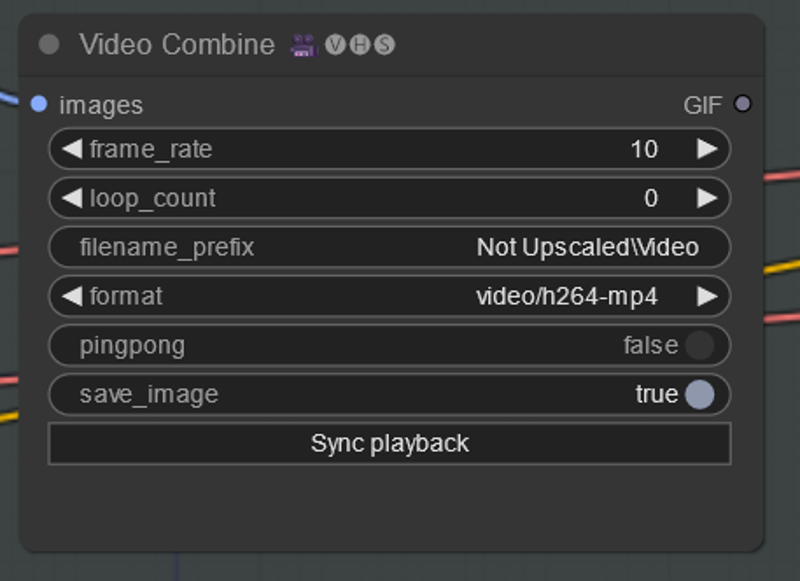

Similar to the old video combine nodes - do remember to change the frame rate to what you want, and the format if you want a gif instead of a video.
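The frame rate you set here decides how long the clip plays: duration is just frame count divided by frame rate. A quick sketch (the function name is made up for illustration):

```python
def clip_duration_seconds(frame_count: int, frame_rate: float) -> float:
    """Length of the output clip in seconds for a given frame rate."""
    if frame_rate <= 0:
        raise ValueError("frame_rate must be positive")
    return frame_count / frame_rate


# A single 8-frame run played at 8 frames per second lasts one second.
print(clip_duration_seconds(8, 8))
```

If you interpolate frames afterwards, raise the frame rate by the same factor to keep the duration unchanged.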

Making Videos with Hotshot-XL

The basic workflows that I have are available for download in the top right of this article. The Vid2Vid workflows are designed to work with the same frames downloaded in the first tutorial (re-uploaded here for your convenience). Please note that for my videos I also did an upscale workflow, but I have left it out of the base workflows to keep them below 10GB VRAM.

Vid2Vid Workflow - The basic Vid2Vid workflow similar to my other guide.

Vid2Vid with Prompt Travel - Just the above with the prompt travel node and the right clip encoder settings already in place so you don't have to set them up yourself.

Txt2Vid Workflow - I would suggest doing some runs at 8 frames (i.e. without a sliding context); you can get some very nice 1 second gifs with this. Otherwise prompt away!

Txt2Vid with Prompt Travel - Same as above, just with a properly set up prompt travel node.

Everything Bagel - This is mostly supposed to be a reference; I will explain it below.

The Everything Bagel

I have included this workflow to hopefully answer a lot of questions of what goes where. This is essentially the workflow I used to make the anime conversion in the field with some extra nodes. Please note that using this workflow, even when IPAdapter gets fixed (discussed below), may exceed 12GB VRAM during upscaling, but it did not seem to affect speed too much.

There are many ways to upscale, but this is my current workflow. I won't go into all the detail here; if you really think it is worth a guide, let me know.

It contains most of the common nodes you would think about and places them where they should be.

Please note that the 2nd set of Hotshot-XL nodes exists in order to ensure that IPAdapter is not used in the "Hires Fix".

IPAdapter

When you do your runs you will notice that yours will probably have more inconsistencies than the videos I have posted. The reason for this is that I used IPAdapter, which works as a reference and is excellent for AI videos. I took a frame from an 8 frame run to use as the reference picture.

BUT as of the writing of this guide it got bugged during a ComfyUI update and is using 6+GB of VRAM, which makes it unusable for most. I have done some fixing of my ComfyUI instance but do not suggest that most people try this. If you have 16/24 GB VRAM go ahead and copy and use those nodes!

Update: IPAdapter now works fine with less VRAM than before! Everything should work fine with a 12GB card.

What Next?

There is lots to explore in this space, go ahead and be creative - you will likely be the first and you are sure to impress! If you find a controlnet/LORA/model that works well share it with the community!

Troubleshooting

As things get further developed, this guide is likely to slowly go out of date and some of the nodes may be deprecated. That does not necessarily mean that they won't work. Hopefully I will have the time to make another guide or somebody else will.

I will put common issues/concerns here with answers as they come up.

If for some reason the official motion models are not working try this one: https://huggingface.co/Kosinkadink/HotShot-XL-MotionModels/tree/main

In Closing

I hope you enjoyed this tutorial. If you did, please consider subscribing to my YouTube channel (https://www.youtube.com/@Inner-Reflections-AI) or my Instagram/Tiktok/Twitter (https://linktr.ee/Inner_Reflections).

If you are a commercial entity and want some presets that might work for different style transformations, feel free to contact me on Reddit or on my social accounts (Instagram and Twitter seem to have the best messengers, so I use those mostly).

If you would like to collab on something or have questions, I am happy to connect on Twitter/Instagram or on my other social accounts.

If you’re going deep into AnimateDiff, you’re welcome to join this Discord for people who are building workflows, tinkering with the models, creating art, etc.

(If you go to the discord with issues please find the adsupport channel and use that so that discussion is in the right place)

Special Thanks

Kosinkadink - for making the nodes that made this all possible

Fizzledorf - for making the prompt travel nodes

Aakash and the Hotshot-XL team - For reaching out and helping make this work (https://github.com/hotshotco/Hotshot-XL)

The AnimateDiff Discord - for the support and technical knowledge to push this space forward