Introduction

Although the compatibility of a Stable Diffusion (SD) model with a LoRA model can be judged directly by eye (generate images with the SD model and the LoRA model together, then compare them with the original artworks), visual fatigue can sometimes lead to misjudgments. At such times, one might wonder whether there is a way to quantify this compatibility. I recently read an article (Ghost Review) that uses style loss as a metric to evaluate the compatibility of SD models with LoRA models. To verify whether style loss can actually serve as such a metric, the following experiments were conducted.

Question

Can style loss serve as a metric for evaluating the compatibility of SD models with LoRA models?

Methods

The style loss in this article is calculated in the same way as in the official PyTorch tutorial (Neural Transfer Using PyTorch). First, the input image and the style image are each fed through the VGG19 model. Then the outputs of several randomly selected convolutional (conv) layers are extracted, and the Gram matrix of each output is computed. Next, for each selected layer, the mean squared error (MSE loss) between the Gram matrix of the input image and that of the style image is calculated. Finally, these per-layer MSE values are summed to give the total style loss. For instance, if the outputs of 5 randomly selected conv layers are extracted, 5 Gram matrices are computed for the input image; each is compared with the corresponding Gram matrix of the style image using MSE, yielding 5 loss values, and their sum is the total style loss of the input image. The Gram matrix is computed as in the official PyTorch documentation:

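```python
import torch

def gram_matrix(input):
    a, b, c, d = input.size()  # a, b, c, d = batch, channels, height, width

    # Flatten each feature map into one row: shape (a*b, c*d)
    features = input.view(a * b, c * d)

    # Inner products between every pair of feature maps
    G = torch.mm(features, features.t())

    # Normalize by the total number of elements in the feature tensor
    return G.div(a * b * c * d)
```

Here are the specific research details: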

Generating Samples: Each of three SD models (Yesmix V3.5, Aing Diffusion V10.5, and Dream Shaper V7), combined with one LoRA model (Liang Xing Style), was used to generate 20 sample images. Yesmix V3.5 and Aing Diffusion V10.5 are anime models, while Dream Shaper V7 is a realistic model. The LoRA model used in the experiment is based on NovelAI, so it is an anime LoRA model.

Generating Parameters:

Prompts: 1girl, raiden shogun,portrait,beautiful detailed eyes,(shiny skin:1.14),(mature female:1.3),(masterpiece:1.2),(best quality:1.2)

Negative Prompts: lowres,ugly, worst quality, low quality, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, jpeg artifacts, signature, watermark, username, blurry, bad feet, poorly drawn hands, poorly drawn face, mutation

VAE: kl-f8-anime2

Resolution: 640x960, Hires Fix is not used

Sampler: DPM++ 2M Karras

Steps: 30

CFG Scale: 8

Seed: -1 (i.e., a random seed)

LoRA weight: 0.8

To rule out the influence of other factors, no auxiliary models (such as embeddings or additional LoRAs) were used to generate the images, apart from the SD models and the LoRA model listed above.

Selection of Style Images: The three images shown in Fig. 1 were selected as style images. From left to right, they are an original artwork by Liang Xing, an original artwork by Sakimichan, and a random image I doodled in Photoshop (as an extreme test case). The LoRA model under test was trained on a dataset of multiple original artworks by Liang Xing.

Fig. 1 The three style images, from left to right: Liang Xing's artwork, Sakimichan's artwork, and a random image I drew myself.

Image Preprocessing: The images are preprocessed with torchvision's `transforms`:

```python
import torch
from torchvision import transforms

# ImageNet channel statistics, the standard normalization for torchvision's VGG19
rgb_mean = torch.tensor([0.485, 0.456, 0.406])
rgb_std = torch.tensor([0.229, 0.224, 0.225])

transform = transforms.Compose([
    transforms.Resize((512, 512)),   # resize to a 512x512 square
    transforms.ToTensor(),           # PIL image -> float tensor with values in [0, 1]
    transforms.Normalize(mean=rgb_mean, std=rgb_std),
])
```

First, each image is resized to a 512x512 square. Then `ToTensor()` converts it into a tensor and scales each pixel value to [0, 1]. Finally, the image is normalized. The mean and standard deviation used are the default values for the VGG19 model (the ImageNet statistics). This is not entirely rigorous, because the overall mean and standard deviation of the test samples may not be close to these defaults; however, to control variables, the preprocessing is kept consistent with the Ghost Review source code.
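To tie the steps of the Methods section together, here is a minimal sketch of the whole style-loss computation (VGG19 conv features, then Gram matrices, then summed MSE) in plain PyTorch. It is not the Ghost Review code or the author's source code. Assumptions: the helper names `load_vgg19_features`, `style_grams`, and `style_loss` are hypothetical; selected layers are given as indices that count only `Conv2d` layers; the default of the first five conv layers mirrors the PyTorch tutorial rather than the random selection described above; and `load_vgg19_features` uses the torchvision >= 0.13 weights API.

```python
from __future__ import annotations

from typing import Sequence

import torch
import torch.nn as nn
import torch.nn.functional as F


def gram_matrix(x: torch.Tensor) -> torch.Tensor:
    # Same normalization as the tutorial: divide by batch * channels * height * width
    a, b, c, d = x.size()
    features = x.reshape(a * b, c * d)
    return torch.mm(features, features.t()).div(a * b * c * d)


def load_vgg19_features() -> nn.Sequential:
    # Hypothetical helper: ImageNet-pretrained VGG19 conv stack (torchvision >= 0.13).
    # Imported lazily so the rest of this sketch depends only on PyTorch.
    from torchvision import models
    return models.vgg19(weights=models.VGG19_Weights.DEFAULT).features.eval()


@torch.no_grad()
def style_grams(features: nn.Sequential, img: torch.Tensor,
                conv_ids: Sequence[int]) -> list[torch.Tensor]:
    """Gram matrices of the selected conv layers; conv_ids counts Conv2d layers only (0 = first)."""
    wanted = set(conv_ids)
    grams: list[torch.Tensor] = []
    conv_idx = -1
    x = img
    for layer in features:
        x = layer(x)
        if isinstance(layer, nn.Conv2d):
            conv_idx += 1
            if conv_idx in wanted:
                # Compute now: the in-place ReLU that follows would overwrite x.
                grams.append(gram_matrix(x))
            if conv_idx >= max(wanted):
                break  # deeper layers are not needed
    return grams


def style_loss(features: nn.Sequential, input_img: torch.Tensor, style_img: torch.Tensor,
               conv_ids: Sequence[int] = (0, 1, 2, 3, 4)) -> float:
    """Total style loss: sum over selected layers of MSE(Gram(input), Gram(style))."""
    g_in = style_grams(features, input_img, conv_ids)
    g_st = style_grams(features, style_img, conv_ids)
    return sum(F.mse_loss(gi, gs).item() for gi, gs in zip(g_in, g_st))
```

Typical usage: `features = load_vgg19_features()`, then `x = transform(Image.open(path).convert("RGB")).unsqueeze(0)` for each image (using `transform` from the preprocessing snippet; `.convert("RGB")` guards against PNGs with an alpha channel), and finally `style_loss(features, x_generated, x_style)`. Each Gram matrix is computed as soon as its conv layer runs because VGG19's ReLU layers operate in place and would otherwise overwrite the captured activations; the PyTorch tutorial sidesteps the same issue by swapping in out-of-place ReLUs.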

Given the setup above, if style loss can serve as a metric of how compatible an SD model is with the LoRA model, then the style loss between the images generated by each SD model + LoRA combination and Liang Xing's original artwork should be the smallest of the three style images, since these styles are the most similar. Is this actually the case?
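The hypothesis can be phrased as a simple check: for each SD model, the mean style loss against Liang Xing's artwork should be the lowest among the three style images. Below is a minimal sketch in plain Python; the function name `lowest_loss_is_target` and the nested-dict layout (`losses[model][style]` holding the per-image losses, e.g. 20 values) are assumptions for illustration, not part of the original source code.

```python
from __future__ import annotations

from statistics import mean


def lowest_loss_is_target(losses: dict[str, dict[str, list[float]]],
                          target: str) -> dict[str, bool]:
    """For each SD model, report whether `target` is the style image with the lowest mean loss."""
    verdict = {}
    for model, per_style in losses.items():
        # Average the per-image style losses for each style image
        means = {style: mean(values) for style, values in per_style.items()}
        # The hypothesis holds for this model if the target style has the smallest mean
        verdict[model] = min(means, key=means.get) == target
    return verdict
```

If style loss tracked compatibility, every value returned with Liang Xing's artwork as `target` would be `True`.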

Results and Discussion

Before analyzing the style loss results, Fig. 2 shows some images generated by the three SD models combined with the LoRA model. Readers can judge by eye which of them look most similar in style to Liang Xing's original artwork in Fig. 1.

Fig. 2 Sample images generated by the three SD models combined with the LoRA model. From top to bottom: the first row was generated by Yesmix V3.5, the second by Aing Diffusion V10.5, and the third by Dream Shaper V7.

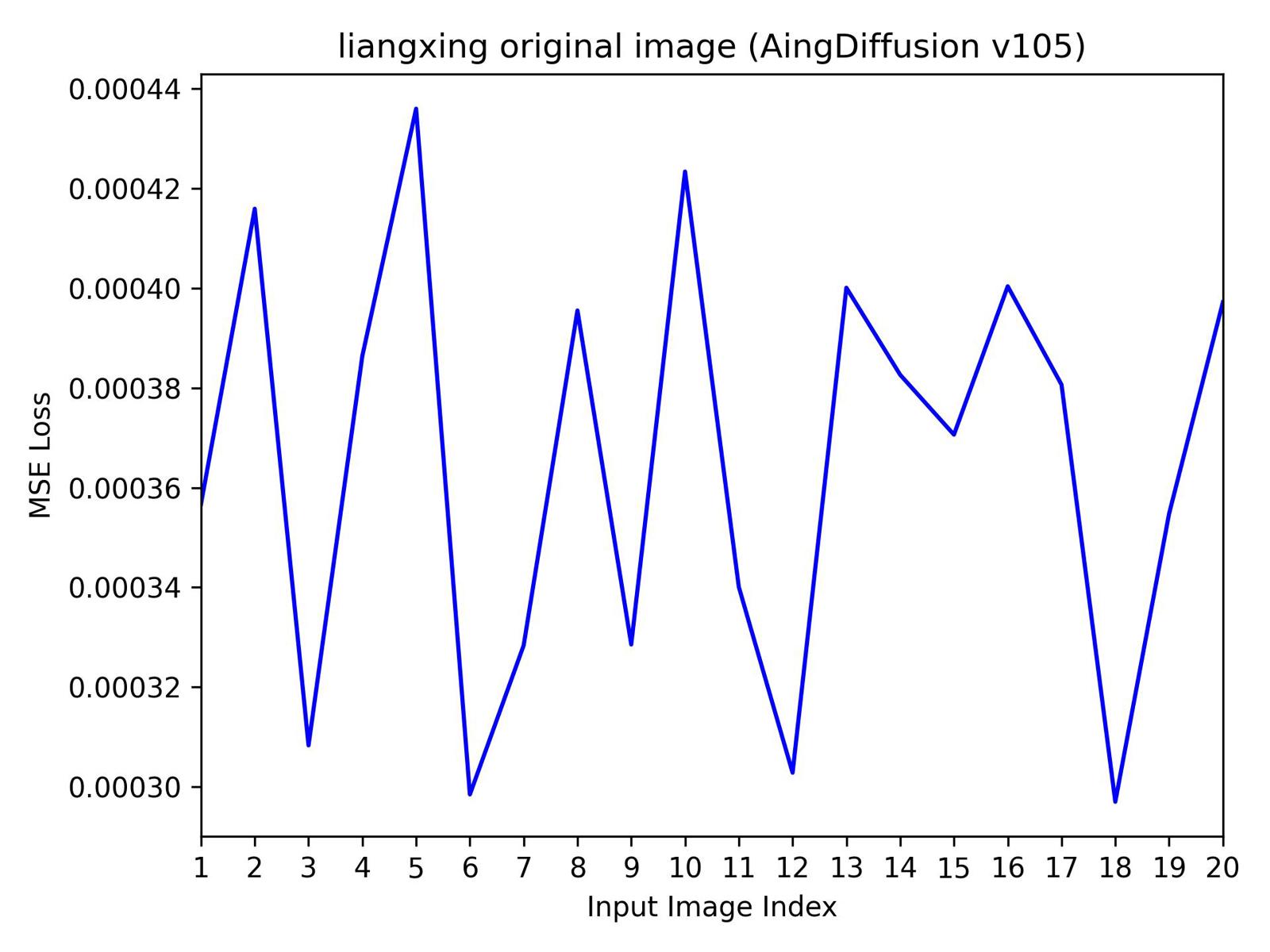

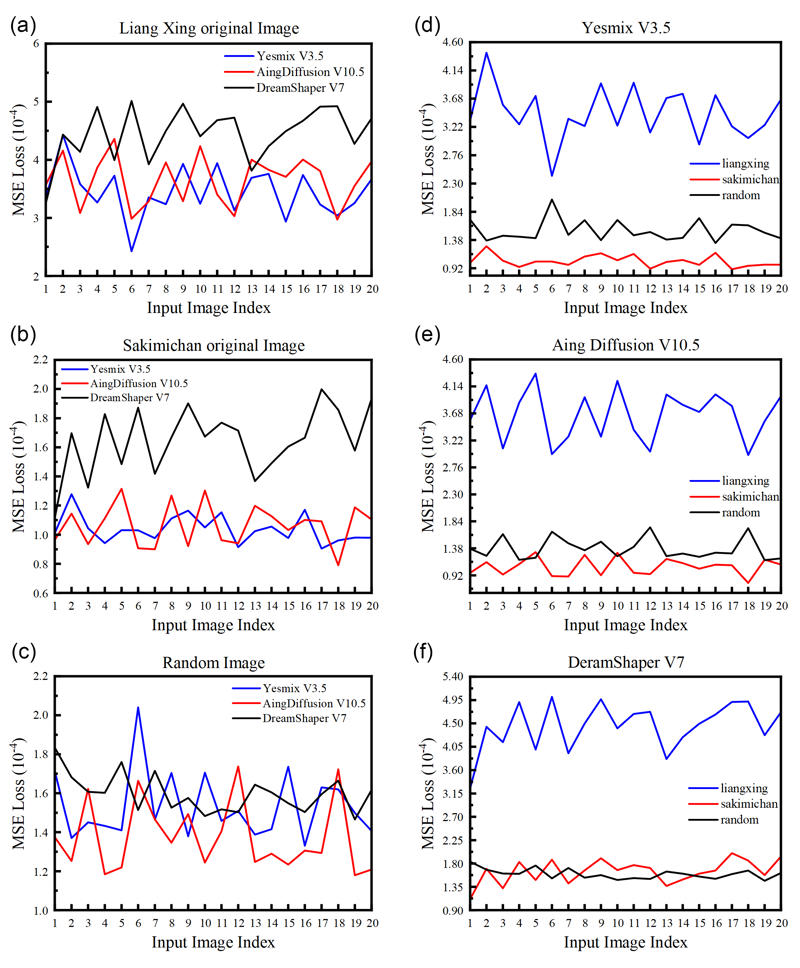

Now let's analyze the calculated style loss. Fig. 3 presents the style loss of the images generated by the three SD models against the three style images. The x-axis is the index of the 20 generated images, and the y-axis is the style loss (MSE loss, on the order of 1e-4) between each image and the style image named in the subfigure title. The first column of Fig. 3 compares the three SD models against the same style image; the second column compares a single SD model against the three different style images.

Fig. 3 Style loss of the images generated by the three SD models against the three style images. (a) All three models, with Liang Xing's original artwork as the style image. (b) All three models, with Sakimichan's original artwork as the style image. (c) All three models, with the random image I drew as the style image. (d) Images generated by Yesmix V3.5 against the three style images. (e) Images generated by Aing Diffusion V10.5 against the three style images. (f) Images generated by Dream Shaper V7 against the three style images.

Fig. 3(a) shows that when Liang Xing's original artwork is used as the style image, the style losses of the three models are all of the same order of magnitude. Although the values for Dream Shaper V7 are slightly higher than those of the other two models, given the scale of the values this gap is essentially negligible. Moreover, on closer inspection, the style loss of the second image generated by the anime model Yesmix V3.5 is almost equal to that of the realistic model Dream Shaper V7. Yet a visual inspection of Fig. 2 shows that the images generated by these two models have drastically different styles.

The results in Fig. 3(b) show that Dream Shaper V7 has a higher style loss; the cause of this is currently unknown. The style losses of the two anime models, Yesmix V3.5 and Aing Diffusion V10.5, are roughly on par. However, a visual comparison of Fig. 2 with Fig. 1 shows that, even though these two models have small and similar style losses, their generated images still differ significantly in style from Sakimichan's original artwork.

Visually, the images generated by all three SD models are fundamentally different from the random image. However, Fig. 3(c) shows that the style losses of the images generated by the three models are not much different. Moreover, comparing this subfigure with (a) and (b) shows that the losses in (b) and (c) are lower than those in (a), although the difference is not large. This contradicts both the visual observations and the hypothesis, since the generated images are completely different in style from Sakimichan's artwork and from the random image. To show these results more directly, Fig. 3(d), (e), and (f) plot, for each SD model, the style losses of its 20 generated images against all three style images. These plots show the same pattern, and its underlying cause is currently unknown.

Conclusion

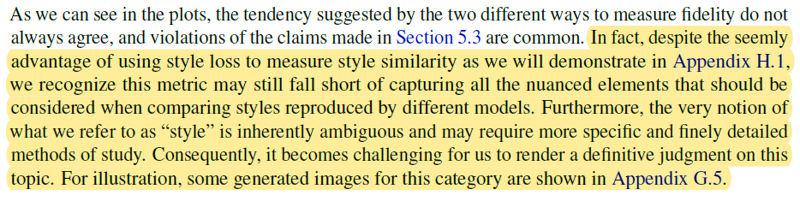

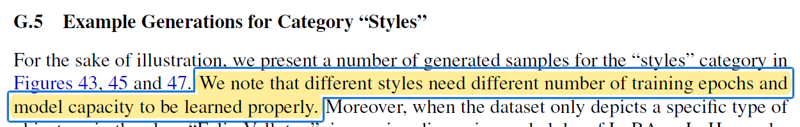

Based on the above analysis, style loss is not a reliable metric of the compatibility of SD models with LoRA models. The results show a significant discrepancy between the calculated style loss on the one hand and both the visual observations and the hypothesis on the other, suggesting that style loss cannot be the sole criterion for evaluating this compatibility. The LyCORIS paper makes a similar point: although using style loss to measure style similarity has significant advantages, this metric may still fail to capture all the subtle elements that should be considered when comparing the styles reproduced by different models. The paper also notes that, when training a style model, different styles require different numbers of epochs and different model capacities to be learned correctly. This indirectly illustrates that "style" is a complex concept that currently cannot be measured by a single metric.

Fig. 6 Excerpts from the LyCORIS paper discussing style loss and the phenomena observed while training style models.

Appendix

All the results in this article were produced programmatically, so if the source code contains an error, all the results reported here would be invalid. For this reason, the test data and source code have been zipped and uploaded to Google Drive. Because the amount of test data is small, the results may not generalize. Everyone is welcome to test with other SD models :)