Introduction

We have all been there. You are training a LoRA, staring at the Loss Graph, wondering: "Is Epoch 10 better than Epoch 5? Or did I just overcook it?" Usually, we rely on "vibes"—we generate a few test images and guess. But guesses are expensive, and "looking like the subject" is subjective.

What if you could measure it?

I developed a diagnostic tool called Mirror Metrics (open source on [GitHub]) that uses InsightFace/ArcFace—an industrial-grade biometric system—to mathematically measure the resemblance, flexibility, and geometric stability of your LoRA against your training data. It turns "I think it looks like her" into "It has a 0.76 Similarity Score with high geometric variance."

(Discussion and original feedback on [Reddit Thread]).

Disclaimer: because it is based on InsightFace, this only works on realistic images, not on anime, styles, or objects. It is pure facial recognition mathematics.

The Experiment: Visualizing the Data

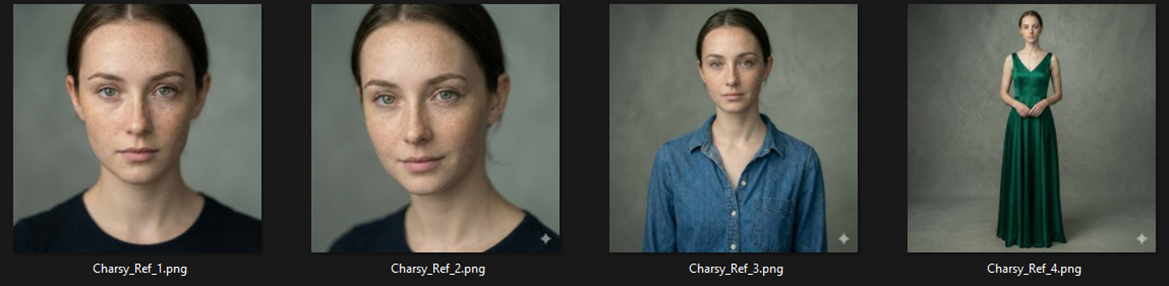

To show you exactly how to interpret these charts in a real-world scenario, I couldn't just tell you. I had to show you. We created a fully synthetic character named "Charsy" and simulated three different training outcomes to see how the charts react to each one:

The Overfit (Rigid copy)

The Underfit (Lost identity)

The Sweet Spot (Flexible identity)

📝 Note on the Data: For the purposes of this Guide, I generated the "Charsy" dataset using Nano Banana (for consistency) and Qwen (for the outliers). While real-world training logs might look slightly messier, this controlled environment provides the perfect "clean lab" to understand the math behind the magic.

Here is the breakdown of the experiment.

1. The Setup (The Dataset)

We started with a solid Reference Set.

Reference: 7 clean images of Charsy (studio, outdoor, dark profile, over the shoulder: the usual starting dataset for training a LoRA) to establish the ground-truth geometry.
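To make "ground truth" concrete, here is a minimal sketch. It assumes every image has already been turned into an ArcFace embedding (a plain list of floats, typically 512 of them). It takes the element-wise median of the reference embeddings as the reference "center" and measures resemblance as cosine similarity to that center. The function names are hypothetical, and this is one plausible reading of "similarity to the dataset median", not necessarily how Mirror Metrics computes it.

```python
import math
from statistics import median


def normalize(vec):
    """Scale a vector to unit length so only its direction matters."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cosine_similarity(a, b):
    """1.0 = same direction (same face); values near 0 = unrelated faces."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def reference_center(embeddings):
    """Element-wise median of the reference embeddings, re-normalized to unit length."""
    dims = len(embeddings[0])
    return normalize([median(e[i] for e in embeddings) for i in range(dims)])


# Toy 3-D vectors stand in for real 512-D embeddings.
refs = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [1.0, 0.1, 0.1]]
center = reference_center(refs)
print(round(cosine_similarity([1.0, 0.0, 0.0], center), 3))
```

The median is used instead of the mean so that a single odd reference image cannot drag the center toward itself.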

2. The Candidates (The 3 Simulated LoRAs)

We analyzed three output folders representing three distinct stages of training:

🔴 LoRA_OVER (The Clones): Images almost identical to the training data mean. Extremely rigid.

🟡 LoRA_UNDER (The Stranger): The model hasn't learned the features yet; the subject looks like a different person.

🟢 LoRA_OK (The Sweet Spot): Charsy in completely new contexts (Cyberpunk, Medieval) plus a "Stress Test" (we explicitly asked for an older version of her).

3. The Diagnosis (Reading the Charts)

Here is what the data tells us about these three states.

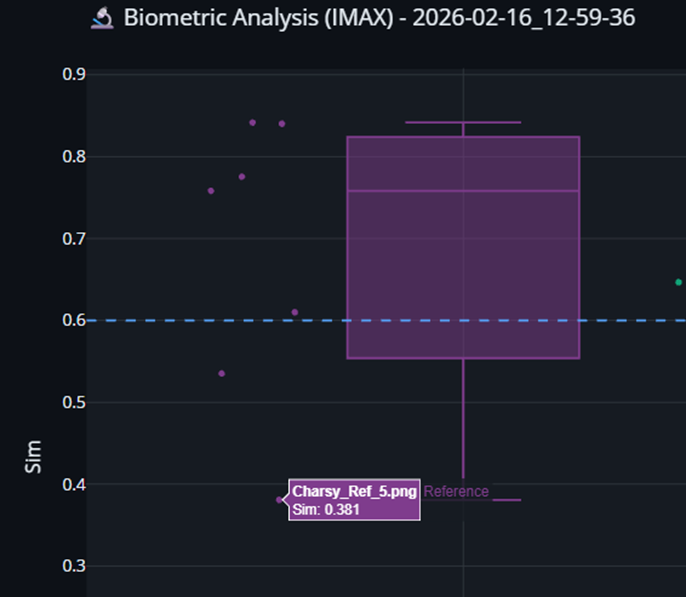

0. The Reference Box: Auditing Your Source Data

(Refer to the Purple Box in Chart 1 - Face Similarity)

Before you even look at what the AI generated (Blue/Green/Yellow), you must look at Purple (Reference). This box represents the images you used to train the model. Tip: I would do a dry run on the dataset alone, without any LoRA outputs, to test it before you start training.

1. The "Tall Box" Warning

What it means: If the Purple box is very tall (e.g., spanning from 0.60 to 0.90), your training data may be inconsistent. Pay attention: this is not a certainty, only a mathematical clue, but take it into account, because the LoRA training will be affected by it too.

Diagnosis: You likely have images with vastly different lighting, heavy makeup, or extreme angles. The AI is confused about which "version" of the face is the correct one.

Action: Try to standardize your dataset. Remove images where the face is obscured, heavily stylized, or very different from the rest of the dataset. For example, I once included in a dataset a photo of the person from 3-4 years earlier. Visually it was fairly similar, but when I processed the dataset, the age indicator estimated it as younger than the other images, and it also sat much lower on the similarity graph. I excluded it, and the dataset ended up more consistent.

On the other hand, you may have a very unusual image that you are sure is important. For example, one of my datasets had only one profile photo of the person. It stood out as an outlier here, but it was very important to keep it, otherwise the model would have had no profile reference to learn from.

So again: use this as a gauge, then verify by looking at the images.
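To put a number on "tall", here is a sketch using conventional box-plot statistics: the box spans the first to third quartile, so its height is the interquartile range (IQR), and points below Q1 - 1.5 * IQR are the usual "dots below the box". The 0.15 cut-off for "tall" is an arbitrary assumption, and the charting library behind the tool may compute quartiles slightly differently.

```python
from statistics import quantiles


def box_stats(scores):
    """Quartiles, box height (IQR) and lower fence for a list of similarity scores."""
    q1, q2, q3 = quantiles(scores, n=4)  # needs at least 2 scores
    iqr = q3 - q1
    return {"q1": q1, "median": q2, "q3": q3, "iqr": iqr, "low_fence": q1 - 1.5 * iqr}


def is_tall_box(scores, max_height=0.15):
    """Hypothetical rule of thumb: a box taller than max_height hints at an inconsistent dataset."""
    return box_stats(scores)["iqr"] > max_height


inconsistent = [0.60, 0.65, 0.70, 0.75, 0.80, 0.85, 0.90]
consistent = [0.78, 0.79, 0.80, 0.80, 0.81, 0.82, 0.83]
print(is_tall_box(inconsistent), is_tall_box(consistent))
```

As the text says, a tall box is a clue, not a verdict: it tells you which images to open, not which ones to delete.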

How do you find it?

Hover the mouse over one of the dots, for example the lowest one:

You will see the name and similarity score of the image, in this case it's Charsy_Ref_5.png.

Then go to the reference folder, look at the image, and draw your own conclusions.

In our case it is just a smiling face. It is indeed distorted, though (Nano Banana may have failed to reproduce the exact proportions of the original face).

Summary Checklist for the User

Run the Tool on your Dataset FIRST.

Check the Purple Box.

Evaluate and decide whether to prune the outliers: if you see dots below the box, identify those images and remove them from the folder if they really do look different from the rest of the set.

Train.
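The first steps of this checklist can also be scripted. This sketch scores each reference image against the mean embedding of all the other references (a leave-one-out check, so an outlier cannot pull the center toward itself) and returns the file names that fall below the lower box-plot fence, lowest first. The embeddings are assumed to come from whatever face-embedding step you already run; the function names and the leave-one-out approach are illustrations, not the tool's documented behavior.

```python
import math
from statistics import quantiles


def _unit(vec):
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def leave_one_out_scores(embeddings):
    """For each file: cosine similarity to the mean of all the OTHER reference embeddings."""
    scores = {}
    for name, vec in embeddings.items():
        others = [_unit(v) for other, v in embeddings.items() if other != name]
        center = _unit([sum(col) / len(others) for col in zip(*others)])
        scores[name] = sum(a * b for a, b in zip(_unit(vec), center))
    return scores


def suspected_outliers(embeddings):
    """File names scoring below Q1 - 1.5 * IQR, lowest first. Inspect them before deleting."""
    scores = leave_one_out_scores(embeddings)
    q1, _, q3 = quantiles(scores.values(), n=4)
    fence = q1 - 1.5 * (q3 - q1)
    return sorted((n for n, s in scores.items() if s < fence), key=scores.get)


# Toy 3-D embeddings: six consistent images and one odd one out.
refs = {
    "Charsy_Ref_1.png": [1.0, 0.02, 0.0],
    "Charsy_Ref_2.png": [1.0, 0.0, 0.02],
    "Charsy_Ref_3.png": [1.0, -0.02, 0.0],
    "Charsy_Ref_4.png": [1.0, 0.0, -0.02],
    "Charsy_Ref_5.png": [0.3, 1.0, 0.0],
    "Charsy_Ref_6.png": [1.0, 0.01, 0.01],
    "Charsy_Ref_7.png": [1.0, -0.01, -0.01],
}
print(suspected_outliers(refs))
```

A flagged file is only a candidate for removal: a lone profile shot can be flagged and still be worth keeping.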

A. Face Similarity: The Overfit Trap

· The Blue Box (Overfit): Look how high (>0.85) and narrow it is.

Diagnosis: The model is cheating. It is memorizing the pixels rather than learning the concept. If you see this, your model is likely "dead" and inflexible. Lower your Learning Rate or reduce Epochs.

The Green Box (Sweet Spot): It sits comfortably around 0.60 - 0.75.

Diagnosis: Notice the "tail" reaching down towards 0.45? That is the "Old Lady" image. The fact that the score drops (but doesn't crash to zero like the Yellow box) is a good sign. It means the model is flexible enough to modify the facial geometry (aging) while keeping the underlying identity recognizable. (This is a perfect example to remind you that this is a mathematical tool, not absolute reality, and you should always interpret what the data means.)

· The Yellow Box (Underfit), below 0.3: see how low all the images are, as they should be, since it is a totally different character.

Diagnosis: the LoRA didn't learn enough of the character to reproduce it correctly.
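The three readings above can be condensed into a rough labeling function. The ranges (above 0.85 and narrow, 0.60 to 0.75, below 0.30) are the ones observed in the Charsy experiment, not universal constants, and the 0.05 cut-off for a "narrow" box is an assumption. Anything that fits none of the patterns is left for you to inspect by eye.

```python
from statistics import median, quantiles


def diagnose_similarity(scores, narrow_iqr=0.05):
    """Rough label for one candidate set, using the ranges seen in the Charsy experiment."""
    med = median(scores)
    q1, _, q3 = quantiles(scores, n=4)
    if med > 0.85 and (q3 - q1) < narrow_iqr:
        return "overfit: high and narrow box"
    if med < 0.30:
        return "underfit: identity not learned"
    if 0.60 <= med <= 0.75:
        return "sweet spot: recognizable but flexible"
    return "inconclusive: look at the images"


print(diagnose_similarity([0.88, 0.89, 0.90, 0.90, 0.91, 0.92, 0.93]))
print(diagnose_similarity([0.45, 0.60, 0.65, 0.68, 0.70, 0.72, 0.75]))
```

Using the median rather than the mean keeps a single "Old Lady" stress-test image from dragging an otherwise healthy set out of the sweet-spot range.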

B. Age Distribution: The Proof of Flexibility

The Green Column (OK): Do you see that line shooting up to 90 years old?

Diagnosis: This confirms that the LoRA is not "locked" to the dataset's age (25 years old). An Overfitted model (Blue) would have ignored the prompt "Old Woman" just to replicate the exact young face from the training data. The OK model obeys the prompt and applies aging effects correctly.
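A minimal way to check this numerically, assuming you already have an estimated age per image (for example from a face-analysis model's age estimate): list the candidate ages that fall outside the reference range widened by a margin. The 10-year margin and the ages in the example are hypothetical toy numbers. If you prompted for an old woman and the "older" list comes back empty, the model may be locked to the dataset's age.

```python
from statistics import median


def age_flexibility(reference_ages, candidate_ages, margin=10):
    """Candidate ages outside the reference age range widened by `margin` years."""
    low = min(reference_ages) - margin
    high = max(reference_ages) + margin
    return {
        "reference_median": median(reference_ages),
        "older": sorted(a for a in candidate_ages if a > high),
        "younger": sorted(a for a in candidate_ages if a < low),
    }


reference = [23, 24, 25, 25, 25, 26, 27]
lora_ok = [24, 26, 28, 31, 90]  # last value: the "old lady" stress test
print(age_flexibility(reference, lora_ok))
```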

C. Face Ratio: The "Death" of Geometry

· The Blue Shape (Overfit): It’s almost a flat line.

Diagnosis: The model generates the exact same face shape and jaw opening every single time. It is static and boring.

The Green Shape (OK): It looks like a violin (wide and curvy), plus it’s on the same average horizontal line as the reference images.

Diagnosis: There is variation. The character laughs, shouts, turns her head. The LoRA is "alive" and dynamic, and its face shape is similar to the reference. By contrast, the Yellow "UNDER" LoRA sits on a different horizontal line, which means its overall face shape differs from the reference.
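The two things to read on this chart, where the violin is centered and how wide it is, can be reduced to a median offset and a standard deviation. This sketch assumes "face ratio" is a single number per image, such as the height divided by the width of the detected face. The exact definition Mirror Metrics uses may differ, and both tolerances are hypothetical.

```python
from statistics import median, pstdev


def compare_face_ratio(reference, candidate, tolerance=0.05, min_spread=0.01):
    """Compare a candidate set's face-ratio distribution with the reference set."""
    offset = median(candidate) - median(reference)
    spread = pstdev(candidate)
    return {
        "same_shape": abs(offset) <= tolerance,  # centered on the reference line?
        "static": spread < min_spread,  # almost a flat line?
        "offset": offset,
        "spread": spread,
    }


reference = [1.30, 1.32, 1.35, 1.33, 1.31]
print(compare_face_ratio(reference, [1.25, 1.30, 1.35, 1.40, 1.28]))
```

An overfit set tends to show "static" as true, and an underfit set "same_shape" as false.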

D. Detection Confidence: The Quality Check

The Blue/Green High Line:

Diagnosis: Both the Overfitted (Blue) and the Balanced (Green) models score high here (>0.95). This is normal. It means the AI is generating valid, recognizable human faces.

The Yellow Drop (Under):

Diagnosis: If you see low confidence scores (0.60 - 0.70) or erratic drops, it means your model isn't just failing the identity; it’s failing anatomy. It’s generating "monsters" or blurry artifacts that the detector barely recognizes as human.

Look closely at the image from the Yellow set where the girl is looking back.

To us, she looks human. To the AI detector, the extreme angle compresses her facial features, making them harder to map. The model also seems to have given that image a more cartoonish look, which this photorealistic diagnostic tool picks up poorly.

· Lesson: A drop in confidence doesn't always mean "Monster". Sometimes it just means "Extreme Pose" or “Cartoonish”. However, if you see drops on front-facing images or realistic images, most probably your model is broken.
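The lesson above (low confidence is only really alarming on easy, front-facing shots) can be turned into a small triage step. This sketch assumes you have, per image, the detector's confidence (InsightFace reports one per face as `det_score`) and the head yaw in degrees. The 0.80 confidence floor and the 30° "frontal" limit are assumptions, not values from the tool.

```python
def triage_detections(detections, min_score=0.80, frontal_yaw=30.0):
    """Split low-confidence detections into 'suspicious' and 'explainable'.

    detections: iterable of (file_name, det_score, yaw_degrees) tuples.
    """
    suspicious, explainable = [], []
    for name, score, yaw in detections:
        if score >= min_score:
            continue  # confident detection, nothing to report
        if abs(yaw) < frontal_yaw:
            suspicious.append(name)  # low confidence on an easy frontal view
        else:
            explainable.append(name)  # extreme angle may compress the features
    return suspicious, explainable


print(triage_detections([
    ("ok_front.png", 0.97, 5.0),
    ("under_looking_back.png", 0.65, 70.0),
    ("under_front.png", 0.62, -10.0),
]))
```

Images in the "explainable" list still deserve a look, since a cartoonish render can also lower the score.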

E. Profile Stability: The "Turn-Around" Test

X-Axis: How much the head is turned (Yaw).

Y-Axis: How much it still looks like "Charsy" (Similarity).

The Blue Cluster (Over): Often clustered on the left (low angles).

Diagnosis: Overfitted models struggle to turn their heads. They often force a front-facing view even when prompted for a profile, or the identity collapses immediately when the angle changes.

The Green Spread (OK): The dots stretch to the right (high angles) while staying relatively high on the Similarity axis. Usually this will not be a flat horizontal line but a gently sloping diagonal; what matters is that it matches the slope of the dots from the reference set.

Diagnosis: This is success. The model can generate a side profile (90°) and you can still tell it is her.
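Matching "the slope of the dots from the reference set" can be made explicit with an ordinary least-squares fit of similarity against the absolute yaw angle, once for the reference set and once for the candidates. The 0.002-per-degree tolerance is hypothetical. The function also reports the widest yaw reached, which exposes an overfitted set that never really turns its head.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two different yaw angles")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx


def profile_stability(ref_points, cand_points, tolerance=0.002):
    """Points are (yaw_degrees, similarity). Compares how fast similarity drops as the head turns."""
    def fit(points):
        return ols_slope([abs(yaw) for yaw, _ in points], [sim for _, sim in points])

    ref_slope, cand_slope = fit(ref_points), fit(cand_points)
    return {
        "ref_slope": ref_slope,
        "cand_slope": cand_slope,
        "slopes_match": abs(cand_slope - ref_slope) <= tolerance,
        "max_yaw": max(abs(yaw) for yaw, _ in cand_points),
    }


reference = [(0, 0.80), (30, 0.77), (-60, 0.74), (90, 0.71)]
lora_ok = [(0, 0.70), (-45, 0.655), (90, 0.61)]
print(profile_stability(reference, lora_ok))
```

Taking the absolute yaw treats a left and a right profile as the same amount of rotation.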

F. Pose Variety: The "Statue" Effect

This graph has 3 types of data:

Yaw in the x axis (how much the head is turned),

Pitch in the Y axis (how much the head is tilted up or down), and

Similarity, represented by the size of the dot, which tells you how similar that image is to the dataset median.

If the points are spread out and the dots are still medium to large, the model is good: it can turn the head and still look like the dataset. It is basically graph A and graph E combined.

Center Cluster (Blue - Over):

Diagnosis: Look at how the Blue dots are bunched up in the middle (0,0). The model is "stuck." It refuses to look up, down, or sideways. It has memorized one pose (the training data pose) and repeats it forever, and all the dots are very large (very high similarity).

Wide Spread (Green - OK):

Diagnosis: The Green dots are scattered all over the map and not tiny. The character is looking up at the sky, down at her feet, and over her shoulder. The LoRA has learned 3D rotation, not just 2D pixels.

Wide Spread (Yellow - Under):

Diagnosis: The Yellow dots are scattered all over the map and very tiny. The character is looking up at the sky, down at her feet, and over her shoulder, but the face does not match the dataset. The LoRA has learned 3D rotation, but not the face.
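The three patterns in graph F combine how scattered the dots are with how big they are. One rough way to label them is the standard deviation of yaw plus that of pitch (the scatter) together with the mean similarity (the dot size). Both cut-offs are hypothetical and would need tuning on your own data.

```python
from statistics import mean, pstdev


def pose_variety(points, spread_cutoff=15.0, sim_cutoff=0.5):
    """points: (yaw_deg, pitch_deg, similarity) per image. Cut-offs are hypothetical."""
    spread = pstdev([p[0] for p in points]) + pstdev([p[1] for p in points])
    avg_sim = mean([p[2] for p in points])
    if spread < spread_cutoff:
        label = "statue: poses clustered around the center"
    elif avg_sim >= sim_cutoff:
        label = "ok: varied poses, identity kept"
    else:
        label = "under: varied poses, identity lost"
    return {"spread": spread, "avg_similarity": avg_sim, "label": label}


print(pose_variety([(-60, 10, 0.60), (0, -30, 0.70), (45, 20, 0.65), (80, -5, 0.55)]))
```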

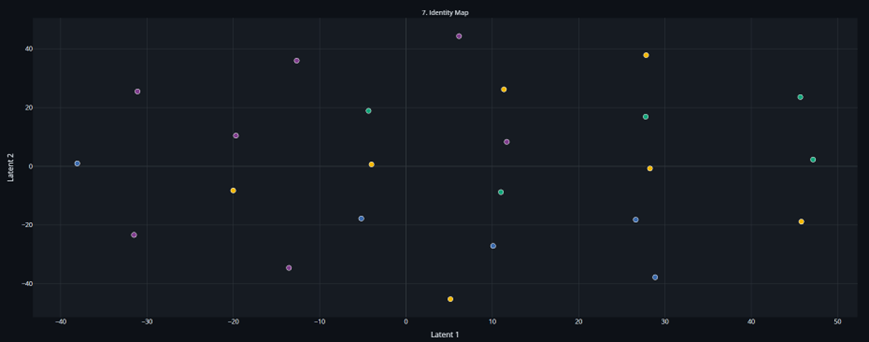

G. Identity Map:

The "Orbit" Theory. (Here the fact that I used Nano Banana to generate most of the images for these tests shows: the model is having a “something’s not right” moment in the latent space. I'll explain how the chart should be read anyway.)

The Purple Cluster (Reference): This is your ground truth (Home Base).

The Blue Dots (Over): They would be sitting “inside or directly on top” of the Purple cluster.

Diagnosis: Zero Creativity. The model is hiding inside the training data. It’s not generating new art; it’s retrieving old files.

The Yellow Dots (Under): They are in a different galaxy, far away from the Purple cluster (and would be even further away after a proper training run).

Diagnosis: Wrong person entirely.

The Green Dots (OK - The Sweet Spot): They would not touch the Purple dots directly, but they "orbit" closely around them.

Diagnosis: This is what you want. They are close enough to be family (same identity), but distinct enough to be unique images (new lighting, new expressions, aging). You want a satellite, not a clone.

Honestly, this last graph is the hardest one to use. It is mainly a window into the latent space's "brain", and sometimes the math drifts away from reality, so use it with caution.
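The map presumably shows a 2-D projection of the face embeddings, and I am not assuming which projection method the tool uses. A more literal check is to measure each candidate's cosine similarity to its nearest reference embedding directly: near-duplicates are "clones", moderately close ones are "satellites", distant ones are in a "different galaxy". The 0.90 and 0.45 thresholds are hypothetical.

```python
import math


def _cos(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def classify_orbit(candidate, references, clone_at=0.90, orbit_at=0.45):
    """Place one candidate embedding relative to the reference cluster (thresholds hypothetical)."""
    nearest = max(_cos(candidate, r) for r in references)
    if nearest >= clone_at:
        return "clone", nearest
    if nearest >= orbit_at:
        return "satellite", nearest
    return "different galaxy", nearest


# Toy 3-D vectors stand in for real embeddings.
refs = [[1.0, 0.0, 0.0], [0.95, 0.3, 0.0]]
for cand in ([1.0, 0.01, 0.0], [0.7, 0.7, 0.2], [0.0, 0.0, 1.0]):
    print(classify_orbit(cand, refs))
```

Working in the original embedding space avoids the distortions a 2-D projection can introduce, which is one reason this chart can look odd.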

Final Verdict: The "Vibe Check" Checklist

So, before you delete your safetensors file or start epoch 20, look at the charts:

🔴 Is your Blue Box high & narrow? You are training a clone, not a concept. It will fail on complex prompts. Action: Reduce steps or Learning Rate.

🟡 Is your Detection Confidence dropping? Your model is breaking human anatomy. Action: Check your dataset quality or increase Network Dim/Alpha.

🟢 Is your Green Box lower but wide? Does it maintain identity even when rotated or aged? Congratulations. You have created a flexible, robust LoRA (at least mathematically).

Try it yourself

This tool is open and free. Stop guessing. [Link to GitHub]

Download the script, put your dataset in the Reference_Images folder, create one folder per set of LoRA-generated images inside Lora_Candidates, and watch the math at work.
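To sanity-check the folder layout before running the script, this sketch lists what it should find. It assumes the layout described above: a Reference_Images folder plus a Lora_Candidates folder with one subfolder per LoRA. The function name and the accepted image extensions are assumptions, not the tool's code.

```python
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}  # assumed set of accepted formats


def collect_sets(root):
    """Return {"Reference": [...], "<candidate folder>": [...]} with sorted image paths."""
    root = Path(root)

    def images(folder):
        return sorted(p for p in folder.iterdir() if p.suffix.lower() in IMAGE_EXTS)

    sets = {"Reference": images(root / "Reference_Images")}
    for sub in sorted((root / "Lora_Candidates").iterdir()):
        if sub.is_dir():  # one subfolder per LoRA / epoch being compared
            sets[sub.name] = images(sub)
    return sets


if __name__ == "__main__":
    for name, files in collect_sets(".").items():
        print(f"{name}: {len(files)} images")
```

A candidate folder with zero images usually means a typo in the folder name or an unsupported file format.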

Post your results in the comments below! Let's compare our "Green Boxes" and figure out the perfect settings together. I'm so curious to see what you think about this tool: is it just a Data Nerd Cool Fantasy? Or is it really helpful?

Over to you!