This is a basic explanation of how I go about finding good prompts. It works well for me and gets me what I am trying to create most of the time. Of course, we are bound by the limits of the training sets of the models we are using. This isn’t some magic that will make SD spit out exactly what you are looking for, but it helps to find the key words.

Prompting 101

For this example, I am making a witch, and I want swirling “magical” energy around her and a simple black background. I start with the broad strokes. At its most basic level the image needs: a girl, in a black dress, with a hat, on a black background. For the prompt you may think <1girl, black background, pointy hat, black dress>, but this can be simplified further. (Almost) all witches have pointy hats, but not all pointy hats are witch hats, so <pointy hat> is not needed and may even hurt the prompt. Simply prompting <1girl wearing witch costume, long black dress> has given better results in my experience. Of course, the exact keywords will be different for each base model (will explain more later).

I test the prompt and gen a few images. I usually gen 9 because that fits perfectly into a 3x3 grid, but really any sample size you are comfortable with is good.

Adding detail

After you have the basic composition, think about the details of the image. What makes a witch a witch? Magic, books, odd trinkets? Perhaps glowing eyes? These are the questions to ask about the thing you are trying to make. This is where the rubber meets the road, so to speak. You want to add words to your prompt that correlate with images containing the details you want. You can think of this in many different ways, but the three that work best for me are:

direct prompting

(Prompting the exact things you want) This works well for things that the checkpoint has a lot of data on, but fails for things that the checkpoint does not have much training data on.

indirect prompting

(Prompting things where a portion of the idea is relevant to the image I am making) This is a bit more difficult to get right and can lead to instability in the generated images, especially if the images that the different keywords represent are too far apart. This can be really cool because you can get images that are wildly far from what the words mean, or it can turn your image to mush. You just have to test and see.

negative prompting

(Prompting things you don’t want to see) When you add general prompts such as hat, dress, or hair, they can bring along concepts you may not want in your image. For example, when you prompt a particular hair style, the training data may include a hair tie you don’t want to see. You can try to neg-prompt something to counteract that. Keep in mind that something as specific as <hair tie> may not be in the training data, so you might need to get creative with some indirect negative prompting. Things like <hair accessory> or <scrunchy> may work better.
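One way to keep the positive and negative keywords organized while testing is a small helper like the one below. This is only a sketch: the function name `build_prompts` and the dictionary keys are made up for illustration and are not part of any Stable Diffusion UI. You would still paste the resulting strings into whatever prompt and negative prompt fields your tool provides.

```python
def build_prompts(positive, negative):
    """Join keyword lists into comma-separated strings, dropping blanks and duplicates."""
    def join(keywords):
        seen = []
        for kw in keywords:
            kw = kw.strip()
            if kw and kw not in seen:
                seen.append(kw)
        return ", ".join(seen)

    # The key names here are hypothetical, chosen only for this example.
    return {"prompt": join(positive), "negative_prompt": join(negative)}


prompts = build_prompts(
    positive=["1girl wearing witch costume", "long black dress", "black background"],
    negative=["hair accessory", "scrunchy"],
)
print(prompts["prompt"])
print(prompts["negative_prompt"])
```

Keeping the two lists separate makes it easier to see which negative keyword you added to counteract which positive one.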

Lingo and the creator's dialect

Keep in mind that the training data has the same biases and lingo as the person who created it. One person may call a concept one thing while another person calls it something else. An extreme example of this is the difference between "fox" in a realistic base model and "fox" in a furry base model. Both use the same keyword but produce wildly different results. These kinds of little details are things to look out for. Another example could be "regal". In a fantasy model this would mean a king, so you might get things like crowns, castles, gold and jewelry. For a photographer it may be something more subtle: a pose, a clothing style, probably also gold, but less monarch and more popstar. If you can understand what community that person is a part of, then you can figure out what they would call something based on the lingo used in those circles.

Looking at examples on the download site will give you a window into the mind of the model's creator. They can be a good starting point for beginners to Stable Diffusion and for those trying a model for the first time.

Pay close attention to the miscellaneous words:

"Photo of" vs "portrait"

"1girl wearing a red dress" vs "1girl, red dress"

As you use different models and try to copy prompts from one model to another you will start to pick up on the differences and how they affect the final image.

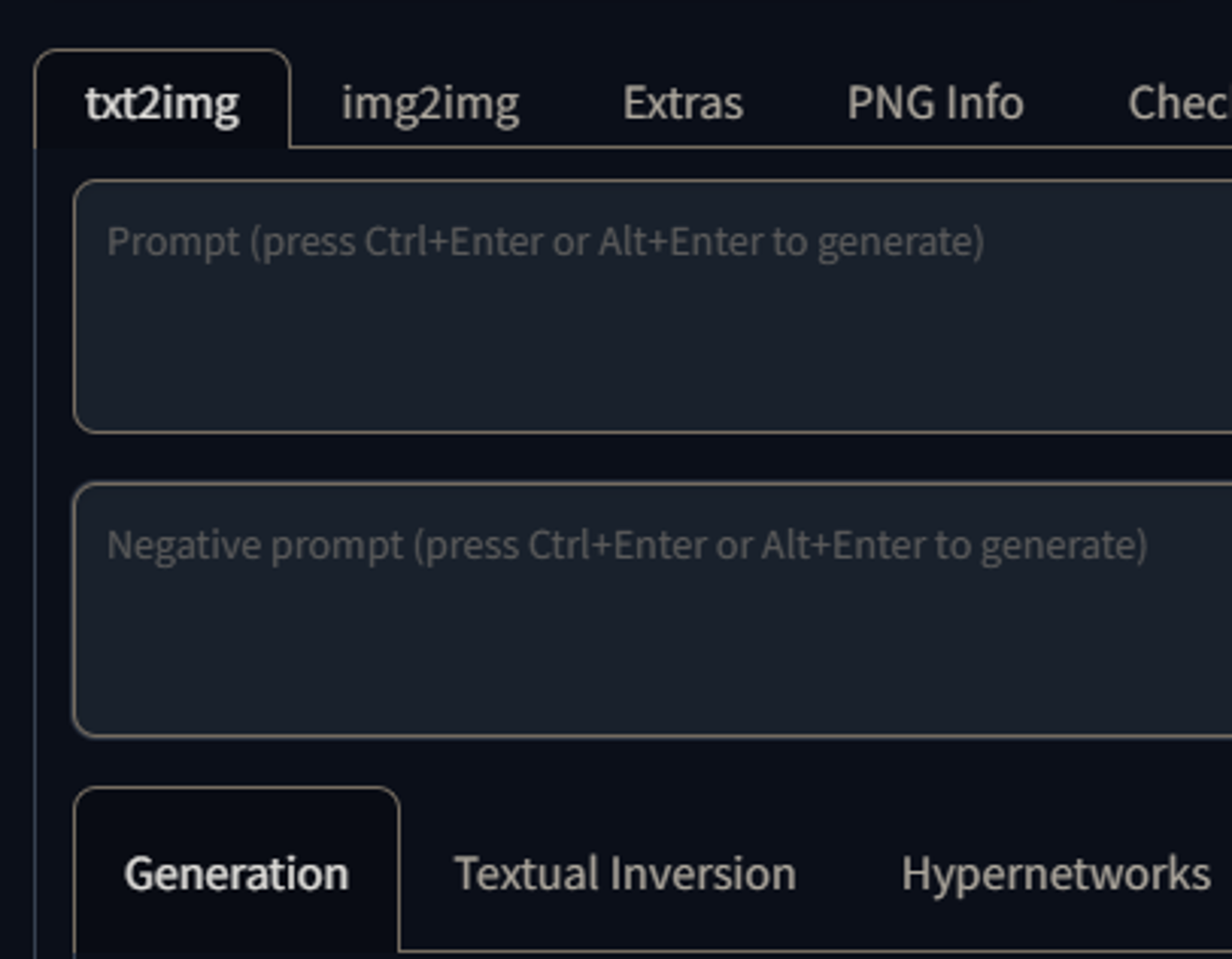

Try this: Load up Stable Diffusion and your base model of choice. Then look up a few images made using different models. Copy those prompts into Stable Diffusion and see what happens. Look for the differences (or lack of them) between the generated images. This will help you understand how your model reacts to keywords you may not normally use.

Breaking dialect down further

Person looks at image > matches words with concepts based on their lingo and biases > Stable Diffusion uses that data to make an image. So “getting in the head” of the creator gives you insight into how they made the checkpoint and lets you prompt the concepts you want more accurately. Reading the description and looking at examples of the checkpoint creator’s generated art is a good way to understand how they think. If you still find yourself struggling to prompt the things you want, it may be a cultural disconnect. Try to understand the culture and community that person is a part of, read posts and get in touch with the lingo, or move to a model that better matches your understanding of word-concepts.

In this example the model that I am using (Rev animated) does not have a lot of magic training data, so I looked for a LoRA that had the style of magic I was looking for. For your own image, try to add things that visually push it closer to your “ideal image”. You can add celebrities at different weights to get different faces, add a LoRA to get concepts that your checkpoint doesn’t have data on, use OpenPose to get the pose you want, or any number of other things. Go wild, but remember that if your model does not have the data to make what you want, you will need to get that data from somewhere.

Reductive exploration

Another way to test your base model is to make a prompt that generates a stable image. Then, on a set seed, remove one keyword, generate, then remove another and generate again. Look at what changes in the image. This will give you an idea of what each keyword really adds, or whether it adds anything at all.
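The removal step can be scripted so that no keyword gets skipped. The sketch below is one interpretation: it drops exactly one keyword at a time from the full prompt (removing keywords cumulatively would be a different variant). The helper name `reductive_variants` and the seed value are assumptions for illustration. The script only builds the prompt strings, and you would feed each one to your generator with the same seed.

```python
def reductive_variants(keywords):
    """Yield (removed_keyword, prompt) pairs, each prompt missing exactly one keyword."""
    for i, removed in enumerate(keywords):
        remaining = keywords[:i] + keywords[i + 1:]
        yield removed, ", ".join(remaining)


base = ["1girl wearing witch costume", "long black dress", "black background"]
FIXED_SEED = 12345  # arbitrary example value; reuse the same seed for every variant

for removed, prompt in reductive_variants(base):
    print(f"seed={FIXED_SEED} | without '{removed}': {prompt}")
```

Comparing each output against the image from the full prompt shows what the removed keyword was contributing.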

Additive exploration

ANOTHER way to test your base model is to start with something you know works. Then add keywords one at a time to see how each affects the image produced. With this method, keeping the prompt size LOW will help you understand each keyword better. More keywords mixed together can muddy the results and cause you to make false assumptions. What added the bread? Was it the "food" keyword or the "baker" keyword?
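To avoid the "food or baker?" problem, each candidate keyword can be tested on its own against the same known-good base. A minimal sketch, assuming a hypothetical helper named `additive_variants` that only builds the prompt strings:

```python
def additive_variants(base_prompt, candidates):
    """Return prompts that each add exactly one candidate keyword to a known-good base."""
    return [f"{base_prompt}, {kw}" for kw in candidates]


known_good = "1girl wearing witch costume"
for prompt in additive_variants(known_good, ["food", "baker"]):
    print(prompt)
```

Because each prompt differs from the base by a single keyword, any bread that shows up can be traced to that keyword.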

Weight exploration

A third way to test your base model is to play with the weight of each keyword. Weights can be unpredictable in what they actually do: adding weight to a keyword does not always do what you think it will. In my experience, weight controls one or more of these factors:

Size of concept

Amount of concept

Details in concept

Let's use a coin as an example. Without testing, we won't know whether prompting "(Coin:1.5)" will make a bunch of coins, make a big coin, or add detail to a single coin. We can guess based on the model we are using. For a fantasy model, "coin" may equate to a treasure room (though "coins" is the more likely keyword for that), so it may produce an image with a sea of money (channel your inner Scrooge McDuck). For a more realistic model, "coin" may indicate a transaction, so it may produce an image of someone buying something at a store.
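Since the effect of a weight has to be found by testing, it helps to sweep one keyword through a few weights while everything else stays fixed. The sketch below builds those prompts using the same (keyword:weight) notation as the coin example. The helper names, the "treasure room" base keyword, and the chosen weights are assumptions for illustration, and whether your UI reads this notation depends on the tool you use.

```python
def weighted(keyword, weight):
    """Format a keyword in the (keyword:weight) notation used in the coin example."""
    return f"({keyword}:{weight:.1f})"


def weight_sweep(base_keywords, keyword, weights):
    """Build one prompt per weight, changing only the weight of a single keyword."""
    return [", ".join(base_keywords + [weighted(keyword, w)]) for w in weights]


for prompt in weight_sweep(["treasure room"], "coin", [0.5, 1.0, 1.5]):
    print(prompt)
```

Generating each prompt on the same seed makes it easier to tell whether the weight changed the size, the amount, or the detail of the concept.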

Contextual prompting

A keyword may produce wildly different images depending on the other keywords in the prompt. This is relatively simple and you may not even notice it, but it is an important thing to keep in mind if you want to prompt at maximum efficiency. For example, the keyword "car" can give very different results depending on which of the following keywords it is paired with:

Sports - may give you a sports car, but add things like "ball" or "player" and you may end up with a car stuck on a sports field

Highway - "car, highway" alone will likely produce a modern car, as old cars like the Model T are rare to see at all, much less on a highway

Blockbuster - was sold in 2011, so if you simply prompt "Blockbuster, car" you are unlikely to generate a car newer than 2011

Of course, the above examples assume it's a car-specific model with a massive amount of car training data. These examples would be invalid if, for example, the training data has only a specific selection of cars.

Note: This is by far the least impactful and hardest way to think about images. Trying to take all factors into account is impossible, and the base model creator's biases come into play a lot here. I don't recommend relying on these tips alone. Treat them more as something to consider when planning your prompts.

Things of note:

If your image becomes corrupted, you can always get the generation data from a previous image using PNG Info and retrace your steps. Once you get good at matching keywords to images, you will be able to figure out which prompts are causing the corruption. Part of this is just playing around with your base model: making small changes and seeing the result.
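If you want to pull the generation data out of saved images yourself, something along these lines could work. This is a rough sketch under two assumptions: that your UI saved the data as a plain tEXt chunk inside the PNG, and that it used the keyword "parameters" (check your own files, since other tools may store it differently or not at all). It uses only the Python standard library, does not verify checksums, and ignores compressed or international text chunks. The demo image and its text are made up in memory so the example runs without a real file.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data):
    """Return a dict of keyword -> text from the uncompressed tEXt chunks of a PNG."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    texts = {}
    pos = 8
    while pos + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            keyword, _, text = body.partition(b"\x00")
            texts[keyword.decode("latin-1")] = text.decode("latin-1")
        if chunk_type == b"IEND":
            break
        pos += 12 + length  # 4 length bytes + 4 type bytes + body + 4 CRC bytes
    return texts


def _chunk(chunk_type, body):
    """Build one PNG chunk (used only to make the demo image below)."""
    crc = zlib.crc32(chunk_type + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + chunk_type + body + struct.pack(">I", crc)


# A made-up 1x1 grayscale PNG carrying example generation data.
demo_text = "1girl wearing witch costume, long black dress"
demo_png = (
    PNG_SIGNATURE
    + _chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
    + _chunk(b"tEXt", b"parameters\x00" + demo_text.encode("latin-1"))
    + _chunk(b"IDAT", zlib.compress(b"\x00\x00"))
    + _chunk(b"IEND", b"")
)

print(read_png_text_chunks(demo_png).get("parameters"))
```

For a real image you would read the file's bytes in binary mode and pass them to `read_png_text_chunks` instead of `demo_png`.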

A good starting point for beginners is to copy prompts from images made using wildly different models and look at the difference between the image you got the prompt from and the one your checkpoint created.

If a word is not in the training data for the checkpoint, the checkpoint may ignore part or all of the prompt. For example, say the prompt is <red dress> and the checkpoint does not have "red dress" in its training data:

Outcome 1 - dress is in the training data so it only generates a dress

Outcome 2 - red is in the training data but not dress so it makes other things red

Outcome 3 - neither red nor dress is in the training data so it ignores the prompt

This can get complex when you use descriptors (e.g. torn, fluffy, velvet, regal), as the outcome can be heavily influenced by biases and by the subjective nature of describing images using words.

A resource you are using may be inherently unstable: a prompt may correlate to many subjectively different images, so two images with the same parameters but different seeds can be wildly different. The stability of the image can be thought of as how close the image, concept & prompt are to each other. When all three are working together you get very stable generation. Instability tends to show up for things that:

1 are hard to describe (aliens/monsters)

2 we don't have the words to describe (How do you describe the visual difference between a grasshopper and a bee?)

3 have a prompt with large variation in images (a mustang is a car but it is also a horse)

Why this is important: let's say you want to make a car that looks like a bee. You can take a direct approach by just prompting <car, bee>, but this may not create what you are looking for. Breaking it down and taking an indirect approach would be better, but if the checkpoint does not break the bee & car down into small enough concepts to put together, and there is no LoRA for it, then you are out of luck.

I would want to break it down into its smallest parts (wheels, black and yellow stripes, windshield, mustang car, etc.), but the resources I am using may not get specific enough to be able to put these parts together...