Project page: https://sliders.baulab.info/

GitHub repo: https://github.com/rohitgandikota/sliders

Issue thread requesting Automatic1111 support: https://github.com/rohitgandikota/sliders/issues/7

SDXL weights: https://sliders.baulab.info/weights/xl_sliders/

How to allow precise control of concepts in diffusion models?

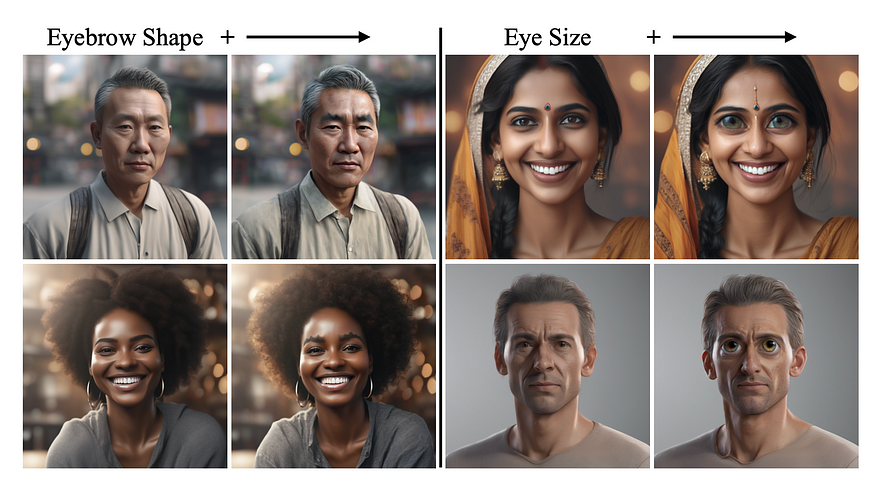

Artists spend significant time crafting prompts and finding seeds to generate a desired image with text-to-image models. However, they often need more nuanced, fine-grained control over attribute strengths, such as eye size or lighting, in their generated images. Modifying the prompt to achieve this tends to disrupt the overall structure of the image. Artists require expressive control that preserves coherence.

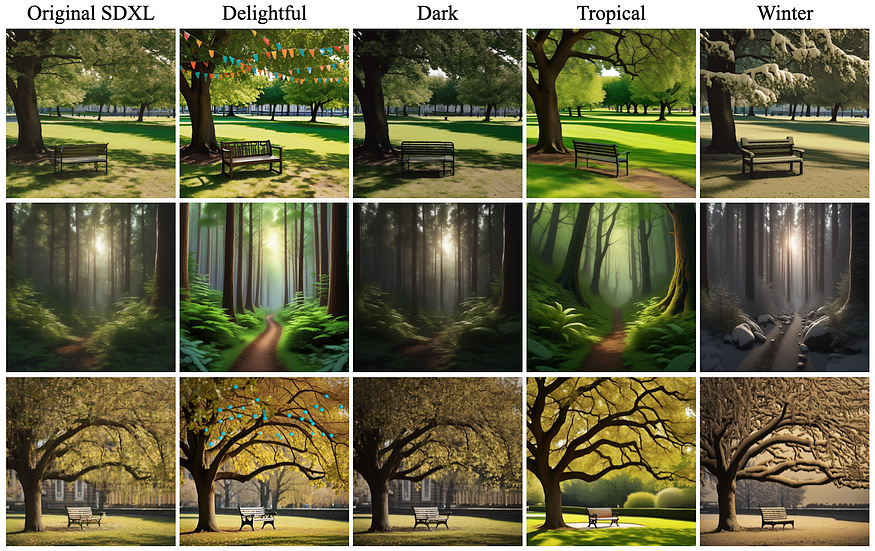

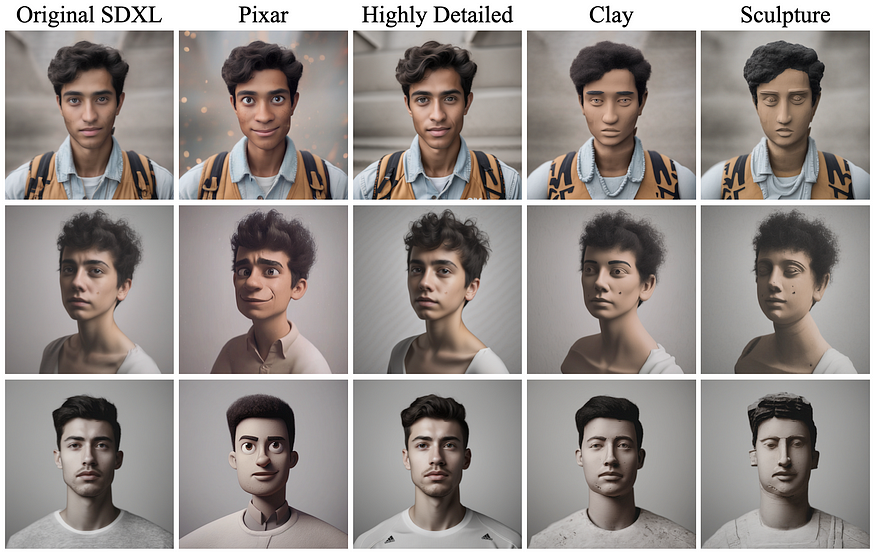

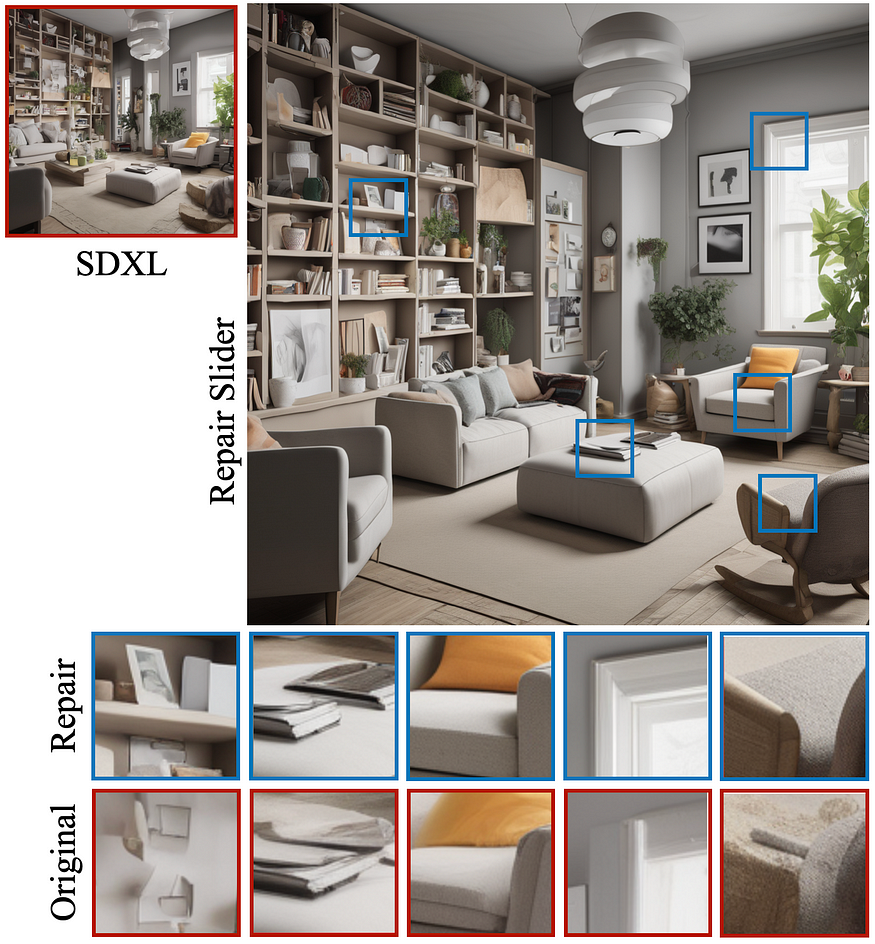

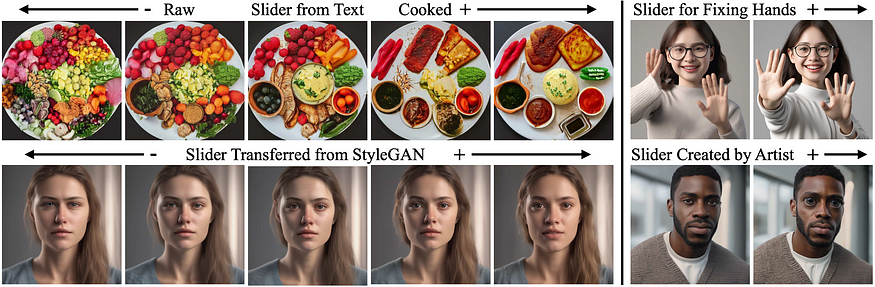

To enable precise editing without changing structure, we present Concept Sliders: plug-and-play low-rank adaptors (LoRA) applied on top of pretrained models. Using simple text descriptions or a small set of paired images, we train each concept slider to represent the direction of a desired attribute. At generation time, these sliders control the strength of the concept in the image, enabling nuanced adjustments.
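To make the "low-rank adaptor applied on top of a pretrained model" idea concrete, here is a minimal sketch in plain Python of how a scaled low-rank update can sit on top of a frozen linear layer. It assumes a standard LoRA-style parameterization, y = Wx + scale · (alpha / rank) · B(Ax); the class name `SliderLinear`, the `scale` argument, and the toy weights are hypothetical illustrations, not code from the sliders repository. Setting the scale to 0 recovers the pretrained layer exactly, and flipping its sign pushes the output in the opposite direction, which is the intuition behind using one adaptor as a bidirectional slider.

```python
from typing import List

Matrix = List[List[float]]  # list of rows
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class SliderLinear:
    """Hypothetical sketch: a frozen linear layer W plus a low-rank update B @ A.

    The slider value scales only the low-rank delta at inference time:
        y = W x + scale * (alpha / rank) * B (A x)
    so scale = 0 reproduces the pretrained layer.
    """

    def __init__(self, W: Matrix, A: Matrix, B: Matrix, alpha: float = 1.0):
        self.W = W            # frozen pretrained weights, shape (out, in)
        self.A = A            # trained down-projection, shape (rank, in)
        self.B = B            # trained up-projection, shape (out, rank)
        self.rank = len(A)    # rank of the update = number of rows in A
        self.alpha = alpha    # LoRA-style scaling constant (assumed)

    def __call__(self, x: Vector, scale: float = 0.0) -> Vector:
        base = matvec(self.W, x)                    # pretrained output
        delta = matvec(self.B, matvec(self.A, x))   # low-rank correction
        factor = scale * self.alpha / self.rank     # slider strength
        return [b + factor * d for b, d in zip(base, delta)]


# Toy usage: a 2x2 identity layer with a rank-1 update.
layer = SliderLinear(
    W=[[1.0, 0.0], [0.0, 1.0]],
    A=[[1.0, 1.0]],
    B=[[0.5], [-0.5]],
)
x = [2.0, 4.0]
for s in (-2.0, 0.0, 2.0):
    print(s, layer(x, scale=s))
# -2.0 [-4.0, 10.0]
# 0.0 [2.0, 4.0]
# 2.0 [8.0, -2.0]
```

In a real diffusion model the same kind of update would be attached to many layers of the denoising network, and A and B would come from the slider training described below; this toy version only shows why a single scalar can dial an effect up, down, or off while leaving the pretrained weights untouched.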

Why allow concept control in diffusion models?

The ability to precisely modulate semantic concepts during image generation and editing opens new creative possibilities for artists using text-to-image diffusion models. Recent discussions within artistic communities suggest that limited concept control hinders creators’ ability to fully realize their vision with these generative tools. Artists have also noted that these models sometimes generate blurry or distorted images.

Modifying prompts tends to drastically alter image structure, which makes fine-grained tweaks to match artistic preferences difficult. For example, an artist may spend hours crafting a prompt to generate a compelling scene, yet lack the ability to subtly adjust finer attributes such as a subject’s precise age or the ambience of a storm. More intuitive, fine-grained control over textual and visual attributes would let artists refine their generations in nuanced ways. In contrast to prompt editing, our Concept Sliders enable nuanced, continuous editing of visual attributes by identifying interpretable latent directions tied to specific concepts. By simply adjusting a slider, artists gain finer-grained control over the generative process and can better shape outputs to match their artistic intentions.

How to control concepts in a model?

We propose two types of training: using text prompts alone and using image pairs. For concepts that are hard to describe in text, or that the model does not understand, we propose image-pair training. We first discuss training for Textual Concept Sliders.

Textual Concept Sliders

The idea is simple but powerful: the pretrained model Pθ*(x) has a pre-existing probability distribution for generating a concept t, so our goal is to learn low-rank updates to the model's layers, thereby forming a new model Pθ(x) that reshapes this distribution by reducing the probability of attribute c- and boosting the probability of attribute c+ in an image conditioned on t, where the attribute probabilities are measured by the original pretrained model: