Demo Video here:

Some previews and instructions below:

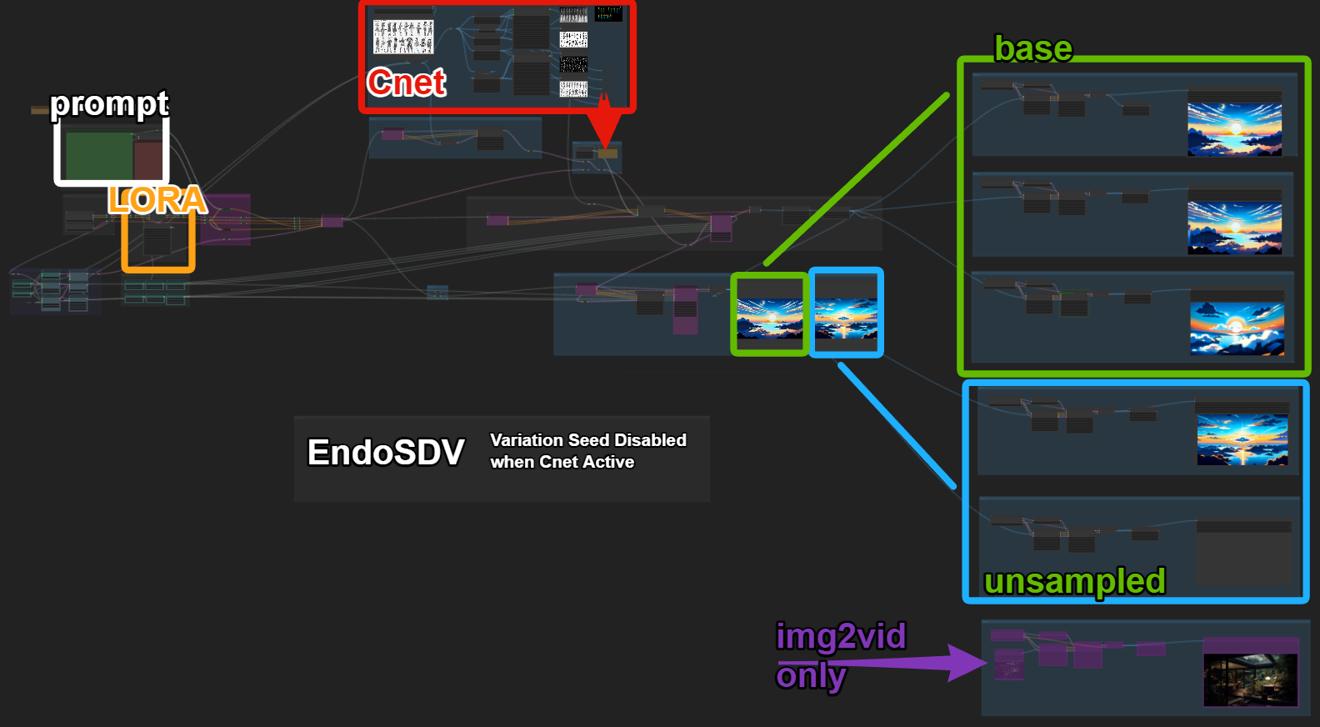

Back at it again with SVD (Stable Video Diffusion) in ComfyUI

Required Models:

Stable Diffusion SDXL Base

Stable Video Diffusion (SVD)

1024x576, 12fps / 24fps

You will need:

ComfyUI

(latest version, tested with torch-2.0.1+cu118-cp310-cp310-win_amd64.whl)

All the custom nodes used in the workflow

(use the Manager to find and update everything you need)

In the first part, you can use Text to Image as normal to generate from any LoRA, or use ControlNets (Cnet). I provided a switch to turn Cnet on/off, but you do need to move one node connection at the switch by hand.

Unsampler Cnet loopback:

When Cnet is on, the Unsampler loop can help realign your generations.

From there, six videos are generated with various step counts and seeds.
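The post doesn't list the exact step counts or seeds the workflow uses, so here is a hypothetical sketch of one way to get six variations: pair three step counts with two seeds. The function name `video_jobs` and the values 15/20/25 and 1234 are illustrative assumptions, not settings taken from the workflow.

```python
from itertools import product


def video_jobs(base_seed: int, step_counts: list[int], seeds_per_step: int) -> list[dict]:
    """Build one job per (steps, seed) pair. Hypothetical values, for illustration only."""
    # Consecutive seeds starting from the base seed.
    seeds = [base_seed + i for i in range(seeds_per_step)]
    # Cartesian product: every step count is paired with every seed.
    return [{"steps": steps, "seed": seed} for steps, seed in product(step_counts, seeds)]


# 3 step counts x 2 seeds = 6 video jobs
jobs = video_jobs(base_seed=1234, step_counts=[15, 20, 25], seeds_per_step=2)
for job in jobs:
    print(job)
```

Varying steps and seeds together like this lets you compare how much each one changes the motion before committing to a longer render.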

I use OpenPose 2.0 + Control-LoRA Sketch, Depth, and Canny, but you can use whatever you like :)

All your source images are saved to the output folder for later use, along with any generated Cnet maps. I'm using .mov with ProRes for the video output and .webp for previews.
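Inside the workflow, the video combine node handles the .mov/ProRes encoding. If you want to re-encode saved frames outside ComfyUI, here is a minimal sketch of an equivalent ffmpeg command. It assumes ffmpeg is installed and the frames were saved as a numbered PNG sequence. The file names and the choice of profile 3 (ProRes 422 HQ in ffmpeg's `prores_ks` encoder) are assumptions, not settings from the workflow.

```python
import shlex


def prores_command(frame_pattern: str, fps: int, output: str, profile: int = 3) -> list[str]:
    """Build (but do not run) an ffmpeg command that encodes frames to ProRes in a .mov."""
    return [
        "ffmpeg",
        "-framerate", str(fps),      # input frame rate, e.g. 12 or 24
        "-i", frame_pattern,         # printf-style pattern, e.g. frames_%05d.png (assumed naming)
        "-c:v", "prores_ks",         # ffmpeg's ProRes encoder
        "-profile:v", str(profile),  # 3 = ProRes 422 HQ for prores_ks
        "-pix_fmt", "yuv422p10le",   # 10-bit 4:2:2, as ProRes 422 expects
        output,
    ]


cmd = prores_command("frames_%05d.png", fps=12, output="svd_clip.mov")
print(shlex.join(cmd))
```

To actually encode, pass `cmd` to `subprocess.run(cmd, check=True)`. Building the argument list first keeps it easy to inspect and avoids shell-quoting problems.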

There is also an Image to Video line, set to bypass by default, for convenience.

The prompt field is also a multiline prompt that uses the seed to select which line is used.
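The post doesn't say exactly how the custom node maps a seed to a line. A common approach is to take the seed modulo the number of lines, so every seed lands on a valid line and consecutive seeds cycle through the prompts. Below is a minimal sketch of that assumed scheme. `select_prompt_line` and the example prompts are hypothetical, not the node's actual code.

```python
def select_prompt_line(multiline_prompt: str, seed: int) -> str:
    """Pick one prompt line from the seed (assumed modulo scheme, hypothetical helper)."""
    # Drop blank lines so empty rows in the prompt box are never selected.
    lines = [line.strip() for line in multiline_prompt.splitlines() if line.strip()]
    if not lines:
        raise ValueError("prompt contains no non-empty lines")
    # Wrap the seed around the line count so any seed maps to a valid index.
    return lines[seed % len(lines)]


prompts = """a 90s photo of a skateboarder
a 90s photo of a mall food court
a 90s photo of an arcade"""

for seed in range(4):
    print(seed, select_prompt_line(prompts, seed))
```

With three lines, seeds 0, 1, and 2 pick each prompt in turn and seed 3 wraps back to the first. This is why incrementing the seed between runs steps through the prompt list.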

All demo .webp previews use my 90s SDXL LoRA; you can use any SDXL LoRA you like.

Enjoy!