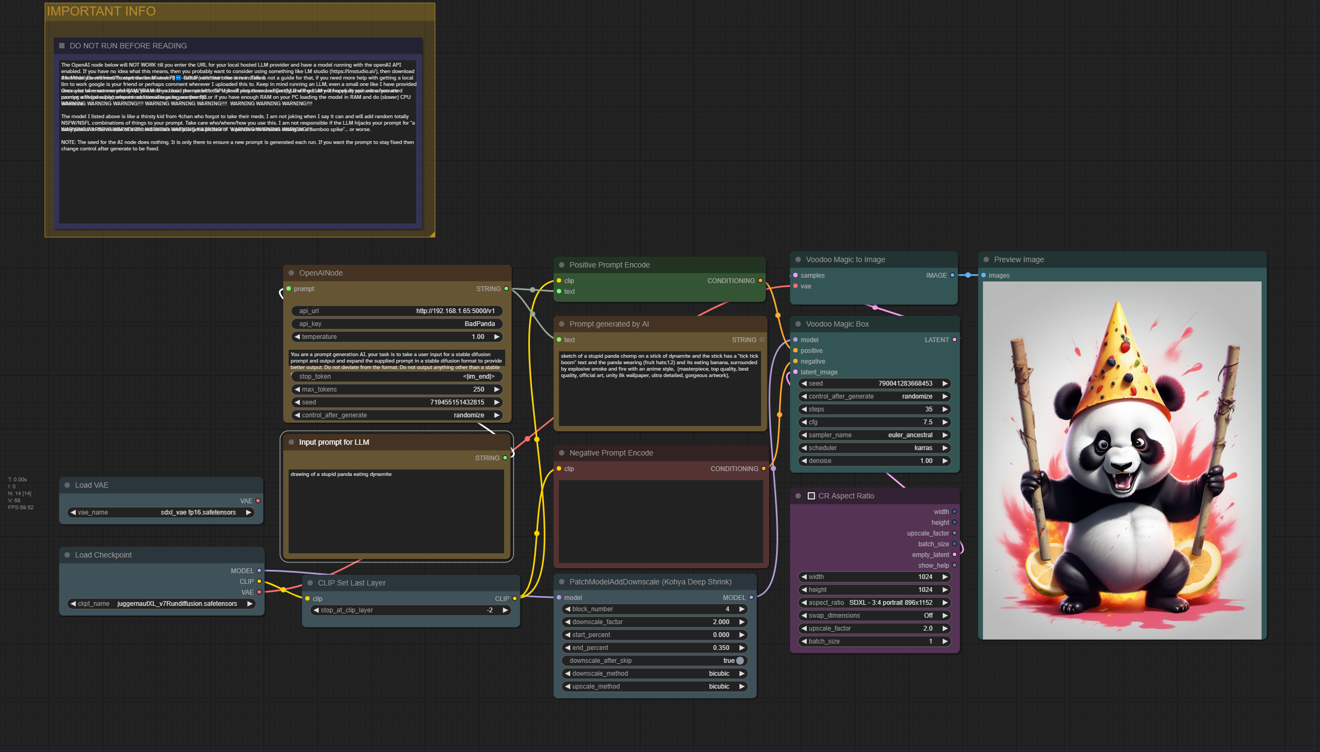

Guide to Using OpenAInode with ComfyUI

This guide walks you through setting up and using the OpenAInode to generate prompts with the Promptmaster-Mistral-7B model, giving you an unlimited supply of fun prompts from the most basic of inputs!

WARNING WARNING WARNING!!!!

The model I list in this guide is like a thirsty kid from 4chan who forgot to take their meds. I am not joking when I say it can and will add random totally NSFW/NSFL combinations of things to your prompt. Take care who/where/how you use this. I am not responsible if the LLM hijacks your prompt for "a baby panda" in the middle of a discord stream and you get a picture of "a panda with breasts sitting on a bamboo spike"... or worse.

Now, with that out of the way, on to the guide.

Prerequisites

Before we begin, make sure you have the following prerequisites in place:

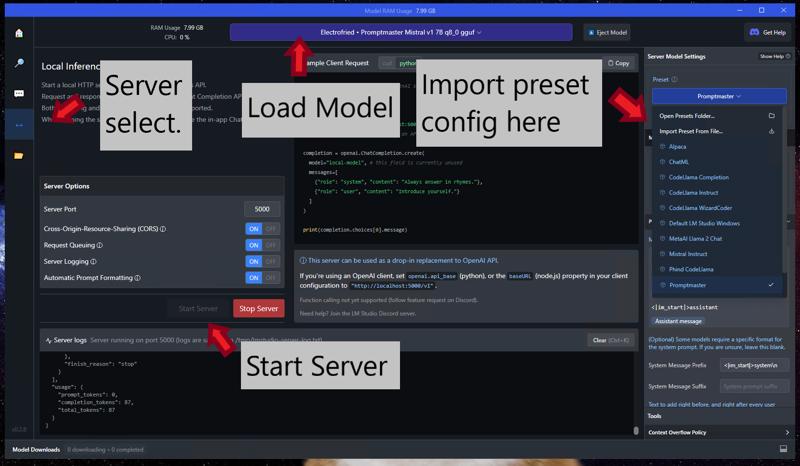

Local LLM Provider: Set up a locally hosted LLM provider. If you're new to this, consider using LM Studio. Download and install the "Electrofried/Promptmaster-Mistral-7B-v1-GGUF" model from within LM Studio, as shown in the screenshot above.

Start Local Server: Attached to this guide is a Promptmaster preset for LM Studio. Without it, the model listed above will not output properly in LM Studio. Once the model is installed, start the local server by clicking the "↔️" button, then select the preset and the model listed above. Note that running an LLM consumes resources, especially RAM/VRAM, so be mindful of the hardware requirements if you run it on the GPU. I highly recommend using LM Studio in CPU-only mode if it runs on the same machine as Stable Diffusion, unless you have a GPU with 32+ GB of VRAM (or multiple GPUs).
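Before moving on, it can help to confirm the server is actually listening. The sketch below is only an illustration: it assumes LM Studio's OpenAI-compatible server is at `http://localhost:1234/v1` and that it answers on a `/models` route. Neither detail comes from this guide, so adjust them to whatever your server screen shows. The function names are made up for the example.

```python
import json
import urllib.request

# Assumed address of the local server; change it if your setup differs.
DEFAULT_BASE_URL = "http://localhost:1234/v1"


def models_url(base_url: str = DEFAULT_BASE_URL) -> str:
    """Build the URL of the OpenAI-style model listing route."""
    return base_url.rstrip("/") + "/models"


def list_models(base_url: str = DEFAULT_BASE_URL, timeout: float = 5.0) -> list:
    """Return the model ids the server reports, or [] if it cannot be reached."""
    try:
        with urllib.request.urlopen(models_url(base_url), timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):
        # OSError covers refused connections and timeouts; ValueError covers bad JSON.
        return []
    return [entry["id"] for entry in payload.get("data", [])]
```

Calling `list_models()` with the server running should return a list that includes the Promptmaster model. An empty list usually means the server has not been started or is on a different port.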

Installation of OpenAInode

Now, let's integrate the OpenAInode into your ComfyUI environment.

Clone the Repository: Visit the OpenAInode GitHub repository at https://github.com/Electrofried/ComfyUI-OpenAINode and clone it into your custom_nodes directory. Use the following command:

    git clone https://github.com/Electrofried/ComfyUI-OpenAINode

Install Dependencies: Navigate to the cloned directory and run the following commands to install the required dependencies:

    cd ComfyUI-OpenAINode
    pip install -r requirements.txt
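If the node does not show up in ComfyUI afterwards, the most common cause is cloning into the wrong folder. A quick sanity check, sketched under the assumption that the clone kept git's default folder name (`ComfyUI-OpenAINode`), might look like this. The function name and the example path are hypothetical.

```python
from pathlib import Path


def node_is_installed(comfyui_root: str) -> bool:
    """Return True if the cloned node folder sits under custom_nodes
    and contains its requirements.txt."""
    # Assumes the default folder name created by `git clone`.
    node_dir = Path(comfyui_root) / "custom_nodes" / "ComfyUI-OpenAINode"
    return (node_dir / "requirements.txt").is_file()
```

For example, `node_is_installed("/path/to/ComfyUI")` returns `False` if the repository was cloned somewhere other than `custom_nodes`.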

Configuring OpenAInode

Now, let's configure the OpenAInode with the necessary settings.

Enter Local LLM URL: Open the OpenAInode configuration file and enter the URL for your locally hosted LLM provider. This ensures that the OpenAInode talks to your specific model. If you are running LM Studio locally, this should already be populated with the correct address and port, unless you changed something in LM Studio or are running a different back end.
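To make the role of that URL concrete, here is a rough sketch of what a request to an OpenAI-compatible server looks like. It is not the node's actual code. The base URL (`http://localhost:1234/v1`), the `/chat/completions` route, the `local-model` placeholder and the temperature value are all assumptions for illustration, and the node itself may use a different route or parameters.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed local server address


def build_chat_request(user_prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": "local-model",  # placeholder; use the id your server reports
        "messages": [{"role": "user", "content": user_prompt}],
        "temperature": 0.7,  # illustrative value only
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def expand_prompt(user_prompt: str, base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """Send the request and return the text of the first reply."""
    request = build_chat_request(user_prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"].strip()
```

If `expand_prompt("A photo of a Panda wearing a tuxedo.")` returns text when run by hand, the URL is good and any remaining problem is on the ComfyUI side.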

Workflow: Load the provided workflow.

Using OpenAInode

With everything set up, you can now utilize the OpenAInode to generate prompts.

Prompt Configuration: In your ComfyUI interface, enter a basic prompt with the style of the picture and subject(s). The OpenAInode will process this prompt to generate relevant output. You will need to provide your own negative prompt.

Seed (Optional): Note that the seed on the AI node has no effect on the content of the output. It's only there to ensure a new prompt is generated each time. If you want the prompt to stay the same between runs, set the node's seed control to "fixed."
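Why would a seed that does nothing still force a new prompt? ComfyUI skips nodes whose inputs have not changed, so a changing seed is enough to make the node run again. The toy model below illustrates the idea. It is a simplified sketch of input-based caching, not ComfyUI's real execution code, and `CachedNode` and `fake_llm` are invented names.

```python
from typing import Callable, Dict, List, Tuple


class CachedNode:
    """Toy node that re-runs its function only when its inputs change."""

    def __init__(self, func: Callable[[str], str]) -> None:
        self.func = func
        self.cache: Dict[Tuple[str, int], str] = {}
        self.runs = 0

    def execute(self, prompt: str, seed: int) -> str:
        key = (prompt, seed)
        if key not in self.cache:
            self.runs += 1
            # The seed is deliberately not passed to func: it only changes the cache key.
            self.cache[key] = self.func(prompt)
        return self.cache[key]


calls: List[str] = []


def fake_llm(prompt: str) -> str:
    """Stand-in for the LLM: returns a different string on every call."""
    calls.append(prompt)
    return f"{prompt} (variation {len(calls)})"


node = CachedNode(fake_llm)
first = node.execute("a baby panda", seed=1)
same = node.execute("a baby panda", seed=1)   # same inputs, cached result reused
fresh = node.execute("a baby panda", seed=2)  # new seed, function runs again
```

With a fixed seed the inputs never change, so the cached prompt is reused. With a changing seed every run counts as new input, even though the stand-in LLM never sees the number.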

Generate Prompt: Click the appropriate button to generate the prompt. The OpenAInode will interact with your local LLM, and you should receive a formatted prompt with possibly relevant additional tags. (Last warning: please read the warning at the top of this post. It was not a joke.)

Tips

Prompts should be entered as a basic one-line sentence. There is no need to try to format them.

Include a style, a subject and any details you want. For example: "A photo of a Panda wearing a tuxedo."

If you have a second PC in the house with a GPU, you can run LM Studio or any other LLM back end on it and change the URL to that computer's IP address. This can speed up prompt generation a lot and reduce the load on your main machine. If you do this with LM Studio, make sure to enable CORS in the server settings so it accepts remote requests.
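For the second-PC tip, the only thing that changes is the host part of the URL. A small helper like the one below swaps it while keeping the port and path. The function name and the address `192.168.1.50` are hypothetical, so use whatever IP your other machine actually has.

```python
from urllib.parse import urlsplit, urlunsplit


def with_host(url: str, host: str) -> str:
    """Return url with its host replaced, keeping scheme, port and path."""
    parts = urlsplit(url)
    port = f":{parts.port}" if parts.port else ""
    return urlunsplit(parts._replace(netloc=f"{host}{port}"))


# Hypothetical LAN address of the second PC.
remote_url = with_host("http://localhost:1234/v1", "192.168.1.50")
```

Here `remote_url` is `http://192.168.1.50:1234/v1`, which is the value you would enter in place of the local one.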

That's it! You're now equipped to use the OpenAInode with ComfyUI. If you encounter any issues or have questions, refer to the provided GitHub repository or seek help from the community.

Go Team Yellow!